Democratizing Generative AI

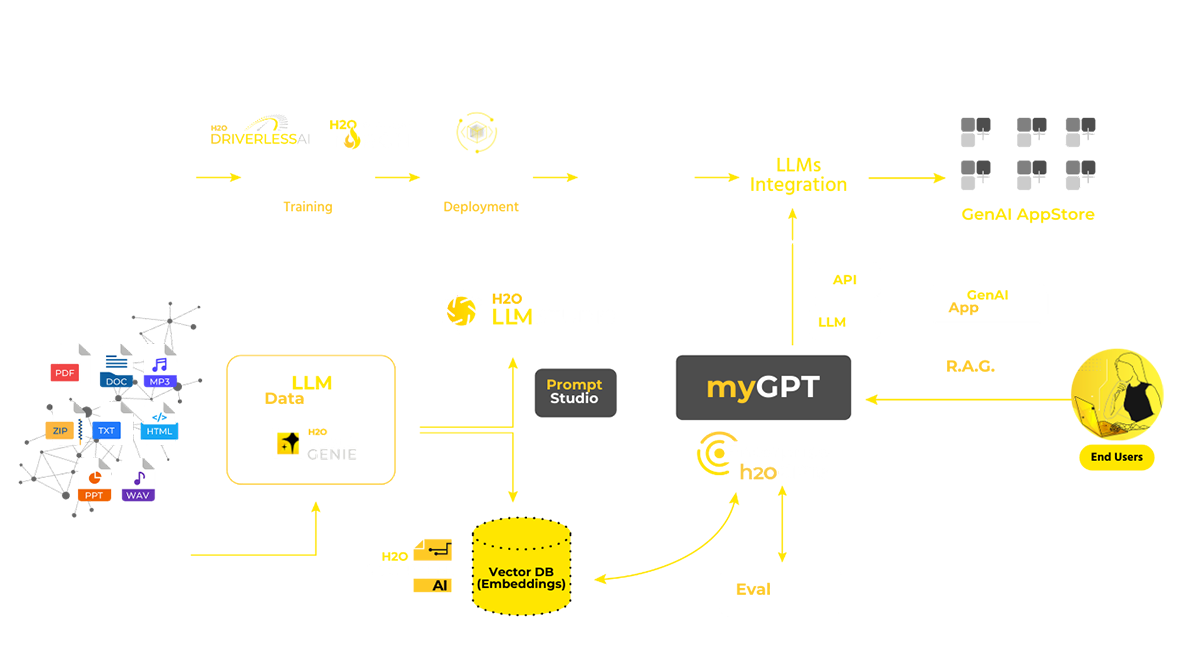

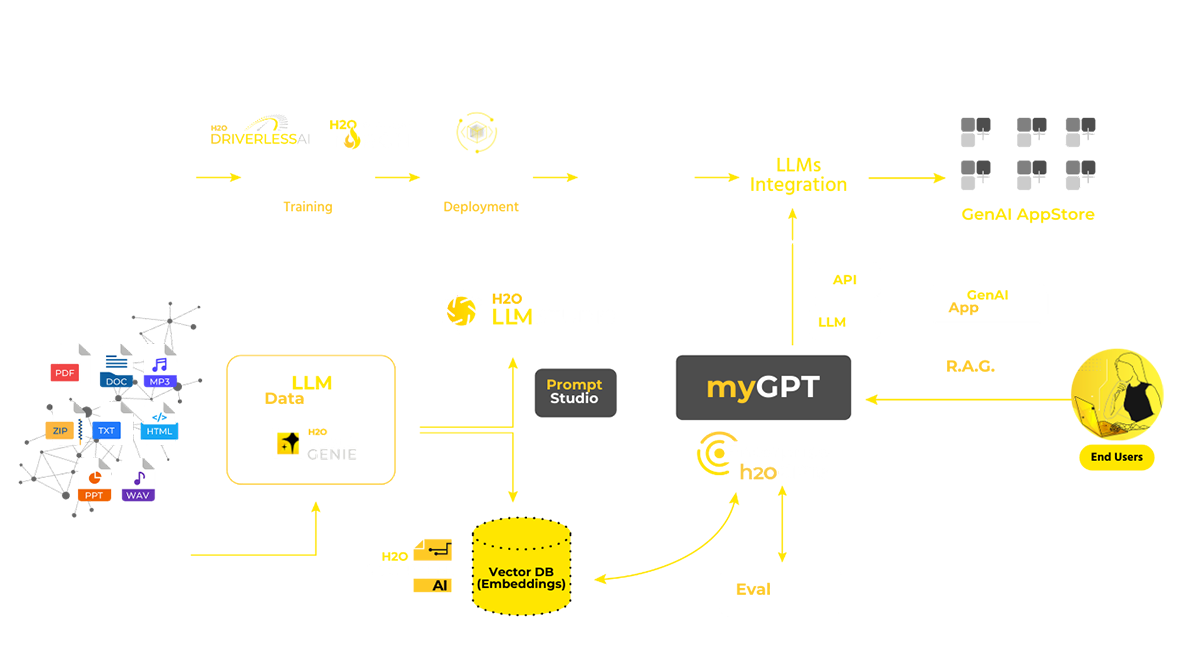

Own your models: generative and predictive. We bring both superpowers together with h2oGPT.

Enterprise Generative AI

Enterprise h2oGPTe provides information retrieval on internal data, privately hosts LLMs, and secures data so it stays with you.

Get answers from documents and data (web pages, audio, images and video)

Retrieval-augmented generation (RAG) that combines a VectorDB, embeddings, and an LLM to understand your data and generate answers from it.

Built with multi-year expertise in Document AI, ensuring robust and reliable performance.

Get fact-based answers (Grounding), data extraction (document to JSON), summarization, and other batch processing tasks, as well as code/SQL generation for data analysis.
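As a rough illustration of how the RAG pieces mentioned above (embeddings, a vector store, and a grounded prompt for an LLM) fit together, here is a minimal, self-contained sketch. It is not how h2oGPTe is implemented: `embed`, `ToyVectorStore`, and `build_prompt` are hypothetical names, the bag-of-words "embedding" is a stand-in for a real embedding model, and the final LLM call is left out.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: bag-of-words term counts (assumption: stands in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Hypothetical in-memory vector store: keeps (text, vector) pairs."""

    def __init__(self):
        self.items = []

    def add(self, text):
        self.items.append((text, embed(text)))

    def search(self, query, k=2):
        """Return the k passages most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question, passages):
    """Ground the question in the retrieved passages before it is sent to an LLM."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the context below and cite the passage number.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


store = ToyVectorStore()
store.add("Invoices are due within 30 days of receipt.")
store.add("The office is closed on public holidays.")
store.add("Late invoices incur a 2 percent fee.")

question = "When are invoices due?"
top = store.search(question, k=1)
prompt = build_prompt(question, top)
print(prompt)
```

In a real deployment the toy pieces would be replaced by an embedding model, a vector database, and an LLM endpoint. The flow stays the same: embed, retrieve, then generate from the retrieved context.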

Safety, controls and transparency

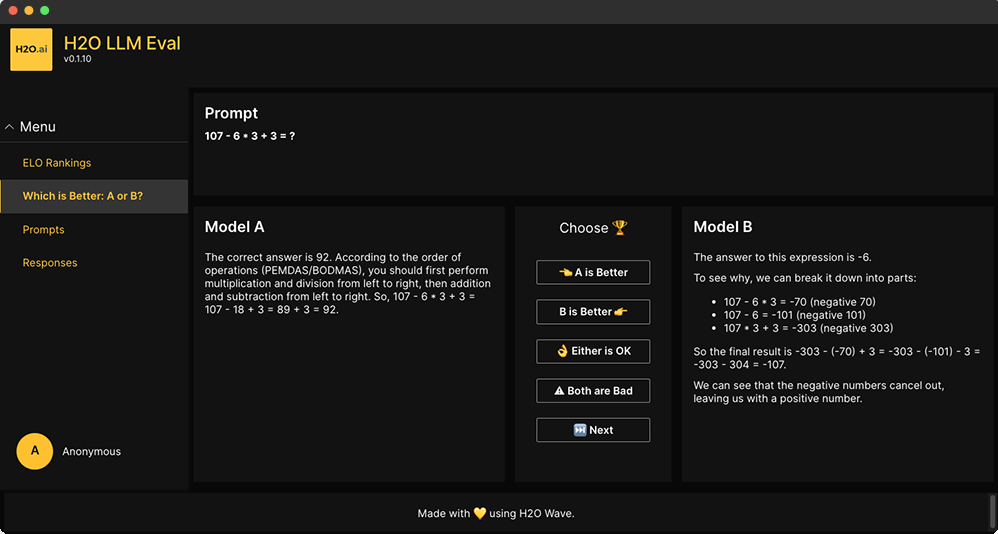

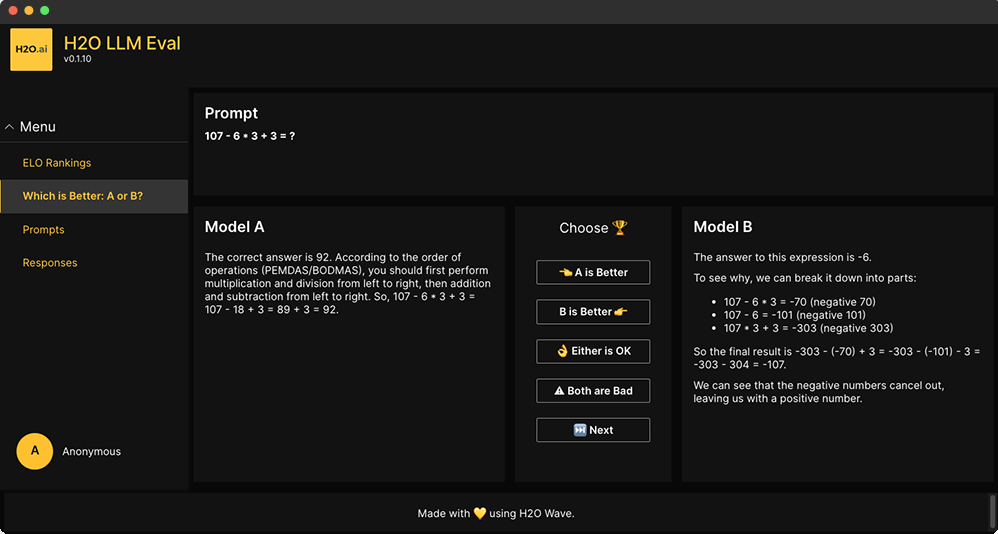

EvalGPT.ai provides a customizable, model-agnostic evaluation and validation framework, so you can assess different AI models without being tied to any one of them.

Guardrails and response validations to detect and remove personally identifiable information (PII) or sensitive data, ensuring safety and privacy.

Decision Rules to provide AI governance over any model output.
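To make the guardrail idea above concrete, the sketch below shows one simple way a response validation step could detect and mask PII before a model response is returned. It is an illustration only, not the product's guardrail implementation: the `PII_PATTERNS` table and `redact_pii` function are hypothetical, and the regular expressions cover just a few US-style formats. Production guardrails typically rely on more robust detectors.

```python
import re

# Hypothetical patterns for illustration; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact_pii(text):
    """Replace each detected PII span with its label and report which labels fired."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(text):
            found.append(label)
            text = pattern.sub(f"[{label}]", text)
    return text, found


clean, labels = redact_pii("Contact jane.doe@example.com or 555-123-4567.")
print(clean)
print(labels)
```

Returning the list of labels alongside the cleaned text lets a caller log or block a response instead of only masking it.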

Complete control over deployment and flow of information

Air-gapped, on-premises, your hardware, your software, your data. No dependence on third-party services for on-premises deployments.

SSO support; SOC 2 Type 2 and HIPAA compliant

End-to-end GenAI platform created by the makers of our award-winning AutoML and no-code deep learning engines.

Hosted in your private cloud or on premises. Supports cloud VPCs in AWS, Azure, and GCP. Single-tenant: no hardware is shared with other customers in the cloud.

Make your own GPTs and custom applications with H2O LLM Studio Suite

Client APIs for Python and JavaScript. Customize the LLM and VectorDB for your use cases.

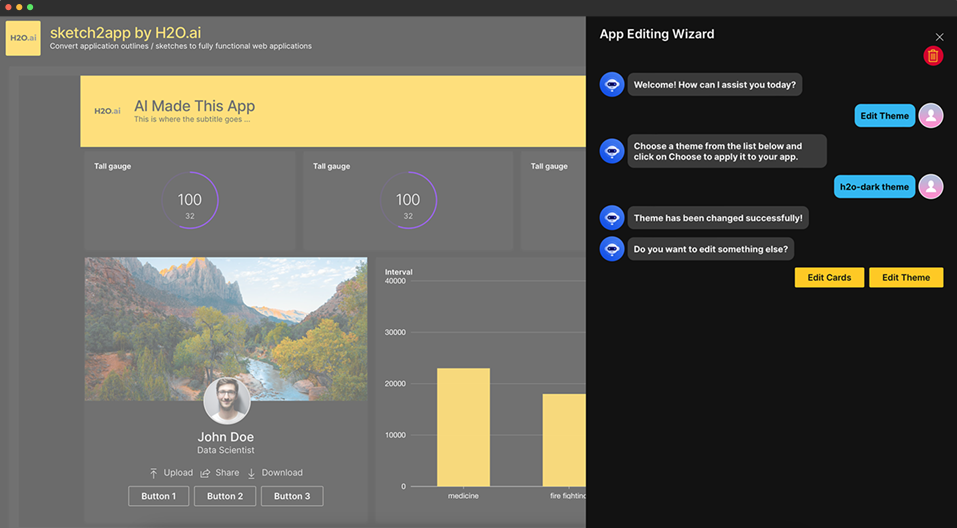

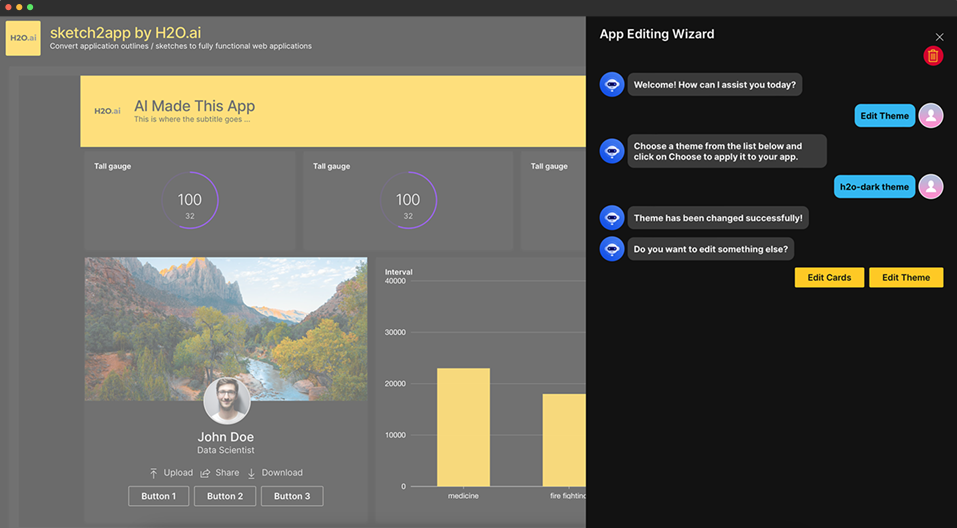

Leverage H2O.ai's low-code/no-code app development frameworks (H2O Wave, Sketch2Wave)

Pre-built templates for common use cases

Built on open source models

We make open source models enterprise ready

We’ve fine-tuned open source models to perform faster and more accurately than any other open source model available on the market today.

Use our built-in guardrails, or bring the guardrails of your choice.

We’ve designed and developed the world’s best LLM-powered assistant that is grounded in your internal trusted knowledge base.

We made it easy to deploy, install and upgrade. We take the pain out of fine-tuning, testing, deploying and updating foundational models and algorithms with our API library of connectors and pre-built integrations.

Size matters

We understand that size matters. That's why we offer the smallest operational footprint possible, running on the GPUs you already have. With our retrieval-augmented generation (RAG) technology, you can seamlessly integrate our models with your existing data store.

We give customers total control and customization over their AI models. This level of customization and control is unmatched by anything in the market today.

We offer 13B, 34B, or 70B Llama 2 models that are up to 100x smaller and more affordable while maintaining human-level accuracy. We deliver the best open source models that efficiently accomplish tasks at a fraction of the cost to run and operate.

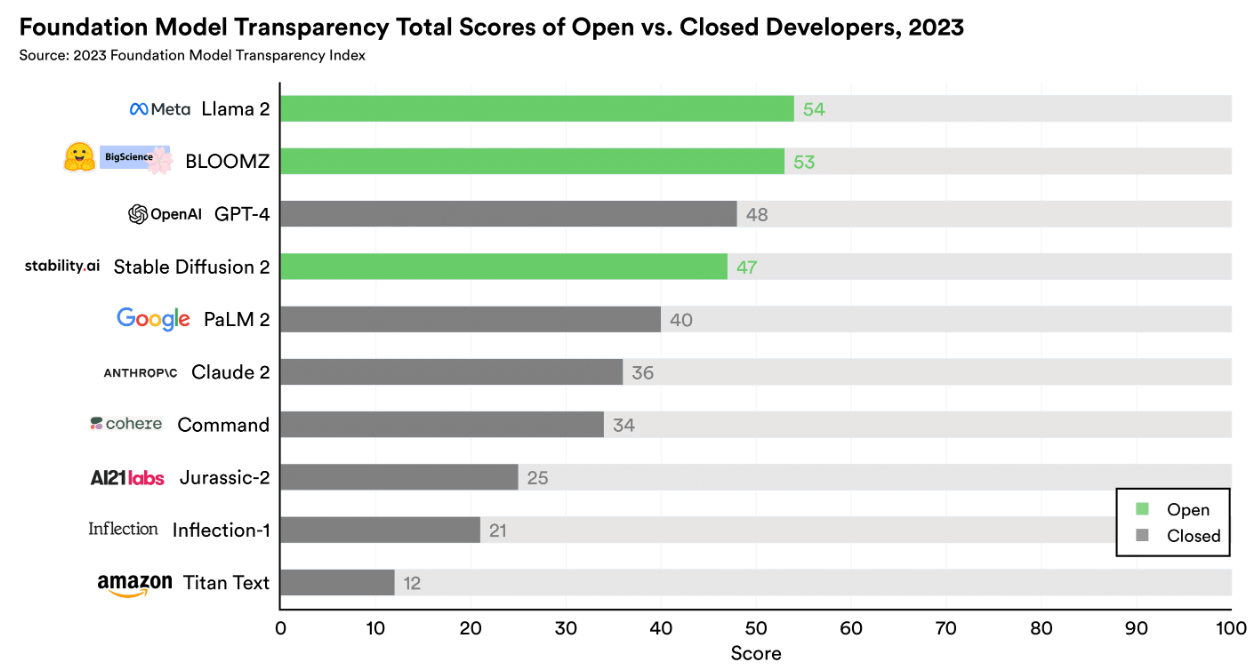

OSS models are more transparent

According to the 2023 Foundation Model Transparency Index by Stanford University Center for Research on Foundation Models:

Open models lead the way: two of the three open models score greater than the best closed model.

Create your own large language models and build enterprise-grade GenAI solutions with the H2O LLM Studio Suite

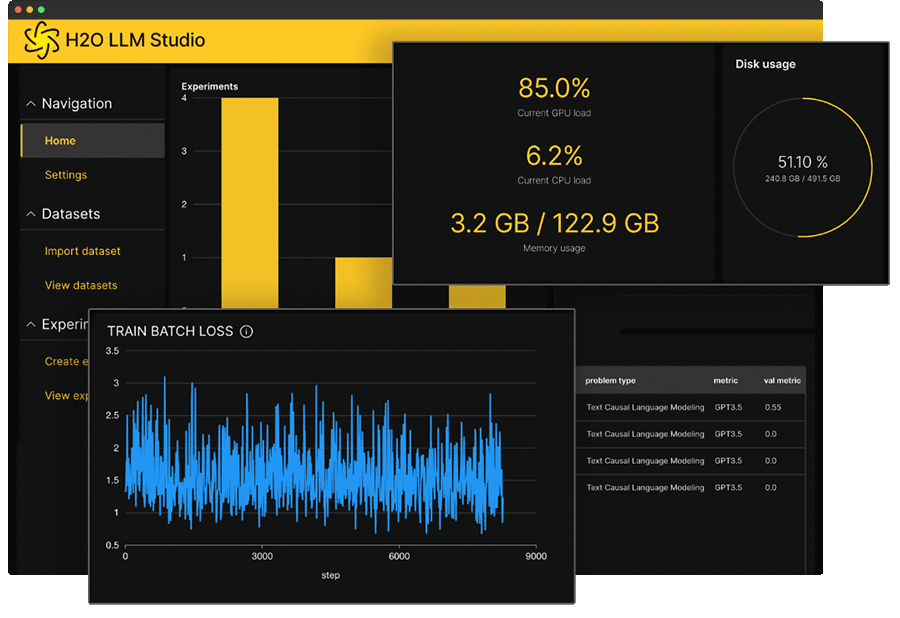

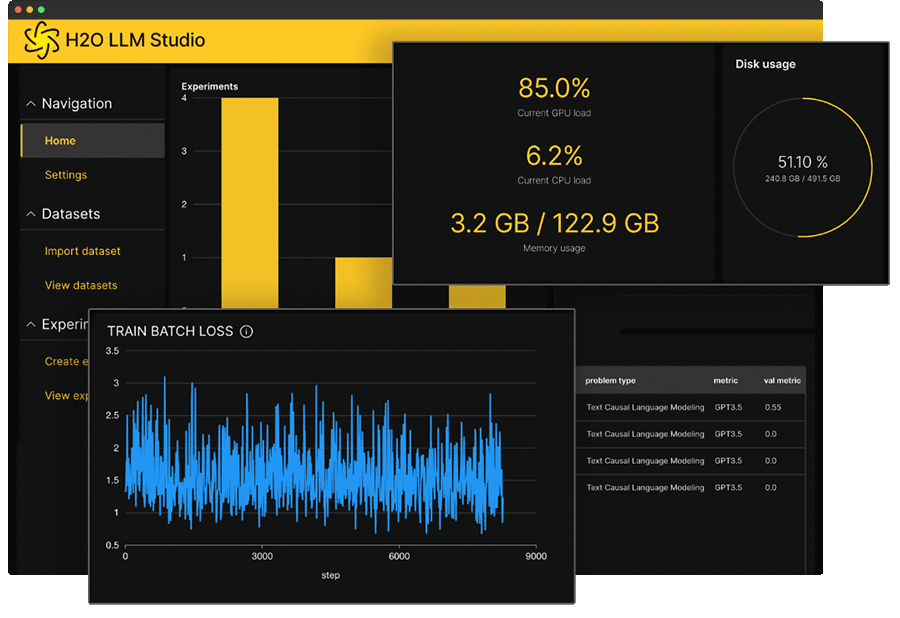

H2O LLM Studio was created by our top Kaggle Grandmasters and provides organizations with a no-code fine-tuning framework to make their own custom state-of-the-art LLMs for enterprise applications.

-

Curate

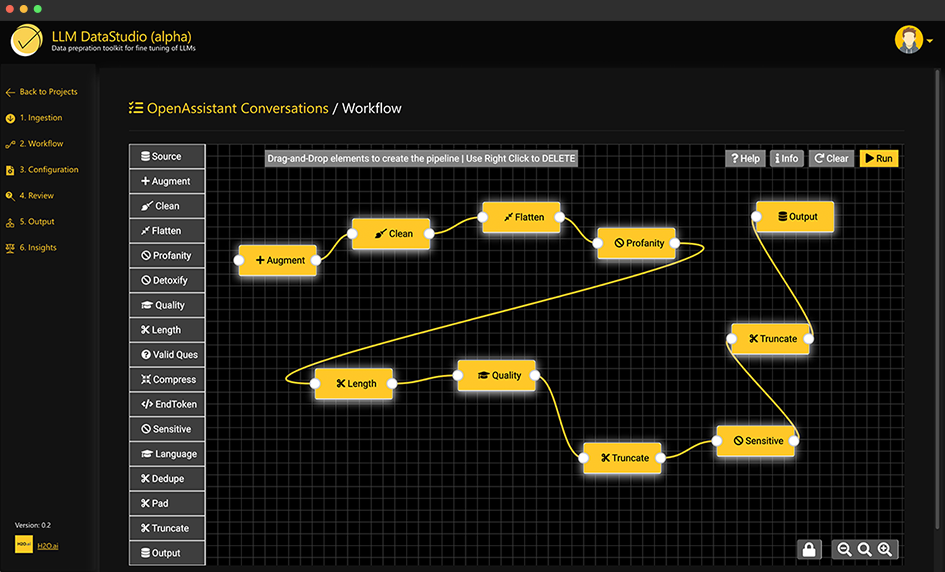

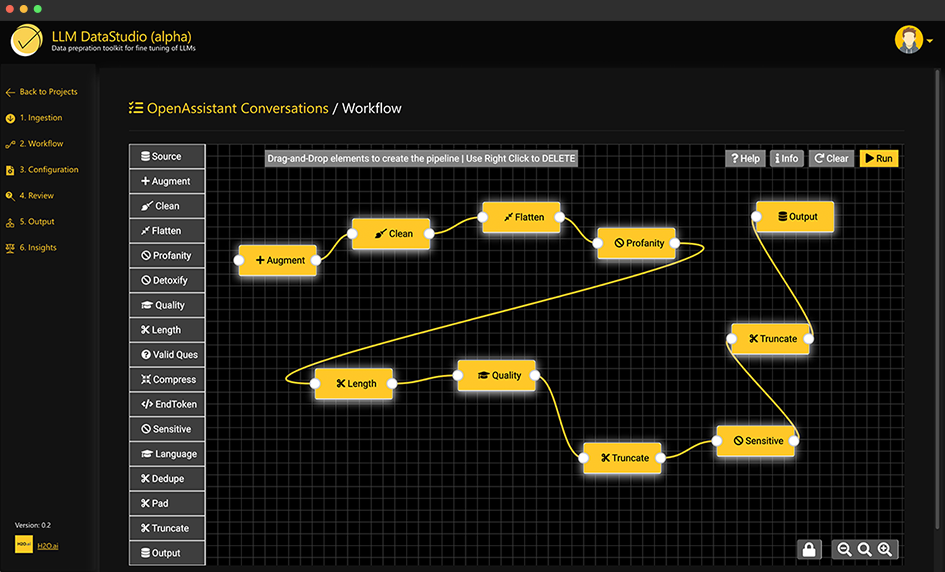

H2O LLM Data Studio -

Prep

H2O LLM Data Studio -

Fine Tune

H2O LLM Studio -

Evaluate

H2O LLM Eval Studio -

Sketch It

H2O LLM App Studio

Convert your unstructured data (documents, audio, files) to Q:A pairs for LLM fine-tuning

Prepare and clean your data for LLM fine-tuning and other downstream tasks

Fine-tune state of the art large language models using LLM Studio, a no-code GUI framework

Get a custom leader board comparing high-performing LLMs and choose the best model for your specific task

Create LLM-powered AI applications as fast as you can sketch it using LLM App Studio!

Develop, deploy and share safe and trusted applications

Keep your data isolated and secure. Provisioning and deployment are built into the H2O GenAI App Store. Choose the most cost-effective models for your use case, ensure the safety of your data, and create reusable components to scale application development.

- Data science expertise

- Leverage OSS LLM models

- Supercharge autoML with GenAI

- Open source vs enterprise

Data science expertise

10+ years of experience serving hundreds of Fortune 2000 companies

H2O.ai created the first open source AI for the enterprise, and the first .ai domain

On premises, multi-cloud and SaaS support

Multiple successful generations of ML/AI platforms (H2O-3/Driverless AI)

Consistent visionary leadership in Gartner MQs

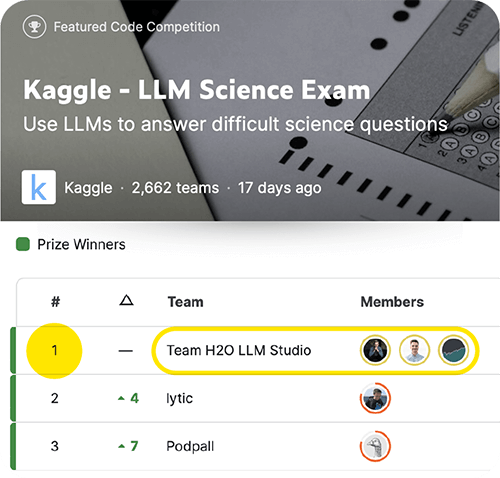

30 Kaggle Grandmasters at H2O.ai

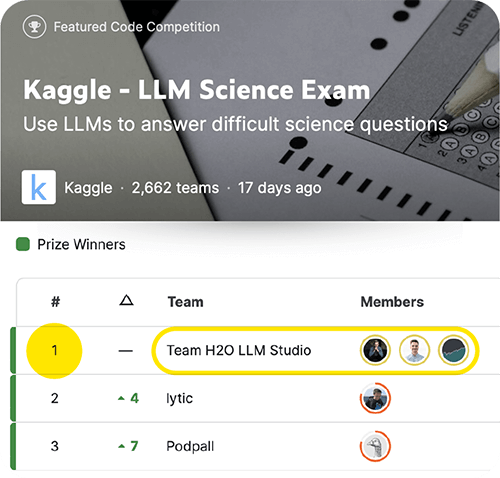

NEWS FLASH

Team H2O LLM Studio won first place in the 2023 Kaggle LLM Science competition using RAG!

Fine-tuning of LLMs/NLP

Data prep (doc to Q/A, cleaning, filters) for LLM fine-tuning

Fine-tuning for custom languages/styles/tasks

Supports all GPU types and all LLM types (Falcon, Llama, Code Llama, etc.)

Custom Embeddings for VectorDB

Custom NLP safety models for LLMs (built with H2O Hydrogen Torch)

Lowest TCO, lowest risk

Always on top of the latest open source LLMs and techniques like quantization

Llama 2-based models for chat and coding perform comparably to GPT-4

Runs on commodity hardware (even on single 24GB GPU)

OSS community has the highest pace of innovation
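The quantization and single-GPU points above come down to simple arithmetic: the memory needed for model weights is roughly the parameter count times the bits per weight, divided by 8. The sketch below works through that for the model sizes named on this page. It is a back-of-the-envelope estimate only: it ignores the KV cache, activations, and runtime overhead, and the source does not say which model and precision the 24GB figure refers to. `weight_memory_gb` and `fits` are hypothetical helper names.

```python
def weight_memory_gb(params_billion, bits_per_weight):
    """Approximate memory for weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def fits(params_billion, bits_per_weight, gpu_gb):
    """True if the weights alone fit in GPU memory (ignores KV cache and overhead)."""
    return weight_memory_gb(params_billion, bits_per_weight) <= gpu_gb


for size in (13, 34, 70):
    for bits in (16, 8, 4):
        gb = weight_memory_gb(size, bits)
        verdict = "fits" if fits(size, bits, 24) else "does not fit"
        print(f"{size}B at {bits}-bit: ~{gb:.1f} GB of weights, {verdict} in 24 GB")
```

Under these assumptions, a 13B model needs about 26 GB of weights at 16-bit precision but about 6.5 GB at 4-bit, which is why quantization matters for commodity GPUs.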

Performance

The latest open source LLMs are on par with proprietary models for both chat and code

State-of-the-art vLLM-based model deployment and inferencing

Fully scalable architecture designed for multi-user deployment

100+ queries/minute on single GPU deployments (Llama 13B)

All components (VectorDB, parsers, LLMs) scale horizontally with Kubernetes (k8s)

Advanced predictive analytics and decision support from AutoML

Easily extract business insights from industry-leading AutoML (H2O Driverless AI)

Ask business questions, get answers based on predictions and Shapley reason codes

Full automation from data to business recommendations

Industry-leading AI/ML platforms

Industry-leading Open Source Tabular ML and AutoML (H2O-3)

Industry-leading multimodal AutoML for Time-Series, NLP, Images, with Python recipes (H2O Driverless AI)

Industry-leading standalone Java/C++ deployment (H2O-3/H2O Driverless AI)

Unstructured transformers-based Deep Learning fine-tuning for image/video/audio/text (H2O Hydrogen Torch)

Automatic labeling tools for unstructured data with zero-shot models (H2O Label Genie)

Supervised document annotation engine (H2O Document AI)

Model management, deployment, inferencing, and monitoring for H2O and third-party models

| Features | Enterprise h2oGPTe | Open Source h2oGPT |
|---|---|---|
| Prompt Engineering | ✓ | ✓ |
| Data Prep | ✓ | ✓ |
| Fine-Tuning | ✓ | ✓ |
| Inference | ✓ | ✓ |
| Document Search | ✓ | ✓ |
| On Premises | ✓ | ✓ |
| GCP | ✓ | ✓ |
| AWS | ✓ | ✓ |
| Azure | ✓ | ✓ |
| Retrieval-Augmented Generation (RAG) | ✓ | ✓ |
| Managed Cloud | ✓ | |
| Hybrid Cloud | ✓ | |
| Validation | ✓ | |
| Guardrails | ✓ | |
| Scalability | ✓ | |
| Installers | ✓ | |
| Security | ✓ | |
| Multi-Tenancy | ✓ | |
| Enterprise Support | ✓ | |
| Enterprise RAG | ✓ | |
| LLM MLOps | ✓ | |