In-memory Big Data: Spark + H2O

By H2O.ai Team | March 25, 2014

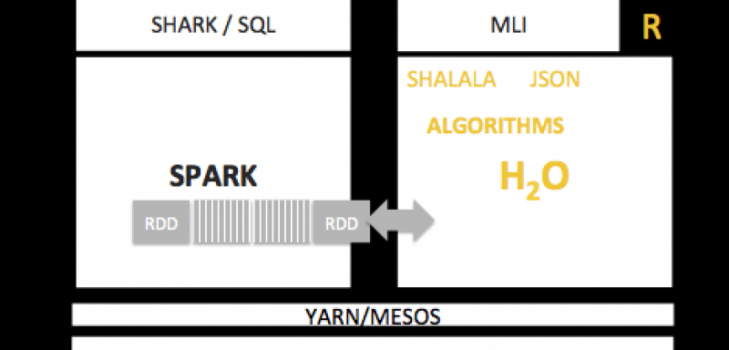

Big Data has moved in-memory. Customers who join and munge data with SQL via SHARK and Apache Spark also need regressions and Deep Learning. To weave their SQL workflows seamlessly into Data Science and Machine Learning, we are architecting a simple RDD data import-export in H2O. This brings continuity to their in-memory interactive experience, and adds support for Spark MLI through our native Scala API, Shalala.

Big Data users can now use SHARK to extract and fuse datasets, then use H2O for better predictions.

HDFS → Spark → SHARK/SQL → RDD → h2o.readRDD() → h2o.deepLearning() → h2o.predict() → h2o.persist(RDD or HDFS)
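The pipeline above can be read as a chain of stages: select rows with SQL, import the resulting partitions into a frame, train, predict, and export the scored rows. The following is a minimal sketch of that shape in plain Python, with no Spark or H2O dependency. Every function name here is a hypothetical stand-in for a stage named in the pipeline, not the actual H2O or Shalala API, and a one-variable least-squares fit stands in for the Deep Learning model.

```python
from dataclasses import dataclass
from typing import Dict, List

# All names below are hypothetical stand-ins for the pipeline stages;
# none of them is a real Spark, SHARK, or H2O call.

Row = Dict[str, float]


def shark_sql(partitions: List[List[Row]], min_x: float) -> List[List[Row]]:
    """Stand-in for a SHARK/SQL filter: keep rows with x >= min_x in each partition."""
    return [[row for row in part if row["x"] >= min_x] for part in partitions]


def read_rdd(partitions: List[List[Row]]) -> Dict[str, List[float]]:
    """Stand-in for h2o.readRDD(): flatten row partitions into one column-wise frame.

    Assumes every row has the same keys, so the columns stay aligned.
    """
    frame: Dict[str, List[float]] = {}
    for part in partitions:
        for row in part:
            for name, value in row.items():
                frame.setdefault(name, []).append(value)
    return frame


@dataclass
class LinearModel:
    slope: float
    intercept: float


def train(frame: Dict[str, List[float]], x: str, y: str) -> LinearModel:
    """Stand-in for h2o.deepLearning(): ordinary least squares on one feature."""
    xs, ys = frame[x], frame[y]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((v - mean_x) ** 2 for v in xs)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(xs, ys))
    slope = sxy / sxx
    return LinearModel(slope, mean_y - slope * mean_x)


def predict(model: LinearModel, frame: Dict[str, List[float]], x: str) -> List[float]:
    """Stand-in for h2o.predict(): score every row of the frame."""
    return [model.slope * v + model.intercept for v in frame[x]]


def persist(frame: Dict[str, List[float]], predictions: List[float],
            n_partitions: int) -> List[List[Row]]:
    """Stand-in for h2o.persist(RDD): export the frame plus predictions as row partitions."""
    columns = dict(frame, prediction=predictions)
    names = list(columns)
    rows = [dict(zip(names, values)) for values in zip(*columns.values())]
    return [rows[i::n_partitions] for i in range(n_partitions)]


# Toy data laid out as two partitions of rows, as an RDD would hold them.
partitions = [
    [{"x": 0.0, "y": 1.0}, {"x": 1.0, "y": 3.0}],
    [{"x": 2.0, "y": 5.0}, {"x": 3.0, "y": 7.0}],
]

selected = shark_sql(partitions, min_x=1.0)
frame = read_rdd(selected)
model = train(frame, "x", "y")
scored = persist(frame, predict(model, frame, "x"), n_partitions=2)
```

The point of the sketch is the hand-off between stages: the data stays in memory from the SQL step through training and scoring, and only the import and export steps change its layout.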

Calling h2o.deepLearning() from within the Scala interface, alongside Spark (via Shalala), will make the workflow even more seamless for end users.


