The new H2O release 3.10.5.1 brings a shiny new feature: integration of the powerful XGBoost library into the H2O Machine Learning Platform!

XGBoost is an optimized distributed gradient boosting library designed to be highly efficient, flexible, and portable.

XGBoost provides parallel tree boosting (also known as GBDT, GBM) that solves many data science problems in a fast and accurate way.

By integrating XGBoost into the H2O Machine Learning Platform, we not only add one of the most powerful machine learning algorithms to the family of provided algorithms, but also expose it with all the nice features of H2O: Python and R APIs, the Flow UI, real-time training progress, and MOJO support.

Example

Let’s quickly try to run XGBoost on the HIGGS dataset from Python. The first step is to get the latest H2O and install the Python library. Please follow the instructions on the H2O download page.

The next step is to download the HIGGS training and validation data. We can use sample datasets stored in S3:

wget https://s3.amazonaws.com/h2o-public-test-data/bigdata/laptop/higgs_train_imbalance_100k.csv

wget https://s3.amazonaws.com/h2o-public-test-data/bigdata/laptop/higgs_test_imbalance_100k.csv

# Or use full data: wget https://s3.amazonaws.com/h2o-public-test-data/bigdata/laptop/higgs_head_2M.csv

Now, it is time to start your favorite Python environment and build some XGBoost models.
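If wget is not available, the same two files can be fetched from Python itself. The following is a minimal sketch using only the standard library; the helper name `fetch_all` and its `fetch` parameter are illustrative choices, not part of H2O.

```python
import os
from urllib.parse import urlparse
from urllib.request import urlretrieve

# URLs taken from the wget commands above.
BASE = "https://s3.amazonaws.com/h2o-public-test-data/bigdata/laptop/"
URLS = [
    BASE + "higgs_train_imbalance_100k.csv",
    BASE + "higgs_test_imbalance_100k.csv",
]


def fetch_all(urls, dest_dir=".", fetch=urlretrieve):
    """Download each URL into dest_dir and return the local paths.

    Files that already exist locally are not downloaded again.
    `fetch` is a hypothetical hook (defaults to urlretrieve) that makes
    the helper easy to exercise without network access.
    """
    paths = []
    for url in urls:
        name = os.path.basename(urlparse(url).path)
        target = os.path.join(dest_dir, name)
        if not os.path.exists(target):
            fetch(url, target)
        paths.append(target)
    return paths
```

Calling `fetch_all(URLS)` places both CSV files in the current directory, which matches the relative paths used in the rest of this example.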

The first step is to start H2O on a single-node cluster:

import h2o

h2o.init()

In the next step, we import and prepare data via the H2O API:

train_path = 'higgs_train_imbalance_100k.csv'

test_path = 'higgs_test_imbalance_100k.csv'

df_train = h2o.import_file(train_path)

df_valid = h2o.import_file(test_path)

# Convert the first column (the response) into a categorical column

df_train[0] = df_train[0].asfactor()

df_valid[0] = df_valid[0].asfactor()

After data preparation, it is time to build an XGBoost model. Let’s try to train 100 trees with a maximum depth of 10:

param = {

"ntrees" : 100

, "max_depth" : 10

, "learn_rate" : 0.02

, "sample_rate" : 0.7

, "col_sample_rate_per_tree" : 0.9

, "min_rows" : 5

, "seed": 4241

, "score_tree_interval": 100

}

from h2o.estimators import H2OXGBoostEstimator

model = H2OXGBoostEstimator(**param)

model.train(x = list(range(1, df_train.shape[1])), y = 0, training_frame = df_train, validation_frame = df_valid)

At this point we can use the trained model like a normal H2O model, and for example use it to generate predictions:

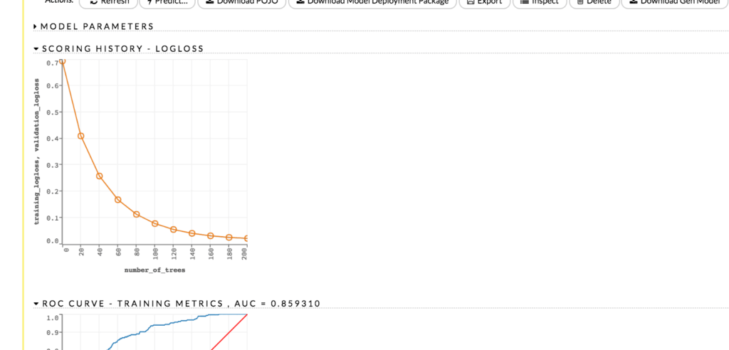

prediction = model.predict(df_valid)[:,2]

Or we can open the H2O Flow UI and explore model properties in a nice, user-friendly way:

Or rebuild the model with different training parameters:

Technical Details

The integration of XGBoost into the H2O Machine Learning Platform utilizes the JNI interface of XGBoost and the corresponding native libraries. H2O wraps all JNI calls and exposes them as regular H2O model and model builder APIs.

The implementation itself is based on two separate modules that extend the core H2O platform.

The first module, h2o-genmodel-ext-xgboost, extends the h2o-genmodel module and registers an XGBoost-specific MOJO. The module also contains all necessary XGBoost binary libraries. Right now, the module provides libraries for OS X and Linux; Windows support is coming soon.

The module can contain multiple libraries for each platform to support different configurations (e.g., with/without GPU/OMP). H2O always tries to load the most powerful one (currently a library with GPU and OMP support). If it fails, the loader tries the next one in a loader chain. For each platform, we always provide an XGBoost library with a minimal configuration (supporting only a single CPU) that serves as a fallback in case none of the other libraries can be loaded.
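The loader chain described above can be illustrated with a short Python sketch. This is not H2O's actual loader (which lives in the Java module); the library names in `CHAIN`, the intermediate OMP-only entry, and the `load` callback are assumptions made purely for illustration.

```python
def load_first_available(candidates, load):
    """Try each candidate in order and return (name, handle) for the first
    one that loads, or None if every candidate fails.

    `load` is a caller-supplied function that raises OSError when a
    library cannot be loaded (for example, because a dependency is missing).
    """
    for name in candidates:
        try:
            return name, load(name)
        except OSError:
            # This configuration is unusable here; try the next one.
            continue
    return None


# Hypothetical library names, ordered from most to least capable.
# The last entry stands for the minimal single-CPU fallback.
CHAIN = ["xgboost_gpu_omp", "xgboost_omp", "xgboost_minimal"]
```

Ordering the candidates from most to least capable means the first successful load is also the best configuration the machine supports. Returning `None` when nothing loads mirrors the case where XGBoost cannot be initialized at all.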

The second module, h2o-ext-xgboost, contains the actual XGBoost model and model builder code, which communicates with the native XGBoost libraries via the JNI API. The module also provides all necessary REST API definitions to expose the XGBoost model builder to clients.

Note: To learn more about the H2O modular architecture, please review our H2O Platform Extensibility blog post.

Limitations

The current implementation has several technical limitations that we are working to resolve, and they are worth mentioning here. In general, if XGBoost cannot be initialized for any reason (e.g., unsupported platform), then the algorithm is not exposed via the REST API and is not available to clients. Clients can verify the availability of XGBoost by using the corresponding client API call. For example, in Python:

is_xgboost_available = H2OXGBoostEstimator.available()

The list of limitations includes:

- Right now, XGBoost is initialized only for single-node H2O clusters; however, multi-node XGBoost support is coming soon.

- The list of supported platforms includes:

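  | Platform | Minimal XGBoost | OMP | GPU | Compilation OS |
  |----------|-----------------|-----|-----|----------------|
  | Linux | yes | yes | yes | Ubuntu 14.04, g++ 4.7 |
  | OS X | yes | no | no | OS X 10.11 |
  | Windows | no | no | no | NA |

  Note: Minimal XGBoost configuration includes support for a single CPU.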

- Furthermore, because we are using native XGBoost libraries that depend on OS/platform libraries, it is possible that on older operating systems XGBoost will not find all necessary binary dependencies, in which case it will not be initialized and will not be available.

- XGBoost GPU libraries are compiled against CUDA 8, which is a necessary runtime requirement in order to utilize XGBoost GPU support.
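Given these limitations, client code may want to guard against XGBoost being unavailable. The sketch below builds on the `available()` check mentioned at the start of this section; the helper `pick_estimator` is a hypothetical name, and it takes the estimator classes as arguments so that it does not depend on a running cluster.

```python
def pick_estimator(preferred, fallback, **params):
    """Return an instance of `preferred` if it reports itself available,
    otherwise an instance of `fallback`.

    `preferred` must expose an `available()` method, as
    H2OXGBoostEstimator does. Any error raised by the check is treated
    as "not available".
    """
    try:
        usable = bool(preferred.available())
    except Exception:
        usable = False
    chosen = preferred if usable else fallback
    # Assumption: both classes accept the same keyword parameters.
    return chosen(**params)
```

With a running H2O cluster, one possible use is `pick_estimator(H2OXGBoostEstimator, H2OGradientBoostingEstimator, ntrees=100, max_depth=10)`. Choosing H2O's GBM as the fallback is an assumption of this example, and it only works for parameters that both estimators accept.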

Please give H2O XGBoost a chance: try it, and let us know about your experience or suggest improvements via h2ostream!