H2O MLOps

Overview

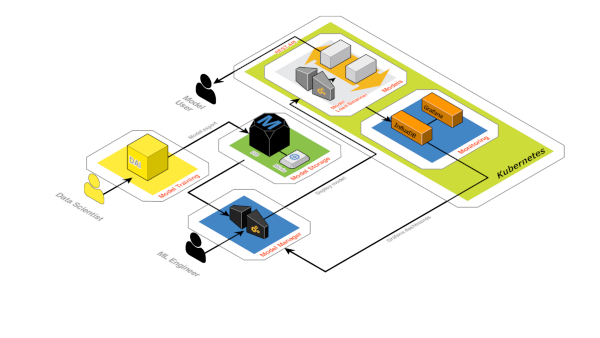

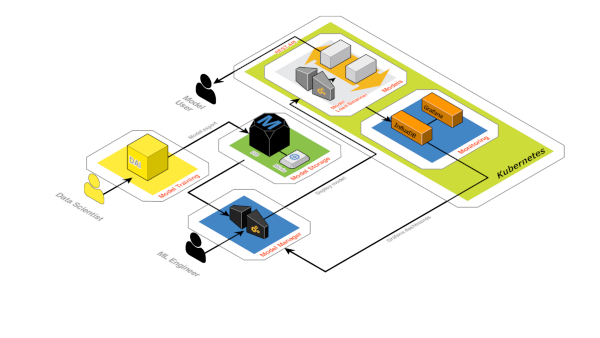

Scaling AI for the enterprise requires a new set of tools and skills designed for modern infrastructure and collaboration. H2O MLOps is a complete system for deploying, managing, and governing models in production. It integrates seamlessly with H2O Driverless AI and open source H2O for model experimentation and training.


Low-latency MOJO scoring pipeline: train once, run anywhere

All your H2O models in one place for monitoring and management

Real-time monitoring to detect anomalies, feature drift, and performance issues

Model management made easy with dev-test-prod environments, built-in A/B testing, and automatic retraining

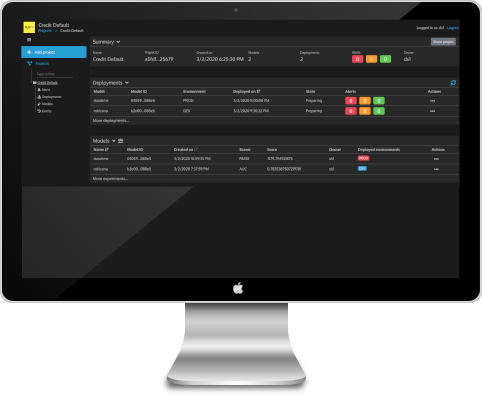

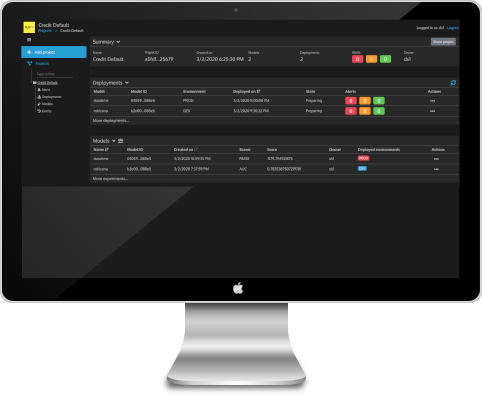

Production Model Deployment

H2O MLOps makes it easy to deploy models in production environments based on Kubernetes. MLOps engineers can quickly containerize and deploy models from the repository to any Kubernetes instance without writing code, which makes the deployment process easy and repeatable.

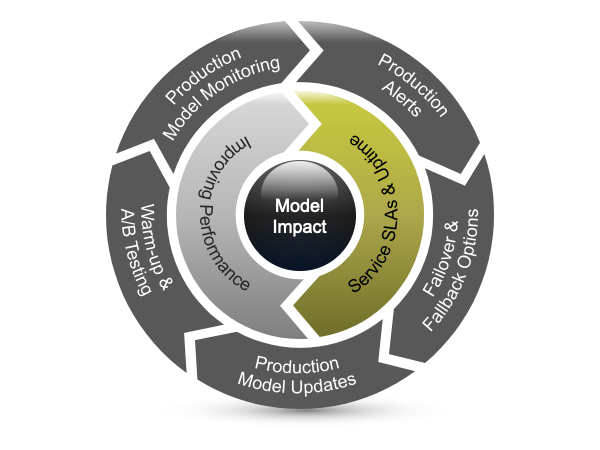

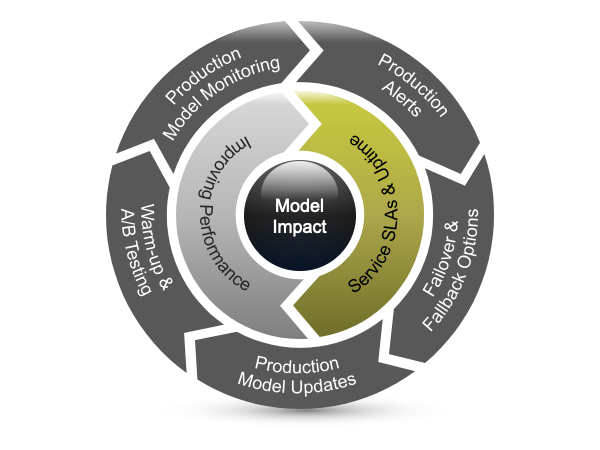

Production Model Monitoring

Changes in production data can make predictive models less accurate over time. Detecting this data drift is critical to identifying which models might need to be updated. H2O MLOps monitors service levels and data drift, with real-time dashboards and alerts that fire when metrics cross established thresholds.
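To make the idea of drift detection against a threshold concrete, here is a minimal sketch using the Population Stability Index (PSI), a common general-purpose drift score. This is an illustration only and not H2O MLOps's own method or API: the function names, the bin count, and the 0.2 alert threshold are all assumptions chosen for the example.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float],
    production: Sequence[float],
    bins: int = 10,
) -> float:
    """Compare two samples of one numeric feature; larger values mean more drift."""
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 when the baseline is constant.
    width = (hi - lo) / bins or 1.0

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range land in the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Add-one smoothing keeps every fraction non-zero so the log is defined.
        return [(c + 1) / (len(values) + bins) for c in counts]

    expected = bin_fractions(baseline)
    actual = bin_fractions(production)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def drift_alert(psi: float, threshold: float = 0.2) -> bool:
    """Return True when the drift score exceeds the (assumed) alert threshold."""
    return psi > threshold
```

In this sketch, the baseline would typically be a sample of the training data and the production sample a recent window of scoring requests. A real monitoring setup would compute such a score per feature on a schedule and tune the threshold per use case.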

Production Model Lifecycle Management

Models running in production may need more frequent updates than other software applications, and those updates must happen without downtime. H2O MLOps gives IT operations teams the tools to update models seamlessly in production, troubleshoot models, and run A/B tests in a test or live production environment.
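As an illustration of how an A/B test can split traffic between a current model and a candidate, the sketch below assigns each request to a variant by hashing a stable key. It shows a generic technique, not how H2O MLOps implements A/B testing: the function name, the variant labels "champion" and "challenger", and the 10% default share are assumptions made for the example.

```python
import hashlib


def assign_variant(request_key: str, challenger_share: float = 0.1) -> str:
    """Deterministically route a request key to 'champion' or 'challenger'."""
    if not 0.0 <= challenger_share <= 1.0:
        raise ValueError("challenger_share must be between 0 and 1")
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    # Map the first 4 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return "challenger" if bucket < challenger_share else "champion"
```

Hashing a stable key such as a customer ID, rather than drawing a random number per request, keeps each key on the same model for the duration of the test, which makes the two groups easier to compare.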

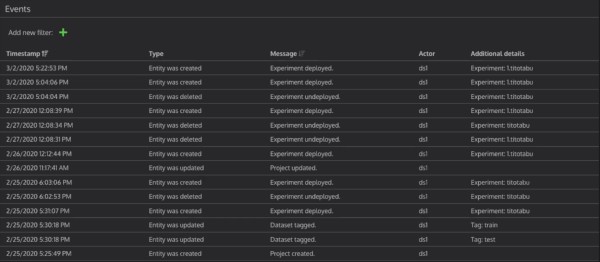

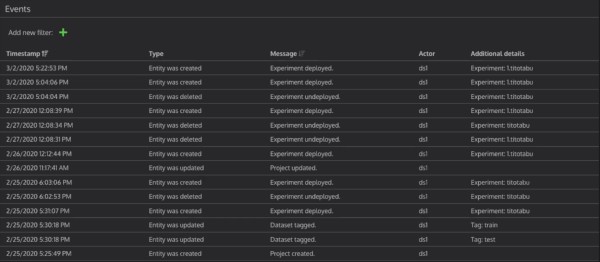

Production Model Governance

H2O MLOps includes everything an operations team needs to govern models in production, including a model repository with complete version control and management, access control, and logging for legal and regulatory compliance.