'Ask Craig': Determining Craigslist Job Categories with Sparkling Water

This is the first post in a two-part series. The second post covers turning these models into a Spark Streaming application.

The presentation on this application can be downloaded and viewed at Slideshare

One question we often get asked at Meetups or conferences is: “How are you guys different from other open-source machine-learning toolkits, notably Spark’s MLlib?” The answer is not black and white but a shade of gray. The best way to showcase the strengths of both Spark’s MLlib and H2O.ai’s distributed algorithms is to build an app that uses them together, going end-to-end from data munging and model building through deployment and scoring on real-time data with Spark Streaming. Enough chit-chat, let’s make it happen!

Craigslist Use Case Task

We scraped roughly 14,000 job titles from Craigslist Bay Area listings to build a model that classifies the unstructured text of a job title into a job category. For example, given the job title “!!! Senior Financial Analyst needed NOW !!!”, our model should predict the class “Accounting / Finance” rather than any other category. Here is the distribution of our dataset:

Word Tokenization

The first task is to tokenize each job title while removing words that add no context about the job category (these are known as “stopwords”, such as “the”, “then”, “by”, etc.). In addition to removing these words we also:

- Remove non-words (words that mix numbers and letters)

- Strip punctuation and lower-case everything

- Remove words that are used fewer than a certain number of times.

All of this can be achieved with one simple function in Spark that tokenizes the words of a given job title. For the Senior Financial Analyst example above, the tokenized result would be: {senior, financial, analyst, needed}
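The cleaning rules listed above can be sketched in plain Python. This is not the Spark function used in the post; it is a minimal illustration, and the stopword list, the function names `clean_title` and `tokenize_corpus`, and the `min_count` parameter are all assumptions made for the example.

```python
import string
from collections import Counter

# Hypothetical, abbreviated stopword list; a real one would be much longer.
STOPWORDS = {"the", "then", "by", "a", "an", "and", "of", "for", "to", "in", "now"}


def clean_title(title):
    """Lower-case a title, strip punctuation, and drop stopwords and non-words."""
    tokens = []
    for raw in title.lower().split():
        # Strip punctuation from both ends of the token ("!!!" becomes "").
        word = raw.strip(string.punctuation)
        # Keep purely alphabetic tokens only; this drops empty strings,
        # mixed tokens such as "401k", and words with inner punctuation.
        if word.isalpha() and word not in STOPWORDS:
            tokens.append(word)
    return tokens


def tokenize_corpus(titles, min_count=1):
    """Tokenize every title, then drop words seen fewer than min_count times."""
    cleaned = [clean_title(t) for t in titles]
    counts = Counter(word for tokens in cleaned for word in tokens)
    return [[w for w in tokens if counts[w] >= min_count] for tokens in cleaned]


print(clean_title("!!! Senior Financial Analyst needed NOW !!!"))
# ['senior', 'financial', 'analyst', 'needed']
```

The rare-word filter needs counts over the whole corpus, which is why it lives in `tokenize_corpus` rather than in the per-title function.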

Word2Vec

One common practice is to represent these words as a TF-IDF (Term Frequency-Inverse Document Frequency) vector. Think of this as a global weighting scheme over all words in a corpus: terms that appear in many documents are “penalized” (i.e. given low weights), while rarer terms receive higher weights. For this example, however, we are going to try another method, which uses the Word2Vec algorithm for our transformations. MLlib supports both TF-IDF and Word2Vec for feature extraction after tokenization.
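To make the TF-IDF weighting concrete, here is a small plain-Python sketch. It assumes raw term counts for TF and one common smoothed IDF, log((N + 1) / (df + 1)); other variants exist, and the function name `tf_idf` and the toy titles are made up for illustration.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {word: weight} dict per tokenized document.

    TF is the raw count of the word in the document; IDF is the
    smoothed log((N + 1) / (df + 1)), an assumption of this sketch.
    """
    n_docs = len(docs)
    # Document frequency: the number of documents containing each word.
    doc_freq = Counter(word for doc in docs for word in set(doc))
    weights = []
    for doc in docs:
        term_freq = Counter(doc)
        weights.append({
            word: count * math.log((n_docs + 1) / (doc_freq[word] + 1))
            for word, count in term_freq.items()
        })
    return weights


# Toy tokenized titles, invented for this example.
titles = [["senior", "analyst"], ["junior", "analyst"], ["truck", "driver"]]
weights = tf_idf(titles)
# "analyst" appears in two titles, so it is weighted lower than "senior".
print(weights[0]["senior"] > weights[0]["analyst"])
```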

What is Word2Vec? It stands for “Word To Vector” and is a clever way of doing unsupervised learning using supervised learning. I know, you’re thinking “what does that mean?” but bear with me. Simply put, Word2Vec encodes the meaning of a word in a text corpus as a vector of real numbers (typically 50 to 300 features per word vector). There are a few variants of the algorithm; the one Spark uses is the “skip-gram” model, which takes one word as input and tries to predict the context around that word. One cool thing about word vectors is that they can be added and subtracted; the canonical example is:

V(King) – V(Man) + V(Woman) ~ V(Queen)

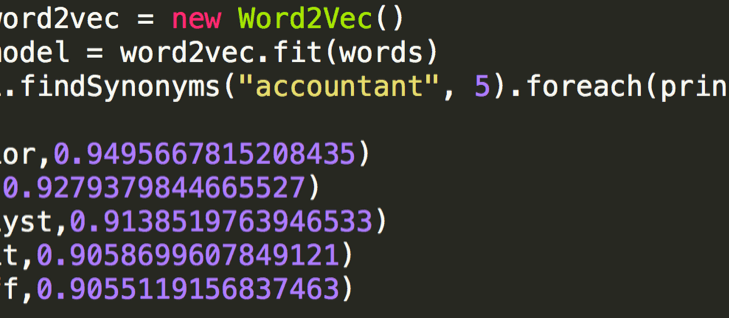

An interesting feature of the model implementation is that Spark allows us to view synonyms for a given input word.
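Both the vector arithmetic and the synonym lookup boil down to a nearest-neighbor search by cosine similarity (in MLlib the lookup is exposed as `findSynonyms` on the fitted model). The sketch below shows the idea in plain Python. The three-dimensional vectors are hand-made toys, not learned embeddings, and the helper names `cosine`, `nearest` and `analogy` are assumptions of this example.

```python
import math

# Hand-made toy vectors (dimensions: royal, male, female). Real Word2Vec
# vectors are learned from text and are not interpretable like this.
VECTORS = {
    "king": [1.0, 1.0, 0.0],
    "queen": [1.0, 0.0, 1.0],
    "man": [0.0, 1.0, 0.0],
    "woman": [0.0, 0.0, 1.0],
    "prince": [0.8, 1.0, 0.0],
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def nearest(query, exclude=(), k=1):
    """Return the k words whose vectors are most similar to the query vector."""
    scored = [(cosine(query, vec), word)
              for word, vec in VECTORS.items() if word not in exclude]
    return [word for _, word in sorted(scored, reverse=True)[:k]]


def analogy(a, b, c):
    """Return the word closest to V(a) - V(b) + V(c), excluding the inputs."""
    query = [x - y + z for x, y, z in zip(VECTORS[a], VECTORS[b], VECTORS[c])]
    return nearest(query, exclude={a, b, c})[0]


print(analogy("king", "man", "woman"))              # queen
print(nearest(VECTORS["king"], exclude={"king"}))   # ['prince']
```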

But hold on a second: Word2Vec produces vector representations of single words, NOT of a string of words like a job title! To get around this, we take the average of the individual word vectors in a title composed of N words. That is, for a given title we a) sum the individual word vectors of the tokenized, scrubbed title and then b) divide this total by the number of words in the title. In our Senior Financial Analyst example the tokens are {senior, financial, analyst, needed}, so we divide by four. The result is an mllib.linalg.Vector for each title. These average title vectors act as the input features for our classifier. Note that in Spark the default vector size is 100, which means that for each word the Word2Vec model generates a 100-feature vector that encodes the word’s meaning.
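The averaging step can be sketched in a few lines of plain Python. The two-dimensional vectors are invented for readability (the real ones have 100 features), and skipping tokens that have no vector is an assumption of this sketch rather than something the post specifies.

```python
def average_title_vector(tokens, word_vectors, size):
    """Average the vectors of the tokens that appear in word_vectors.

    Tokens without a vector are skipped (an assumption of this sketch);
    a title with no known tokens maps to the zero vector.
    """
    total = [0.0] * size
    known = 0
    for token in tokens:
        vec = word_vectors.get(token)
        if vec is None:
            continue
        # a) accumulate the element-wise sum of the word vectors
        total = [t + v for t, v in zip(total, vec)]
        known += 1
    if known == 0:
        return total
    # b) divide the sum by the number of words that contributed
    return [t / known for t in total]


# Toy 2-D word vectors, invented for this example.
toy_vectors = {
    "senior": [1.0, 0.0],
    "financial": [0.0, 1.0],
    "analyst": [1.0, 1.0],
    "needed": [2.0, 2.0],
}
title = ["senior", "financial", "analyst", "needed"]
print(average_title_vector(title, toy_vectors, size=2))  # [1.0, 1.0]
```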

Modeling

Now we are ready to get down to modeling! We have our target variable, which is of type String (“education”, “accounting”, “labor”, etc.), and our input features, which are the average title vectors for a given job title of N words. Luckily we can use H2O.ai’s library of distributed, parallel algorithms to train a classifier for our task. Below is the code that takes our Spark RDD and passes it over as an H2O RDD by creating a new class called “CRAIGSLIST” which will hold the various RDD types:

At this point, you can choose to either continue coding in the sparkling-shell OR utilize H2O.ai’s web-based GUI – known as Flow – for model building, tuning and performance metrics. In this case, we will use Flow for illustrative purposes.

Now we can inspect the data and look at some visualizations such as a histogram of the target variable and the distribution of one of the average title vector features.

Just like any other machine learning task, we randomly split the data into a training set (75%) and a testing set (25%). One could split further by adding a third, validation set, but for the purpose of this blog we will use two sets. After splitting the data, it’s time to build a model, and for this one we use a distributed (yes, you read correctly!) Gradient Boosting Machine, which is a tree-based algorithm. Using pure default values in H2O, we built a model in 16 seconds with the following model metrics:

Confusion matrices for both training and testing sets:
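For readers following along without Flow, the 75/25 split and the confusion-matrix bookkeeping can be sketched in plain Python. This is not what H2O runs internally; the function names, the fixed seed, and the handful of labels below are made up purely to show the mechanics and are not results from the model in this post.

```python
import random
from collections import Counter


def train_test_split(rows, train_fraction=0.75, seed=42):
    """Shuffle a copy of rows and cut it into training and testing parts."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def confusion_matrix(actual, predicted):
    """Return (labels, matrix) where matrix[i][j] counts rows whose
    actual class is labels[i] and predicted class is labels[j]."""
    labels = sorted(set(actual) | set(predicted))
    counts = Counter(zip(actual, predicted))
    matrix = [[counts[(a, p)] for p in labels] for a in labels]
    return labels, matrix


def accuracy(actual, predicted):
    """Fraction of rows where the predicted class equals the actual class."""
    correct = sum(a == p for a, p in zip(actual, predicted))
    return correct / len(actual)


train, test = train_test_split(range(100))
print(len(train), len(test))  # 75 25

# Made-up labels and predictions, for illustration only.
actual = ["accounting", "education", "labor", "labor"]
predicted = ["accounting", "labor", "labor", "labor"]
labels, matrix = confusion_matrix(actual, predicted)
print(labels)   # ['accounting', 'education', 'labor']
print(matrix)   # [[1, 0, 0], [0, 0, 1], [0, 0, 2]]
print(accuracy(actual, predicted))  # 0.75
```

Rows of the matrix are actual classes and columns are predicted classes, so correct predictions sit on the diagonal.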

Summary

To recap what we just accomplished in this first of two blog posts:

1. Read data into Spark and performed initial feature extraction

2. Applied the Word2Vec algorithm to the ‘cleansed’ job title words

3. Created “title vectors” which are an average of the Word2Vec vectors for a given title

4. Passed the above Spark mllib.linalg.Vector as an H2O RDD for machine learning

5. Built a Gradient Boosting Machine that was trained on the “title vectors” to predict a job category

Whew, I know that was a lot to take in, but that’s why we split this up into TWO blog posts.

Read Part 2 of this blog here

Alex + Michal

P.S. If you are attending Spark Summit 2015 in San Francisco this week, stop by the H2O booth as Michal and I would love to meet you!