Agenda:

H2O AI Cloud – 2:54

H2O AI Feature Store – 15:14

H2O Driverless AI – 32:20

H2O-3 – 40:25

H2O Hydrogen Torch – 54:51

H2O Document AI – 1:10:52

Model Validation – 1:28:36

H2O MLOps – 1:45:19

H2O Wave – 2:03:08

H2O AI AppStore – 2:24:22

H2O AutoDoc – 2:37:20

H2O Health – 2:45:03

H2O AI Feature Store Demo – 3:01:31

Read the Full Transcript

Good morning and welcome everyone to H2O's Innovation Day Summer 22 Summer 2020 to broadcast we have a great program teed up for you over the next couple hours. My name is Tara Beatty, and I'm part of the marketing team here at H2O. And a couple of different things before I introduce our first speakers, you will see at the bottom of your screen, you have the ability to both ask questions and to chat and interact with those who are here, including our speakers. So feel free to ask questions throughout the event. And what we'll go ahead and do is either have our speakers, answer them as content is displayed, or we'll have some time at the end. So again, we have a great program set up, we're going to kick it off with several of our H2O customers. And they're going to share how they are undertaking AI transformation within their organizations. And then we will hand it over to our product leaders. And you'll have the ability to hear some of the latest and greatest features and announcements coming out in H2O solutions with questions at the end. So with that, I would like to introduce our first speakers, Reed Maloney and Vinod, younger, can you go ahead and join us?

Thanks, Tara. Hey, Vinod how are you doing?

I'm good, good. How about you?

I'm doing great, we got a great show for you all. Today, we're going to run through some of the some of the innovations that have that have passed to build our entire AI cloud. And then we're gonna jump into the customers that have been driving an immense amount of success in their organizations, using H2O and drive it and building off a huge number of business use cases across industry. So I'm really excited about the panel. And because we got an hour to go through all the new innovations that Vinod and his team have gone through in the next hour. The other thing I saw the note is we got people from all over the world.

Yeah, I'm just seeing this from Canada. Cute like lately. Last one. So like, you know, Quebec, Canada, Montreal, Greece, Germany,

Germany, and then and then someone said they're from North Carolina. I didn't see that. It was I got a quick glimpse. I went to school there. I went to Duke. I miss it, but I do not miss it in the summer. So I am out of Seattle. We are having we had the worst spring ever. Great now that I know how many people are on here. It's like 75 Everyday right now. And that's like, we get eight weeks of that. And then all of a sudden, we're back into knots that so we have summer and not summer here. And I know that is not your case. For now.

It is much, much nicer. Yeah, we got great weather. Today is a little cloudy. The morning. I'm in San Francisco Bay Area, California. So welcome everyone that says jelly, very sunny, and we're gonna call it sunny California. But today's a cloudy day. But hopefully it'll get sunny before we get too far along. Absolutely. Well, this is great.

So you want to get into what we want to do is just sort of show a little bit about the maker culture and just how innovative H2O is and sort of why we innovate as well. And you know, really comes back to helping the customers be successful. And so it really started with H2O Three, H2O Three is our open source distributed machine learning. It's really what made H2O famous as a company. We believe we have a million data scientists using H2O. And it really comes from the fact that this is the easiest way to run machine learning across a broad data set. And as we built out H2O, and we had a huge number of customers using it, we started listening to what they needed. And that led to H2O driverless AI. And so instead of driverless AI helped our data scientists and our community build even faster and often find insights in their data that maybe they hadn't seen. And then they were able to go and move much more quickly in terms of solving business problems and delivering value to the business. And honestly, in the last couple of years is even like the last few years, we built so much more this year, we built H O hydrogen torch, which is no code deep learning. Just talk a little bit more about today, we've built a show document AI, which helps helps to build intelligent document models. So you can have a wide variety of formats. And if you're looking at the B roll video we just had started with and that was Bob Rogers talking from UCSF, they're able to take this huge variety of referrals coming in, they all look different. And they as able to say this is a referral and then also find where the information it needs on the on the fax even though it's in different places. So it's really unique. And that's why we think about as intelligent document models. And then we've also wants h2 a wave. And this is this helps our makers make AI apps. So we're able to have the AI consumed much more easily by the business user. And that also helps to bridge a communication gap, which we're likely talk about on the panel today between the business and the data science teams or the analytics teams that are trying To help them solve those problems, and that's such a common issue. And Shawn, I know we've talked about this. And I want to do Shawn in a second. That linking that that business unit with the data science often leads that doesn't happen leads to challenges. In a lot of organizations about getting AI adopted and H2O Wave is really built, and built to help bridge that bridge that divide. And we've lost a whole set of innovation around helping customers operate AI at scale, or on Hu ml ops, or H2O ai feature store. And so prince who, who's really involved with that from AT and T, and as a co created product, we'll talk a little bit more, he's on our panel today, it's awesome. And then we've been able to deploy this where you can basically run your models that you're building and score them anywhere. So you could score him in snowflake, you can score them in your Java environment, you can score them on a shoe on the lops. And then you can run it on any cloud. And then we've launched our a COA App Store. So we're again, helping the business find the apps that they can use to consume the API. And then we have a huge set of pre built applications, not just for, for a whole variety of different departments, but also different industries like financial services, insurance, etc, health care that you can go in and use to help accelerate your journeys even more. So it's really exciting. And this is all happened, everything I just sort of went through one by one. That's all happened the last couple of years. And again, that's just to make that's the maker culture here. We're really innovative. And that pace of innovation as we grow and as our customers adopt is only accelerating. And so, again, why are we building what we're building? Well, it comes down to meeting the requirements of our customers for them to be successful. So what we hear from customers is they want us to support all use cases, everything from big data to text and audio data, time series data, we support it all. We want to be able they want to be able to deliver very quickly, but they want to be able to deliver quickly on on projects that matter to the business. And so that's that's different interfaces. No no code, deep learning, like we just talked about one click to deployment advanced auto ml that we support. We want to support multiple users so they can democratize AI, which we also have, it's easy to explain, monitor and govern provides the highest level of accuracy, which gives them the most business value. And they're able to integrate into their existing data apps and tools.

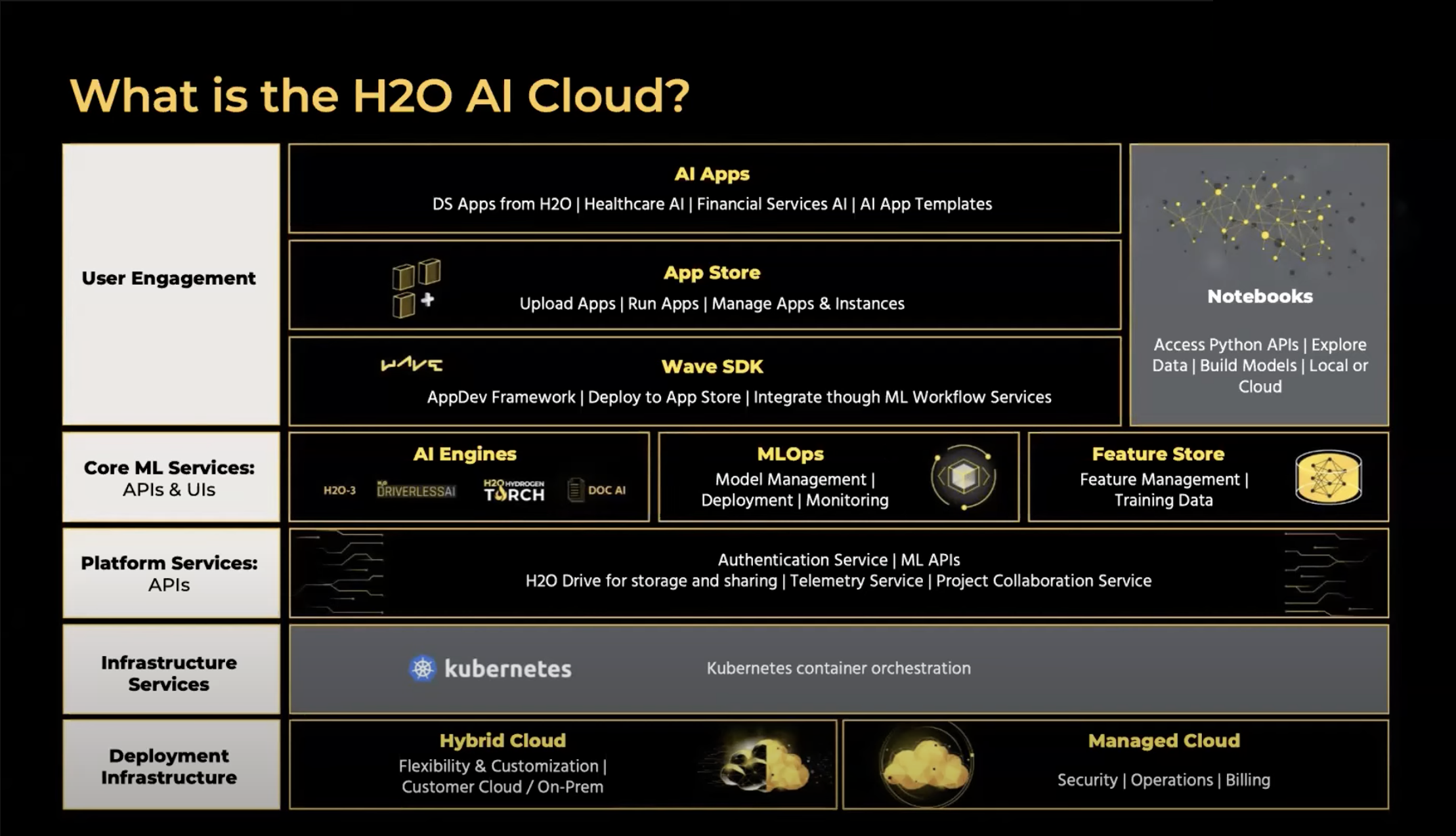

And so when we look at that all that innovation we put together into the AI cloud, why they issue a cloud provides the fastest time to value for any use case, across having multiple interfaces, having the best auto ml having intelligent document AI, no code, deep learning, optimized recipes, a huge plethora of pre built applications. It's really designed to help you deliver value to your business, we have the most comprehensive set of Explainable AI capabilities, so that you can trust the AI that you're building. And then we have the most flexible architecture. So we can integrate across any of the systems you have, you can score anywhere, you can run it in any cloud. And so we really built it so that and again, it goes back to open source as well. You're just providing flexibility, so it can work within the systems that you have to drive business value. So with that, let's talk to the customers who are really driving you know, helping to drive our roadmap and driving our innovation and are doing some really cool things with AI. So we have Prince Prince is a VP of data insights from AT and T. Shawn welcome, Director of Advanced Analytics from AES and Chris, welcome. Thanks so much for being here. He's the Managing Director, Head of Global Head of data science from Casselton commodities International. And you know, what we're going to do is just kick it off. Jensen, we'll go we'll go in that order. If you don't mind. Just you can introduce yourself, the company you work for, I'm sure no one no one's ever it. And a little bit about your role, you know, what are you doing? And where are you on your journey? You know, from the from an AI maturity perspective, you know, how far along are you just getting started? Are you really do you have 1000s of models in production? Do you have business units creating their own AI? Are you guys mainly doing it centrally? And just add a little bit of that and then we'll go deeper into some other questions about how you guys are driving success?

Sure, thanks. This isPrincipal Raj from AT&T. I lead the data science team here for fraud prevention and detection and take care of global supply chain management, AI standpoint and tracks and then various across you know, be yours. We kind of play as another role and how each and every business unit or innovate with the data and AI. So that's pretty much what I do in ATMP. to their office. And talking about little bit innovations. Obviously, we've been spending quite a lot of time and putting AI and data first in terms of any business industries that we are doing an ATM fee, as a good result, and I will say that even we partner with a hedge to wall to develop the AI Peter. So of course, I'll be talking about a bit later when with my friends here, but overall, in talking about the maturity, we are doing a great job and they're part of a journey. But still, we need to room to grow, do you want to make AI is being integrated every part of our business, and really adding a lot of value to our core business. And we want to be at the fabric of the company. So that's the goal we are going towards. But we are seeing ourselves great now I mean, in terms of scaling up, big time across enterprise, but we want a world more. So that's the current state of na TNT and what we're doing it.

Great. Thanks, Prince. Shawn, do you mind going next?

Yeah, Director of analytics today. Yes. Basically, though, I'm responsible globally for all things AI and ML at the company. We do think globally. So we're in about 13 Different countries that makes it fun with different languages that we execute with. And we do power generation commodity, we own a few utility companies are really broad in the energy industry, lots of fun, crazy, difficult challenges. It's exciting. It really is. I love what we do. And the work that we have, when it comes from a transformation. We've been on our journey for about two and a half years, building things up from the ground. We've made a variety of mistakes. But I think we've also made some good successes. So be happy to talk about those.

Thanks for sharing, Sean. Yeah, ideally, what we'll talk about is some of the mistakes our audience can, can avoid those and also the successes, so they can learn from some of the cool innovations and ideas you've come up with, to help you know really get AI going and scaling within the organization. Chris, you go next.

Sure. Hi, Chris Throup, I'm Global Head of managing director of Data Science here at CCI, similar to Shawn we are in the energy space. But we focus on everything from not just energy, but also natural gas, petroleum and all the derivatives. So it's been an exciting summer, I will say that, you know, we've we've used data for many, many years, and many of the commodity space us have used data for for quite some time. You know, we've spent the last three and a half years transforming to a common platform, and then applying AI on top of that to drive better business decisions. Historically, you know, the primary focus has been, you know, front office decisions around, you know, our natural gas plants, or how we want to handle the markets. But we're also now currently exploring with H2O, some of the middle and back office capabilities, specifically around document management, etc. Because we have a lot of contracts for debt data, etc. I think that one challenge that we face, and I'm sure Shawn maybe faces some of the same is, you know, we bring in hundreds and 1000s of datasets from around the world, including governments, vendors, etc. And so we spent a lot of time not just on the, the data, AI side of things, building that out, but also, you know, getting our data curated and organized and structured, so that it can be properly analyzed. So that's been kind of a joint effort and ongoing effort. It's very boring work and painful. But, you know, we're starting to see the value of it, as we apply H2O to a variety of use cases.

Great, thanks. Thanks, Chris. Appreciate that. We'll definitely dive into it. When we get there. I know we're going to talk about some challenges. And I saw Shawn, you nodding your head, like full agreement about this being one of your biggest challenges. And I hear this when I talk to a lot of our customers as well. So we'll get into that more in depth. The note, I know you got a couple questions you want to jump in on so?

Yeah, definitely. I mean, well, first question is since the group I had came to mind for the current macroeconomic climate, market conditions and a lot of pressures, impatient being high and you know, there's fears of recession coming about I'm just curious because in the in the past thing, you know, AI was kind of like a nice to have but now I think we believe that do you think that AI is becoming more and more critical to help organizations operate better, more efficiently? Maybe even like, be smart about where they spend their money what to do? I'm just curious like, how are you seeing that how are you using AI are seeing the economic climate impact and what changes are you bringing to your journey?

Alright, so I would say that I know we are going through some sort of an a tough time in omics standpoint. But first AI is not a nice to have an AI. In fact, you want to have AI in all the places. And now being inserted this AI and all the machine learning models part of our business process, right from our sales, you know, it's playing a huge role, you know, across a TNT, either it's a fraud or churn or, you know, bringing some customer insights or global supply chain management or field operations, it's everywhere, it's out there. So, it is very important for us and difficult time like this. But now, in fact, you will, we are thinking we want to move faster, right? I mean, you want to improve our operational efficiency, we want to really look at our ML operations, what how we do it. And when millions of transactions been, you know, coming through daily basis, you can think of company like an AT and T produced integrated part of our sales channels, you know, just taken a fraud as an example. Or, like more than 10 million transactions every day, it's been scored in real time, you know, the help of H2O, the module and the ML ops and all those things that needs to really work fast. So we are in a situation now we want to actually improve the operational efficiency, and we want to move faster. And we want to identify the faster patterns in real time and, and go and put the new model in place. You know, either we are retraining or putting a new model in place. So that's the kind of sentiment what we have here, though, in all of the things that are happening. In fact, it's encouraging us to, you know, move faster. I mean, if you want to fail, I mean, you want to fail faster, so that they can recover it, and really improve operational efficiency.

That's, I'm curious, what are you? Chris, I know, from the trading side, are you seeing headbands? And how are you like using this to change your models? Maybe they're, like, you know, you're listening about like a black swan events where we need to backtester all our models or change all our models, and give us what you're doing?

Yeah, so one of the reasons we chose going back to the beginning of H2O was, you know, we saw, you know, the need for faster data science, I think, Prince hit on it, you know, we saw a changing environment, we saw that many data scientists kind of, you know, my team is, you know, people are comfortable, they write custom code, they use it packages, they investigate features, and it's a lengthy process. And so, you know, I wanted to rapidly like, kind of just revolutionize that, because as we see the market changing, we see new datasets coming to bear, you know, every day, there's, there's new datasets, whether they're satellite based data, you know, new weather products, all kinds of different things that are happening out there. And so as we look at this kind of changing economy, we've got to be testing data, and new datasets all the time to try and understand what's going to help us better predict the future. And, you know, it's a real challenge. You know, we're, we're not at&t, I'm sure Prince has a team of hundreds, if not 1000s, you know, or as you know, so we have a small team of, you know, three or four data scientists. And so we need to be highly efficient and highly reactive to what's going on in the market and look at things and come up with projections, impacts of inflation, in fact, you know, impacts of slowing GDP, you know, etc, etc. So it's, it is absolutely driving us to be even faster. And h2 is very important to me, as part of this process, because it truly accelerates the feature engineering and model selection.

What do you show me any thoughts?

I would be echoing both of what has been said already here. You know, Chris, when I had mentioned a little bit about the data challenges, I like in H2O is to the fast checkout lane in the grocery store, okay, going in identifying everything that I need. And now okay, I've got it, let's move. And it helps enable that. You know, you originally asked me about the economic environment impacting the AI journey, being in the energy space, there's a lot of turmoil going on right now, a lot of changes. And I think it's going to continue changing how we use energy is going to change, which is going to impact how responsive we in our business need to be. And H2O was pivotal to that. But along with, you know, dovetail what Chris said, you know, also getting people to understand the power of using a tool like this is also part of the transformation. And I made a little joke to the data scientists the other day, more of a little bit of a jab at them, because one of them was complaining about the business. They're like, doesn't the business see how important this is and how easy it helps them do their work? And I'm like, yeah, it's kind of like getting a data scientist to figure out that H2O. helps them move faster to you guys. It goes with all right to che point taken, you know, getting them to understand that I think is important. And it's an evolution overall and a journey that we're all on. You know, Shawn,

you say that, Shawn, I'm sorry, sorry, I didn't mean to cut you off. But I think adoption by data scientists have a platform is one of the hardest challenges that that, that I've faced. And I'm really proud of my team, because I asked them to, to put aside their historical reliance on code and coding everything in total control, and trust in the platform. So it's a, it's been a real journey on that.

It has been and I have a data scientist, particularly with the Pythonic way of doing things that H2O is enabled outside of other tools. I mean, that helps them do the code monkey stuff that they like doing. But I've got a data scientist who loves it. He's like, every new day, I've got a new model that I get to, you know, see what H2O comes up with for the project that we have. But yeah, adoption is, is a challenge, but it's fun.

And maybe you find out a little bit, too, Shawn, you said the adoption? Yes. One is this, you know, the platform and data scientist? And if we go a little bit north, you know, we have the business customers there. Right? I mean, the domain expertise, I'll just use one example that, you know, the fraud. Yes, I mean, this machine learning models are scoring in real time and predicting whether it's a fraud or not. And based on the results, you know, the fraud analyst remedied the situation, particular transaction with a customer. Right, but, but, I mean, what is the threshold that fraud analyst should work with? Right? I mean, should I just use the range, anything about 80 percentage, you know, because it's, it's a balance activity that we're going to give it to the customer experience as well. You don't want on either customer too much because of our AI suspecting, you know, suddenly person for fraud. And so for balance activity, but at the same time, okay, now, the data science understands this, you know, AI, and it's always the predictions, but now, if you think about from the business angle, the fraud analyst, even the product analyst needs to adopt, you know, the to let the AI is giving me a recommendation using that now, I'm gonna take some some trepidation. You know, and I want to keep the customer, you know, the frictionless experience as well. So it's a kind of a challenging definitely, when the AI is really playing in real time, in a world of a situation. But But everybody's learning I mean, for example, I will say in this situation, what we have done, we use H2O. And our data scientists in a put together, they used to have a app, and they explained it and show them each and every pressure, what is the value of it to a fraud analyst. The business standpoint, the fraud analyst understood those, the pressures where they need to play a role, because they they go really tight the process, they're going to have a lot of color to the Caribbean, you know, they can they handle the volume. So it's kind of a balance activity. But definitely, it's to they want to sort of the explanatory tool really helping us to build a bridge between the data scientist and the platform and the business.

Yeah, I mean, I think that's a great one to sort of dive into maybe even more, which is, you know, what are some lessons learned for the audience, as you look to scale AI within your organization's you talked about helping the data scientists and science science teams go from code to sort of using a platform, and they still might use code as you were talking about Shawn, but they're using elements of the platform to help accelerate or the fast checkout lane? As I think you said, to move through that, you know, what are some things that that could help the audience say, Look, when you're trying to operationalize AI at scale? How is this going to, you know, what are some things they can they can avoid, from a pitfall perspective that maybe you guys ran into? And what are some maybe unique solutions that you came up with, to? To address those pitfalls? So, Prince, I don't really just to keep going on? Where were you were like, do you have just a couple of points to help maybe help the audience out if they're trying to operationalize and scale AI right now?

Yeah, I mean, you know, definitely this problem was there, I mean, just taking this AI, you know, into the business process. In the scale of like, I talked about millions of transaction, which should be scored in real time and things like that. But if I talk about a little bit of a technical challenges that what we have faced before even going there, you know, our data scientists, we always say that, okay, the typical process is again to data, then do the feature engineering, the magic happens, then we build a model, and then we hand it over to another team. So they're gonna take the model, and they're gonna put it in production. Right. So in other words, we kind of see it. This process is kind of duplicated. I mean, in the sense, you're coding twice, actually part of your training, and then part of the scoring time. So we want to avoid that. I mean, we see that, you know, data scientists building on model and an ML engineer is kind of putting into production because the data is not going to be, you know, clean and easy and during the time of your training, and it's not going to be the same during the demo for scoring. So we definitely look at, you know, how we can avoid that sort of a problem, right? I mean, that is a one lesson learned. We don't want to quote twice. And sometimes even we see it in the same team, that you have two different data scientists and developing the same feature, they're working on the same variable, right. I mean, we see that duplication also happens, that's one of the challenges that we are seeing. And always the speed to market is very important, you know, because like, like Chris mentioned at the beginning, and Shawn to the data is changing, you know, before COVID, during COVID, after COVID. You know, when you have a company like AT NTV, how many channels, they have retail stores, we have digital platform and care, things changes, we close down all the datasets during the pandemic, okay? Now, the fraudsters are taking a different pattern of things to victimize our customers. So, so this change is all happening. And at the same time, the speed that we are developing this machine and models, and we don't want a duplication of work, and we don't want to quote twice the same sort of work we do. So these are some of the challenges that we have seen. And, and when we saw those challenges, and definitely worked with H2O, and and then discussing because we are, you know, a big fan of the history module, and we use various tools and technologies from you guys. And that's where the core development started happening. Kind of an innovation from both our side Peter store, isn't it?

Great. Thanks, Prince. Shawn, what about you? Miss, I think,

I think it's, I mean, mistakes, you can took a look at it from like people process technology. And at the end of the day people are making are what make things happen. And I would say, for the last year, when I talked with business, and they talked about a project that they want, I say, okay, AI ml, it changes your business process, are you ready to change? And if they say, Well, what do you mean? I said, Well, let's take a look at your business problem that you're trying to solve with a tool called AI ml. And trying to reframe the conversation to help them see that this isn't just something that you pull off the grocery shelf, that it is something that you invest time energy into, and that it requires additional subject matter experts on their team, because data scientists don't know everything about the business side. So I think the you know, I look at the mistakes is not engaging the business in the proper way. From a people side, I think from the technology side, we did a good job of getting in data and creating data pipelines and a data lake that even the business could leverage at the same time that the data scientists are leveraging. And that allowed us to bolt on technology to to accelerate, and that's really what I call H two O is an accelerator of things. And, you know, then it gets into the challenges. Now as we're executing projects, I sometimes lead data scientists play too much. They experiment, that's what they love to do. So that's kind of how to keep them focused on the business value to with life. Yeah,

how have you been able to increase? I saw you nodding. So maybe I'll actually throw this question to you. But like, how have you been able to bridge that divide between the business and the data scientist or improved communication? Is that like a requirements doc? Is that using things like H2O Wave to help, you know, show and demonstrate and prototype? Or, you know, how are you guys start tried to, you know, if that's one of the biggest problems to scaling? Have you guys tried to find solutions to that that work?

Yeah. So I had a list of mistakes that we've made that was almost endless. So I'm glad you've, you've redirected me know the, you know, we've spent a bunch of time upfront. And a couple in a couple matters. And it's outside of H2O for a lot of it, but some of it isn't H2O. First and foremost, I've aligned my team into domains, right, so that my data scientists kind of rework in the same area over and over so they learn the content from our domain owners. So whether it's natural gas, shipping, etc. I think that is a key thing. Secondly, and directly to your point, we've actually built out a Data Science portal, and a data catalog all integrated into one tool set. So end users can investigate all the data products that are available, they can see and put in data science projects, and the point that Shawn made about scope is a huge one. So actually in our data science project, Um, we've we've created we use a local tool called Zuhdi, to build basically a whole platform for this. So we have straight through processing on everything from, you know, a data subscription request all the way through to a data science project. And the methodology is all instantiated in this platform. And we have, we basically forced the identification upfront of what is the target, and what is the business goal, and they have to be filled in by the commercial users. So we put in place processes and controls to help with that. And then I think when I think about H2O, some of the things that have been super helpful from my point of view, you know, along this process is one is explainability. Right? So we record all Shapley scores on every run. Right. So, you know, that's just one example of where we use the tool set to help communicate back to the commercial users, they can see the features that were impactful they can understand. And they can help kind of QA. And it's just, you know, it's been useful. There was one question that came up here on the side, I just wanted to hit on somebody said, can auto ml apps make data scientists lose skills. And I would actually say, at least from my experience, it's the exact opposite. What's happened and I've seen is that my team, instead of doing a single, simple, take Single, single spending, you know, weeks creating a single table forecast, you know, that basically shows seasonality, and growth. Instead, what we're able to do is push that to H2O, get that out of the way quickly. And then focus on combining multiple datasets, disparate datasets, really complex problems, having them really focus on the hard problems. And I just want to say this, and maybe this is going to break the marketing speech that you guys are doing. So apologies in advance to H2O, because they probably shouldn't have invited me, I guess. But this is not a dataset, this H2O is not a platform where you can just hand over to an end commercial user and say, Hey, go do machine learning. And you'll get perfect results. It is a sophisticated toolset. It's got a lot of features and bells and whistles. And that's actually what's helped with the adoption of my data scientist because they, they can see how it helps them solve problems by by tweaking the features and the you know, the settings etc. So it's a it's a very sophisticated accelerator. That's really good at it good results. It's not, it is not a Data Prep engine, like data IKU. It is not a, you know, a framework, like sage maker is a true auto ml and ml ops engine, which is exactly what we needed.

That's amazing, actually. Oh, that was a really interesting question from the audience. I thought that'd be we'll just ask it live to this group. The question was basically, do we think that the goal for AI and most organizations is to fully automate the decision making or augment human decision making? Think security against that line of thought you brought up especially in the channel and I would love to hear your thoughts on that.

I'll be happy to jump in at least start off. And I echo completely your sentiment there, Chris, that I think it matures, the data scientist even more. And it really embeds more passion in what they do by using a sophisticated accelerator like H2O. You know, what is the goal for AI ml? In most organizations? First, it depends on the organization, it depends on the maturity of the organization. I truly believe that it all right, my background, I got a PhD in psychology. So you're gonna get some psychology thrown at you here. As we take a look at people and how people react in the the evolution of work itself, we've always been on the way of accelerating things that we do to get to value and removing things that that add non value. And so is, is I look at AI ml, there are things that are going to automate jobs, right. And that's what we call middle work. But you've got low end work that's just very hands on stuff that you cannot automate away. I mean, we're still gonna have construction. But can we put in their understanding and automate, use AML to make our construction projects go faster? Or better or be more efficient? Yes, we can. Can we identify pre identify equipment failures on a construction project so that it maintains its time? Yeah. So there's things that we can do on that side, has that replaced a human or has that actually enabled us to be more efficient at our jobs? Okay. And then, I've got on my side, we've got wind turbines, right? We design models that predict failures for components on wind turbines and a technician wakes up in the morning and they're like, What should I go look at? I can never replace that technician, but I can focus him in the right direction. Saying you get these five wind turbines here are the probable faults that that are occurring. Or here's a potential gearbox, which for us is an expensive replacement, go and do something. So I don't have to bring in a crane, that's $150,000 At the start to replace that. So it's, it's more human augmentation. But I hesitate with that word, it's more human in the loop, it's making us be better at what we do. And you know, which, with all of this information, it's helping us cogitate through things faster, it's helping us identify things, I see it's going to be a fun evolution over the next 2030 years, and AI is going to be that mainstay that continues to help us be happy, do our jobs more effectively, efficiently. It'll be just be a part of our life, like Excel is today, which we can get rid of, I don't mind.

I agree with everything, except for getting rid of Excel. I love Excel, I have to admit it. I know, I know, it's caused so many problems. But it's so great, anyway. No, and the other thing I would just point out that it's helpful for us on is, you know, we're a smaller organization, you know, about 1000 people. And, you know, historically AI has required a huge infrastructure build, to really do at scale and prints, I'm sure you guys probably have quite quite some some servers and compute capacity. One thing that, you know, that we're really focused on is utilization, we use snowflake as our back end. And we are very, very focused on the product innovations that Eric and his team is leading with the usage of snowpark, from snowflake. And it to me, that is the future of machine learning is in database, hyper scalable infrastructure, compute infrastructure, from from a toolset like snowflake, managed, you know, by H2O. So, you know, to me, this is it's all the things that John said about kind of like, making people's job higher level and doing more insightful analysis that allows us to gather, you know, trillions of rows of shipping points, or weather points, or whatever, whatever the case is, and really processed them at scale. And that used to take 10s of millions of dollars of infrastructure build, and now we're starting to see a world where we can do that kind of in a in a very much more attainable, achievable fashion, without the massive infrastructure build. And that's scary to some people, right? Many people have, you know, some maybe on the infrastructure team, you know, built their life on maintaining an Oracle database or servers and things like that. So you, you will face resistance with some of these visions, not just on the data scientists, but also potentially on your database or infrastructure teams. And, you know, hopefully they get that vision of they can elevate their skills, but I don't think people always can. A prince, you guys have massive, massive servers, I assume? Oh, yours, you're on mute.

Yes, Chris. I mean, sorry, I was new to whatever you said, because it's easy, you know, the moving to the cloud, and, and bringing all the data in one place. But definitely, you know, the cost will play a big role for your computations, and how efficiently you know, you run your queries and, and manage that, you know, the workload, it's really important. I mean, that's a big lesson, you know, we all learned I mean, we burn our fingers, not only in snowflake, we use data bricks. And we use Palantir. I mean, do some strategy additions, you know, the valuation of technology, we have multiple cloud players in place. But But But overall, when I look at it, I mean, either I look at Palantir, or I look at their products, or I look at snowflake, or even Salesforce in the science standpoint, I mean, all those things we see. We don't want to duplicate the data sets in all over the cloud. I mean, we are looking for a platform. I mean, that's another thing that why it makes a lot of sense for us to partner with H2O is to have some sort of, you know, technology, which can really work along with all these pipelines. Right? Some people do this machine learning and barrel breaks, some people do it in snowflake, some people do it in typical Jupiter, some people doing and Palantir. But doesn't matter where you do. And as long as the data, the features that the machine learning assets that we create, that is, you know, we are able to democratize across this platform and keep it in one place and share it and that actually brings the, you know, the real power of a democratization. So, knowing this disturbance and knowing this technology, you know, evolution that we all going through, but something that we want to appreciate and and keep To our machine learning assets in one place. And still we need to drive, you know, we don't want, we want to avoid the duplication. We don't want to eat a swamp. But at the same time, use it efficiently. That's the big challenge. And that's what we are currently experiencing, or pretty much trying to get the job done there.

called, I like a two pronged question. Maybe and I think was lead say nicely to the next question. That really right. Yeah. Do you want to ask? Yes. So we have built this really nice AI maturity model. And I think we've worked very closely with adnd, and other customers as well. And as part of that, as you know, we help customers find out where they are on the curve. So the first question I have is, if you were to like, if I posed you to all three of you, you have to give feedback, let's say someone starting new someone in the audience has behavior, like really ahead, like, like starting out in the amateur career, like step one or step two, how do you go about this, right, so what like, let's say one piece of advice, or a couple of pieces, advice you would give someone to get started.

Maybe I'll jump in quickly on this. So, so we started this journey, exactly what you said, you know, the basic thing, what we have done is, we didn't worry about the automation, you know, we didn't worry about, you know, using sophisticated tools and technology. But we started with very simple, we were like, Okay, let's get the data. And in a platform where we can actually, you know, do the model, and show the results. So always we started with a POC, any business value that, you know, we want to just go in front of the business, and say that, hey, I can bring some value, so that we always go start with a POC, and do a POC and do the show and, and show the value the value or the cost avoidance or cost savings, you know, what are the value that's gonna bring it and then so that and that really helps the leadership are it's kind of a change in the culture, right? In our company and our people, we've been working on a different enterprise style of, you know, making things happen, pretty much rules driven, you know, pretty much, you know, logic driven approach, but you're again, getting into the predictive side of it. So really, what it helps is doing a simple POC to the show and, and make the leader to understand and and then, you know, go there from from from the POC. So that's what really helped us. And we have done many POCs, I would say in beginning of in cheated office, across various views, but I can see the results now after the fact we did all this good work. But now, we are really in the curve of you know, the maturity model to get into their well wishes.

That's great. Thanks, Prince. First of all,

I I agree with everything prince said we did some POCs actually, we we made a mistake there. We showed too much value. And so some things were done too quickly. And so people just assumed Oh, well, data science can all be done now in two days. So you know, it was kind of ironic, that we actually oversold actually, internally with the POC process. But that's a whole different discussion. You know, I think a couple thoughts. You know, one thing that, you know, I had my team layout, end to end was a methodology and process. And we instantiated that through a tool. I mentioned that earlier. So if you're getting started, you have to start with a data. But you also have to start with a methodology and governance model. And the problem with this is it sounds great. And if you meet with commercial leadership, and they say, we're going to prioritize, this all sounds great. And if you start with a data, and you get good quality data, and you're properly tagged, and organized, and good data quality, and you do all these things. And then you build a methodology at all, it's great, and it solves some of the problems around too much exploration, etc. The other challenge that it creates, though, is your business users may feel disenfranchised. So they may never make it to the very top of the priority list for the data science function for the team to work on. You know, we don't have unlimited capacity. So you know, what we've done by documenting all this, as we've a few people are very frustrated, because their projects never get to the top of the list. So you know, thinking about a hybrid engagement model, where maybe two thirds of the team is focused on strategic projects. And then 1/3 of the team is focused on tactical point in time, you know, really rapid market ascent, you know, things setting, working with commercial leadership to set aside kind of capacity. And that passion, I think, can be helpful, because as good as the tool is to accelerate the analysis, there's always more, there's always more to do. And so prioritization is important. So I think that's the lesson we learned along the way is that we, we built a platform, we had a platform approach, you know, my data scientists push all data engineering into you know, views, we use machine learning views, we don't use, you know, code to transform we did I think a lot of things right, but we also didn't necessarily be He's responsive to all of our commercial users. And that caused some frustrations, some real frustrations.

That's a great, thanks. Thanks for sharing that, Chris. Shawn, what about you? Like what, what helps you guys really get get going and then scale?

I think, you know, we, we started at least with the data, you know, if somebody ever asked me today, how do you have a new company? And how do I start my AI ml practice? I've never done it before? Is this brand new? Part of me wants to say don't design a model for 12 months. Okay, start off talking about advertising, get the foundation set up, which is how are you going to run your compute? How are you going to create your endpoints, where's your data, what's doing, you know, start bringing all of that in, start working with all the DBAs get them in from an infrastructure level to start pulling that data in, and then start creating some models. Because to Chris's point, as soon as you give them candy, they want more candy. And sometimes these guys are kids in a candy shop, saying, let's do this, let's do this, can you do that? Yeah, we can, that's gonna take five months that may take a month, you know. And then you're pausing. Sometimes, because you're stuck on the data side, I always have a hesitation with governance, the wording I like to use is minimum viable governance, back to the point that Chris was making is that you can get disenfranchised business people, which is not what you ever want to have happen when you're doing this, because then they start doing their own shadow AI ml. And that I almost fear is more destructive than the shadow IT department. So, you know, how do you how do you help keep that engagement? You know, one thing that I that we did at the very beginning was we created Design Thinking sessions. And so we went to the business, and we're going to just talk for hours about your problems, and they just talk about them. I say, Okay, that is good. That is not that works. And then we just talked about some value, and some timing. And we talked about creating expectations. So it's a lot of conversations in the beginning. And now every 1218 months, we hold a design thinking session with the group, and they all love coming to it, because we get to discuss things and talk about prior successes and where we are. And then here's all the new things on the horizon. But at the same time, it's how do you help enable some people who, who aren't getting that feedback? And one of the things that we've been doing on our maturity, I would say is, I call it enabling it, or I call them Python enabled people. All right, we're getting more people into the workforce that can leverage and use Python, but they're not data scientists, but they can do some things with Python. So how do you help enable them for some of the simple things that they may be doing, but that also helps elevate their maturity overall. And it also helps identify people who have good potential to upskill in data science inside of a company. We're not even talking about finding data scientists, that's a whole nother panel discussion in and of itself.

It's probably going we actually had a blog recently on just hiring and retaining Data Science Talent come out, because it is such a such a topical issue, you know, just sort of going back into the the POC, concept prints and Christina, you're talking about, you know, your first, your first project adding so much value, you know, this is something I've seen talking to a lot of our customers is, you know, they're really start by getting the first few Lighthouse wins that really actually show value is what's helping them scale, you know, is it do you feel like there's a number like is that like two or three? Like, how many projects do you think you need to like really have that business value in to really help the organization say, Oh, yes, we need to make a much bigger or faster investment into AI ML to really help drive the businesses? Can you do that with just one project? Or is it typically like a small handful?

I think it's started with always one project and, and, and it's like, you know, I will tell you, it's not necessarily such project needs to be a, you know, super predictive model, or a beautiful forecasting model or some optimization problem you're trying to solve it could be you know, finding a needle in a haystack problem, okay, so, so it's not necessarily, you know, how big of the problem you're trying to solve. It's all about you know, like, like Sean mentioned customers and I mean data is also plays a very important role right? So so if you can able to get like three months history of data like multiple data sets, and then join them and bring some features and then build some model on that, so do something that's small and show the value and once you get the value then people are you know, seeing the candy and then obviously, they want more candies like and then you kind of dictate the terms and say, Okay, now what not me to do that I need more data sets to coming in. Like three months it's taking to build the model and and of course, once you have more data coming in place, definitely the building the model and the lifetime is reducing it and and the two It's like h2 or what we use it right? I would, I would, you know, I always say that it's I'm a big fan of h2 abajo. Because you guys made us to run these models in production. It's not an experimentation, right, we are running these models in production. So we need to run this model in 60 milliseconds, 200 milliseconds, 500 milliseconds. So that's the sort of speed we needed. So you're not going to do that speed while you're doing this POC, but our data scientists focused on building those models, but use the H2O technology, where we can scale that POC to production in quite reasonable and I would say, sometimes it's much faster, I mean, we are able to, you know, take to the model very fast to the production now. So, so yeah, I mean, it started with the one model show the value, you get more candidates than ask them more in terms of the data and assets that you want. And with the help of tools, like it's too, you know, then we can scale it up to the bigger level.

No, related to that, Chris, you know, you talked about that one, you know, you had that use case that had this super strong value to get started, like, how did you select that use case? You know, I think that's an area where other people struggle, which is, you know, how do you with those, get your ones to get started? How do you get the right business problem to start with to know that you can then bring that up to leadership and start building the function?

That's where, you know, you have to have I think, as a head of a data science group, you have to have strong relationships back to your commercial owners. So I went through a process of talking to each of them and finding out what was kind of the the areas where there was a pain point. And where I could find an intermediate sized problem, I didn't want them something that was so simple, that everyone would look at and say, well, that's, that's no big deal. Why should we spend money on this, we can use open source packages to solve this problem. On the other hand, I didn't want a multi year, or a multi month project that would take you know, six months to assess so. So we really spent a little bit of time talking to the commercial owners, and then figuring out our model. And the other part that was, you know, good about this is, or I would talk about a little bit about is how we communicated the results. So what we actually did in this POC is we did, we took, I had one of my data scientists do the project and measure all their time, in JIRA. Then we took four different platforms. And we assessed them. And we had the same project done. And we actually did the timings through the different platforms. And then we also looked at the actual RMSE. To compare actual results and accuracy. And H2O, as I said before, came out extremely well, on the automation of the feature engineering and the model. And, you know, it was the only platform with those features that came out with an RMSE that was basically the same as my data scientists could do on on his own. So that was a pretty credible result. But then going back to take the POC back what we did, we created several different decks. For our, for our COO, we created the time savings and efficiency deck for for our, for our sophisticated commercial users who have a technical mindset. We showed Shapley scores for our commercial users who only under you know, our more Excel type and, you know, really more fundamental in their their skill sets. You know, we actually created visualizations, which showed visually how much closer the forecasts were, versus existing existing work that had previously been done. So, you know, we really prepared multiple messages off the same work product, and that was tailored to the commercial needs, but it was, there was a fair amount of time and needed to go communicate. And I you know, because I always get the pressure, well, why do we spend money on a platform, I could just go hire another data scientist for the same cost? Right? That was a real question internally. And we have all this free open source. I've read about this, why, you know, our CEO is like I've read about this open source revolution, why can't I just go do that? So you know, I really had to show the value of the platform in terms of end to end process, not just engineering or not just a but ml ops side as well. So those were all messages that I had to listen for my commercial leaders and then prepare communication to

write no thanks for that. I think that's a really common challenge that many, many companies have Getting started is that communication piece, so that's a great, that's a great insight. Um,

I think one of our mistakes is that we weren't able to find the right t shirt size project to work on. So our projects were nine to 12 months, which was a little bit longer than what was needed. I think it needs to be a little less than six months, but it's okay. I would say to show value iteratively alright, because, you know, we had one project act where, you know, the business was doing about 65%. On accuracy we came in, we moved it up to 70%. I'm like, Hey, that's a little bit more, you know, does that prove that we can show? You know, we know we can improve that? Right? We had a strategy behind that. And it slowly got the business involved. I mean, sometimes you're not going to have these big Whammies. So you've got to go for smallness. But, you know, as you as you pose your question there read, I think what's important to note, I'm going to go back to my psychology side here is that business can only change so fast. And we did something successful with one business unit. And they said, Okay, we want to do this. And I said, but you're not done actually working, fixing out or, you know, figuring out what we did on how to implement it. No, go spend three months doing what we already gave you, then let's go talk. And they were a little bit miffed at it, but three months, they came back, and I said, Okay, tell me how you what happened over your three months, they're like, well, thank you, you know, we actually spent time using it. Now we know what we want on this next iteration more so than we thought we did. It's important to keep in mind that we're humans, we can only change so fast. If I give a business five projects in a year, I'm going to overwhelm them. So you know, there's a balance to play in this. And it's nice that I can move fast. But you need to work on how to, you know, keep this holistic with the organization.

Now, it makes sense.

And only almost untampered close out the one question for everyone. What's next? What's the next innovation that you guys are working on? Maybe you can share a sneak peek of something cool that you're working on? Shawn, I think I know. You've been doing some really cool stuff with like drones, streaming the different drones to do like improved like, you know, predictive maintenance and stuff. RFPs something you're happy to share or maybe something cooler you're working on. Maybe it's same for others as well.

You want to talk about innovation, where we're going? How do we use graph DBS and integrate them more? I think that's important. There's a lot of relational things going on inside the data. How do we leverage that? Drone data's has been fun, I'm actually excited more about satellite data on what can be done. Satellite data can actually reduce drone data costs. There's a variety of things out there that that are the technology is being becoming more available, where the resolution is there, that you can get it and you know, honestly, for 20 grand, I can start tasking some satellites to do stuff. That's not a big number for our organizations in testing and trying new things. So I'll leave it at that.

Thank you, cool. Crispin's? Yeah.

I'll jump in. So yeah, for us the innovation are part of our a maturity model, right? I mean, we are in a phase where how we can democratize AI, across our enterprise, right? I mean, internally, we run a program called as a service. So definitely, you know, that's why we figure it out, in order to scale, such a big level. And when I say the democratize AI, it's not just our data scientists and data engineers, just using from the platform standpoint, but it's more like how citizen data scientists in our business, people can really use the AI part of their day to day operations, right. So that's the journey that we are, you know, going through, and definitely the innovation there for development, you know, with your team on H2O Flixter. That's really a big one for both of us. And we are putting a lot of our efforts to innovate that product, and really, you know, help us to take to the journey that, you know, what we are going on that that's from our fine.

I think from our side, you know, unstructured data is very, very interesting. I've wasted a lot of my time of my life on NLP projects in the past. So, you know, I'm hoping that with some of the new tool sets out of H2O, maybe we can start to explore that truly as a value add versus a time suck. So that's one area I think that we're going to explore. Secondly, as I mentioned, we're we're obviously investigating middle and back office use cases where I think there's some data anomaly detection, I think we don't have a retail focus that maybe like prints might have. So we, we don't have fraud, but we have, we have other potential uses. I think there maybe in terms of, you know, glitches from our brokers or whatever. And then really, third, I would say the biggest and most important focus for us in terms of innovation is and I mentioned earlier, is creating the most scalable platform possible, right? Because, as I said, we're a somewhat smaller organization. I mean, I'm sure AT and T has like 1000 People just in one call center. So you know, we are you know, much smaller and so We need to take the tool sets that we use, which is snowflake in H2O, we need to use them together and use them to attack larger and larger data problems is like Shawn, we're in the internet of things, right? The real world, that world world of commodities is all about the real world. And so as you can imagine, there's almost endless data in the real world, that that can be pulled in. So these abilities to take on larger and larger datasets, without kind of a huge cost infrastructure built is a core focus of ours. And really, frankly, when we compared it not to sell H2O, but when we compared H2O to some of the other different products out there, you know, we saw that tightness of partnership and utilization, maximum utilization of, of, of, of the new upcoming feature sets within snowflake. So that was a big decision point for us last year.

Thank you, I think we are at the top of the hour, I really want to take the time to opportunity to thank Chris, Shawn and Prince for joining us for this amazing panel. It was a great discussion, I think. Yeah. And some great questions as well. I think the audience is super engaged, a lot of questions will out, definitely try to answer those questions offline, or maybe even after the panel. But once again, thank you for joining us chatting your insights, just fun, as always. And I hope you'll stick around for the rest of the hour, where we are going to talk about some of the product innovations, and share some of new innovations that are coming up as well. Okay. Thank you all, then, Prince. If you want to stay on for a bit, we can talk about feature store real quick. That's one of the first things in the proclamation.

Thank you, Shawn. Thank you, Chris. All right, we go ahead and kick it off. All right, so we got a fun agenda coming up a bunch of new products, like our existing products, and new innovations on those products with solid features are obviously good. Hydrogen targe, document ai, h2. And Cloud, of course, is to wave Remo labs, responsible AI, and then we'll get page two or three. So we got new new innovations, we got PMS waiting, ready to share some of the new innovations and all those different products. To kick it off, just we thought by telling you what we've done in the last sort of year, year and a half. Right? So we've been in this journey for you know, 10 years now. But last year, and have you been announced phenomenal, like set of new innovations, right, so we launched HDX. Cloud, actually, early last year. So it's basically you don't have for the platform, right. So this is our platform for the overdrive, the CH two three, sparkling water and steam all these products. Customers are loved and use for many years, but we brought them all together into a icon. And then we had basically 100 Plus apps in the App Store as well, very quickly. We followed it up with feature store, which was which went live in q3 of last year, joint announcement of the TNT Princess team. We also launched Travelocity at one time, which is a big release phenomenal ton of improvements and of new innovations there.

late in the year, we launched our managed cloud, which is a big announcement as well, this is first time we have a disclaimer, software as a service solution, the entire AI cloud fully managed by us. So it's a turnkey solution for customers to come in start using it. We also launched a whole bunch of health apps for our health app store. In June of this year, we announced document AI, which is also co innovation with one of our other customers, UCSF that you probably saw a webinar earlier in the year. We also launched we launched hydrogen torch and q1 of this year, which is no code deep learning platform. We have a lot of innovations coming updates coming on that as well. And then we upgraded our ML ops. So this is all in the field. If you attended our last product day, which was in April, you've probably caught a lot of these things. So what's coming up, right, we have a phenom set of things that we're going to talk about today. But more importantly, we are also introducing things like labeling we are feature store going more generally available for customers stock media becoming generally available, managed cloud with more tiers, auto ml as a service. We can also have more tooling for business users to make it really easy for them to consume the platform. We have a lot of App Tool building toolkits, which will showcase a little bit later like how making it really easy to build more apps and have them available on the platform. Okay, so stay tuned for the entire rest of the hour. I will cover a lot of these things. I want to start off with feature store first. This is a product that we launched late last year in partnership with at&t especially prints and team we've been working on this SWAT actually more than a year and a half, right like those two years of working closely with the team, the gun, a lot of phenomenal requirements, like very core requirements were like we understood what data scientists were doing at scale, what what challenges India was facing when they were launching all these models, with all these datasets that were being taught that upon us models and prints mentioned earlier, right, like, the key was to ensure that the same data that is used for training is made available for inferencing at a really low latency. So with that challenge in mind, Mr. ratable, this is just your, as you can see, one of the key tenants for us along was to ensure that data can come from anywhere. You know, we earlier Chris mentioned how snowflake is a key data store for them, they need the snowflake, data breaks, and all the other tools exist as well. We also saw that customers have data and let's say, environment, Secretary data and Palantir replaces it with a whole bunch of pipelines. So the key was to bring in data from wherever you are, whether it's real time data sources, or batch data sources, we have connectors for all of those different data sources. So you can bring in data to the feature store from wherever they are, whether you're featuring pipelines exist or your data transformation pipelines exist, you can just use the same pipelines to bring in data to the feature store. And once it's in the feature store, we have the offline and online store to support for batch predictions of our training, and then online for the real time predictions. And more importantly, we have a full metadata registry, which allows us to keep all the historical information about the features like information about audits like lineage, whereas the data created, and this is critical for discovery purposes. So one of the big values of feature store is you don't just create a feature pipeline rolls, you reuse it again and again. So someone has created an amazing feature and put it into production. How nice would it be if others can benefit from that without having to recreate the wheel? So the metadata becomes critical to help expose help, discovery and collaboration other features? You want to add a few comments and how it's been working out? And what's the journey been at end?

Sure, sure. I mean, I mean, we're not, you know, you nailed it. I mean, you kind of mentioned, all the key functionalities are some of the challenges that we had, and in the past, and how really the feature store is solving those problems. It's awesome, right, so so like, you know, we're not mentioned, we launched this in a TNT internally, we have more than 1000 data scientists and data engineers on this platform, we have more than 10,000 features, it's been in our feature store, across different BU, they have features coming from different the BU like global supply chain fraud and, and customer insights. And and, I mean, it's happening, you know, really a big time right now. So the whole purpose is how we can reuse this features, and how we can, you know, minimize that, you know, the offline online challenge, and how fast we can go, right? I mean, new problem comes in, you know, let me go and, and research what features that I have in features to quickly go on to look at it, I see a couple of feature sets have been posted by someone else. But really, it's useful. Let me combine that and see, oh, yeah, I got a good look to my existing model, or I'm able to put a new model quickly, is there is a already, you know, two three great success stories with at&t Using this feature store. So it's nice collaboration. And I love the daily standup that our team is doing with beneath an SDR team there in order to do this in a code development on daily basis. So it's a really a great journey. And we see a lot of return of investment already for this feature store and looking forward to you know, enrich more functionalities and, and really doing that magic that what we are looking for with the feature store product or as a service.

Thank you for itself slowly. So with that, let me just jump ahead and show a very quick demo of how the feature store actually works and how you can use it. Alright, so if you've come to the feature store, this is the front end for the feature store. This is the user interface where users can come in. And so nice way to look at all the projects you have in place, you can also look at all the feature sets. And there is also a full access control mechanism. We have a full permissioning workflow. So when you create a feature set or project you can, it's it has different tiers and people can try and see who can access access to it right. And I said once the project is created, you can go into the project you can see the individual feature sets that are inside of the project. You can pick one of them and you can open it and you get a whole bunch of details about the feature set right it tells you when it was created, who it was created by the pipeline itself processing interval other information like Time To Live and etc. So, these are all things that can be tracked. But important thing is you can also call track or disk carboard features to be whether they are they are PCI data or or SPI data or special data, right? This is important because if you are want your data to be sensitive, sensitive, you want to track it so that others cannot see the raw values, even if they can see the actual feature set itself. And that's something that's supported out of the box with the feature store. And once you see the list of features, you can obviously go and peek into them, you can get some summary statistics very quickly, like mean, median, etc accounts. And this is useful again, to see the data like get a sense of what the data has shaped the data looks like. We are adding some more innovations over here coming soon, we can actually generate an auto Insights report for you and have it available. So that's nice, because that entire report can be used for us again, to get a sense of what the data looks like. Right? There is the ability to add quotes, artifacts. So this is nice because you can have a report or maybe sometimes you can have like a PRD attached to the project itself, the selected dates, and it's really great set of features, they can put in additional information that will help others determine if that project is useful for them. So you can add links, PDFs, etc. You can also get a code snippet, this is nice because you can essentially create take the PI, the PI spark or Python coordinate to go connect to the client, and then use the feature set from the client, which is how we will most commonly use it right. So if you go to, let's say, your spark environment or your Python environment, you can just pick up the score to start using it. And that's what we'll do today, right will very quickly show you how you will interact with the feature store, right. So if I go to my Jupiter environment you come in, you will see basically, this is a notebook I have I've already installed the feature store client. And I've already logged into the feature store. So it's very easy, you use your autocon and login. If you use Jupiter, it's it's super simple to log in, it'll this will pop up essentially a new window that will help us whether you're, you're using Azure RT or a database or whatever your auth mechanism, you go and login and authenticate yourself. Once that is done, you can then come in as a user, and you can do a few things, you can obviously read datasets, you can extract the schema, you can use that to create a new feature set, register features or schema as a feature set and then ingest data into the feature store. So this is your second canonical workflow. If you're a data scientist, you build a great model, and you have a great set of features, you want to then supply like put that publish the feature set into the feature store. And this is how you do it, you basically do it right. So you would ingest your data, you read the source file, you would run it, basically bring up your credentials, and then you would extract the schema, this is the key part. Once you extract the schema, you can also then this is the opportunity for you to specify whether certain fields are sensitive. So in this case, I'm saying that, hey, gender is a sensitive field and marking it as SPCA field right? That means that it's going to be masked by default. It's easy for you to then change it later. But this is how we start off right. Once that's done, you then go create a project in this case, I created a project called Willow demo. And in that project, I can then register the feature set. As I do that, I have the ultimate opportunity register ingest data into the feature service set. And by default, the data will be ingested into the offline store. But just as easy it is to register the data into the online store as well. So this is how you determine whether you want the data to be online or offline. And this is a call you may make based on your application requirements and stuff. And once the data is ingested into a load of an offline, super easy to retrieve it. So you can as a user can come in and start retrieving it. So in this case, I'm just going to try and retrieve the data from the we're going to do the offline in a minute. But I'm going to do the online example. So sorry.

Of course of the live demo for some reason, but this is the command to retrieve it to retrieve and then quickly show you basically the payload, we can see the actual payload that was retrieved. And this retrieval can happen in like milliseconds, right. So because we are using a really fast online store, you can get the data out in milliseconds. And then you can have some millisecond latency if you need to, depending on the requirement of the model. Well, once the data is in here, I can now do something interesting, I can go to something to like drive a car, for that matter, you can use park or some other tool. And you can just go directly and connect to the feature store, you go and select the project you are looking for. In this case, I'm going to use the minute demo project. And within that project, you can go look at the feature sets that are available, right. So what are features that you want, pick it and start using it. In this case, I already ingested the data. So let me just go and show you. So the data will show up over here. Once you click ingest. That means that the data has been retrieved and put into the into the Travis CI instance. And now I can operate just like any other dataset. I can then do details on it. I can look at the rows, I can build models off of it, and so on. And once I build the model and deploy it to production, I'm ready deployed to production. I I can use the same online store to score it right. So my ml ops instance can basically call features an online store, score the results with our model and then deploy, right. So all this can be done very seamlessly. One other nice thing you could do is, let's say you build a model in driverless AI. And as you are familiar, diversidad, not just builds your great model, it also does feature engineering for you, it can create some really nice features for us, in this case, creating like an interesting feature, cross validate, target encoding, or maybe it's something else that you find useful, you can use the module to publish the features back into the feature store. So if you saw some really nice features created by driverless AI, you can put them back into the feature store. And basically that becomes a new pipeline, you create a new pipeline that will publish features into the feature store. Okay, so that was a very quick overview of what the feature store does. This is again, available in our React clouds available, it's going to be available very soon in our managed products, worlds, if your customer wants to try it out, let us know we'd love to do a POC and like show you how it can help you in your AI journey. Any last thoughts prints on this? Project on mute.

Sorry, one point you mentioned about you showing the demo of the Jupyter Notebook. It can work the same CLI functions across any, any any ml pipeline, right. I mean, whether you're in snowflake, whether you're in data, bricks, or in a Palantir of sales for like, it could be any pipelines. The CLI is makes life easier from feature store. And we use the same piece of code all the time. And it really works. So you can for example, you can create these features, you know, being in Jupiter pipeline, let's say but your scoring pipeline is completely different, let's say in snowflake, but still, you can use the same features using the online feature store or even offline to just store the time of the scoring. So that's why it's kind of a work across all these tools and technology. But keeping all this in one place. And another point that I would also mention is that data privacy and compliance, because this feature is you know, democratizing these features across different views in your enterprise. But at the same time, it is also well handled in terms of the data encryption and security about these features. And, and, and also the PCI RPI and SPI information. And, and there is a little bit of an internal process that you want to do it right. I mean, a company like a TNT forest, it's very important that there are privacy and compliance and even the ethics of this AI. So you know, that's also been baked inside this feature store. That's why it's makes life easier, just a one place and and really helping us bring the data scientist or data analyst, and our business people and the lives on legal everybody in one place. But it's centralized, and it can be reused. So so that's the, that's the power of our future stories here. And that's how it's helping at the end to democratize the, across the enterprise.

And then prince, I think a lot of you can share this, but just talk about scale, right, like think add on, if you're able to share how big of the how many feature sets, you mentioned, a lot of features with like, just from the size of data, how much you're loading?

Yeah, I mean, it's a different in our teams, they are bringing the data in different sizes, right? I mean, it's sometimes I would say, if I want to just maybe, quote not necessarily internal at&t data, but we also get data from external. And we could able to load, you know, two terabytes of data, like in the form of the features, like there are like two or 200 features and two terabytes of data, we could be able to load it in 30 minutes of time into the feature store. Right? That is a one thing I would say in offline data ingestion interfaces to standpoint. Number one during the online scoring, we want, you know, the feature of lookups at runtime, during the scoring time in less than 50 milliseconds. So that's also happening, right? So it's happening both sides, I mean, whether you're going with the big volume, or how fast you want to retrieve the feature. So. So that's the that's the good numbers, I would say, considering an a big ml operations,