In conversation with Kun-Hao Yeh: A Data Scientist and Kaggle Grandmaster

In this series of interviews, I present the stories of established Data Scientists and Kaggle Grandmasters at H2O.ai, who share their journeys, inspirations, and accomplishments. These interviews are intended to motivate and encourage others who want to understand what it takes to be a Kaggle Grandmaster.

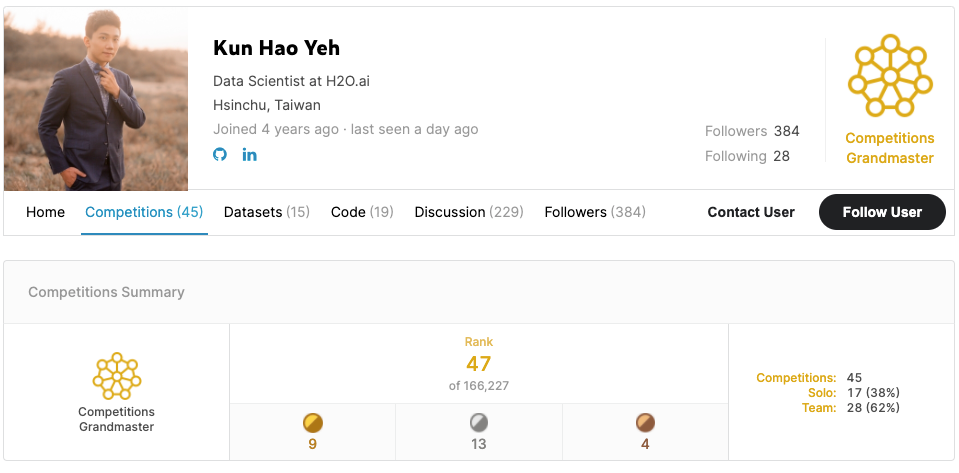

I recently got the chance to interview Kun-Hao Yeh, a Kaggle Competitions Grandmaster and a Data Scientist at H2O.ai. Kun-Hao holds a Master’s degree in Computer Science from National Chiao-Tung University in Taiwan. His focus was on multi-armed bandit problems and reinforcement learning applied to computer games, including but not restricted to 2048 AI and computer Go. He started his professional career as a software engineer, working on the latest 5G chipset product competing for the upcoming 5G market.

In this interview, we will learn more about his academic background, his passion for Kaggle, and his work as a Data Scientist. Here is an excerpt from my conversation with Kun-Hao:

Could you tell us about your background and transition from Electrical Engineering to Computer Science and eventually to data science?

Kun-Hao: While pursuing my Bachelor’s degree, I majored in Electrical Engineering and Computer Science (EECS). Even though I was getting good grades in Electrical Engineering (EE) and was not so good at coding, Computer Science (CS) appeared more interesting. So I spent more time on CS and went on to pursue a Master’s degree in Computer Science.

In graduate school, I was advised by a professor, I-Chen Wu, who mainly worked with RL (Reinforcement Learning) algorithms applied to computer games like Chess, Go, and 2048. I chose my advisor because of my keen interest in Go and AI. In fact, I even dreamed of becoming a professional Go player but was kept away from that career path by my parents.

After graduation, I started my first job at MediaTek as a software engineer for 4G and 5G modem chips. Even though I worked in the semiconductor industry, my interest in machine learning never faded away. Knowing that Reinforcement Learning would require some time to be fully applicable in the industry, I started focusing on supervised learning instead. To get my fundamentals right, I enrolled in courses on Coursera, including Andrew Ng’s Deep Learning Specialization and Learn from Top Kagglers by Marios (a fellow Kaggle Grandmaster at H2O.ai). Gradually, I started participating in a lot of Kaggle competitions. The idea was to learn from others while also applying my knowledge to solve real-world problems.

What initially attracted you to Kaggle, and when did the first win come your way?

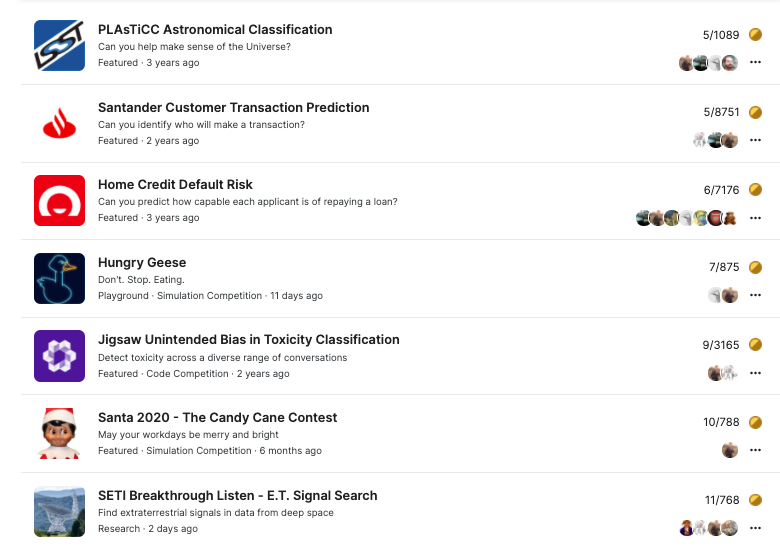

Kun-Hao: I joined Kaggle because I wanted to prove myself by solving problems on a well-known Data Science platform. My first gold medal was in the Home Credit Default Risk competition, a competition to predict clients’ repayment abilities. My team was a massive group of people working together on very different aspects to improve our scores.

You have been consistently doing great in Kaggle competitions. What keeps you motivated to compete again and again?

Kun-Hao: After a few wins on Kaggle, I got addicted to the feeling of doing well at something that I enjoy. I appreciate that there is a platform where I can connect with brilliant minds worldwide, prove myself, and learn something new in each competition that helps solve real problems better!

You became a Grandmaster by getting the 10th position in the Santa 2020 - The Candy Cane Contest. Did having a background in RL give you an edge in this contest?

Kun-Hao: As I mentioned before, having some RL experience during my time at graduate school definitely gives me some advantages in such competitions.

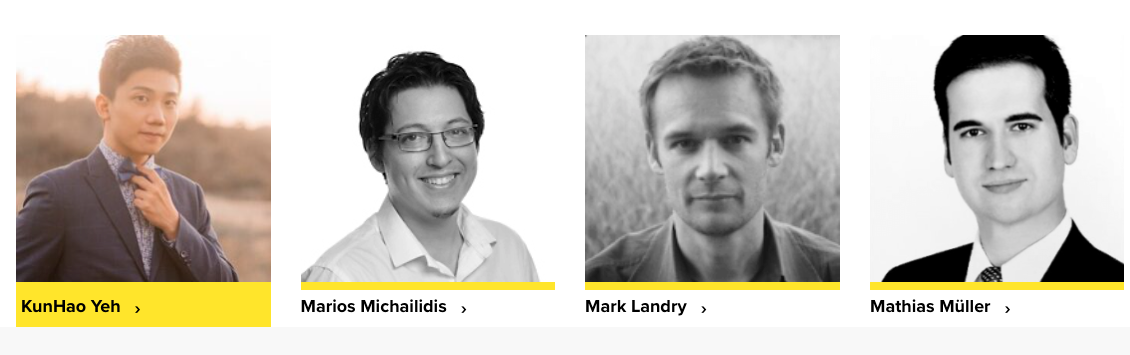

You have teamed up with Marios Michailidis, aka KazAnova, who was already a Kaggle Grandmaster (KGM) then. What is your advice on teaming up with more experienced Kagglers?

Kun-Hao: It’s a good idea to first prove yourself in a competition and try out all possible solutions. Only then is it advisable to team up with more experienced Kagglers. Teaming up after you have a significant understanding of the competition also makes sense because you’ll then be able to ask the experienced Kagglers about their past experiences in similar competitions, their advice, and how they would approach such problems.

You were invited as a speaker at Kaggle Days China 2019. Could you share some of your experiences from there?

Kun-Hao: I was invited as a guest speaker at Kaggle Days China in 2019. This happened before I joined H2O.ai. At that time, I was a Kaggle Master and was working as a software engineer at MediaTek. During the event, I met Marios Michailidis and Mikhail Trofimov, who happened to be mentors of a Coursera course that I had taken. It was such an exciting moment to be in the audience and listen to their talk. I also met and shared meals at the same table with Gabor Fodor, Dmitry Larko, and Yauhen Babakhin, who later became my colleagues at H2O.ai.

As a Data Scientist at H2O.ai, what are your roles, and in which specific areas do you work?

Kun-Hao: I work as a competitive data scientist at H2O.ai. I’m still learning how to play this role well. Being competitive means:

- Continually improving my ML knowledge and problem-solving capabilities, which I do by participating in, and getting good results on, Kaggle or other data science competition platforms.

- Applying the learnings from competitions to improve H2O.ai’s product competitiveness.

- Quickly learning new things and helping out anywhere needed, which means I can work as a software engineer or as a customer data scientist as the need arises.

For now, my main focus is on Kaggle competitions, working on H2O Wave apps, and helping out in pre-sales in both the APAC and China regions.

What are some of the best things you have learned via Kaggle that you apply in your professional work at H2O.ai?

Kun-Hao: Some of the skills that I consider essential and learnt mainly from Kaggle are:

- state-of-the-art machine learning knowledge,

- how to apply it in solving real problems, and

- how to validate the model to generalize on unseen test cases.

These skills helped me switch my career from a software engineer to a data scientist and play an important role in my job. However, the best things I’ve learnt from Kaggle are understanding the problems quickly, searching for existing solutions, and implementing a better solution myself, which is a methodology that I repeat in almost every competition I participate in.

For instance, before joining the Toxic Comment Classification competition held by Jigsaw, I knew nothing about BERT, how to use it, or the idea behind it. I learned from public kernels, improved my models, and extended my knowledge to get a good final position (9th place) on the leaderboard.

At work, I’ve worked on an optimization Wave app with my colleague, Shivam. Before working on this app, I had little knowledge of pure numerical optimization. However, our customers were requesting this feature, so we built the optimization app to extend the completeness of our cloud product. I researched different existing frameworks, their limitations, and how effectively they solved the problems, and then created an integrated version of an optimization feature in a Wave app. I didn’t have a deep understanding of the topic before, but when the need arose, I applied the methodology I learned from Kaggle, which is not limited to machine learning problems.

Any favorite ML resources (MOOCs, blogs, etc.) you would like to share with the community?

Kun-Hao: For fundamentals, I would suggest taking online courses on Coursera. Once the fundamentals are in place, I recommend diving into competitions and searching for related papers and blog posts. Every paper, blog, or idea that works in a practical scenario (like a competition) is a good resource!

A word of advice for Data Science aspirants who have just started or wish to start their Data Science journey?

Kun-Hao: I would like to share the advice I gave at Kaggle Days China:

- Start Small: Define your small success

- Aim High: Define your own great goals

- Keep Fighting: Work hard, enjoy any small success, and unconsciously get addicted to the process.

Takeaways

Kun-Hao’s Kaggle journey has been phenomenal, and this interview is a great way to understand the hard work behind it. His mantra of learning something from every competition is inspiring, and his recent successes on the platform clearly show his mastery of his craft.