Adversarial analysis seeks to explain a machine learning model by understanding locally what changes need to be made to the input to change a model’s outcome. Depending on the context, adversarial results could be used as attacks, in which a change is made to trick a model into reaching a different outcome. Or they could be used as an explanatory tool to understand the easiest way to change a predicted outcome. For instance, a denied loan applicant may be interested in recommended changes they could make to obtain loan approval, perhaps reducing debt or increasing income.

An essential aspect of adversarial examples is that they should be similar to the original instance. The goal is not just to change the model outcome but to find the smallest and easiest perturbation to change the outcome. For tabular data, it is frequently possible to find a reasonable definition for the degree of similarity between instances. However, for text data, the concept of similarity is trickier to define. Some NLP adversarial attack methods rely on changing one or more words to a synonym and then using an embedding to determine the distance between examples. While this will likely produce a similar example, the changes may not preserve meaning or grammatical correctness. One such algorithm is the TextFooler algorithm 1 , which seeks to find a text adversary by changing the most critical words in the text to a synonym, searching for an example that changes the model’s outcome while keeping the distance between embeddings small. In this post, we will explore the performance of that algorithm as well as LLM-based techniques for finding adversaries.

In order to explore vulnerabilities in downstream applications involving LLMs, we’ll consider a sentiment analysis dataset that assigns a positive or negative sentiment to 50,000 IMDB reviews 2 . Without fine-tuning a zero-shot chatgpt-ada model correctly predicted the sentiment of the reviews 63.7% of the time on the 1,000 item test set. With multi-shot prompting in which 4 examples were presented in the prompt, accuracy increased to 77.9%. After the chatgpt-ada model was fine-tuned on 47,592 reviews with a 1000 review validation set, the model accuracy was 96.2% on the 1,000 item test set.

For this experiment, we used a modified version of the TextFooler algorithm via the TextAttack pipeline 3 to attempt to find an adversarial example for each of the 1000 items in the test set. It found an adversary 48 times for the fine-tuned model, 335 times for the multi-shot model, and 198 times for the zero-shot model. We note that the fine-tuned model was both the most accurate and most resistant to adversarial attack. The models that were not fine-tuned were less accurate and more susceptible to attack. The added examples in the multi-shot prompt increased the accuracy of the multi-shot model compared with the zero-shot model, but also increased the model’s susceptibility to adversarial attack, an effect that could be due to the increased complexity of the prompt.

| Model Training | Accuracy | Number of Adversarials |

|---|---|---|

| Zero-Shot | 63.7% | 198 |

| Multi-Shot | 77.9% | 335 |

| Fine-Tuned | 96.2% | 48 |

Experiment Summary

Example 1

Unperturbed text (correctly classified as positive by the fine-tuned model)

Enjoyable in spite of Leslie Howard’s performance. Mr. Howard plays Philip as a flat, uninteresting character. One is supposed to feel sorry for this man; however, I find myself cheering Bette Davis’ Mildred. Ms. Davis gives one her finest performances (she received an Academy Award nomination). Thanks to her performance she brings this rather dull movie to life. **Be sure not to miss when Mildred tells Philip exactly how she feels about him.

Perturbed text (incorrectly classified as negative by the fine-tuned model)

Pleasurable in spite of Leslie Howard’s performance. Mr. Howard plays Philip as a flat, uninteresting character. One is supposed to feel sorry for this man; however, I find myself cheering Bette Davis’ Mildred. Ms. Davis gives one her finest performances (she received an Academy Award nomination). Thanks to her performance she brings this rather dull movie to life. **Be sure not to miss when Mildred tells Philip exactly how she feels about him

Example 2

Unperturbed text (correctly classified as negative by the fine-tuned model)

“ When I refer to Malice as a film noir I am not likening it to such masterpieces as Sunset Boulevard, Double Indemnity or The Maltese Falcon, nor am I comparing director Becker to Alfred Hitchcock, Stanley Kubrick, Stanley Kramer or Luis Bunuel. I am merely registering a protest against the darkness that pervades this movie from start to finish, to the extent that most of the time you simply cannot make out what is going on. I can understand darkness in night scenes but this movie was dark even in broad daylight, for what reason I am at loss to understand. As it is, however, it wouldn’t have made much difference if director Becker had filmed it in total darkness.”

Perturbed text (incorrectly classified as positive by the fine-tuned model)

When I refer to Malice as a film noir I am not likening it to such masterpieces as Sunset Boulevard, Double Indemnity or The Maltese Falcon, nor am I comparing director Becker to Alfred Hitchcock, Stanley Kubrick, Stanley Kramer or Luis Bunuel. I am merely registers a protesting against the darkness that pervades this movie from launch to finish, to the extent that most of the time you simply cannot make out what is going on. I can understand darkness in night scenes but this movie was dark even in broad daylight, for what reason I am at loss to understand. As it is, however, it wouldn’t have made much divergence if director Becker had filmed it in total darkness.

The first example produces a grammatically correct perturbed instance that changes the model’s prediction from positive to negative. In the second example, the adversarial method changes four words, changing the model prediction from negative to positive. However, the perturbations create a couple of grammatically incorrect sentences, which could make the attack easier to identify.

LLMs as a defense strategy:

Because LLMs are good at generating fluent text, one logical use case for them would be detecting awkward perturbations created by adversaries such as TextFooler. An open AI davinci-003 model was used to rephrase each of the 48 adversarial examples thrice. For 75% of the 48 successful adversarial attacks, the majority of the rephrasing were assigned the same label as the original instance, reverting the adversarial attack. When the same defense strategy was applied to the original unaltered reviews, the unaltered reviews were falsely flagged as adversarial in 3.8% of cases. The relatively high success rate for detecting adversarial observations suggests that LLMs could be useful in defending against simple adversarial attacks. The below shows an example of an adversary that was defended against by a rephrasing to correct some awkwardness in the adversary and return the prediction to its original value.

Original example labeled correctly as positive by the fine-tuned model:

If you delete the first twenty minutes or so of this film, you will be left with a fantastic comedy. As it is, I still found it to be a pretty good movie, which is no small feat considering the coma I was put in by the opening scenes. To put it mildly, this film has a dreary beginning that wasn’t even remotely funny, or even upbeat . Once things get sillier, however, you are left with a comedy that still holds up well after more than three decades. Definitely worth checking out, especially if you’re a younger fan of Lemmon and Matthau who wants to see their earlier work.

Perturbed example labeled incorrectly as negative by the fine-tuned model:

If you delete the first twenty minutes or so of this film, you will be left with a gorgeous comedy. As it is, I still found it to be a pretty good movie, which is no small feat considering the coma I was put in by the opening scenes. To put it mildly, this filmmaking ha a dreary commencement that wasn’t even remotely funny, or even optimistic . Once things get sillier, however, you are left with a comedy that still holds up well after more than three decades. Definitely worth verify out, notably if you’re a younger fan of Lemmon and Matthau who wanna to see their earlier work.

Rephrased perturbed example labeled correctly as positive by the fine-tuned model:

If you skip the first twenty minutes of this movie, you will be left with an enjoyable comedy. The beginning of the film was not funny or uplifting, and almost put me to sleep. However, once it gets more lighthearted, it is a great movie that still stands up after more than three decades. It is definitely worth watching, especially if you are a fan of Lemmon and Matthau and want to see their earlier work.

From the perspective of an attacker trying to avoid detection, it makes sense to try using LLM rephrasing to create adversarial examples in the first place. For each of the 969 test set items, davinci-003 was used to rephrase the original review three separate times. In 6.8 percent or 66/969 cases, at least one rephrased review resulted in a change of sentiment, a significant improvement over the original word replacement algorithm. An example of a rephrased review resulting in a change in sentiment is given below.

Original example correctly labeled as negative by the fine-tuned model:

I thought “Intensive Care” was quite bad and very unintentionally funny. But at least not as bad as I thought it might be. Sometimes it\’s somewhat suspenseful, but never a good shocker.

*SPOILER AHEAD*

The fun lies in ridiculous moments. But the all-time classic moment is this: Peter (Koen Wauters) is stabbed and beaten by the killer. He lies moaning in the corner of the hallway. Amy (Nada van Nie) kneels beside him and asks, “Poor Peter, shall I get you a band-aid?”.

This movie was shot in Dutch and English. To spare costs, all license plates are USA, and the background in the news studio is a skyline of Manhattan. Very funny if you\’re Dutch and watching the original version in Dutch

Rephrased example incorrectly labeled as positive by the fine-tuned model:

I found “Intensive Care” to be quite humorous, although not in the way it was intended. There were some suspenseful moments, but it was not a great thriller. The funniest moment of the movie was when Peter (Koen Wauters) was stabbed and beaten by the killer, and Amy (Nada van Nie) kneels beside him and asks “Poor Peter, shall I get you a band-aid?” It was even more amusing for those watching the Dutch version, as all license plates were American, and the news studio had a skyline of Manhattan in the background.

Unlike the word replacement method, the rephrased review contains numerous changes. None of which would be flagged as ungrammatical. The above examples demonstrate that language models have the potential to be useful both in creating and defending against adversarial attacks.

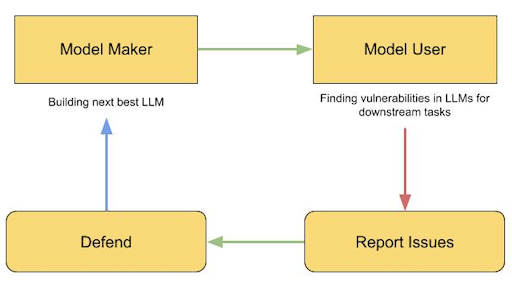

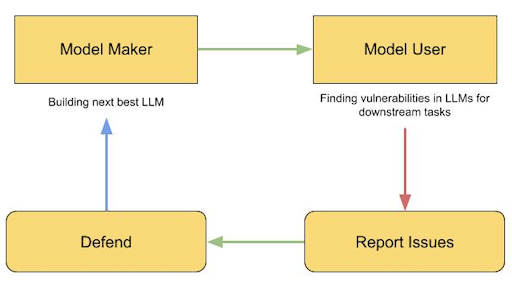

We are continuously exploring new ways to find model vulnerabilities and defense strategies as part of the Model Analyzer initiative,

Reach out if you would like to know more https://h2o.ai/demo/ .

Acknowledgements: We thank Prof. Sameer Singh@uci.edu for helpful feedback on this effort.

References

- Jin, Di, et al. “Is BERT Really Robust? Natural Language Attack on Text Classification and Entailment.” arXiv preprint arXiv:1907.11932 (2019) https://arxiv.org/pdf/1907.11932.pdf

- https://www.kaggle.com/datasets/lakshmi25npathi/imdb-dataset-of-50k-movie-reviews https://www.kaggle.com/datasets/lakshmi25npathi/imdb-dataset-of-50k-movie-reviews

- John Morris, Eli Lifland, Jin Yong Yoo, Jake Grigsby, Di Jin, and Yanjun Qi. 2020. Textattack: A framework for adversarial attacks, data augmentation, and adversarial training in nlp. https://arxiv.org/abs/2005.05909

- https://huggingface.co/blog/red-teaming

- https://cyberscoop.com/def-con-red-teaming-ai/

- https://vulcan.io/blog/owasp-top-10-llm-risks-what-we-learned/#h2_6