TELECOM

Transforming Call Center Operations: AT&T Achieves 90% Cost Savings with Fine-Tuned Small Language Models

90% lower cost: higher ROI

3x faster processing: time savings at scale

75% latency improvement: faster time to value

5x scalability: increased business velocity

AT&T, a renowned broadband connectivity provider, partnered with H2O.ai to optimize its call center operations and enhance customer experience. The company handles 15 million customer calls annually, generating a vast amount of recorded, transcribed, and summarized interactions. To extract value from these conversations, AT&T leveraged AI and language models to improve customer service, boost operational efficiency, and inform business strategies with data-driven insights.

Solutions powered by H2O.ai’s Gen AI

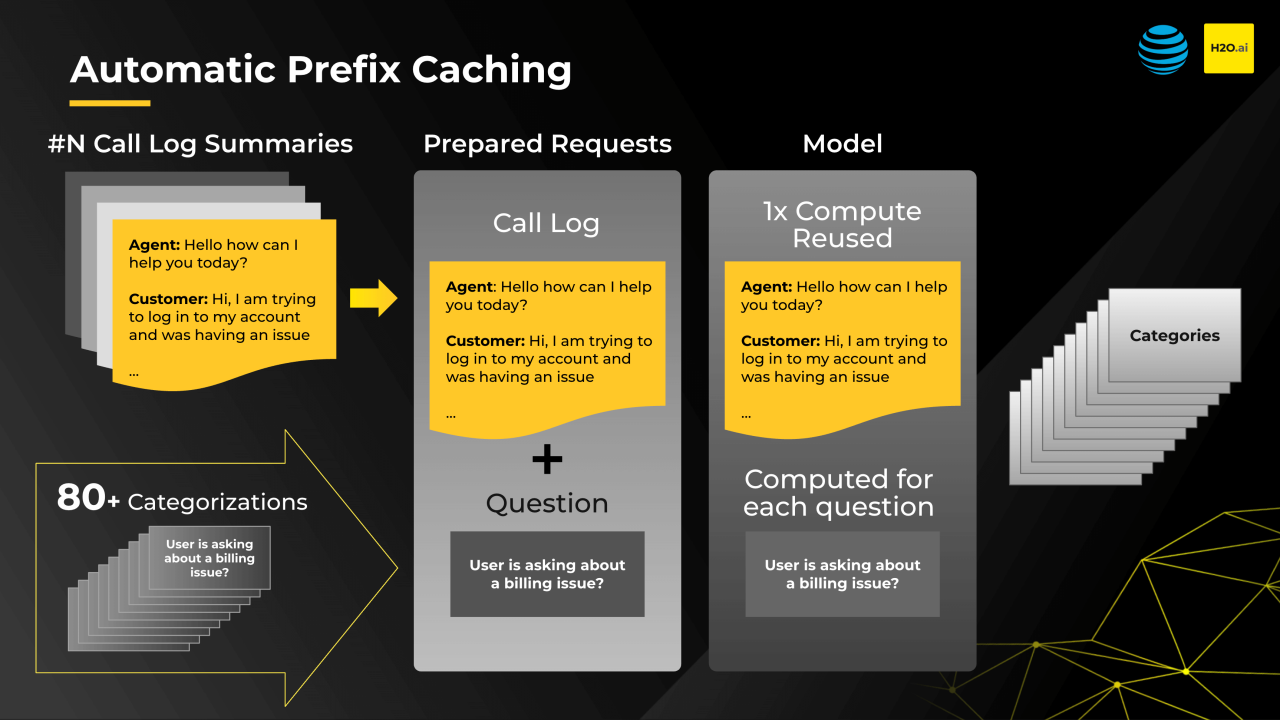

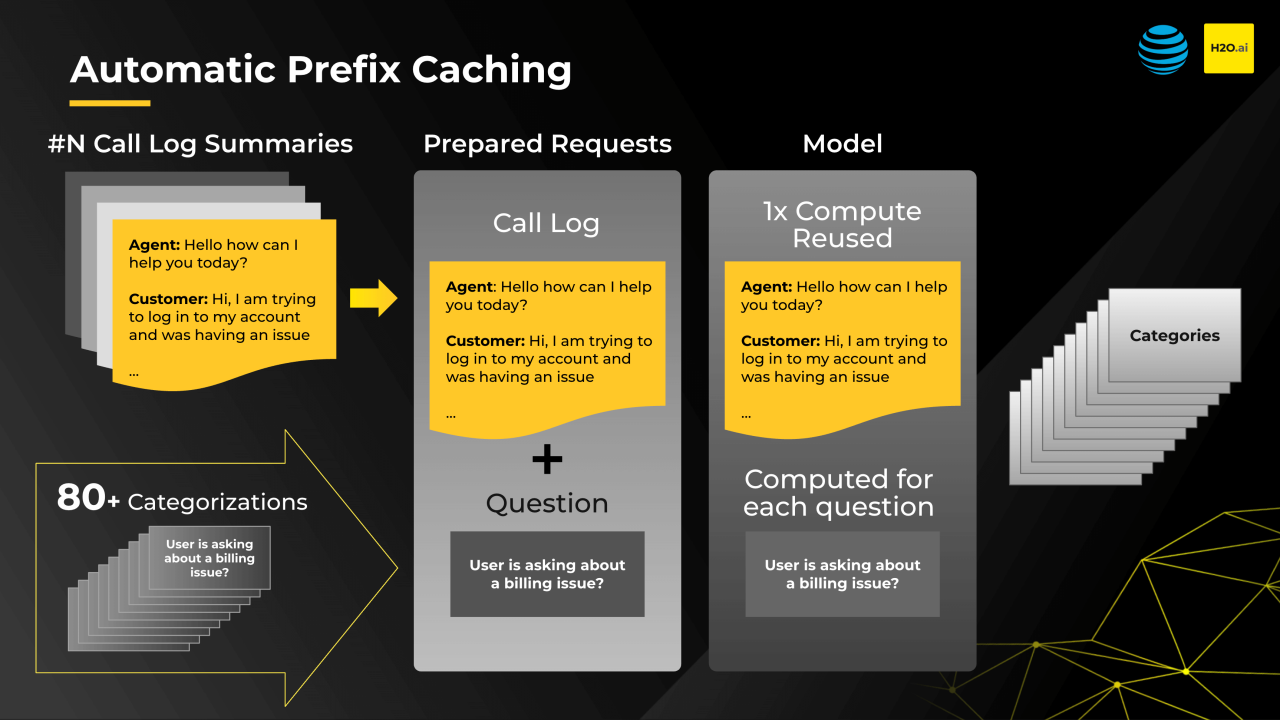

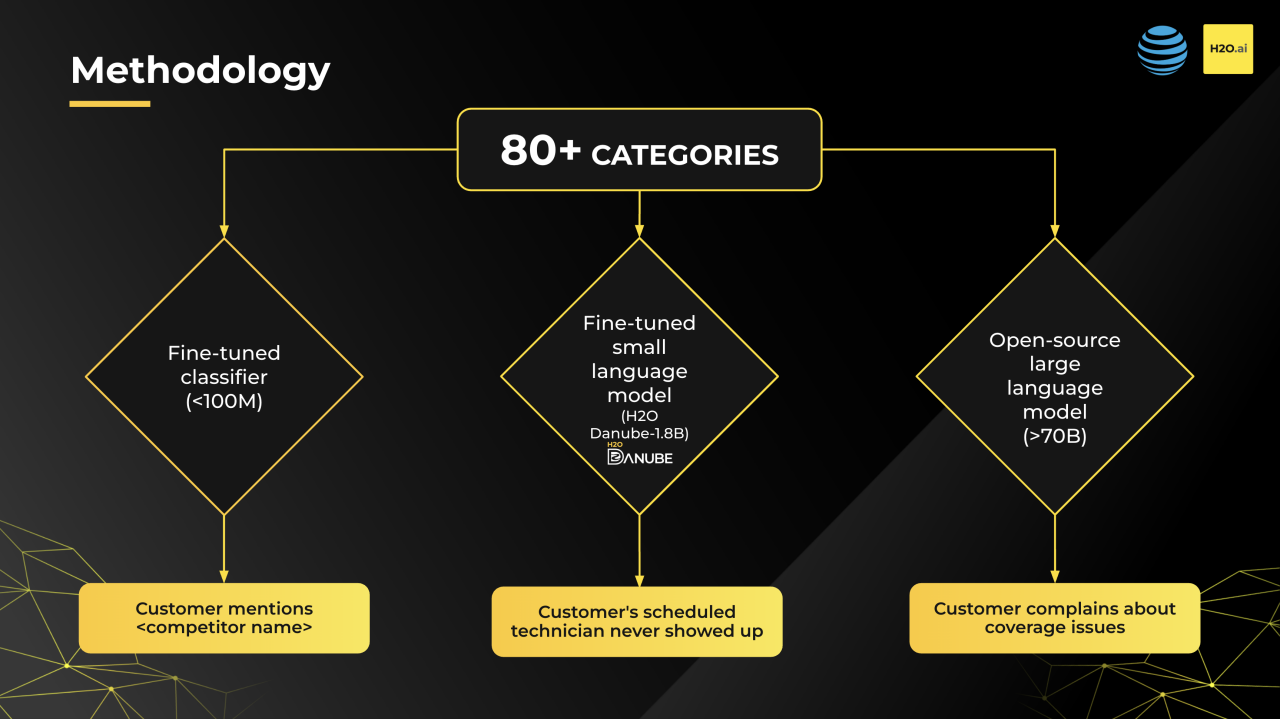

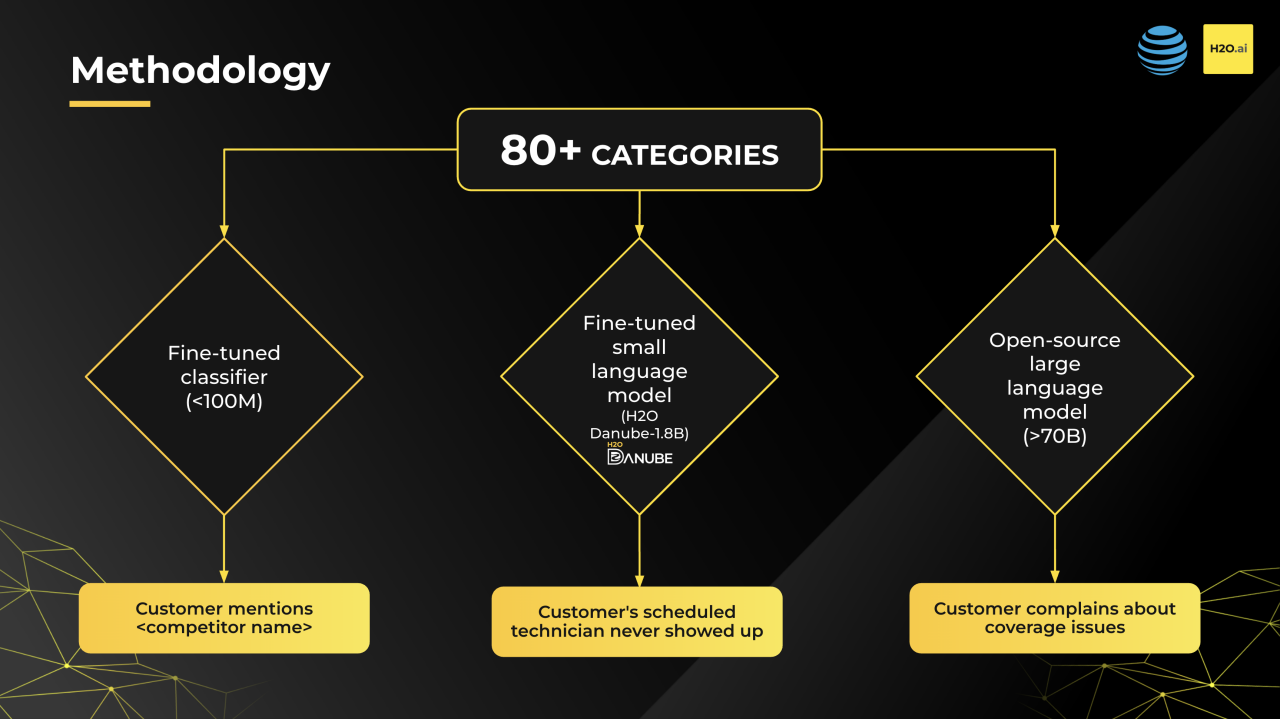

To reduce computational costs and improve scalability, AT&T distilled large language models, such as GPT-4, into three smaller fine-tuned open-source models. This approach enabled AT&T to deploy AI-powered language models in production environments, delivering business value with proven ROI.

For 20 key categories in its 80-label classification system, AT&T used H2O.ai’s Danube 1.8B, a small language model fine-tuned with H2O LLM Studio. This allowed AT&T to extract actionable insights from customer interactions (e.g., identifying instances where customers scheduled appointments but technicians did not show up).
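The distillation step described above can be pictured as a large "teacher" model labeling transcripts, with the resulting pairs becoming fine-tuning data for the smaller model. The following is a minimal sketch under stated assumptions, not AT&T's or H2O.ai's pipeline: `teacher_label` is a hypothetical keyword stub standing in for a call to a large model, and the label names and CSV column names (`prompt`, `label`) are illustrative only.

```python
import csv
import io


def teacher_label(transcript: str) -> str:
    """Hypothetical stand-in for the teacher model.

    A real pipeline would call a large language model here; this stub
    uses a keyword rule so the example runs offline.
    """
    text = transcript.lower()
    if "technician" in text and "did not show" in text:
        return "missed_appointment"  # illustrative label name
    return "other"


def build_training_csv(transcripts):
    """Pair each transcript with the teacher's label and return CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["prompt", "label"])  # assumed column names
    for transcript in transcripts:
        writer.writerow([transcript, teacher_label(transcript)])
    return buffer.getvalue()


SAMPLE = [
    "I scheduled an appointment but the technician did not show up.",
    "I would like to upgrade my plan.",
]
TRAINING_CSV = build_training_csv(SAMPLE)
```

A file in this shape is the kind of labeled dataset a fine-tuning tool can consume; the exact schema a given tool expects should be taken from its own documentation.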

Business Value

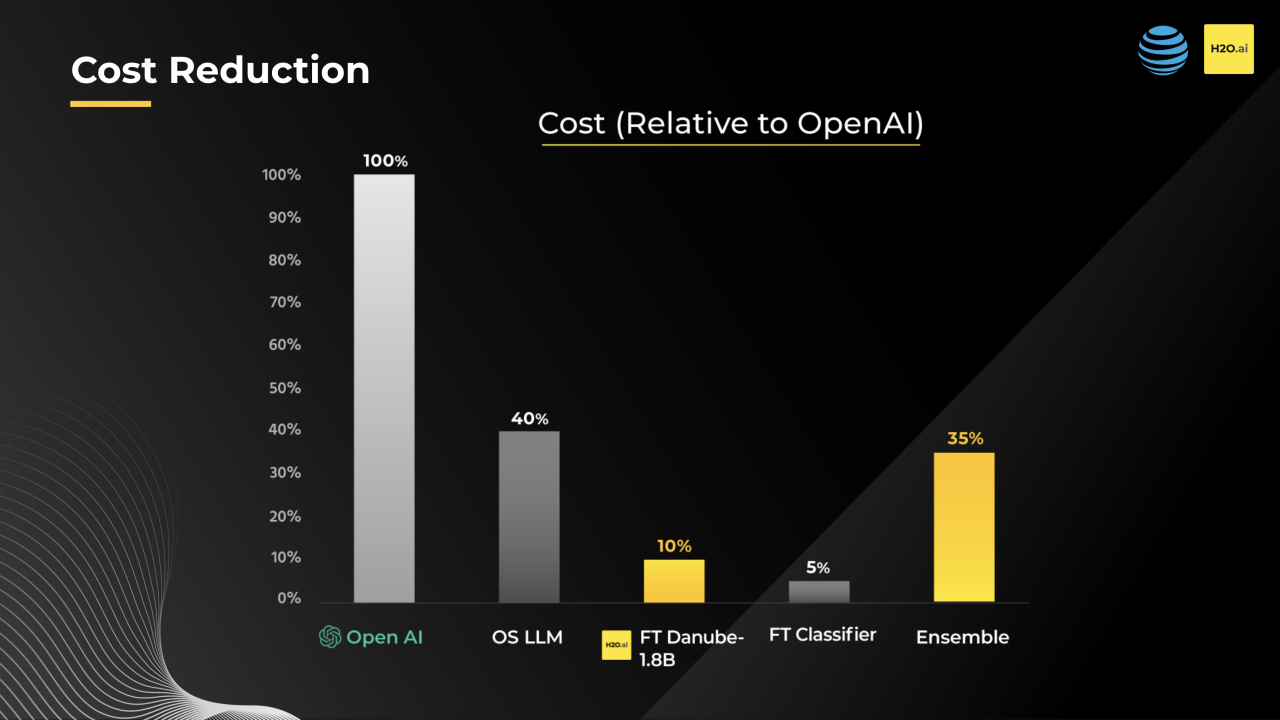

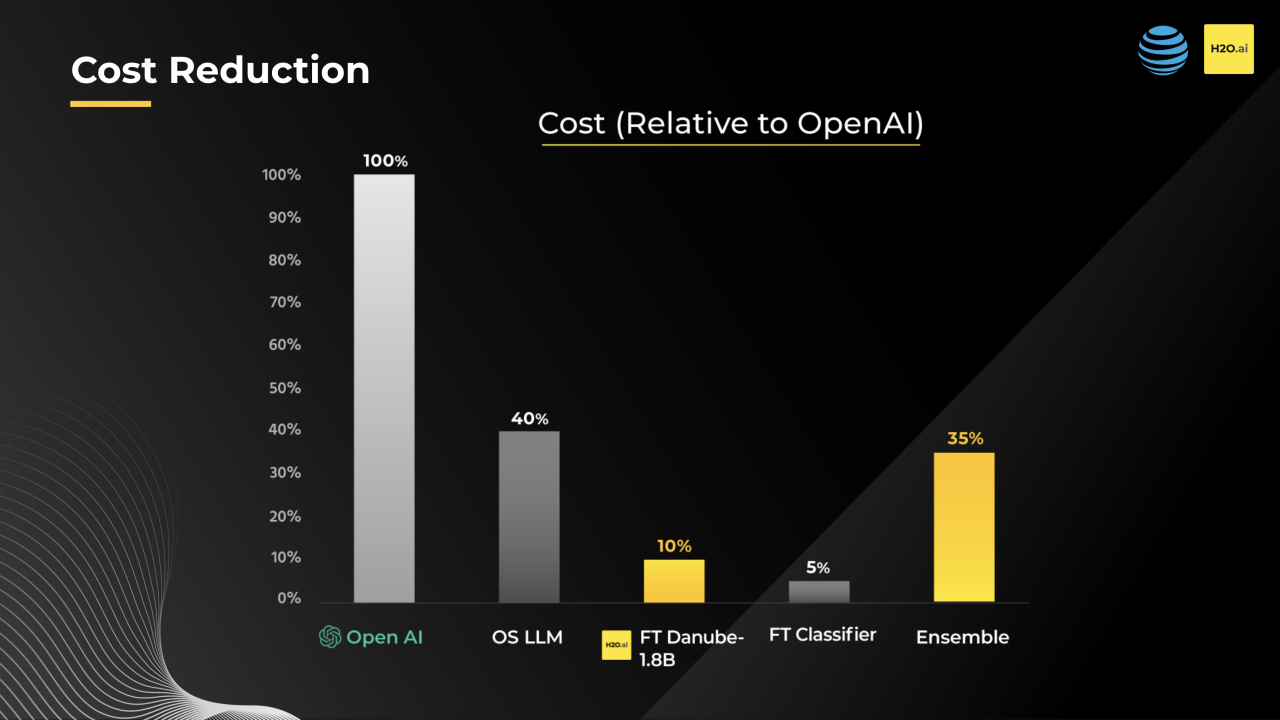

The ensemble of small language models achieved 91% accuracy, closely matching the performance of the previous, more expensive solution. By assigning 10 categories to Llama, 20 to Danube, and 50 to the classifier, together covering all 80 labels, AT&T reduced costs to 35% of the previous solution, with Danube accounting for just 10%.
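The split of labels across the three models can be sketched as a simple routing table. The source gives only the number of categories per model, so the label IDs below (`label_00` to `label_79`) and the tier names are hypothetical placeholders, and the assignment order is arbitrary.

```python
from collections import Counter

# Category counts per model, as stated in the text above.
TIER_SIZES = {"llama": 10, "danube": 20, "classifier": 50}


def build_routing(tier_sizes):
    """Assign placeholder label IDs to model tiers in order."""
    routing = {}
    next_id = 0
    for tier, size in tier_sizes.items():
        for _ in range(size):
            routing[f"label_{next_id:02d}"] = tier  # hypothetical label ID
            next_id += 1
    return routing


def tier_shares(routing):
    """Return the fraction of all labels handled by each tier."""
    counts = Counter(routing.values())
    total = len(routing)
    return {tier: count / total for tier, count in counts.items()}


ROUTING = build_routing(TIER_SIZES)
SHARES = tier_shares(ROUTING)
```

Under these counts the routing table holds 80 labels, with Llama handling 12.5% of them, Danube 25%, and the classifier 62.5%.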

Results

The new approach, built on small language models, reduced processing time, increased transcript processing capacity, and cut costs. The reported headline figures are 3x faster processing, a 75% latency improvement, 5x scalability, and 90% lower cost.

Why Small Language Models and H2O.ai?

Small Language Models (SLMs) are revolutionizing AI-powered applications. They are more compact and nimble than large language models and require significantly fewer resources to deploy. SLMs also excel at specific tasks and can be tailored to perform well within a narrower scope.

The H2O Danube series includes compact foundation models with 1.8 billion parameters. These models are designed for speed and efficiency, making them well suited to a wide range of enterprise use cases, including retrieval-augmented generation, open-ended text generation, summarization, data formatting, paraphrasing, extraction, table creation, and chat.

When organizations need custom solutions, H2O LLM Studio offers a no-code fine-tuning framework built by our Kaggle Grandmasters. It enables teams to fine-tune models on their own data, delivering state-of-the-art performance tailored to specific business needs.