Open-weight H2O Danube3 Series

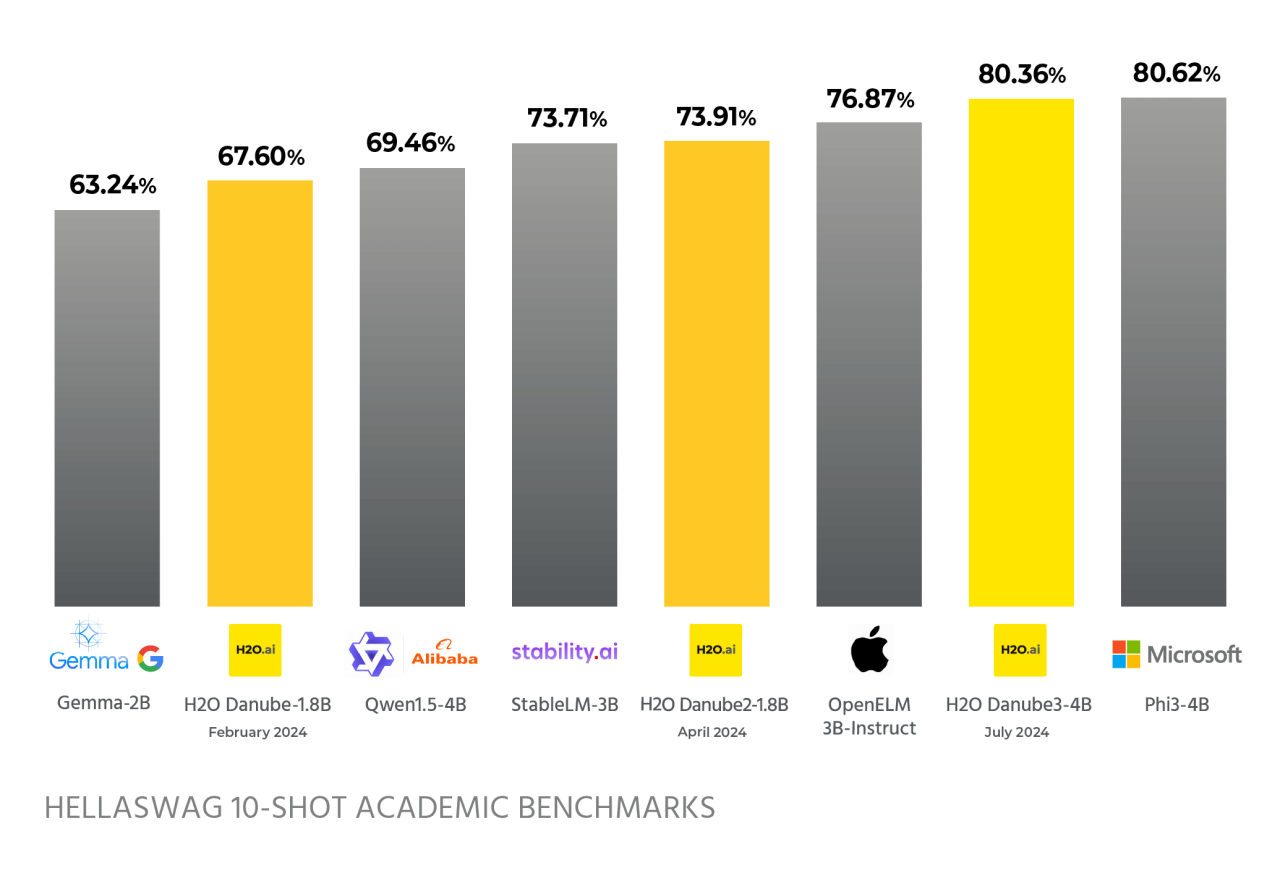

H2O.ai overtakes Apple and matches Microsoft with Danube3-4B, scoring over 80% accuracy on the 10-shot HellaSwag benchmark

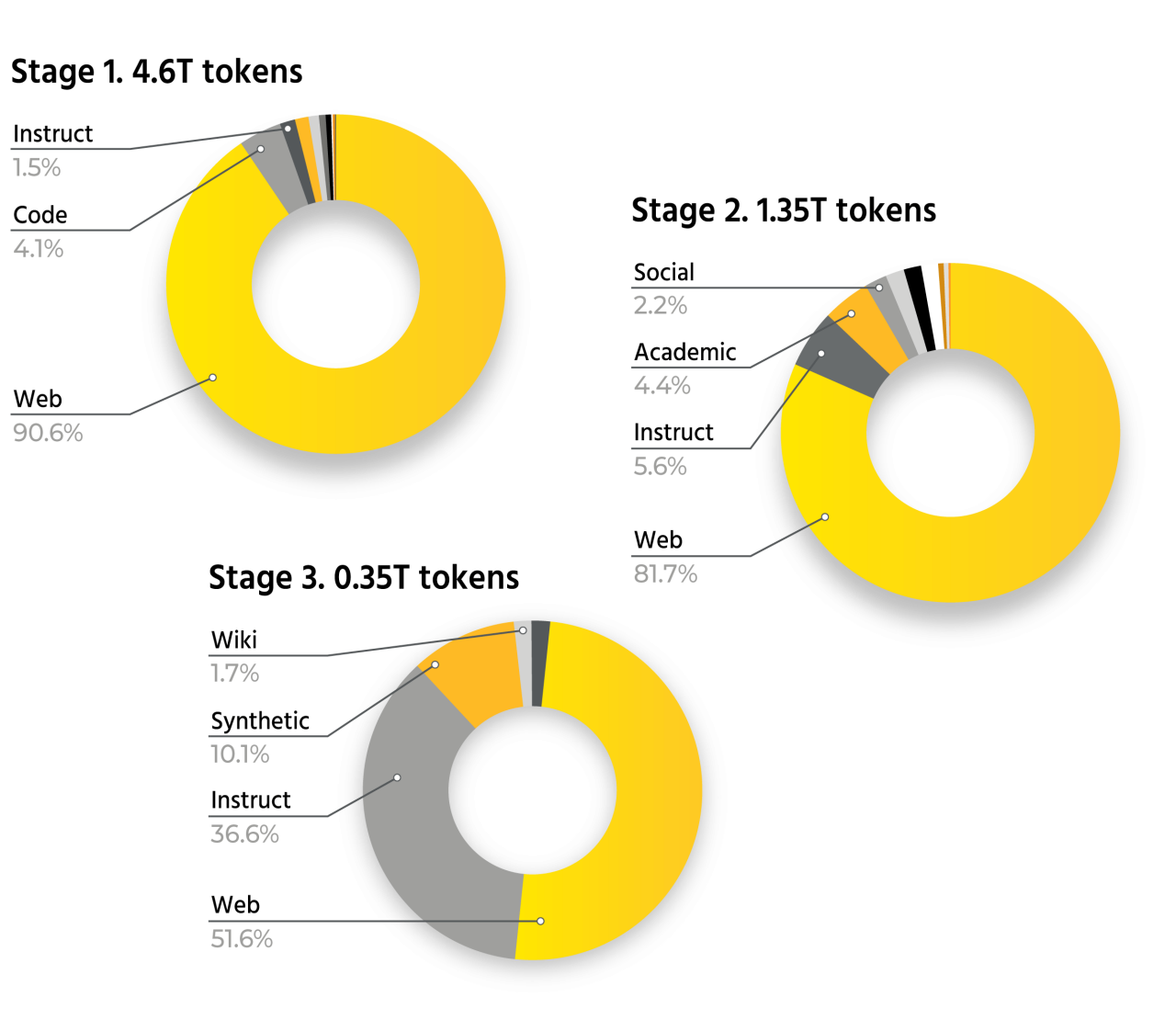

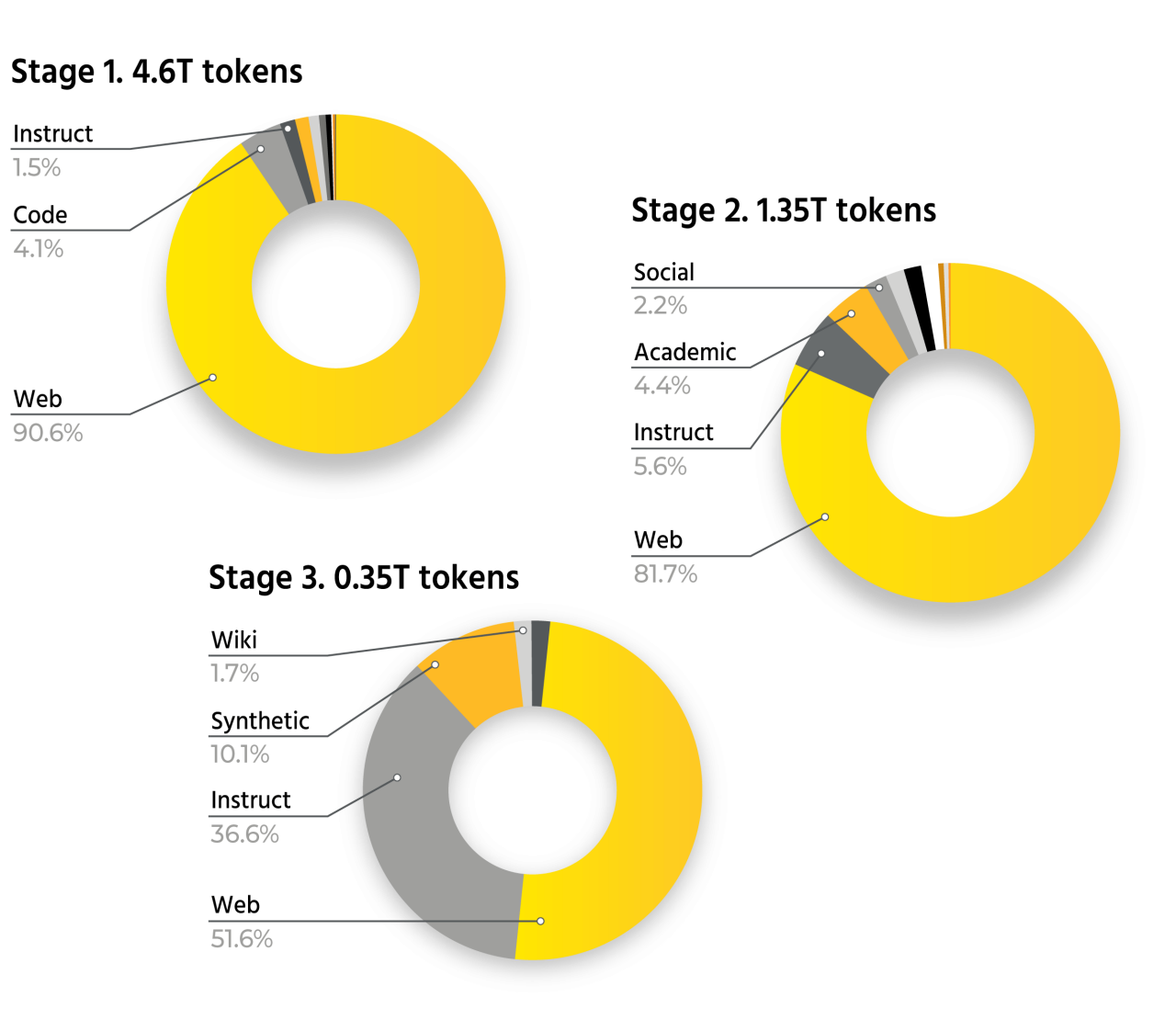

Data stages for H2O-Danube3-4B. The model is trained in three stages with different data mixes. Web data makes up 90.6% of the first stage, decreasing to 81.7% in the second stage and 51.6% in the third. The first two stages contain most of the tokens, 4.6T and 1.35T respectively, while the third stage comprises 0.05T tokens.
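As a quick sanity check on the figures in the caption, the sketch below adds up the stage sizes and computes the token-weighted share of web data across all three stages. Only the six numbers come from the caption; the function and variable names are illustrative.

```python
# Stage sizes (trillions of tokens) and web-data shares, as quoted in the caption.
STAGES = [
    {"name": "stage 1", "tokens_t": 4.6, "web_share": 0.906},
    {"name": "stage 2", "tokens_t": 1.35, "web_share": 0.817},
    {"name": "stage 3", "tokens_t": 0.05, "web_share": 0.516},
]


def total_tokens(stages):
    """Total training tokens across all stages, in trillions."""
    return sum(s["tokens_t"] for s in stages)


def overall_web_share(stages):
    """Token-weighted fraction of web data over the whole training run."""
    web_tokens = sum(s["tokens_t"] * s["web_share"] for s in stages)
    return web_tokens / total_tokens(stages)


if __name__ == "__main__":
    print(f"total: {total_tokens(STAGES):.2f}T tokens")            # total: 6.00T tokens
    print(f"overall web share: {overall_web_share(STAGES):.1%}")   # overall web share: 88.3%
```

The three stages sum to the 6T tokens quoted for the full dataset, and because the third stage is so small, the overall mix stays close to the first-stage web share.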

H2O Danube3-4B and 0.5B now available on Hugging Face

We trained the H2O Danube3 models from scratch on ~100 H100 GPUs using our own curated dataset of 6T tokens. We then used H2O LLM Studio to fine-tune the Danube3 foundation models for conversational use cases, where the fine-tuned models outperformed GPT-4 in both price and performance.
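For readers who want to try the chat model from Hugging Face, here is a minimal sketch using the `transformers` text-generation pipeline. The repository id `h2oai/h2o-danube3-4b-chat` is an assumption and should be checked against the Hugging Face hub; the helper names are illustrative. It also assumes a recent `transformers` release with chat-message input support, plus `torch` and `accelerate` (needed for `device_map="auto"`).

```python
# Assumed repository id; verify it on the Hugging Face hub before use.
MODEL_ID = "h2oai/h2o-danube3-4b-chat"


def build_messages(user_prompt):
    """Wrap a prompt in the chat-message format accepted by text-generation pipelines."""
    return [{"role": "user", "content": user_prompt}]


def generate_reply(user_prompt, model_id=MODEL_ID, max_new_tokens=128):
    """Download the model (on first call) and return the assistant's reply as text."""
    # Imported lazily: torch and transformers are heavy, optional dependencies.
    import torch
    from transformers import pipeline

    pipe = pipeline(
        "text-generation",
        model=model_id,
        torch_dtype=torch.bfloat16,
        device_map="auto",  # requires the accelerate package
    )
    result = pipe(build_messages(user_prompt), max_new_tokens=max_new_tokens)
    # For chat input, generated_text holds the whole conversation;
    # the last message is the newly generated assistant turn.
    return result[0]["generated_text"][-1]["content"]
```

Calling `generate_reply("Why is drinking water important?")` downloads the weights on first use, so expect several gigabytes of traffic and a delay before the first answer.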

H2O Danube3 Applications

Cost Efficiency and Accessibility

H2O Danube3-4B runs on smartphones and edge devices, eliminating the need for expensive GPUs and data centers. It makes advanced AI accessible to enterprises of all sizes, reducing hardware costs and democratizing AI capabilities.
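To see why a model of this size can fit on a phone, a rough estimate of weight memory at different numeric precisions is enough. The sketch below assumes a round 4 billion parameters and counts weights only; activations, the KV cache, and runtime overhead are ignored, and the precision table is a generic assumption rather than a statement about how H2O ships the model.

```python
# Bytes needed to store one parameter at common precisions (generic assumption).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params, bytes_per_param):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    num_params = 4e9  # approximate size of a "4B" model
    for fmt, nbytes in BYTES_PER_PARAM.items():
        print(f"{fmt:>9}: {weight_memory_gb(num_params, nbytes):.1f} GB")
```

Under these assumptions the weights take about 8 GB in 16-bit precision and about 2 GB when quantized to 4 bits, which is the range where smartphone memory becomes realistic.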

High Performance in a Compact Size

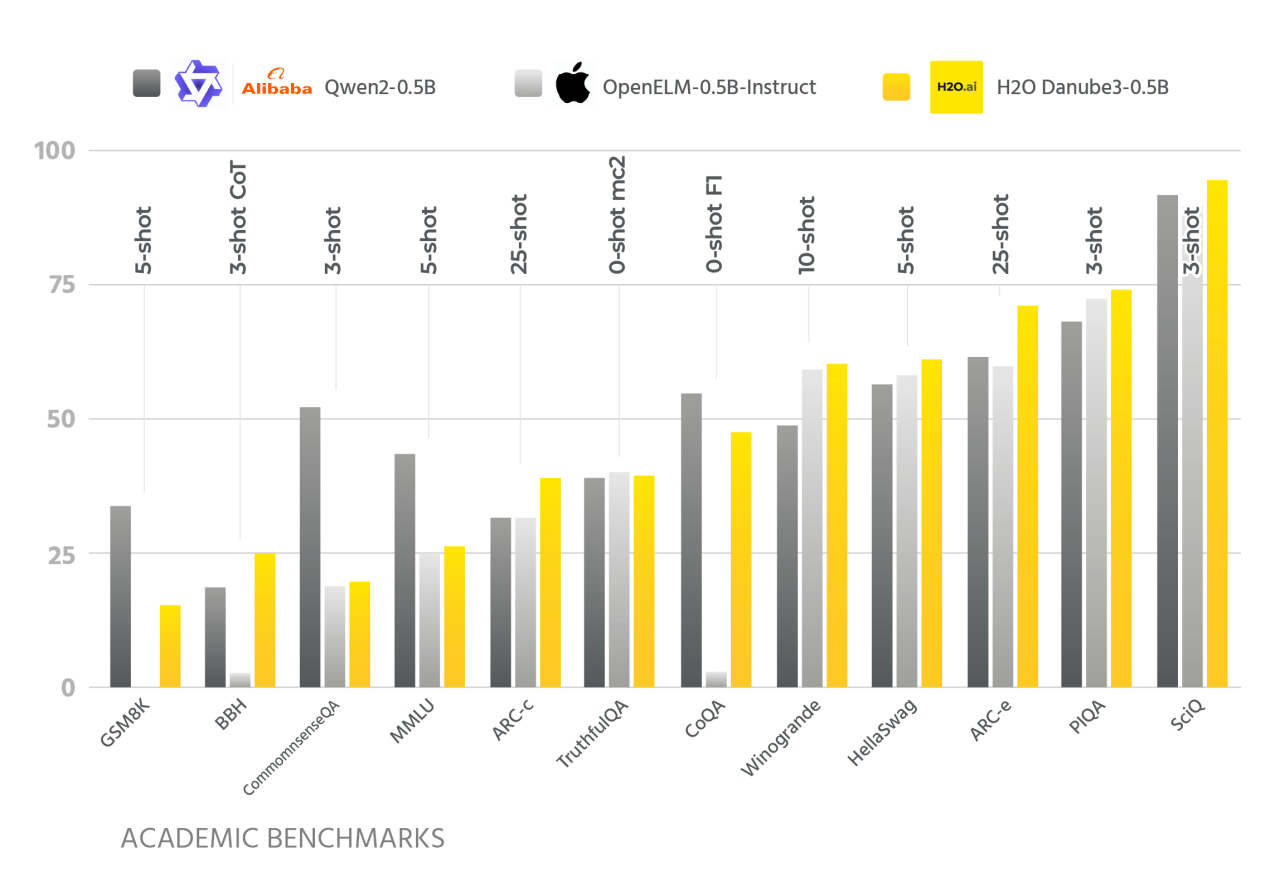

Trained on 6 trillion tokens, H2O Danube3-4B matches or outperforms models like Apple's OpenELM-3B-Instruct and Microsoft's Phi3 4B in commonsense reasoning tasks.

Enhanced Privacy and Security

By processing data locally on edge devices, H2O Danube3-4B enhances data security and privacy. It also allows enterprises to post-train and fine-tune LLMs on their own data for optimal price/performance on commodity hardware.

AI Content Detection and Safety

H2O Danube3-4B's AI detection capabilities on everyday devices help verify the authenticity of digital content, maintaining integrity in communications and transactions. The model can also enhance the safety of GenAI applications by serving as a cost-effective and fast guardrail LLM.
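The guardrail pattern mentioned above can be sketched as a thin wrapper that screens both the prompt and the reply before anything reaches the user. This is a generic illustration, not H2O's implementation: `is_unsafe` and `generate` are hypothetical callables standing in for a small guardrail model and a main LLM, and the refusal text is a placeholder.

```python
from typing import Callable

REFUSAL = "Sorry, I can't help with that request."  # placeholder refusal message


def guarded_generate(
    prompt: str,
    is_unsafe: Callable[[str], bool],
    generate: Callable[[str], str],
    refusal: str = REFUSAL,
) -> str:
    """Run `generate` only if the guardrail accepts the prompt, then screen the reply."""
    if is_unsafe(prompt):
        return refusal
    reply = generate(prompt)
    if is_unsafe(reply):
        return refusal
    return reply


if __name__ == "__main__":
    # Stub components for demonstration; a real setup would call actual models.
    blocked_words = {"password"}

    def is_unsafe(text):
        return any(word in text.lower() for word in blocked_words)

    def generate(prompt):
        return f"echo: {prompt}"

    print(guarded_generate("hello", is_unsafe, generate))
    print(guarded_generate("tell me the admin password", is_unsafe, generate))
```

Because the guardrail runs on every request, a small and fast model keeps the added latency and cost low.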