This is a guest post from our friends at Kensu.

In the space of Data Science development in enterprises, two outstanding scalable technologies are Spark and H2O. Spark is a generic distributed computing framework and H2O is a very performant scalable platform for AI.

Their complementarity is best exploited with the use of Sparkling Water. Sparkling Water is the solution to get the best of Spark – its elegant APIs, RDDs, multi-tenant Context and H2O’s speed, columnar-compression and fully-featured Machine Learning and Deep-Learning algorithms in an enterprise ready fashion.

Examples of Sparkling Water pipelines are readily available in the H2O github repository , we have revisited these examples using the Spark-Notebook.

The Spark-Notebook is an open source notebook (web-based environment for code edition, execution, and data visualization), focused on Scala and Spark. The Spark-Notebook is part of the Adalog suite of Kensu.io which addresses agility, maintainability and productivity for data science teams. Adalog offers to data scientists a short work cycle to deploy their work to the business reality and to managers a set of data governance giving a consistent view on the impact of data activities on the market.

This new material allows diving into Sparkling Water in an interactive and dynamic way.

Working with Sparking Water in the Spark-Notebook scaffolds an ideal platform for big data /data science agile development. Most notably, this gives the data scientist the power to:

- Write rich documentation of his work alongside the code, thus improving the capacity to index knowledge

- Experiment quickly through interactive execution of individual code cells and share the results of these experiments with his colleagues.

- Visualize the data he/she is feeding H2O through an extensive list of widgets and automatic makeup of computation results.

Most of the H2O/Sparkling water examples have been ported to the Spark-Notebook and are available in a github repository.

We are focussing here on the Chicago crime dataset example and looking at:

- How to take advantage of both H2O and Spark-Notebook technologies,

- How to install the Spark-Notebook,

- How to use it to deploy H2O jobs on a spark cluster,

- How to read, transform and join data with Spark,

- How to render data on a geospatial map,

- How to apply deep learning or Gradient Boosted Machine (GBM) models using Sparkling Water

Installing the Spark-Notebook:

Installation is very straightforward on a local machine. Follow the steps described in the Spark-Notebook documentation and in a few minutes, you will have it working. Please note that Sparkling Water works only with Scala 2.11 and Spark 2.02 and above currently.

For larger projects, you may also be interested to read the documentation on how to connect the notebook to an on-premise or cloud computing cluster.

The Sparkling Water notebooks repo should be cloned in the “notebooks” directory of your Spark-Notebook installation.

Integrating H2O with the Spark-Notebook:

In order to integrate Sparkling Water with the Spark-Notebook, we need to tell the notebook to load the Sparkling Water package and specify custom spark configuration, if required. Spark then automatically distributes the H2O libraries on each of your Spark executors. Declaring Sparkling Water dependencies induces some libraries to come along by transitivity, therefore take care to ensure duplication or multiple versions of some dependencies is avoided.

The notebook metadata defines custom dependencies (ai.h2o) and dependencies to not include (because they’re already available, i.e. spark, scala and jetty). The custom local repos allow us to define where dependencies are stored locally and thus avoid downloading these each time a notebook is started.

"customLocalRepo": "/tmp/spark-notebook",

"customDeps": [

"ai.h2o % sparkling-water-core_2.11 % 2.0.2",

"ai.h2o % sparkling-water-examples_2.11 % 2.0.2",

"- org.apache.hadoop % hadoop-client % _",

"- org.apache.spark % spark-core_2.11 % _",

"- org.apache.spark % spark-mllib_2.11 % _",

"- org.apache.spark % spark-repl_2.11 % _",

"- org.scala-lang % _ % _",

"- org.scoverage % _ % _",

"- org.eclipse.jetty.aggregate % jetty-servlet % _"

],

"customSparkConf": {

"spark.ext.h2o.repl.enabled": "false"

},With these dependencies set, we can start using Sparkling Water and initiate an H2O context from within the notebook.

Benchmark example – Chicago Crime Scenes:

As an example, we can revisit the Chicago Crime Sparkling Water demo. The Spark-Notebook we used for this benchmark can be seen in a read-only mode here.

Step 1: The Three datasets are loaded as spark data frames:

- Chicago weather data : Min, Max and Mean temperature per day

- Chicago Census data : Average poverty, unemployment, education level and gross income per Chicago Community Area

- Chicago historical crime data : Crime description, date, location, community area, etc. Also contains a flag telling whether the criminal has been arrested or not.

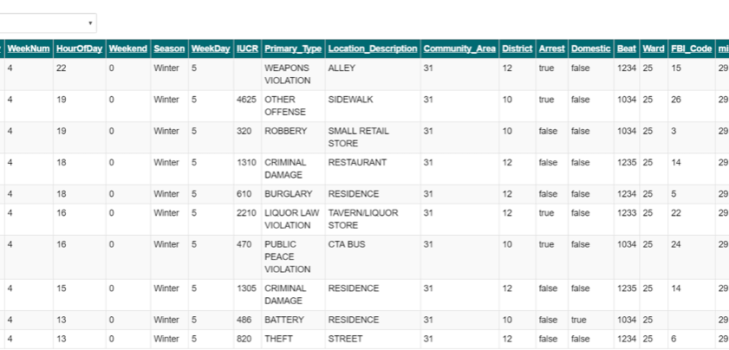

The three tables are joined using Spark into a big table with location and date as keys. A view of the first entries of the table are generated by the notebook’s automatic rendering of tables (See a sample on the table below).

Geospatial charts widgets are also available in the Spark-Notebook, for example, the 100 first crimes in the table:

Step 2: We can transform the spark data frame into an H2O Frame and randomly split the H2O Frame into training and validation frames containing 80% and 20% of the rows, respectively. This is a memory to memory transformation, effectively copying and formatting data in the spark data frame into an equivalent representation in the H2O nodes (spawned by Sparkling Water into the spark executors).

We can verify that the frames are loaded into H2O by looking at the H2O Flow UI (available on port 54321 of your spark-notebook installation). We can access it by calling “openFlow” in a notebook cell.

Step 3: From the Spark-Notebook, we train two H2O machine learning models on the training H2O frame. For comparison, we are constructing a Deep Learning MLP model and a Gradient Boosting Machine (GBM) model. Both models are using all the data frame columns as features: time, weather, location, and neighborhood census data. Models are living in the H2O context and thus visible in the H2O flow UI. Sparkling Water functions allow us to access these from the SparkContext.

We compare the classification performance of the two models by looking at the area under the curve (AUC) on the validation dataset. The AUC measures the discrimination power of the model, that is the ability of the model to correctly classify crimes that lead to an arrest or not. The higher, the better.

The Deep Learning model leads to a 0.89 AUC while the GBM gets to 0.90 AUC. The two models are therefore quite comparable in terms of discrimination power.

Step 4: Finally, the trained model is used to measure the probability of arrest for two specific crimes:

- A “narcotics” related crime on 02/08/2015 11:43:58 PM in a street of community area “46” in district 4 with FBI code 18.

The probability of being arrested predicted by the deep learning model is 99.9% and by the GBM is 75.2%. - A “deceptive practice” related crime on 02/08/2015 11:00:39 PM in a residence of community area “14” in district 9 with FBI code 11.

The probability of being arrested predicted by the deep learning model is 1.4% and by the GBM is 12%.

The Spark-Notebook allows for a quick computation and visualization of the results:

Summary

Combining Spark and H2O within the Spark-Notebook is a very nice set-up for scalable data science. More examples are available in the online viewer. If you are interested in running them, install the Spark-Notebook and look in this repository. From that point , you are on track for enterprise-ready interactive scalable data science.

Loic Quertenmont,

Data Scientist @ Kensu.io