This post originally appeared here. It was authored by Daisy Deng, Software Engineer, and Abhinav Mithal, Senior Engineering Manager, at Microsoft.

Interest in machine learning and artificial intelligence has soared over the past few years, and fast, scalable, and reliable ML and AI solutions are increasingly viewed as vital to business success. H2O.ai has been gaining recognition in the AI world for its fast in-memory ML algorithms and for how easily its models can be consumed in production. It is designed as a fast, scalable, open source ML platform, and it recently added support for deep learning. There are many ways to run H2O.ai on Azure. This post provides an overview of how to efficiently develop and operationalize H2O.ai ML models on Azure.

H2O.ai can be deployed in many ways, including on a single node, on a multi-node cluster, in a Hadoop cluster, and in an Apache Spark cluster. H2O.ai is written in Java, so it naturally supports Java APIs. Because Scala runs on the Java VM, H2O.ai also supports a Scala API. It also has rich interfaces for Python and R: the h2o R package and the h2o Python package give R and Python users access to H2O.ai algorithms and functionality. R and Python scripts that use the h2o library interact with H2O clusters through REST API calls.
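To make the REST interaction concrete, here is a minimal sketch using only the Python standard library. It assumes an H2O node is already running on the default port 54321 and queries the `/3/Cloud` cluster-status endpoint; the helper names `h2o_rest_url` and `cluster_status` are hypothetical and exist only for illustration. In practice the h2o package wraps calls like this for you.

```python
import json
import urllib.request


def h2o_rest_url(host: str, port: int, endpoint: str) -> str:
    """Build the URL for an H2O REST endpoint such as '/3/Cloud'."""
    return f"http://{host}:{port}/{endpoint.lstrip('/')}"


def cluster_status(host: str = "localhost", port: int = 54321) -> dict:
    """Fetch cluster status from a running H2O node (needs network access)."""
    url = h2o_rest_url(host, port, "/3/Cloud")
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


# With the h2o package installed, the equivalent starting point is usually:
#   import h2o
#   h2o.init()                                  # start or attach to a local node
#   h2o.connect(url="http://<host>:54321")      # attach to a remote cluster
```

Calling `cluster_status()` only works while a node is reachable, so the sketch defines the function without invoking it.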

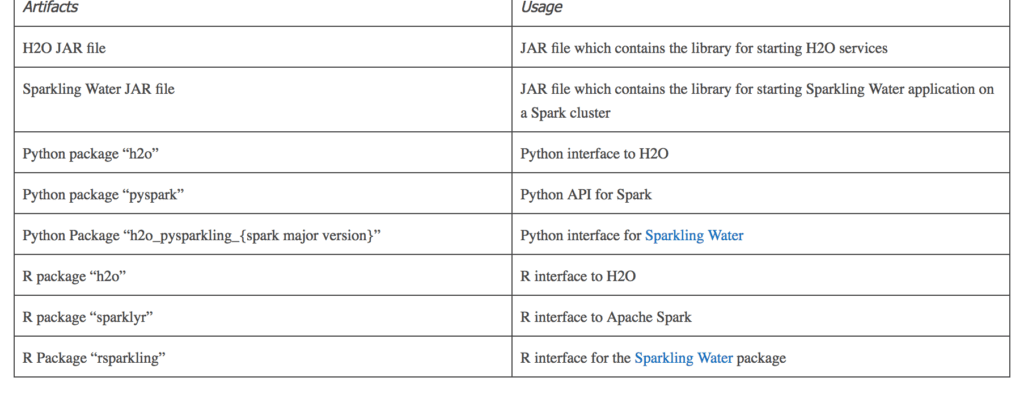

With the rising popularity of Apache Spark, Sparkling Water was developed to combine H2O functionality with Apache Spark. Sparkling Water provides a way to launch the H2O service on each Spark executor in the Spark cluster, forming an H2O cluster. A typical way of using the two together is to do data munging in Apache Spark and then run training and scoring in H2O. Apache Spark has built-in support for Python through PySpark, and pysparkling provides bindings between Spark and H2O to run Sparkling Water applications in Python. Sparklyr provides the R interface to Spark, and rsparkling provides bindings between Spark and H2O to run Sparkling Water applications in R.

Table 1 and Figure 1 below show more information about how to run Sparkling Water applications on Spark from R and Python.
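The "munge in Spark, train in H2O" pattern can be sketched in Python roughly as follows. This is an illustration under assumptions, not a reference implementation: it assumes a recent Sparkling Water release in which `H2OContext.getOrCreate()` takes no arguments and the conversion method is named `asH2OFrame` (older releases used different names), and the function names, CSV path, and response column are hypothetical.

```python
def predictor_columns(columns, response):
    """Return every column name except the response column."""
    return [c for c in columns if c != response]


def munge_in_spark_train_in_h2o(spark, csv_path: str, response: str):
    """Clean data with Spark, then train an H2O model on the same cluster."""
    # Imported lazily so the sketch can be read without Spark or H2O installed.
    from pysparkling import H2OContext
    from h2o.estimators import H2OGradientBoostingEstimator

    # Start (or attach to) the H2O service running inside the Spark executors.
    hc = H2OContext.getOrCreate()

    # Data munging stays in Spark: read the file and drop incomplete rows.
    df = spark.read.csv(csv_path, header=True, inferSchema=True).dropna()

    # Hand the cleaned Spark DataFrame to H2O for training.
    frame = hc.asH2OFrame(df)
    model = H2OGradientBoostingEstimator(ntrees=50)
    model.train(x=predictor_columns(frame.columns, response),
                y=response, training_frame=frame)
    return model
```

The `spark` argument is an existing `SparkSession`; on a cluster with Sparkling Water installed, one is typically already available in the notebook.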

Model Development

Data Science Virtual Machine (DSVM) is a great tool with which you can start developing ML models in a single-node environment. H2O.ai comes preinstalled for Python on DSVM. If you use R (on Ubuntu), you can follow the script in our earlier blog post to set up your environment. If you are dealing with large datasets, you may consider using a cluster for development. Below are the two recommended choices for cluster-based development.

Azure HDInsight offers fully-managed clusters that come in many handy configurations. Azure HDInsight allows users to create Spark clusters with H2O.ai and all of its dependencies pre-installed. Python users can experiment with it by following the Jupyter notebook examples that come with the cluster. R users can follow our previous post to set up the environment to use RStudio for development. Once you have finished developing and training your model, you can save it for scoring. H2O allows you to save the trained model as a MOJO file. A JAR file, h2o-genmodel.jar, is also generated when the model is saved. This JAR file is needed when you want to load your trained model in Java or Scala code, while Python and R code can load the trained model directly using the H2O API.
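The saving step described above might look like this from Python. It is a sketch that assumes a reasonably recent h2o package, where trained models expose `download_mojo` and the module provides `h2o.import_mojo`; the wrapper names `export_mojo` and `load_mojo_for_scoring` are hypothetical.

```python
def export_mojo(model, out_dir: str) -> str:
    """Write a trained H2O model as a MOJO and return the path to the MOJO file."""
    # get_genmodel_jar=True also writes h2o-genmodel.jar next to the MOJO,
    # which Java or Scala scoring code needs on its classpath.
    return model.download_mojo(path=out_dir, get_genmodel_jar=True)


def load_mojo_for_scoring(mojo_path: str):
    """Load a saved MOJO back into a running H2O cluster from Python."""
    # Imported lazily; assumes h2o.init() or h2o.connect() was already called.
    import h2o

    # import_mojo reads a path visible to the H2O server; h2o.upload_mojo
    # can be used instead when the file only exists on the client machine.
    return h2o.import_mojo(mojo_path)
```

The object returned by `load_mojo_for_scoring` can then be used for prediction in the same way as a model trained in the session.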

If you are looking for low-cost clusters, you can use the Azure Distributed Data Engineering Toolkit (AZTK) to start a Docker-based Spark cluster on top of Azure Batch with low-priority VMs. The cluster created through AZTK is accessible for development through SSH or Jupyter notebooks. Compared to Jupyter notebooks on Azure HDInsight clusters, the AZTK Jupyter notebook is rudimentary and does not come pre-configured for H2O.ai model development. Users also need to save their development work to external durable storage, because once the AZTK Spark cluster is torn down it cannot be restored.

Table 2 shows a summary of using the three environments for model development.

Batch Scoring and Model Retraining

Batch scoring is also referred to as offline scoring. It usually deals with significant amounts of data and may require a lot of processing time. Retraining addresses model drift, where the model no longer accurately captures patterns in newer datasets. Batch scoring and model retraining are both forms of batch processing, and they can be operationalized in a similar fashion.
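As a small illustration of how drift might trigger retraining, the sketch below compares a metric measured on recent data with the value recorded at training time. The function name, the choice of AUC, and the tolerance of 0.02 are all assumptions made for the example, not recommendations from the H2O.ai or Azure documentation.

```python
def needs_retraining(baseline_auc: float, recent_auc: float,
                     tolerance: float = 0.02) -> bool:
    """Flag the model for retraining when its AUC on recent data has
    dropped more than `tolerance` below the AUC measured at training time."""
    return (baseline_auc - recent_auc) > tolerance
```

A scheduled batch job could evaluate this check after each scoring run and submit a retraining job only when it returns `True`.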

If you have many parallel tasks, each of which can be handled by a single VM, Azure Batch is a great tool for this type of workload. Azure Batch Shipyard provides code-free job configuration and creation on Azure Batch with Docker containers. We can easily include Apache Spark and H2O.ai in the Docker image and use them with Azure Batch Shipyard. In Azure Batch Shipyard, each model retraining or batch scoring run can be configured as a task. This type of job, consisting of several separate tasks, is also known as an “embarrassingly parallel” workload, which is fundamentally different from distributed computing, where communication between tasks is required to complete a job. Interested readers can read more in this wiki.
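The distinction can be illustrated locally with a toy example. In the sketch below, each task scores its own partition and never reads another task's state or output, which is what makes the job embarrassingly parallel. Threads stand in for the separate containers that Azure Batch would run, and `score_partition` is a hypothetical placeholder for loading a model and scoring one input file.

```python
from concurrent.futures import ThreadPoolExecutor


def score_partition(rows):
    """Stand-in for one batch-scoring task (hypothetical model: y = 2x)."""
    return [2.0 * x for x in rows]


def run_independent_tasks(partitions, max_workers=4):
    """Run each task on its own; tasks share no state and do not communicate."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() returns results in the same order as the input partitions.
        return list(pool.map(score_partition, partitions))


results = run_independent_tasks([[1, 2], [3], [4, 5, 6]])
```

Because no task depends on another, adding workers (or VMs) shortens the job without any change to the task code.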

If the batch processing job needs a cluster for distributed processing, for example, if the amount of data is large or it’s more cost-effective to use a cluster, you can use AZTK to create a Docker-based Spark cluster. H2O.ai can be easily included in the Docker image, and the process of cluster creation, job submission, and cluster deletion can be automated and triggered by an Azure Function App. However, with this method, users need to configure the cluster and manage container images themselves. If you want a fully-managed cluster with detailed monitoring, an Azure HDInsight cluster is a better choice. Currently we can use the Azure Data Factory Spark Activity to submit batch jobs to the cluster. However, it requires having an HDInsight cluster running all the time, so it’s mostly relevant in use cases with frequent batch processing.

Table 3 shows a comparison of the three ways of running batch processing in Spark where H2O.ai can be easily integrated in each computing environment.

Online Scoring

Online scoring means scoring with a short response time, so it is also referred to as real-time scoring. In general, online scoring deals with single-point or mini-batch predictions and should use pre-computed, cached features when possible. We can load the ML models and the relevant libraries and run scoring in any application. If a microservice architecture is preferred to separate concerns and decouple dependencies, we recommend implementing online scoring as a web service with a REST API. Web services for scoring with an H2O ML model are usually written in Java, Scala or Python. As mentioned in the Model Development section, the saved H2O model is in the MOJO format, and the h2o-genmodel.jar file is generated together with the model. Web services written in Java or Scala can use this JAR file to load the saved model for scoring, while web services written in Python can call the Python API directly to load the saved model.
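A scoring web service of this kind can be sketched with the Python standard library alone. The example assumes a JSON request body of the form `{"rows": [...]}` and takes the scoring logic as an injected function `score_fn`, which in a real service would wrap the loaded H2O model; the request shape, port, and all names here are hypothetical choices for illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def handle_scoring_request(body: bytes, score_fn) -> bytes:
    """Parse {"rows": [...]} and return {"predictions": [...]} as JSON bytes."""
    rows = json.loads(body)["rows"]
    return json.dumps({"predictions": score_fn(rows)}).encode("utf-8")


def make_handler(score_fn):
    """Build a request handler class bound to the given scoring function."""
    class ScoringHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            length = int(self.headers.get("Content-Length", 0))
            payload = handle_scoring_request(self.rfile.read(length), score_fn)
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(payload)))
            self.end_headers()
            self.wfile.write(payload)
    return ScoringHandler


def serve(score_fn, port: int = 8080):
    """Start the service; blocks until the process is interrupted."""
    HTTPServer(("0.0.0.0", port), make_handler(score_fn)).serve_forever()
```

Keeping the request parsing in `handle_scoring_request` separates it from the HTTP plumbing, so the same function can be reused behind a different web framework or container entry point.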

Azure provides many choices to host web services.

Azure Web App is an Azure PaaS offering to host web applications. It provides a fully-managed platform which allows users to focus on their application. Recently, Azure Web App Service for Containers, built on Azure Web App on Linux, was released to host containerized web applications. Azure Container Service with Kubernetes (AKS) provides an effortless way to create, configure and manage a cluster of VMs to run containerized applications. Both Azure Web App Service for Containers and Azure Container Service provide great portability and run-environment customization for web applications. The Azure Machine Learning (AML) Model Management CLI/API provides an even simpler way to deploy and manage web services on ACS with Kubernetes. Table 4 compares the three Azure services for hosting online scoring.

Edge Scoring

Edge scoring means executing scoring on internet-of-things (IoT) devices. With edge scoring, the devices perform analytics and make intelligent decisions as soon as the data is collected, without having to send the data to a central processing center. Edge scoring is important in use cases where data privacy requirements are high or the desired scoring latency is very low. Enabled by container technology, Azure Machine Learning, together with Azure IoT Edge, provides easy ways to deploy machine learning models to Azure IoT Edge devices. With AML containers, using H2O.ai on the edge takes minimal effort. Check out our recent blog post titled Artificial Intelligence and Machine Learning on the Cutting Edge for more details on how to enable edge intelligence.

Summary

In this post, we discussed a developer’s journey for building and deploying H2O.ai-based solutions with Azure services, and covered model development, model retraining, batch scoring, online scoring and edge scoring. Our AI development journey in this post focused on H2O.ai. However, these learnings are not specific to H2O.ai and can be applied just as easily to any Spark-based solution. As more and more frameworks such as TensorFlow and Microsoft Cognitive Toolkit (CNTK) are enabled to run on Spark, we believe these learnings will become more valuable. Understanding the right product choices based on business and technical needs is fundamental to the success of any project, and we hope the information in this post proves useful in your project.

Daisy & Abhinav