As Large Language Models (LLMs) rapidly gain traction across diverse applications, the need to evaluate and compare these models comprehensively has never been more critical. At H2O.ai, democratizing AI is core to our ethos, and in that spirit we are thrilled to introduce H2O EvalGPT, a tool designed for the efficient and transparent evaluation of LLMs.

Why Evaluate LLMs?

With the surge in adoption of foundation models and their fine-tuned variants, LLMs now span a wide spectrum of capabilities and potential use cases. Some excel at summarizing content, while others shine at question answering or reasoning tasks. These varying strengths make it challenging to select the most suitable LLM for a specific application. It’s therefore crucial to evaluate a model’s performance on one’s own use case, so that decisions about developing or using LLMs are well informed.

Introducing H2O EvalGPT

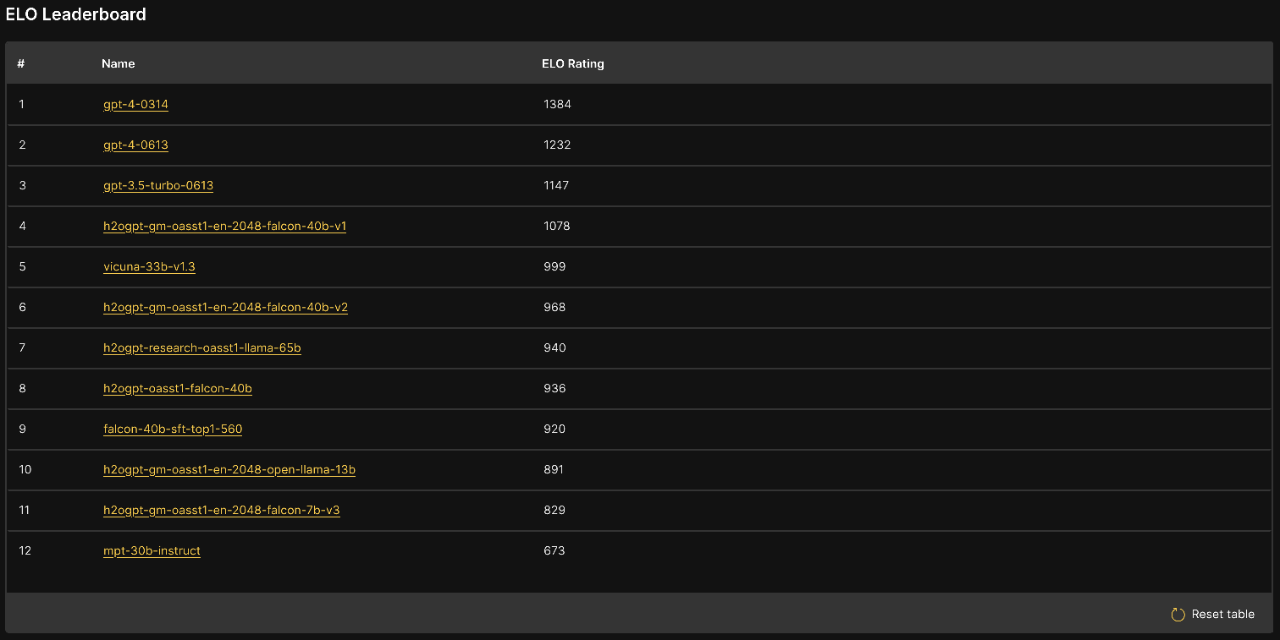

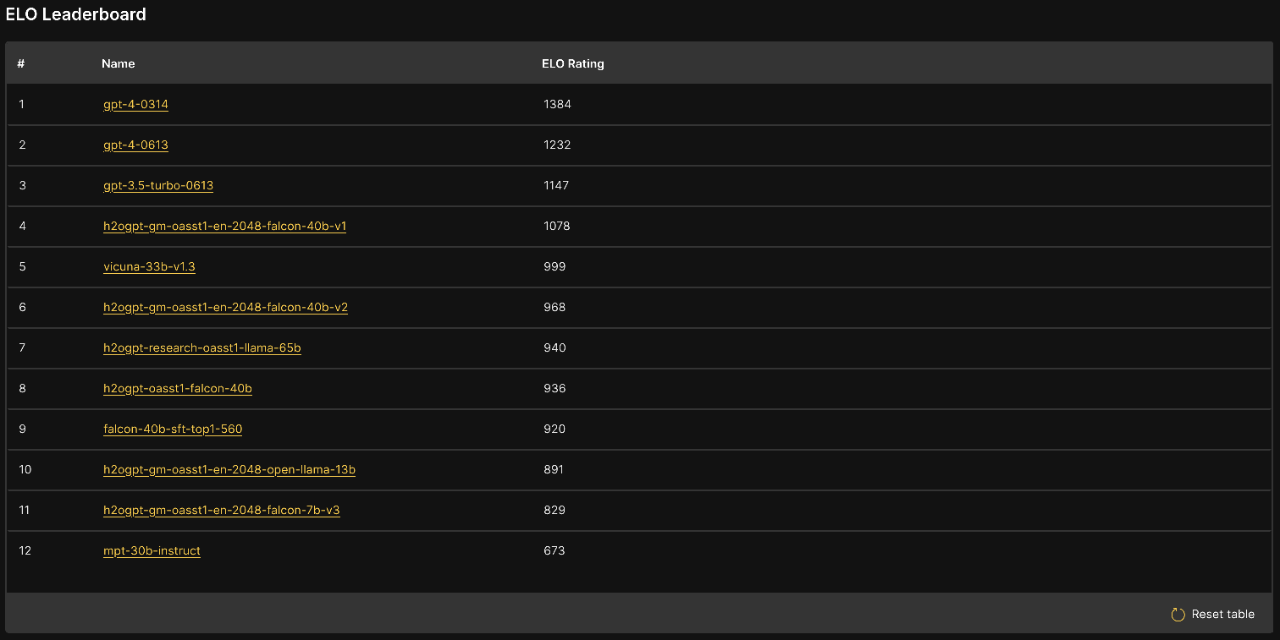

H2O EvalGPT, our open tool for evaluating and comparing LLMs, provides a platform for understanding a model’s performance across a wide range of tasks and benchmarks. If you want to automate a workflow or task with LLMs, such as summarizing bank reports or responding to queries, H2O EvalGPT’s detailed leaderboard of popular, high-performance open-source LLMs helps you choose the most effective model for that specific task.

Our system evaluates LLMs on business-relevant data from sectors such as finance, law, and banking. The leaderboard therefore shows not just which models excel at summarization in general or at understanding banking terminology, but which are adept at the combined task of summarizing banking documents. And we’re just getting started: the ability to submit your own models for evaluation and benchmarking will be available soon.

Key Features of H2O EvalGPT

- Relevance: H2O EvalGPT evaluates popular LLMs on industry-specific data, enabling an understanding of their performance in practical, real-world scenarios.

- Transparency: With an open leaderboard displaying top model ratings and detailed evaluation metrics, H2O EvalGPT ensures full reproducibility. The entire evaluation codebase will soon be open-sourced.

- Speed and Currency: Our fully automated and responsive platform updates the leaderboard weekly, significantly reducing the time it takes to evaluate model submissions.

- Scope: We evaluate models across a wide range of tasks, with new metrics and benchmarks added over time to provide a thorough understanding of the model’s capabilities.

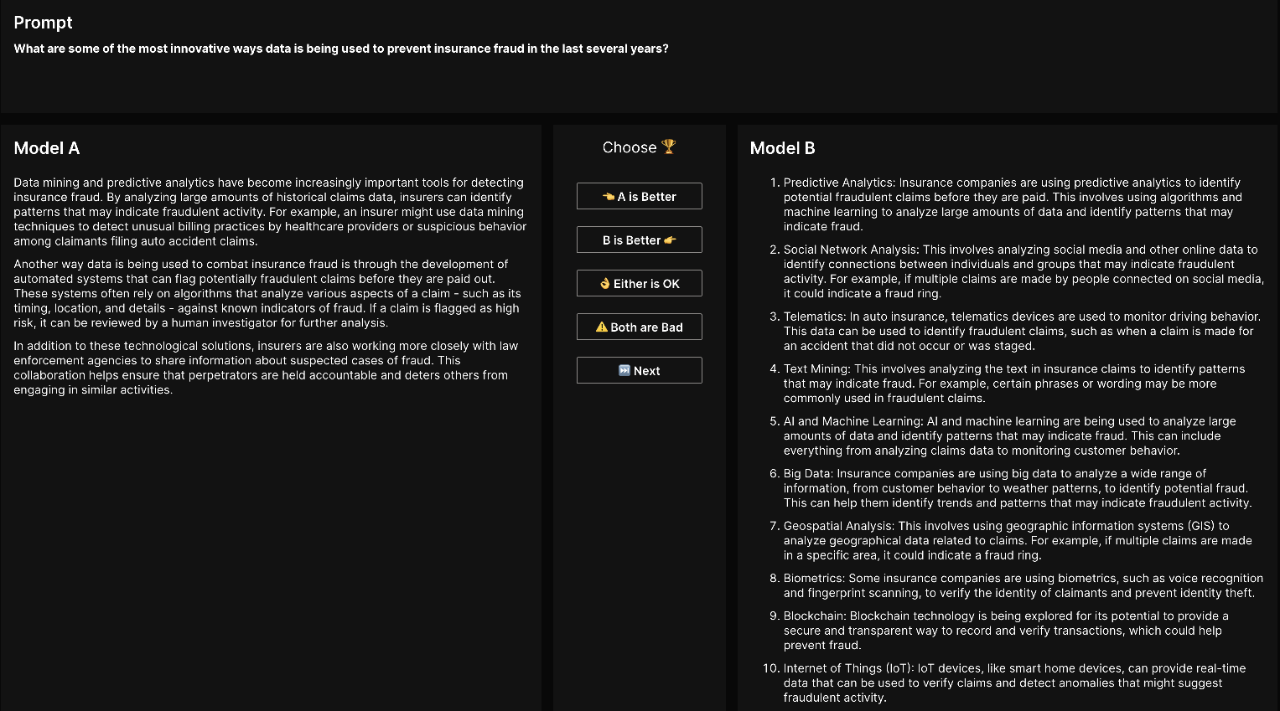

- Interactivity and Human-Alignment: H2O EvalGPT lets you run A/B tests manually, which provides further insight into model evaluations and ensures that automatic evaluations stay aligned with human evaluation.

H2O’s LLM Evaluation Method – A Fast and Comprehensive Approach

Traditional LLM evaluation has typically relied on a blend of automated metrics, such as perplexity, accuracy, BLEU, and ROUGE scores, and comprehensive benchmarking frameworks such as HELM and BIG-bench. These measures are helpful, but they carry inherent limitations: they can neglect critical aspects of language understanding and often fail to capture semantic nuances.
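To make the semantic-nuance limitation concrete, here is a minimal sketch of a simplified ROUGE-1 F1 score: clipped unigram overlap with lowercase whitespace tokenization and no stemming. It is an illustration, not the official ROUGE package, and the example sentences are invented. A paraphrase that keeps the meaning scores low, while a sentence that reverses the meaning but reuses the reference's words scores high.

```python
from collections import Counter


def rouge1_f1(reference: str, candidate: str) -> float:
    """Simplified ROUGE-1 F1 over lowercase, whitespace-separated tokens."""
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    # Counter '&' keeps the minimum count of each shared token (clipped matches).
    overlap = sum((ref_counts & cand_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


reference = "the bank reported higher profits this quarter"
paraphrase = "quarterly earnings at the lender rose"  # same meaning, different words
contradiction = "the bank reported lower profits this quarter"  # opposite meaning, same words

print(f"paraphrase:    {rouge1_f1(reference, paraphrase):.3f}")  # paraphrase:    0.154
print(f"contradiction: {rouge1_f1(reference, contradiction):.3f}")  # contradiction: 0.857
```

The contradiction outscores the faithful paraphrase by a wide margin, which is why word-overlap metrics alone are a weak signal for free-form generation.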

When automated metrics struggle to measure the richness of free-form text generation, other strategies come into play, such as the A/B testing and Elo scoring used in MT-Bench and Chatbot Arena. Despite their merits, these methods can be either resource-intensive or too simplistic for the intricacies of language understanding and generation tasks.

To address these hurdles, H2O EvalGPT combines A/B testing with automated evaluation to deliver a comprehensive assessment of a model’s capabilities. It analyzes both the quality and the accuracy of the model’s output, with close attention to comprehension and contextual relevance.

Uniquely, H2O EvalGPT delivers this rich, comprehensive evaluation quickly while keeping its approach fully transparent.

Taking a Deep Dive into H2O’s LLM Evaluation Method

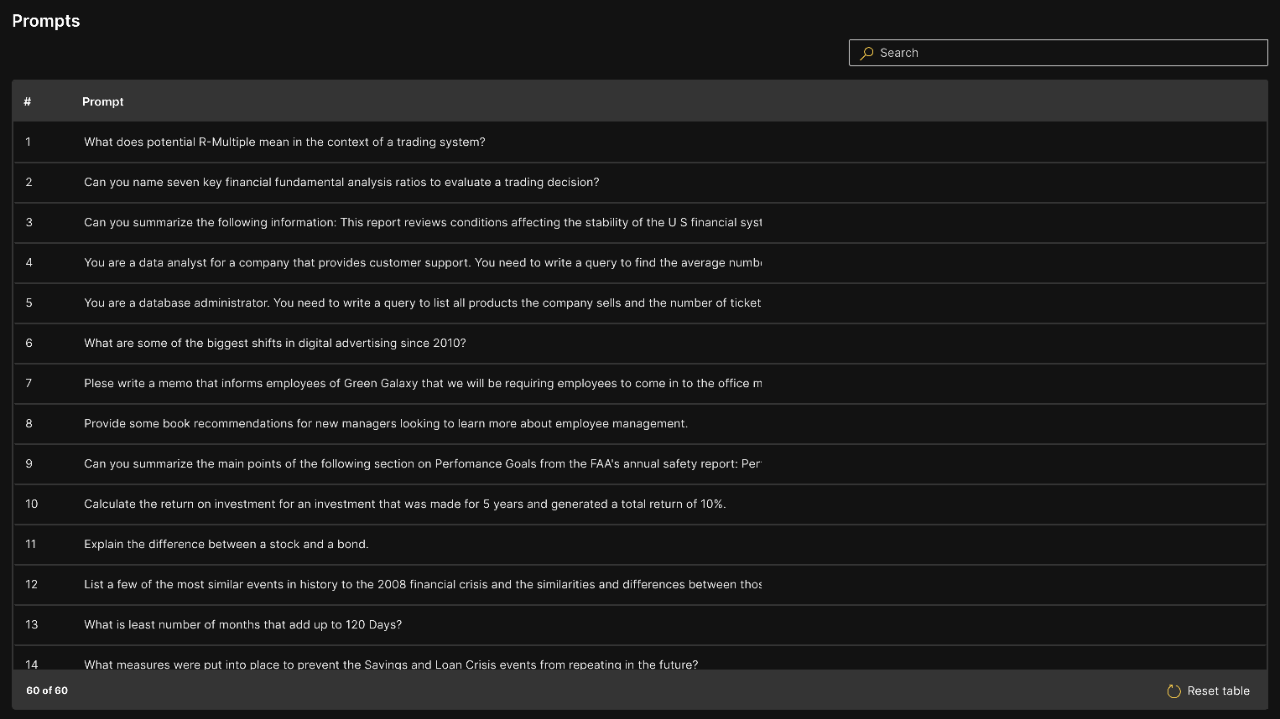

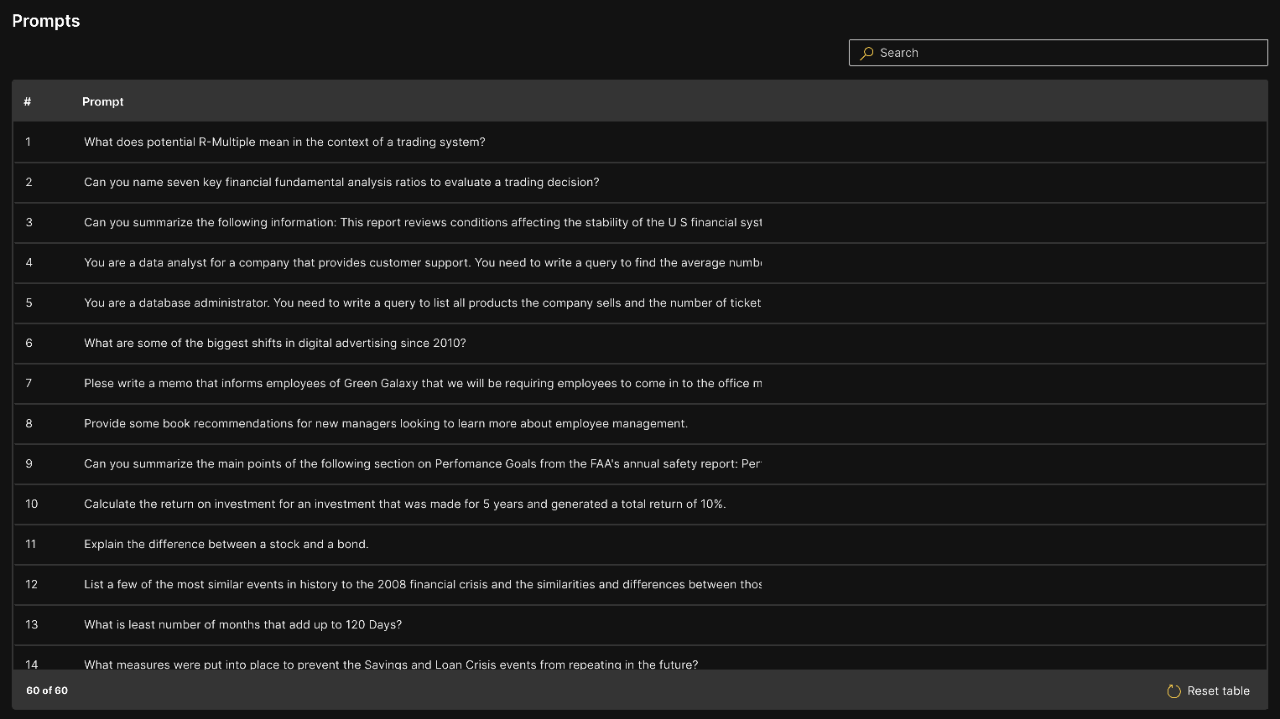

H2O EvalGPT’s evaluation methodology is built on the Elo rating system: Elo scores are computed from the results of A/B tests between models. We use a diverse test set of 60 prompts spanning various categories, which sets up a round-robin competition among all models on the leaderboard.
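To illustrate how A/B outcomes turn into Elo scores, here is a minimal sketch of the standard sequential Elo update. The K-factor, scale, base, and initial rating are assumptions chosen for illustration rather than confirmed H2O EvalGPT settings, and the model names and game results are hypothetical.

```python
from collections import defaultdict

# Assumed Elo parameters for illustration; not confirmed H2O EvalGPT settings.
K = 4
SCALE = 400
BASE = 10
INIT_RATING = 1000.0


def compute_elo(games):
    """Replay (model_a, model_b, outcome) games in order and return final ratings.

    outcome is 1.0 if model_a's response won, 0.0 if model_b's won, 0.5 for a tie.
    """
    ratings = defaultdict(lambda: INIT_RATING)
    for model_a, model_b, outcome in games:
        # Expected score of model_a under the logistic Elo model.
        expected_a = 1 / (1 + BASE ** ((ratings[model_b] - ratings[model_a]) / SCALE))
        # Zero-sum update: the points model_a gains, model_b loses.
        delta = K * (outcome - expected_a)
        ratings[model_a] += delta
        ratings[model_b] -= delta
    return dict(ratings)


# Hypothetical judged games between three hypothetical models.
games = [
    ("model-x", "model-y", 1.0),
    ("model-y", "model-z", 0.5),
    ("model-x", "model-z", 1.0),
]
ratings = compute_elo(games)
for model, rating in sorted(ratings.items(), key=lambda item: -item[1]):
    print(f"{model}: {rating:.1f}")
```

Because every update is zero-sum, the ratings always sum to the number of models times the initial rating; only their spread carries information.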

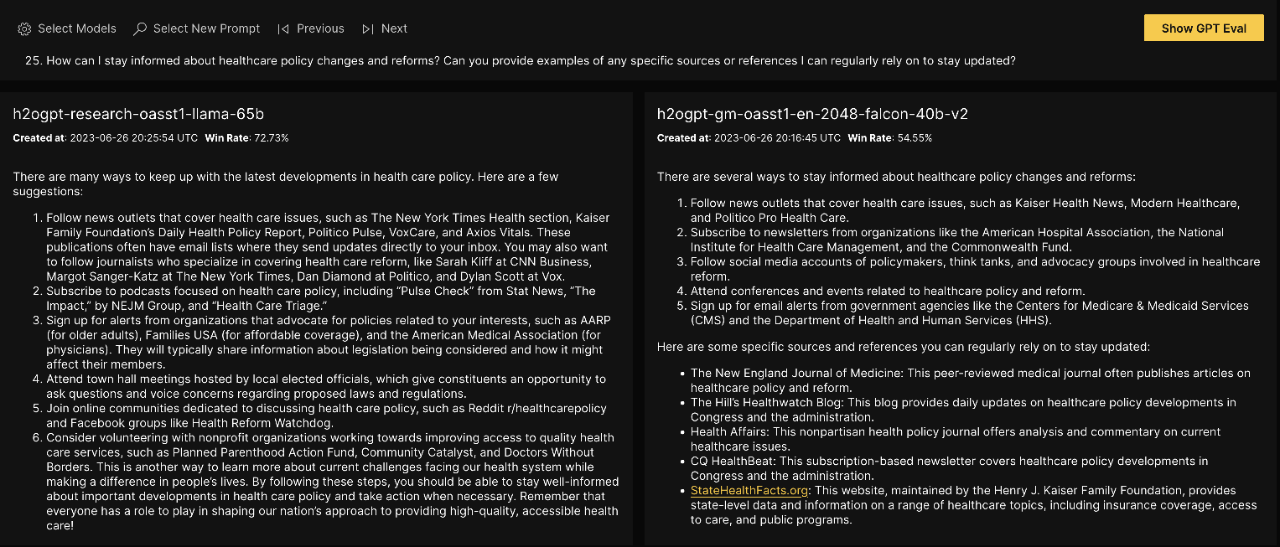

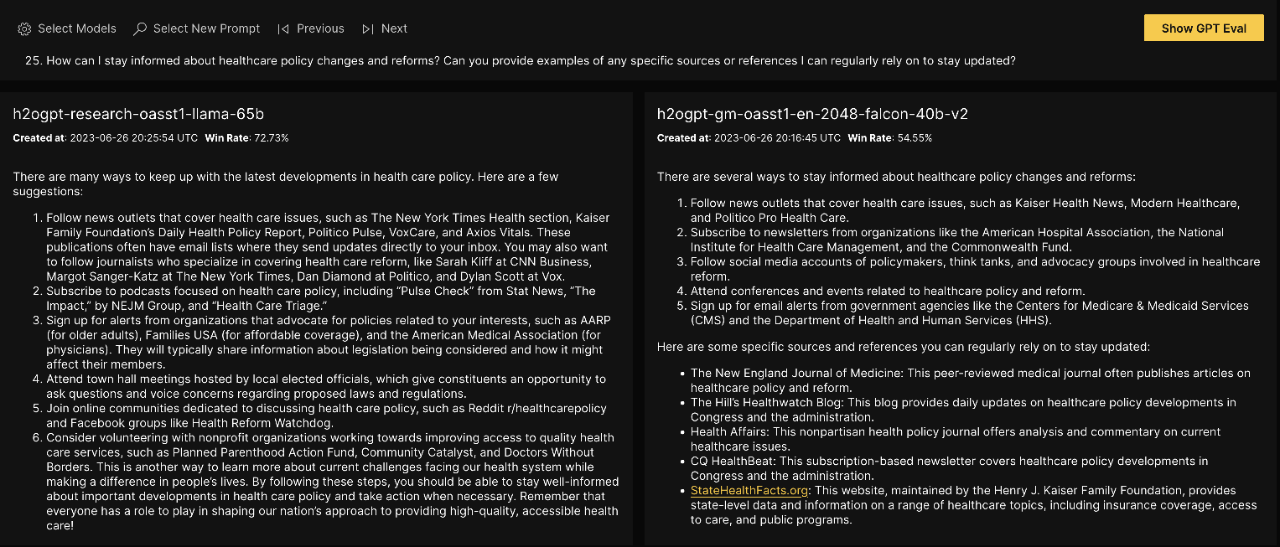

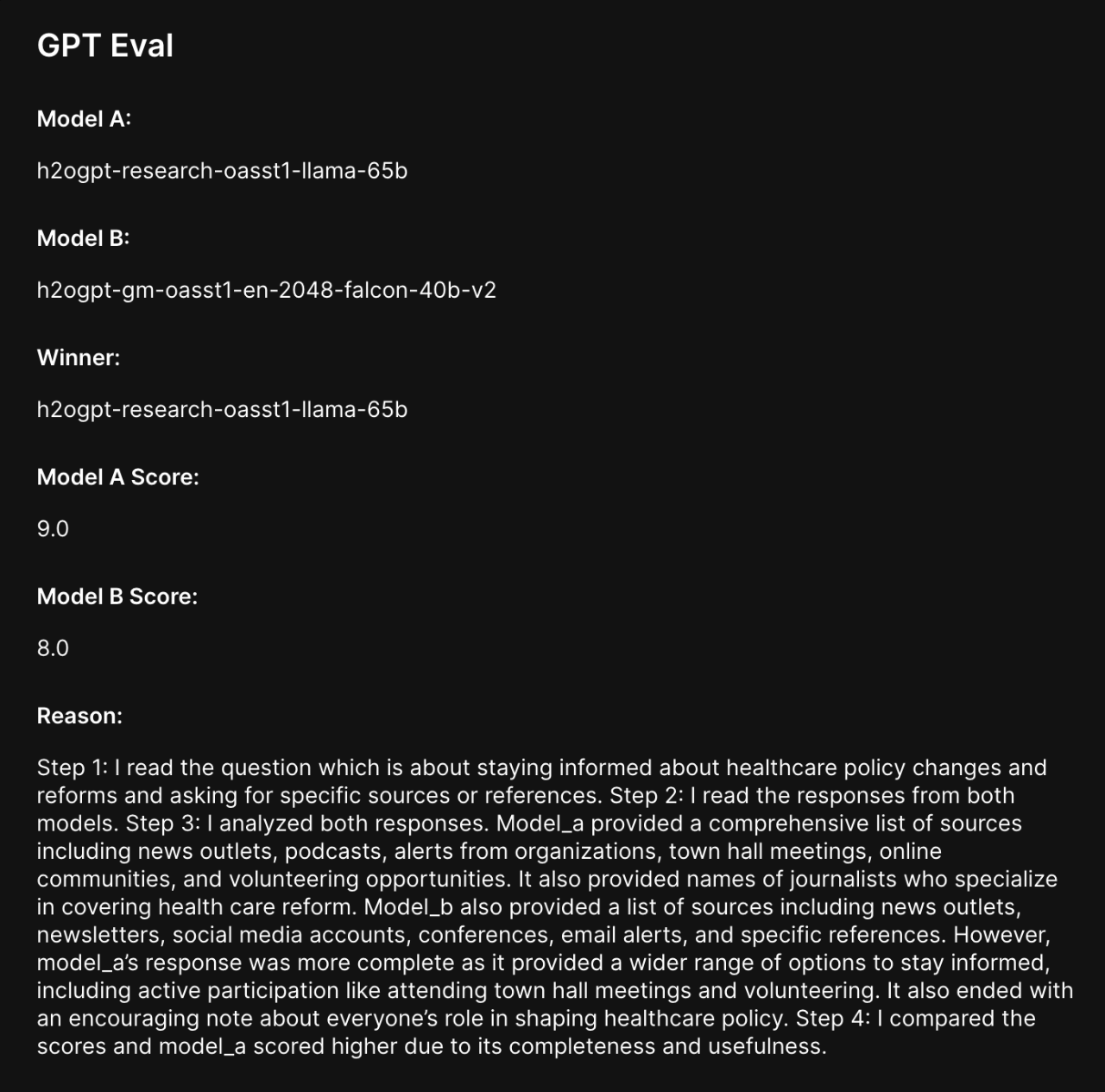

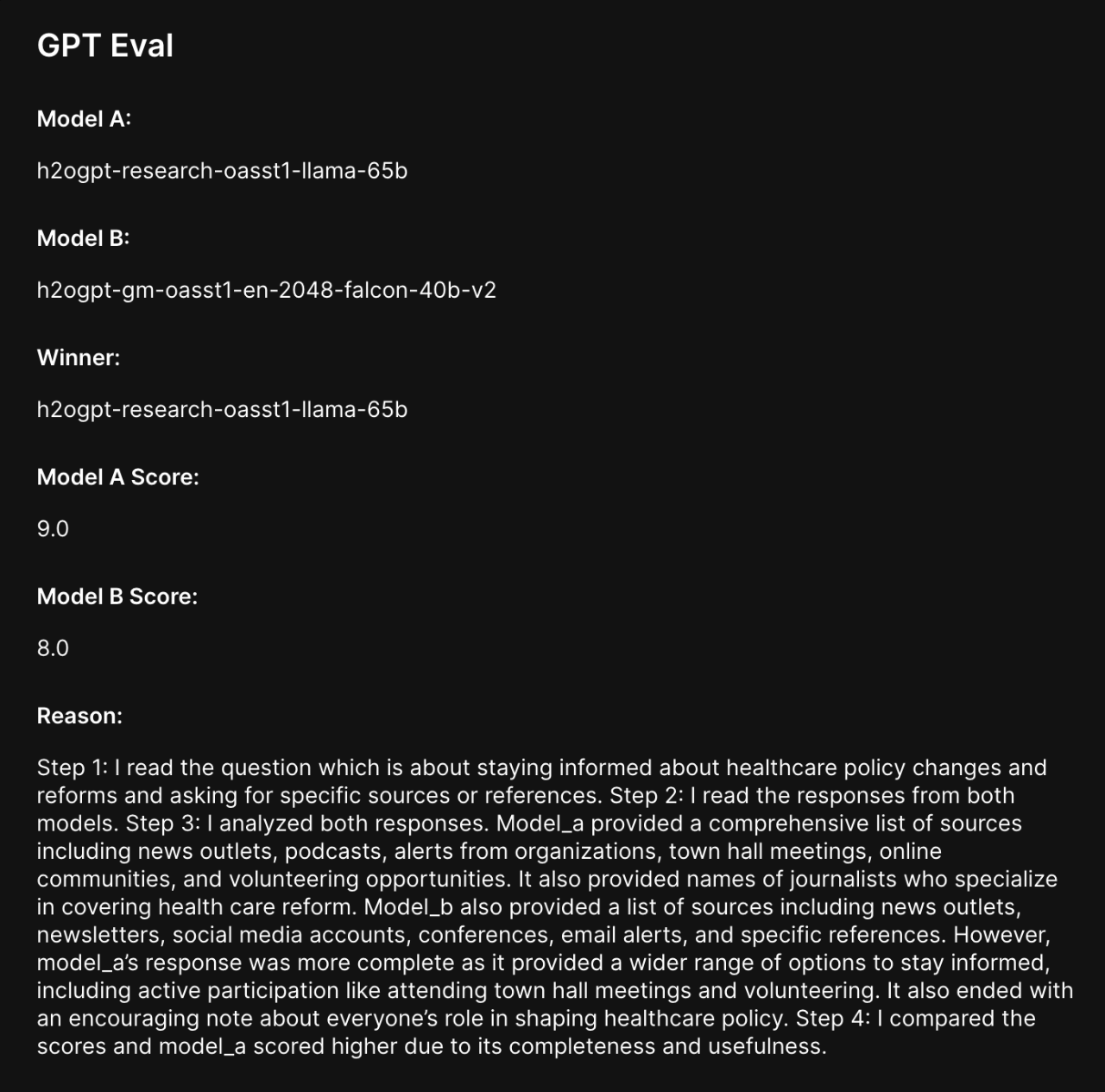

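To keep the evaluation fair, the order of all possible games is shuffled, and the positions of Model A and Model B are randomized before each game is sent to GPT-4 for judging. Shuffling matters because sequential Elo updates depend on the order in which games are played, and randomizing positions guards against the judge favoring an answer because of where it appears, a position bias documented for LLM judges. Each model’s final score is the median of its Elo scores across 1,000 bootstrap rounds.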
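The two fairness steps and the bootstrap can be sketched together as follows. This assumes that a bootstrap round resamples the judged games with replacement (which also randomizes their order) and recomputes Elo from scratch; the post does not spell out the resampling scheme, so treat that, the Elo parameters, and the stand-in judge that always prefers a hypothetically "stronger" model as assumptions.

```python
import itertools
import random
import statistics
from collections import defaultdict

# Assumed Elo parameters for illustration; not confirmed H2O EvalGPT settings.
K, SCALE, BASE, INIT_RATING = 4, 400, 10, 1000.0


def schedule_games(models, prompts, rng):
    """Pair every two models on every prompt, randomize A/B sides, shuffle the order."""
    games = []
    for prompt in prompts:
        for first, second in itertools.combinations(models, 2):
            # Randomize which model's response the judge sees as "A".
            model_a, model_b = (first, second) if rng.random() < 0.5 else (second, first)
            games.append((prompt, model_a, model_b))
    rng.shuffle(games)
    return games


def compute_elo(results):
    """Sequential Elo over (model_a, model_b, outcome); 1.0 = A wins, 0.5 = tie."""
    ratings = defaultdict(lambda: INIT_RATING)
    for model_a, model_b, outcome in results:
        expected_a = 1 / (1 + BASE ** ((ratings[model_b] - ratings[model_a]) / SCALE))
        delta = K * (outcome - expected_a)
        ratings[model_a] += delta
        ratings[model_b] -= delta
    return ratings


def bootstrap_median_elo(results, rounds=1000, seed=0):
    """Median Elo per model over bootstrap resamples of the judged games."""
    rng = random.Random(seed)
    samples = defaultdict(list)
    for _ in range(rounds):
        # Sampling with replacement also puts the games in a random order.
        resample = rng.choices(results, k=len(results))
        for model, rating in compute_elo(resample).items():
            samples[model].append(rating)
    return {model: statistics.median(values) for model, values in samples.items()}


rng = random.Random(42)
models = ["model-x", "model-y", "model-z"]  # hypothetical models
prompts = [f"prompt-{i}" for i in range(60)]  # 60 prompts, as in the post
schedule = schedule_games(models, prompts, rng)

# Hypothetical stand-in for GPT-4 verdicts: the "stronger" model always wins.
strength = {"model-x": 3, "model-y": 2, "model-z": 1}
results = [(a, b, 1.0 if strength[a] > strength[b] else 0.0) for _, a, b in schedule]

medians = bootstrap_median_elo(results, rounds=1000)
for model, score in sorted(medians.items(), key=lambda item: -item[1]):
    print(f"{model}: {score:.1f}")
```

Taking the median over many resampled orderings damps the order sensitivity of sequential Elo, so a model's score reflects its overall record rather than when its wins happened.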

As the A/B tester, H2O’s evaluation scheme uses GPT-4-0613, which assigns scores to the models’ responses. GPT-4 is required to demonstrate its understanding of the task and to give a detailed reason for its evaluation; this reasoning is displayed alongside the result, which keeps the assessment systematic and structured.
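One way to constrain a judge as described is to ask for a restatement of the task, detailed reasoning, and then scores in a fixed, parseable format. The sketch below builds such a prompt and turns the reply into a game for the Elo step. The template wording, the 1 to 10 scale, and the helper names are hypothetical, since the post does not publish H2O's actual GPT-4 prompt, and the API call to GPT-4-0613 is omitted.

```python
import re

# Hypothetical judge template: the post does not publish H2O's GPT-4 prompt,
# and the 1-10 scale is an assumption made for this sketch.
JUDGE_TEMPLATE = """Two assistants answered the same task.
First restate the task in one sentence to show that you understand it.
Then explain in detail the strengths and weaknesses of each answer.
Finish with two lines in exactly this form:
Score A: <integer from 1 to 10>
Score B: <integer from 1 to 10>

Task:
{task}

Answer A:
{answer_a}

Answer B:
{answer_b}
"""


def build_judge_prompt(task, answer_a, answer_b):
    """Fill the template; answers are assumed to be in already-randomized A/B order."""
    return JUDGE_TEMPLATE.format(task=task, answer_a=answer_a, answer_b=answer_b)


def parse_scores(judge_output, model_a, model_b):
    """Turn the judge's final scores into a (model_a, model_b, outcome) game for Elo."""
    scores_a = re.findall(r"Score A:\s*(\d+)", judge_output)
    scores_b = re.findall(r"Score B:\s*(\d+)", judge_output)
    if not scores_a or not scores_b:
        raise ValueError("judge output is missing a score")
    # Use the last occurrence so that scores quoted inside the reasoning are ignored.
    score_a, score_b = int(scores_a[-1]), int(scores_b[-1])
    if score_a > score_b:
        outcome = 1.0
    elif score_a < score_b:
        outcome = 0.0
    else:
        outcome = 0.5
    return model_a, model_b, outcome


judge_prompt = build_judge_prompt(
    "Summarize this bank report.", "Summary from model-x", "Summary from model-y"
)
# judge_prompt would be sent to GPT-4-0613 here; this reply is made up for illustration.
fake_reply = "The task is to summarize a bank report. A is more complete.\nScore A: 8\nScore B: 6"
print(parse_scores(fake_reply, "model-x", "model-y"))  # ('model-x', 'model-y', 1.0)
```

Placing the scores at the end, after the restatement and reasoning, lets the explanation be displayed to readers while the verdict stays machine-readable.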

Getting Started

You can see evaluations of popular models now at H2O EvalGPT!

To help you understand and reproduce these results, all test prompts, as well as every model’s response to every prompt, are displayed and searchable.

Furthermore, GPT-4’s evaluation is shown for each A/B test.

Finally, you can explore the A/B testing method and contribute to it.

References

- Papineni et al., BLEU: a method for automatic evaluation of machine translation https://aclanthology.org/P02-1040.pdf

- Lin, ROUGE: A Package for Automatic Evaluation of Summaries https://aclanthology.org/W04-1013

- Liang et al., Holistic Evaluation of Language Models https://arxiv.org/abs/2211.09110

- Zheng et al., Judging LLM-as-a-judge with MT-Bench and Chatbot Arena https://arxiv.org/abs/2306.05685