Explore and learn from the first H2O Product Day event and discover more about all the latest & greatest innovations we’re making to our products and platforms.

Agenda:

H2O AI Cloud – 2:54

H2O AI Feature Store – 15:14

H2O Driverless AI – 32:20

H2O-3 – 40:25

H2O Hydrogen Torch – 54:51

H2O Document AI – 1:10:52

Model Validation – 1:28:36

H2O MLOps – 1:45:19

H2O Wave – 2:03:08

H2O AI AppStore – 2:24:22

H2O AutoDoc – 2:37:20

H2O Health – 2:45:03

H2O AI Feature Store Demo – 3:01:31

Read the Full Transcript

Welcome to the first H2O Product Day. This is our sprint release, the new series of events that you're launching, to pay for us to bring all the latest innovations that we are the company puts together all the time and take it to our customers, users and community. We are really, really happy that so many of you are going to be chatting today, and that you will be excited by all the innovations of the full lineup of products. But before we do that, for folks who might be coming in for the first time. Let me introduce ourselves. So H2O.AI is an AI first company, our mission is to democratize AI for everyone for 10 years now. And we built a whole suite of products to help data scientists, users, developers, engineers and businesses adopt and use AI to transform their business.

We're going to talk about a lot of these products today. But I want to sort of set the stage. We think of the entire AI journey for customers: going into adopting AI from scratch all the way to putting them on to production and getting value out of this. And to do that, think about tools that will help with what you make here, then the capabilities you need to operate that learning and AI model. And then innovate putting those to change using the models and your AI to change your physical and other products to even talk about today.

We'll go into the order that's already available for drivers, which a lot of human basic services integrate show open source, which is widely used by orders of longer, probably and I get a lot of new innovative products coming up as well to help you in your make journey they talk about or innovation in things like document data, which opens up a new kind of use cases to help again address the need for interfaces.

And finally, we'll cap it off with our offerings on the last which are helping to schedule models of production, or monitor them and retAIn them as needed.

And all of this is offered as part of the add on which is a one platform that brings together all these these ornaments right.

But with that I'm going to hand it over to Michelle Tanco to talk about our topic.

H2O AI Cloud

Hi everyone, my name is Michelle Tanco. I'm an H2O on the product team. And today we'll be talking to you about our end to end product and platform, H2O AI Cloud.

So what we're going to do today is first give a brief overview of what the Cloud is for anyone that is new to our MDM platform. And then we're gonna talk about what's been new and released in the Cloud the last three months, and some exciting features that we think you'll be interested to know about that's coming in the next three months.

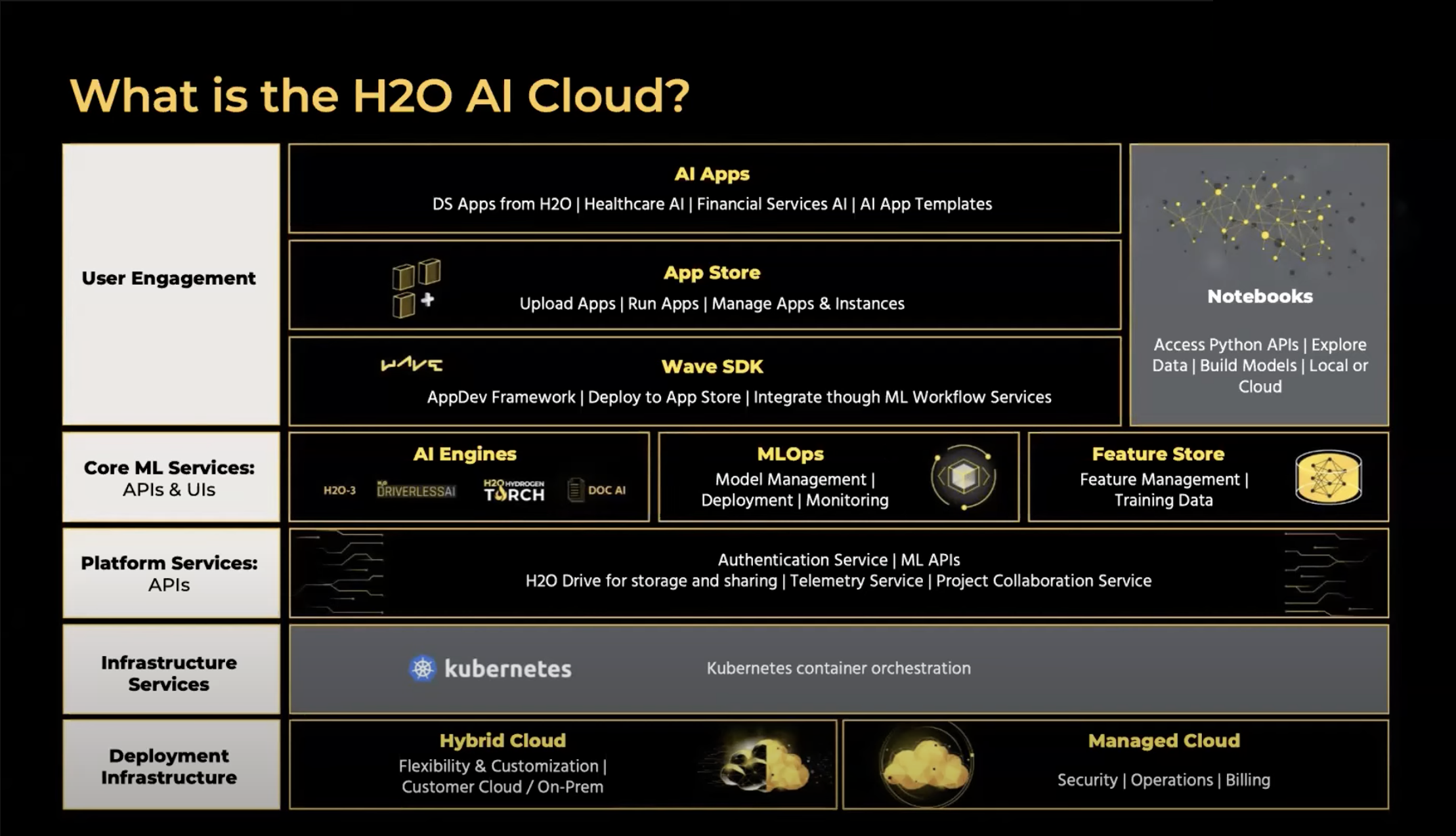

So what is the H2O AI Cloud? I'll just give you a brief overview of what it is. Our end to end platform allows different users in the AI journey to build models, deploy models, understand models, and then build applications for using these models in production. So you'll hear from many different product teams today, from our AI engineers who build your models to ML Ops teams or deploying them. But what I'm going to be focusing on in this first session is more on our platform services and the end to end glue and the end to end platform that allows you to get into the AI class.

The H2O AI Cloud product is available to customers in two main ways. The first is the Hybrid Cloud. So this is for our customers who have strong IT teams, really strong, specific details for security, and they want to have their platform in their own environment - this can be in your Cloud of choice. So in your own private VPC, AWS or Azure can also be prem on prem with anything where Kubernetes will run.

For other customers, the Managed Cloud might be a better solution. So this is ideal when you don't necessarily have an infrastructure or IP team to run your Kubernetes cluster. And you want H2O to handle all of this. So our Managed Cloud environment, it's the same capabilities as the Hybrid Cloud. Both of them are feature parity, except for it's all managed by H2O. So it is better for your end users, the admin, infrastructure, Kubernetes. And all that is handled by H2O, and you have access to your own private environment, or building your own models and using the platform.

So what's new in the H2O AI Cloud? There are some new things that have come out in the last couple of months that we wanted to show you. The first is our platform API token. So a lot of end users will interact with our platform from the UI or the front end. But a lot of our data scientists are more likely to be using clients in the background. So we have a new easy way to integrate with all of our Python clients securely using a single code, a single platform token. So let me show you right now a demo on how that works.

So I'm going to go to my Cloud environment, the H2O AI, and log in.

And after logging in, I'm going to click on my name on the top and go to the CLI and API Access page. And this page has all the information that I need to securely access from my local workstation. So I'm going to specifically go to the API section, where I can see the different information that I need to run from my local machine. So I can connect to the Cloud in my Jupyter notebooks for building models, or maybe in my favorite IDE like pi charm or VS code, if I'm building an app. So what I'm going to do is go to Jupiter, and go ahead and run one of my tutorials. This is Jupiter running on my local machine. And then I'll go to a notebook that is on my local machine for connecting to Driverless AI.

So other than importing my libraries, what I do to connect is use the information that I found on my platform page to securely connect to this environment.

So go ahead and run the Connect command. And you'll see that we're using a little bit of security. So we don't put our token directly in our notebook. But I have a link that I can click here that will take me to log into the Cloud. And it will give me my personalized access token that can use this token. And I'm now directly connected to my AI engine manager. And I can go ahead and start connecting to Driverless AI using AutoML connect H2O-3 for building models, and so forth. So this works for all of our API's, whether you want to build models, or you're deploying models with MLOps, or if we start using features there, which we'll talk about today. But you have one token that you can use throughout all of your notebooks and all of your code to start developing from your local machine and securely connecting to your platform.

Alright, and then the next thing I want to talk about has come out recently is specifically for our Managed Cloud customers at this point, and I'm going to talk about H2O drive. So I'm going to switch the environment page, thank you, and go to my manage Cloud environment. So here I have an instance of H2O Managed Cloud, that is only for me, it's my internal environment. And again, for each customer, it is a fully dedicated environment. And what I'm going to do here is use the H2O-provided object store to upload data once into the Cloud from my favorite location, and then I can access it in Driverless AI or other tools. So here, I'll go to the Drive app. And I'll go ahead and open up the application.

Alright, so here I can see a couple of datasets that I uploaded ahead of time. I can add a new data set I can connect to my favorite connector, whether it's S3 or Snowflake or upload files from my local machine. But this is one place to import data which I then use throughout the Cloud. So if I then go into an instance of Driverless AI, it will show me the datasets that I've uploaded in Drive and allow me to directly use those.

With a new profile, we can use the connectors tab to connect securely to my object store or database of choice. And my credentials are saved securely for me. So every time I want to connect to my S3 bucket, I don't need to put in my access token. By logging into the app, it knows who I am. And I can securely have access to pull in my data.

So after we bring these datasets in, we can then either share them with other users by creating a secure link, or we have access to these other products.

Now, we're going to talk about what's coming next in the H2O AI Cloud. And the first thing I'm excited to share with you is our new homepage for the Add Cloud platform. So if you're an HR Cloud user, you might be very familiar with the AppStore page, which is our current landing page for the ad Cloud. But we have a new homepage, which our goal is to be able to get all users exactly where they want to go as fast as possible. So this is the first of many versions that we will be sharing with you. And it is available today only internally, this is a sneak preview. So expect this in the next couple of releases. And within the next quarter.

On the homepage we can easily get into where we can build our own machine learning models. So I can easily get to Driverless AI to spin up an instance of Driverless AI for myself to build models, I can, if I'm interested in doing Market Basket models, or if I'm interested in doing Hydrogen Torch, which you hear about today, we also have access to applications that help us learn and control wave. So we'll talk about Wave later today. But it's a way to start building your own AI applications. And here are some apps that will teach us how to use Wave. In the center, I have access to all the things that I personally am doing. So everyone's center will look a little bit different. Here, I have a lot of apps running. So these are app instances that I want to get to easily, I can go directly into it. So if I want to visit the theme generator app, this application allows me to create custom color themes for Wave. And I can get there from one click of my homepage. So all of my apps are here. My pen dapsone are my favorite personal things in the AppStore. So what I want to be able to get and see is available here. And then for any developers, you'll have an imported app section where you can see apps that you've uploaded. So these are applications I and other people might be using.

At the bottom, I have access to common data science workflows. So ML labs for deploying models, I can do text specific modeling here. Model validation, which you'll hear about later allows you to understand if your model is ready for production or not, and auto insights for exploring the data. And then finally, we can always see the latest things that have been uploaded in the app store with the latest uploaded app. And it tells you what's new. So we have a lot of plans for this page. But we hope that helps everyone get into what they need to do when they're in the Cloud a little bit faster and improve your user experience.

All right. And the last thing I want to announce today, before we jump to the next product, is that I wanted to point out our documentation. So this is avAIlable from the doctor H2O AI page. But this is our new H2O AI Cloud documentation with the goal being to get you to the specific docs that you need. So this overall page gives you a little bit more information about what the Cloud is, a quickstart tutorial for how to get started, and tells you what the platform is all about and our goals here. You can also see that major capabilities, how to steal AI engines for building models. For each of these you can get into the specific features you might be interested in. So if you're coming to the Cloud, because you want to build a model and Driverless AI, then maybe you should come over to the datasets and Driverless to learn how to import your data.

So it's access to everything you might need here more information about MLOps and apps. And if you just want to know more about the Cloud in general, this is great. It gives you an idea of our different offerings, how they're managed Cloud and hybrid Cloud work. Who are our target end users? And where do they use different types of functionalities? And so forth.

Alright, so thanks for your time today. I'm gonna pass it off to the next product now. And if you have questions, please feel free to ask them in the chat.

We're using this for the National Youth League. Well, we've done the last copper that allows our customers to begin to work with me so a lot of our customers are now able to come into fully managed processes hosted and operated by H2O. And it comes with a highly secure environment that can have a customer come in bringing the data safely, launch their apps, from the ages enveloping H2O and then selling x logs into it. There's no fuss in addition to our hybrid, one of which is a lot more interesting. But the Manage store offers a very very easy and fast ramp to production to get value from it.

H2O AI Feature Store

Hi, everyone, this is Thomas Brady, Product Manager at H2O. Today I'll be talking about H2O’s Feature Store. I'll run through a couple of very quick introductory slides. And then we'll pop over to our presentation.

So the biggest thing that we want to start out with and talking about Feature Store as a new product that we announced last fall. It's a partnership that we've developed with wireless carrier AT&T. You should think of Feature Stores as a single source of truth for training data inside H2O AI Cloud.

But the word problem does Feature Store solve. So today, many customers are building training models, transforming features, making a lot of investment into all the computation and processing for making the features. But a lot of times that's kept within their own system within databases that are maintained by specific teams. And it's hard for data scientists across an organization to get access to this features. So as a result, you have lost production value that is it really repurposed for other uses, feature data that is siloed within teams. And a lot of times you don't really have a good sense of where your feature data comes from. And it's challenging for you to anticipate drift and any issues that you might have with feature data.

So what is a Feature Store? A Feature Store is the nervous system for AI organization. With it, you can transform your data into features to maximize that return on investment, that scale model production for your deployment of artificial intelligence. And with future stores you can detect and anticipate drift in your data, detect anomalies, and refresh, manage and refresh the lifecycle of your features. And you can also compare Feature Stores with feature rank to get feature recommendations with feature recommended or recommender and offer trade exchange features with feature exchange.

And the big thing that we also want to underscore is that many folks are moving from on prem into Clouds, and they're creating data Clouds. The next level is to move from data Clouds to AI Clouds. And this is where Feature Store will assist. This shows you sort of an example architecture the way a Feature Store works. So you'd have raw data coming in, or process features real time and batch that go through whatever feature engineering pipeline you might use. And then it goes into our future store where there's an online and an offline engine and a metadata registry. And from there features are consumed downstream for model train, batch predictions or real time predictions.

And the biggest thing I want to underscore is that Feature Store is designed with composable architecture. And what I mean by that is that we provide support to any feature engineering pipeline that you might have today, whether it's a Driverless AI Mojo, data, bricks, Jupiter Snowflake, or Salesforce, Einstein. Those are just a couple of examples. But really, it's designed to traverse Clouds and hybrid and on prem. So think of it as being agnostic to any feature engineering pipeline, enabling it to serve for real time inference and monitoring.

And with that, I think I'll start sharing my screen. Alright, so I'll quickly go through this demo just to talk about how Feature Store works. And give a couple of simple examples of how we envisioned Feature Store today. So like I said, in this example, we'll use Feature Store data or Feature Store features to train models and Driverless AI. So the problem here is that we want to predict flight delays, due to weather disruptions.

All the steps are laid out here. Before that, we'll register a new project and a new feature set using a data bricks notebook, which I'm showing you right here. I'm just putting the feature data into the Feature Store, cache, the retrieved raw features and batch and ingest them into drivers. So only do those portions today. Right now our datasets that we're using, in this example, our historical dataset, which we call weather offline, and simulated real time flight data, which we have in a CSV file.

So in this story, I've laid out a couple of Persona server personas that we use. In this case, I'm using superheroes from the Marvel Cinematic Universe. T'Challa is known as Black Panther, he’s a data engineer who registers and ingests the features to future store from various data sources. Auro, who you might also know as Storm, she's a data scientist who consumes features for features torque and creates a model logic using Driverless AI. And then Peter Quill, you might know Star Lord from Guardians of the Galaxy. He's a data engineer who ingest new features to the online Feature Store. And we also have Scott who will continue throughout the story as we do this, the Senate demo over and over again, he's a data scientist who retrieves data from the online Feature Store and scores. So we'll start at the very beginning here where T'Challa, aka Black Panther, sets up then connects to the H2O Feature Store.

Once we're connected, all we'll do is we'll input this example data source dataset that's offline weather data, that historical data. And then we'll also ingest the airline source, which is a large data set that simulates real time weather airline data coming in. So now that we've ingested all those two challenges, we're gonna go through and register new features, first extract the schema from the two datasets, and create a new project, then he'll register the schema as the feature set and just the feature data.

This process will go through all these steps, because especially with the airline data, it's a rather large dataset, but I'll skip ahead to through all the steps where we registered the features. So with a long set it will do as we extract the schema. Once that runs, we ended up creating a new project that we call flight delay prediction demo that has this little title called pipe predictor, create that new project. And then I register the schema as the feature set inside that project. Once that runs, you can see it creates the project name and the feature set name has all these attributes related to the feature who created, the time that it was created, and what it's used for.

After that, we registered the weather schema, which is just another set to create a feature set out of the weather view. Again, we have all the metadata related to that feature set. And then from there I go and execute the ingestion step to ingest all the actual features from your data into what Feature Store executes. When I go back in, I joined the two feature sets. Here, I'm going to join on the key date so that we can combine them and do transformations from those features.

Once we complete that, we do the same exact steps where we extract the schema from the join feature sets. And then we ingest them into the Feature Store. So first T’Challa does the same exact step where he registers the airline schema and ingests the joint features.

And then from there, we download the airline weather features as a data frame and then retrieve them as a spark trade here. And then moving on. Now we're gonna go to Aurora. Aurora, as you may know, is Storm from the X-Men, she's gonna use the feature set to build the model in Driverless AI.

So first, we said a little a couple of simple helper functions that we're using to pull out the parquet file and download and extract the Mojo so that we can pull out the features when we run the experiments and then have connection handling setup to Driverless whereas inputted my drivers URL and the access token once actually does connect to Driverless and retrieve all the features. So from here I'm going to do the driver dataset.

While we're waiting on this command to run, I'm going back to the top. We can talk about it with all of the users here. In this scenario, you can imagine that a data engineer has been managing any of the feature engineering pipelines within their organization. And at this point, they're connected to the Feature Store as a source. They're managing connection handling setup for the data scientists. And then data scientists come in and they're able to shop. You can think of them browsing through the Feature Store to find the right features that they want to use for their projects. And what we can do is also go through a quick run through of the UI and features Forbes probably.

Alright, so I'll give a real quick walkthrough of the UI. I'm already on this command set to do it. So the first thing you'll see here within the Feature Store is that there's kind of three main areas along the header, you're going to have a very simple dashboard that shows you an overview of your features, for instance, and a search bar where you can conduct advanced search, and filter and facet off of whatever dimensions that you want to. On the left hand side, you're able to see where projects and feature sets are, if they're able to manage access to projects, and manage access to feature sets. Within the Project tab, you'll see all the status of projects that are locked or unlocked, and all the winning access permissions. Within feature sets, you can view all of your feature sets, or see all the feature sets that you have access to within the Feature Store. And you can manage and view all the access requests that are coming your way and grant access to other folks in the future. So within the feature sets, I can open up one feature set here, this is another example of the weather flight delay prediction. And within this feature set, this is called the feature set detAIl page. On the top side, you have the name of the project associated with the AIrline schedules, number of features and who the owner is. Under the key detAIls and the detAIl page, you'll see the description of it was created version, the feature type, data sources were processed there was and you'll see things related to monitoring tend to live any special items where you have sensitive data such as PCI, RPI, SPI, etc, then your feature set. And on the right hand side, you actually have to be able to drill down into features and see feature metadata related to the features. So of the datatype the variable importance, what version of this feature is, and what the recent version change was, along with other metadata, such as feature statistics. So from here, we'll pop back over to our notebook process here.

Okay, looks like I'm having a little bit of issues here with this dataset, it is rather large. This worked before. So maybe what we'll do is we'll pause right here. And we'll come back and mediate a little bit and with that, I'll turn it back over.

All right, so the new animation that you're building to delete the last few months that shouldn't be easy. Edie and I wanted to bring up the next product over here quickly, which is fabulous.

H2O Driverless AI

Hi, everyone. I'm Megan Parker. I'm a data scientist at H2O and today I'm going to be talking a little bit about Driverless AI and H2-3, two of our machine learning products that H2O has. We're gonna start with Driverless AI and we're going to touch on what's new in the recent versions.

Driverless AI, as many of you know, is our automated machine learning product. It encompasses best practices from Kaggle grandmasters from our team to really reduce the time to build a model from months to hours.

It's doing a lot of automated machine learning, but it also offers playability. So while we're incorporating these best practices under the hood, we like to provide reasons around why the model is doing what it's doing explainability and fairness.

Today I'm going to be talking about some of the new features that have been added to Driverless AI and the last major release. I'll be focusing a lot today on recipe management. So while Driverless AI is really automated, there is the ability to add recipes to the experiments and just give a lot more custom integration and control. We've added a recipe management to list recipes, add recipes, and most importantly, edit them within the UI.

And this kind of leads into our second new feature transparency. This is, I think, gonna be really cool to show. But with each experiment, you'll be able to get Python code that's auto generated for the final model. And what's great about that is not only can you see everything that's happening in the experiment, but you can actually edit it. And we're going to talk about different reasons why you might want to do that and how to do that today in our demo. The other two features that were recently added that I'll talk about is the Admin API. So you created an Admin API for admins, so that they can manage entities created by other users. And then finally, we've added Mojo Shafi support router, and the C++ Mojo. So the C++ module is offered if you want to deploy in our Python environments. Most recently, we've added Chapuis reason code. If you're familiar with shaky reason codes, they add a little bit more information to the predictions and how they're being created. So in today's demo, we're going to be talking about if an employee will leave a company. So rather than just getting the probability of an employee leaving, we'll get the reasons behind it. And now that's supported it in a shockwave.

Alright.

Okay. So with that, I'm going to jump into a demo.

Right, I'm in Driverless AI right now. Today, we're going to be doing a demo of employee attrition. So I can in Driverless, let's say, I click on the details page, and see my dataset.

Here's my data set rows, I have attrition, the column that I'm going to try to predict. But I also have a bunch of different information about the employee. So we're going to use Driverless AI today to try to predict, we can target approval quit, I'm going to select my target comm which is going to be attrition.

And with Driverless AI, that's really it. I'm going to lower some knobs here for the demo purpose, but I don't have to provide much else. Driverless AI is going to, as I mentioned before, encompass all these best practices. So it's going to check for data comm type, it's going to check for leakage. Is there a drift happening? Have we split the data to check how well it's doing. And then most importantly, it's going to do automated feature engineering, automated algorithms collection and automatic model tuning. This is all happening under the hood, it just has my whole dataset starting to build models, you're gonna see that pop up here. So here's my model on the left hand side, the variable importance is in the middle. And some of the variables we're seeing are automatically generated by Driverless AI like business travel, this is the target encoding feature. And then I can see how well the model is doing on the right. It's going to keep doing this until it finds the best model, I'm going to switch over to a completed model.

This is my same employee dataset, but it's already run. And you'll notice the yellow buttons pop up once it's complete. So there'll be things like model interpretation, download that deployment objects for scoring, you can also turn the experiments. And if you click on this, there's an individual recipe button. And I'm going to click on it.

And we're going to upload it as a custom recipe.

Now, as I said, that auto generated Python code, this is going to tell me pretty much exactly what that final model is doing. So now I have a lot more transparency into the model. And most importantly, I can edit this. So I'm going to go down a little bit to talk about two of the functions that I think might be most valuable to our audience.

The first is the model. That model function. So this function is actually just that, showing you all of the parameters of the final algorithms, so I can see the light GBM model that was decided on. And here's all the parameters that were used for the final model. Now, if I was so inclined, I could edit this and do slight modifications to my model, either, I think maybe the performance may be improved, or I want to do it from the surface. Maybe, for example, increase that regularization a little bit.

The other function in this code that's really interesting is a set game. Now, this is all about feature engineering. And a lot of times we hear requests, like, you know, certain features that are engineered. We don't like you know, from a business perspective, that might not make sense. Or the other way I need this column to be in the model. Both of these can be done and even further customizations in this step change function.

I'm gonna go down a little bit. Here, I can see all of the features that were built into my model, the beginning of the features are going to be the original ones. So age has an original transformer, nothing was done to it. But I can go ahead and remove that I don't work with this particular feature and I'm going to delete it.

If I go down further, I can see the features that were engineered by Driverless AI automatically. So here I have business travel, the applied targeting coating on it. I can modify the parameters of this car. In encoding, I can remove it completely, or I can force it in its force, the model has to be right there to get more control by looking at this particular code and again, editing it, I can save this as a new recipe and activated driver, okay, it will automatically check the code to make sure it's okay to use. I'll go back to my experiments tab. And let's try to build another model with this recipe.

It's like my target column again, in nutrition. The major difference here is I'm gonna go to our experts, expert settings, click on recipes. And I'm going to ask to include a specific individual. This is my new recipe, my individual recipe, save it. And on the left hand side, you'll see that rather than the typical display here, it's all going to be custom individual. So I've customized this completely. When I launched the experiments, rather than running through all of the methods and stuff as before that feature evolution model tuning, it's actually just going to build the final model for me. So I'm gonna get a final model, with those modifications that I made in the Python code. What's great about that is I'm still going to get some the Mojo so I still have that completely independent Java object for deployment, training my model now. And once it's done, look at that, that button to get the deployment object and interpret it and all of the things that I normally would do with the Driverless AI model.

With that, I'm going to go back to my slides and talk a little bit about H2O-3.

H2O-3

H2O-3 is another machine learning product we offer. It is open source distributed in memory machine learning. So each two or three, all the algorithms are built by our engineering teams, they are actually boots, they're all built so that they're distributed.

What's great about H2O-3 is I could import unlimited amounts of data. As long as I have a cluster big enough that I can still connect to the apiary cluster using Python or R API's. So if I really like our studio, or if I have, you know, pi charm or Jupyter Notebook set up on my local computer, I can still use that to connect to the largest jewelry cluster that's running on the Cloud, around Hadoop World cetera.

All of the algorithms also support this Mojo, this dependent Java deployment object, so I can go ahead and build my model. And then I have this really low latency deployment object that's really flexible and independent. They were going to be discussing what's new and h two or three and also a little bit about how you can use each two or three in the AI Cloud and Michelle was talking about.

So I'm going to talk about this and we're going to demo it. But we have a couple of new algorithms that were created. We also have pre and post modeling additions to the platform. And I forgot to mention, we have a new ability to import Mojo's into any issue, a free version, that you have models created in older versions, you can import those models and newer h two or three versions for comparison purposes. So this can be really nice when you're thinking about whether or not to use a model. And then finally, to have explainability.

These were all added pretty recently, when it first touches on the algorithms that were added. So one of our new algorithms is called the uplift model. It's a little bit different than a traditional supervised learning model, because we're not just trying to predict the target column, but we're trying to predict the target column in relation to some treatments. So going along this example of a lead converting to a customer, I may decide maybe I can get a lead to convert if I offer a discount.

So this particular model isn't just figuring out if a lead will convert to a customer, but it's figuring out what will lead to a customer if they are targeted with a discount. So there's this context of a treatment occurring. And the output of the uplift model is going to be this graph on the right, so who's persuadable, who would actually convert if we offered a discount, who's a sure thing, they're going to convert to a customer no matter what, who's never going to. And then finally, who might have an adverse reaction to any marketing, so maybe it will cause them to go the other direction.

After I demo this for a minute. The other option we've had for a while, but we recently added multiclass support for both that model. Well, that model generates rules using decision trees. These are the linear models to filter the world and then create clear text rules that you can use to predict.

So I demoed that we've also offered some pre and post modeling, so feature selection with Instagram. So this is a new algorithm that is used to determine which features are not invisible. And also a model selection tool box which is DLM specific, so linear model specific, but it gives you this idea of what are the best predictors. Given the constraint that I can't have more than n predictors, and I'll show this to you.

And then finally, explainability. So we have a lot of global explainability methods available like variable important partial dependence plot, the Shapley summary plotting, showing on the left hand, we also have our local explainability through Shafilea reason code. And this is a graph for a specific route. And what's great about the shabbily reason codes is that it's also available in our Java objects. So again, if you want to not only know who's going to quit, but why, that's available in our deployments.

Alright, so let's go to a demo. I'm going to start by going to our API call that Michelle was showing, and we're going to start by creating an h2 as requested. So to do that, I'm going to go to my AI NS and on the top and click on the H2O menu. To launch a new cluster, you can click the blue button.

And what's really nice about the AI engine manager is that I don't need to know how many nodes a cluster should have or how big the node should be, I can actually just give it information about my dataset size. And it's going to tell me, so if I have 10 million rows, 300 columns, how big should this Cluster B, and it tells me I should have two nodes, each about 50 gigabytes of memory. So this helps a lot, it'll automatically optimize based on your data set size, start the cluster for you. And once I've done that, I'll get this blue hyperlink. I can click on that and see flow, which is our web based UI. I can do machine learning by pointing and clicking or I can connect to this through Python. So I'm gonna go to Jupiter and show this a little through Python and features.

First thing I'm going to do is I'm going to import my data, we're going to use the same data as before from Play turn data. And I'm going to use the Infogrames to see which predictors might be helpful, which maybe aren't relevant. I'm going to call this Instagram model. And we'll see the plot. I can see all my predictors here. And you'll notice that there's this red line.

Any features inside that red, not red line, we can consider maybe not that helpful. And there are two ways we do this. One is total information, which is really, you know, is this variable going to be used by the model? Is it predictive at all for attrition? But the other part of the plot isn't that information can be really interesting. Does this feature bring any additional information if it can get off?

If there are redundant features, we don't need to use all of them. I can see this information in a table as well. And we see total working years for example, while it has information regarding attrition, it has no net new information.

So if I take a look at a correlation matrix, I see the total working years is highly correlated with job level, monthly income near the company. So while it is relevant, maybe with all those other features avAIlable, it doesn't bring any new information for the model. This is a really easy and nice way to figure out how to filter down your features to our strong core set of features.

The other feature selection method we've recently added as model selection toolbox is nice to the linear model, but it will automatically figure out the best number of predictors up to seven, as I've sAId, so you can modify this as you want to, but you'll see a table, and we'll give you a list of the features or predictors within this limit, and the highest R squared value. So again, another easy and quick way to figure out the best features. And this time was in the context of a linear model.

Going further, let's start by building a model. So we talked about the uplift model, which gives us that prediction of a target column hit within the context of some treatment. So let's say we think we can prevent people from quitting as we say they're not, they don't have to travel frequently anymore. So that's going to be our treatment.

We're going to build uplift Random Forests estimator, it's going to be the same as any other supervised learning model, and H2O three, but we're going to add this treatment column. So we're going to try to predict with the context of whether this treatment is applied or not.

And the output when you predict is going to be a little bit different, you're gonna get this uplift prediction. So that is how much of the prediction changes if the treatment was applied. The negative value says they're less likely to quit if they didn't have to travel frequently. So not only are we getting a prediction of churn, but we're also getting a sense of who we could target with maybe the incentive of less travel, append employees.

The other new algorithm that we can show today or will fit well, if it's going to generate a federal rule for me, and I can pull that rule about using a blue important function. So I've asked for no more than five rules. And the length, as a rule, can't be more than five either. And here's my rules, and I can see them in a really clear format. So if the overtime is now your total working years is greater than two and a half, and they've been at the company less than 30 years, they have allowed, likely less likelihood of churning.

Now let's build some models. Let's explain them. So I have my DVM model here, I'm going to build it, a tool that offers a lot of out of the box explainability. So I can look at a variable importance plot, I can do partial kinds of blocks, Alexei.

And see, for example, how the prediction changes for different job roles or get certain job roles more likely to cause a term. We can also call this explain function. And this is going to run everything automatically. So I get this report right away, it'll show me how the model is performing on a dataset. What does variable importance look like? Shapley, partial dependence plots, and so on. So this is really nice, if you just want a kind of overview of the model really quickly. This is a relatively new feature as well.

So now that we've talked about some of the new features, I'm gonna go back to our app store and talk about what we can do with those models. Now that we're in this hyper Cloud. The first thing I'm going to do is I have an instance running Autodesk so we're gonna go and visit it.

AutoDoc is automated documentation of my model. Now this comes out of the box of Driverless AI, I'm sure some of you have seen this download Autodesk button. But you can also get the AutoDoc within the hybrid Cloud through this H2O AutoDoc app. So I can ask to create a new AutoDoc and select it from an H2O-3 moja. Yeah, but it's going to be an automatic documentation with experiment overview, a few additional things like.

Like, feature importance, the final model, how to do information about how to reproduce this model. And partially, they also offer additional information, the appendix depending on the algorithm. And I can download this in different formats. So that's one of the nice features of using the hybrid Cloud, which was great. The other thing I can do is deploy the model. So I made the Math Model, I have a mo job, I can use the MLS platform, which we'll talk about more later today, to automatically get a REST API that can be paying to get predictions in real time, I can create a deployment I've already done that using the H two or three Mojo. And I can click on that and you'll see a sample curl request that I can pay to get predictions for bleachers. This is one of the nice features as well as using the hybrid Cloud. Now that I have the Mojo I have a really quick and easy way to deploy the model and manage it.

I'm going to jump and talk a couple more slides about what's coming. And I'll pass it to our next speaker. So what's coming soon at row three? With JVM improvements, we're adding more robustness to outliers. And we're adding interaction constraints.

And then another thing we're adding to DLM was an influence diagnostics. So how much does one record influence the model coefficients? Do outliers really cause a model change significantly? For post modeling, we're adding the ability to import photos into newer H2O-3 versions. So if you have an older model with a poll, Joe, you can import them into newer H2O-3 versions in the future. And we're adding more motor support to our new models.

Just to touch quickly on our GBM improvements, we are adding a uniform, robust spinning. If any of you are building models, you might notice that if you have a few columns, range based spinning, where I'm bidding based on the range of that x axis, causes a lot of empty bins. So here I have an outlier for income. And when I flipped by range, I ended up with all these empty bins.

The uniform robust spinning is going to split the non empty bins and future iterations based on the error. So bins that have a lot of error, we're getting them wrong a lot we're going to focus on and slip. You can think of this kind of like contravening where we're rather than bidding based on bidding based on population of each of the records in each bin. But what's nice about this new bidding method is that, as you know, our tests have pretty similar performance to Pantel bidding when you have a few variables in terms of accuracy, but the time to build is much much quicker. So we have this really hopefully similar accuracy to the content bidding. It was a significant reduction in time and that'll be coming out in our next H2O-3 release.

This one, just to highlight this, is a whole bunch of information to be added to AI and digital clean law for the customers and users. We should be able to take advantage of all the new algorithms, especially the customer university that maybe should have had earlier. It's a big game changer that allows water for large enterprise customers, especially with regulated use cases, to be able to get full transparency insured. So you can take and model and as I point and edit every single customer need.

So, super excited. This is a big, big bag that I am offering to customers. And then I'm going to hand it over to the next speaker, Dimitri Gordy. You are one of our cabinet grandmasters and also manager for our Hydrogen Torch moralization product. He's going to talk about both of those.

H2O Hydrogen Torch

Welcome, everyone, I am fortunate to talk about the product which we launched quite recently, just a few months ago. So I will take a little bit of time to go through the basics about the product.

Hydrogen Torch is our deep learning back end. So it's a product which was built from the point of view of providing you with the tool to build deep learning models. And we're focusing on unstructured data as the input. So we're going to be talking, we're talking about NLP and images. And today, we're gonna be talking about simple audio data. And with Hydrogen Torch, you are enabled to build state of the art deep learning models for unstructured data you have. So we're talking not just about shipping, pre-built models and common tasks. So you will be capable of training models, to your own data and to your own downstream tasks, including all the state of the art techniques, state of the art architectures. And, of course, transfer learning as a part of it. Last but not least, we are also putting a lot of tension on the ability to deploy and bring it to production. So of course, we have it integrated with the ML ops, which you will hear about a bit later, which is our product to serve the models. So besides Driverless AI and H2O, three models serve as a way to also deploy deep learning Hydrogen Torch models and serve them in production to solve your business needs.

What are the key points of Hydrogen Torch? First of all, we're focused on building UI. So a no code interface for you to start building deep learning models right away. That will require way less headache from your side, to code everything in, because everything is already built, and well tuned and tested for you. And especially for those data scientists who have not that much experience with deep learning. Overall, this tool can be a quick start to just put the data in and start producing the models, which have very, very good performance. And probably equally important also to gain experience about how deep learning works, what are the tricks and what are the important things you can use in deep learning to get the best out of the data you have. So we also see it as a tool for learning and getting more experienced and look more experienced being able to build more accurate models with Hydrogen Torch.

We have a variety of text, images, and with the upcoming release, audio model, problem types to solve. So we're talking not only classical classification and regression, many other tasks included and this set of the tasks is being constantly extended, and of course driven by the demand in the market as well.

And mentioned the training best practices, which not only includes modern neural network art textures and transfer learning. But it also includes lots of training, ideas and training techniques which were developed in the past years, which actually bring the new neural networks to the state of the art level. And sometimes, making the best out of the training practice gets you a better result than just building a larger, heavier model or using more complicated neural network architecture.

All these parameters also, of course, require some tuning, and we provide the ability to. To select the best model to run grid search to search for the best model accuracy, and pick the most accurate model of all the possible combinations of parameters, neural network architectures, and so on. Last but not least, is inflexible deployment is an oval already mentioned, where natively deploying Hydrogen Torch models to H2O MLOps. But we also provide the ability to download a package as a Python will package over the models you build, and deploy it to your own Python require a Python environment, if needed.

And now let's jump to what's new on Hydrogen Torch. We made an official launch just a few months ago, but since then, we had one minor release and one kind of a larger release coming later this month. They both included quite a lot of changes and improvements. But I would like to mention just a few of those, kind of the major ones. The first one is a new set of object detection algorithms. With this item, I would like just to emphasize that we're enhancing the tools which are already there in Hydrogen Torch by means of trying it out against current competitions and learning on Kaggle. About the new techniques which perform better than the rest. And after each competition, we just collect the feedback from the community, we collect our own experience, and implement and add new ideas and algorithms into the tool. And that happens with object detection. So we're adding efficient depth and few other object detection algorithms, you will be able just to choose out of the list of the algorithms there without the necessity to code and test them.

Few other large changes in items listed include instance segmentation problem type, I will show it in detail in the demo. So this is a new image problem type and an extension of the 12 problem types we had before. We're adding draft camps, which is a way to explain model predictions and with the grab camps, we're introducing the engine of model explanations in Hydrogen Torch, which will be of course, expanded and improved over the next releases. We're starting with image data. But of course, we'll expand it to NLP and audio. And last but not least in the least, we're introducing deep learning for audio.

Let me just give you a quick overview of these last three points with the instance segmentation. That's a new problem type where we have several objects we would like to detect on the image. And with the instance segmentation, we're not only detecting exactly on the image they're located but we're also isolating them one from one form or another and indicating and recognizing each individual object. In this example, we're looking at the cells on an image and we need to recognize each individual cell, not only all of them together as a whole.

Let’s go through the explain ability technique we're introducing here. The examples of the grab camps applied and captured from the current hydrogen course development. On the left hand side we have two sets of the image examples where the model is supposed to classify the images to find planes on them and grab camps. Tam is an algorithm to detect the area of an image, which drives the prediction done by the model. So, with graph camps with each image, you will be able to not only receive the prediction, and for some problem times confidence, but also an attempt to explain what is the error on the image that drove the prediction of the model. In this in this left hand side example, where we're making sure that the model is trAIned on recognizing the planes and on breg background or any other features of images that can miss land or over.

Over fit the model. On the right hand side, we have examples of an image of a task called distracted driver distracted drivers, where the model is expected to predict if a driver is paying attention to the road or if a driver is having a sip of coffee or talking to a passenger. So there are 10 classes of driver behavior. And in this class is the multi class classification task.

We're looking at the grab camps. What model is paying attention to when trying to recognize the attention of the driver. And as expected, we see that the model is focusing on where the arm of the driver is, where the driver is looking at and all the important things we'd like the model to focus on for this task.

And the last point in my list was deep learning for audio. This is something we're introducing in this version, we're adding audio support for classification and regression tasks. So here you see the screenshot of an example. But let me just switch to a demo to show it to you live.

Let me just jump into the classification task and show you an example of a model. I'm sorry, let me show you the data set first. So we're talking about an example of an audio classification task. Where the task is based, is to recognize the birds based on a short recording of an audio from a rainforest. So we have quite a lot of records, each record is just the three second audio. And each recording can contAIn multiple labels, we have them coded but I think we have around 20 different species of birds, which the model is expected to get to recognize from from the audio. So in some examples, we have two birds and some one bird. And in some, we can see more.

And I have an experiment, which has already finished running for a disorder classification task. And let's look at the prediction insights over here. You see that we have actual pictures, not audios over here. And this is very much intentional, because the way we solve this task is we transform the audio into spectrograms. And then we solve it as an image task, which has quite a lot of benefits. Because whenever we convert it to an image, we can use all the modern image neural network architectures and more importantly, transfer learning from images. And that works very well even for audio when converted to spectrograms. So here we see a couple of examples of spectrograms of these recordings, we see that these words, this one in particular, was perfectly predicted. So the true label was 23, and the one we predicted, let's look at some more challenging examples we saw. So let's have a look at this one. So this one had a recording of two birds labeled one and 10. But the model predicted one, three and 10. So we have a false negative, and actually a false positive sorry, non predicted one and three and 10 will have very low confidence. So we can use the tools we have in Hydrogen Torch already out of the box with prediction insights to investigate the audio data through the spectrogram to see some of the challenging examples and potentially see which types of the recordings at least visually cause more challenges to the model.

Um, let me stop here with regards to Hydrogen Torch and take the questions in the chat and pass it to the next presenter.

So just to reiterate - folks who have not tried H2O out before, I definitely highly recommend you to come on the show at AEI and sign up for our free trial and actually get in touch.

And things in a tremendous amount of sophisticated, deep learning the link in the notebook interface. So we made it extremely easy for all our users, it has picked up their expertise in deep learning or learning to be able to take advantage of the latest latest and greatest innovations that are happening. This is a product by our caravan master key. So one of the innovations and best practices teams have been covering the product is a game changer if you're looking at a lot of these kits that Dimitri referred to earlier, to be able to use this moment. But the nice thing about this is all of this is again managed completely in an AI Cloud AI called your environment is taken care of and bound to produce, they cannot vary. So super excited about that.

H2O Document AI

Document AI is a product that we launched only this quarter, earlier in January, and this is a phenomenal traction. This opens up a completely new set of use cases, which get donated document information.

And if you're not already, use Mark Landry, who is also one of the cabinet ministers and longtime veteran of H2O. And there's innovation. Hello, thanks for coming.

Document AI is a new product that we launched a few months ago. And we have a new self service UI that sits on top of that now. In November, December when we launched, we had a nice new interface that's a self service user interface. So I'll show a little bit of that. At the end, we'll talk a little bit about what document AI is, because as we've been talking to customers about it over the last few months now there's a lot of lack of clarity, I suppose ambiguity of what we really mean with document AI, and people just meeting us in all different spots where it is. So it's been good for us to learn from speaking with some customers and learning some use cases. Beyond those we have firsthand experience with which that's accumulating as well.

So the really basic solution space, you know, the vast reservoir of untapped insights in here, your electronic documents, document images, like faxes, forms, other semi structured camera phones, we're getting documents in all different ways. And you can think of documents as an image as the old school fax, the new ones are PDFs, but they're just pictures pretty much. And so the untapped part is - definitely what we're finding out from customers - is that there's a lot of people that have these documents, and they're just sitting around. It could be that your software that you use in your ERP system, or we talk with a lot of manufacturers, it produces documentation, and it just sits there test runs, things like that, and so untapped is that there's data sitting in there that didn't necessarily go into your ERP database, your data, Lake, whatever you're using, just kind of sits trapped in those documents. And so some people are using it for validation of their database type, a nice tabular data set to make sure that it checks it out. The only other option is that it's in, it's trapped in a document. And so a validation use case is a very common one that we're seeing. So H2O document AI is designed to understand those documents, process them, and manage the process of creating these as well. So we'll take a look at that with a self service UI with the unstructured text data, unstructured, semi structured, it's sitting there, you know, in different format forms, but it's not just NLP, we're not just picking up an NLP dataset, regular text and processing it, but we're going to be very similar. And so we'll talk about a hybrid model that what really would document is underneath.

So looking here to see who benefits from this. First of what we've been talking in the last several months with customers, and so this is wide array of people, business leaders who don't really want to get into the nitty gritty of what we're doing, you know, but they really they know the documents are out there, they've got to get something done. They're already looking at an incumbent process, perhaps which is less efficient. And so we're seeing a lot of those kinds of replacement sort of things. So operational leaders all the way up to the C suite, especially the data analytics team. There's data that they know that's trapped there that they may be able to augment the model, something like that workflow users especially this is where you have an incumbent solution, someone's already sort of reading these documents by hand typing them up, getting specific information out of it. I haven't really said that yet.

But a really common use case is going to be an invoice. And then you know, if you have multiple vendors, hundreds, 1000s of vendors, and so as a lot of our enterprise customers do, every single invoice is going to be a little different. But the core information is there, we as humans kind of understand it really well. You want the invoice number, you want the purchase order date versus the date of shift versus the date, the bill is due, those are all different dates. So your basic tools will tell you that those are dates. What we're trying to do here is extract specific information that connects with your business processes. Here, the users of the tool, we're building our data scientist and labeler. So far, as we talked with customers, we're looking into how we can move that forward. But right now, I'll show you the Self Service UI in a few minutes.

And that's meant for the data scientists to build models, and labelers to help either to create the datasets up front. We're making that as easy as possible, and also reviewing them on the back end. So how do we do that? In the middle there, the document AI, self service UX again, I'll show you that in a little bit. Intelligent OCR, there's really two big building blocks that have happened here. It's more complicated that there's more than four or five that really get involved in a pipeline of handling a document. The two big building blocks are those next, the second and third bullet point. So an intelligent OCR system, OCR is optical character recognition. But there's multiple ways of doing that.

A lot of people were talking to you are kind of familiar with OCR of maybe 10 years ago, or even current OCR that just isn't really up to the kind of tasks that Dmitri just showed us are capable at of Hydrogen Torch, you know, convolutional neural nets fancy, you know, deep learning computer vision algorithms have come a long way. And they're doing a good job with OCR. But our intelligent OCR is actually a dynamic OCR system because many of the customers, maybe half, probably two thirds, have clean PDFs actually, or they have a mix, they might have some clean PDFs that have come from their own systems. But they also have incoming documents that may be through a fax or just some other kind of picture format.

And the difference is, if you've authored a document, the text is just sitting there, you don't actually have to use a computer vision algorithm to read the text, it's just part of that document, copy paste in a PDF. If it's an image, there is no text. And so our system, we built it to pattonville batches dynamically and figure out what we need, and use the state of the art algorithm when we need it. And use also state of the art, it's not even straightforward to just process a PDF, there's a lot of intelligence that goes into what we're doing in there. So that you don't have to think about it, you don't have to use the right tool for the job, use ours, which will itself use the right tool for the job. And the second big piece there is multimodal deep learning. So it's very common to NLP. These documents look like text, we read them like text. So the core of it is really handled like a natural language processing problem. But the location of the text is important too. And I'll talk about that when we see some real documents. And you can envision this in your head that we're not just speaking left to right paragraph form, we don't look at blocks of text, even something kind of ugly, like a Twitter or something is still going to read roughly left to right. When we send documents to each other we send tables, we send chunks of information that split left and right address header is on the top left, things like that. And so the layout of that document is important. That's how we convey information without having to type literally what we mean with everything on there. So it's a very concise format, we usually send information.

And then another key piece of this is the document AI MLOps working with pipelines that do those two basic building blocks, get the characters out from PDFs or images, and also do classification. But there's multiple classification models people want to run. So you want to take specific parts like I spoke about with the due date versus the invoice date versus the purchase order date or something like that, you know, all three of those, we might classify them all separately, or just get the ones we want. But we can handle page classification as well. So UCSF, we have a lot of customer videos of the kind of experience we got with them in the summer.

Their pipeline has two parts, it actually gets a fax that a medical referral is what they're interested in. But often a medical referral coming in through a referral fax includes all sorts of other information related to that. So it could be just a fax cover sheet, medical notes, clinical notes, things like insurance information, various different types of documents. So the first thing we're going to do is classify what each of those pages are in part of that fax. And then specifically, if so far, we build the referrals pipeline, we'll extend that to other pipelines too. But the referral document has 115 different things that they want out of that referral. And our AI models have gone. So we do both of those algorithms at the same time.

And at the end of the day, the way they score that is a document goes in JSON comes out through our document AI MLOps, which is a really simple REST API to use. And I'll show you a little glimpse of what that final output looks like too.

This is something that I guess a lot of customers haven't really thought of too. So we're dealing with it, people don't quite think of what piles of data they might have around. Or like I said, they haven't come in the process. And so the new value, and often be the next I'll talk a little bit about what we're finding is that the robotic process automation, RPA, templates, Cofax, UiPath, these are all kind of in that space of handling documents, one by one by one or one format by format. So if you have a common 10 vendors, you might create a way of doing document AI. Without the AI portion, essentially, I'm going to click on this box. And this is where this piece of information comes from. And you can do that and UCSF had done that to about 100 templates, but they just noticed that even the Pareto Principle didn't really apply. They weren't getting it at 20 out of it, they were still covering only about 60% or so of all the provider groups that sent them referrals. So they had processed them one by one by one, when we got from this provider group. This is the way that their form looks. But when it goes outside that form, it has nothing. And so what our tool does is use AI to develop a generalized understanding of what these documents are. So that as the format changes, that's okay. The way it's referred to the orientation of it, how it appears, you know, the all of these change when you don't control the input sources, usually what we say that's where our product thrives, because we're noticing a lot of people are just running into a wall eventually, with the template size.

What can you do with those documents once you can get the information out? There's several different use cases where people can do the validation exercises. That's what we had done with PVC, a while back, we get bank information and extract the information out of that bank information, which is locked in a PDF. It's not an EDI extracts, you know, it's not a clean CSV, it comes in differently for every bank and mirroring that up with what a company says in the general ledger. Alright, sorry about that. Okay, so here is the document AI self service user experience, the user interface is basically lAId out on the left side, we have different projects, we've got a lot in here for our testing. But typically, we’ll run into customers, we had three of these at PWC. We picked up over time, three different documents, three different independent sets of information, you can organize these however you like. Continuing through, I've got the medical lab test pulled up here. So document sets is where we'll ingest a data set, I'm not going to show that just the interest of time, but we can do some deep dives on this, we've done a few material that we'll get out there, our documentation set, our documentation website is full, you can read and you can walk through some of these examples. Annotation sets are where a lot of this happens when I'm clicked on here, this is how you work with those original documents. And just to show you what I mean, I've got two loaded, pretty small 1424 pages, there's some PDFs in there. Another set that may have come to us later and actually did it when we were doing this 20 different documents with 90 pages. What do I do with those, I can run the intelligent OCR. And then this attributes column here shows me the different forms that the documents take. So we've got text, show what that looks like, you can really envision what this is going to be the OCR results. And then labeling class are kind of special things that were to enable us to do the page classification, or the entity recognition. And so let me show you a little bit of what these look like.

I'll show you originally also how we label. I'm going to flip into something we call page view, there's really two ways of operating you see a pretty normal kind of user interface here. As we walk through some of the information. As our data kind of matures across the pipeline, we build models and so forth. But a big piece of this user experience is mimicking what we spent a lot of time with as we learn this through actual use cases. And those documents are very visual. And so seeing things late labeling things, how we create the data to trAIn the model tends to be a visual process, we need to show the models where the information came from not just the text that we have both so we can see here this user experience, we can see the documents, we can move between documents, like I said, we don't control the input source and this we might get medical lab tests from all sorts of different vendors. And the actual format shifts around but you can see even just not being experienced with this, the basic shape is very similar. They're gonna refer to it as the column headers are different but the content is similar, we see some scratches of some marks through here, these are different and we can handle all sorts of different document types. And again, this way we thrive when the input source changes. And so we can use this tool to create from scratch, we can create the labels that we need, however you're going through your document type. This is an interface that will allow you to create a project where you can pick things I've already set up over here.

But it's very easy to create a project from scratch and get them labeled to To start out, let me show this is what I actually did this myself. So we've got different columns here, roughly. And we're teaching the model that all the text and here's a line text description, this is the value, this is the units, and so forth. So there's going to be different use cases with different classes. So bring your own sort of recipe, this has meant for custom recipes, we're learning there's a lot of traction with some common recipes, too. So pre built models in the supply chAIn are definitely showing interest as we've talked with customers. But this tool was designed to let you build document pipelines for the information within these documents that matters to you.

With this interface, we get what we want to trAIn the models, we get the text location, let me show you what a more detAIled one looks like in that way. So here, we see all the OCR tokens.

We see this as the classification labels here. So I can see that I've got my line Test Descriptions, it's broken out into each token. And here's the token value. So the results of running the OCR process, this is all under one, one roof, we've got a lot of different things you can do within here to move to a dataset that's trAInable. And to skip ahead a little bit and not take too much time. You can also see that I've already run models against these. So I've created my labels, I've merged it in with OCR, and at this point, I can trAIn a model. And then this is, we can see the accuracy of that as well, with all the typical data science kinds of ways where we're doing classification generally. So you're gonna see some common precision recall f1 scores here, by class. So you can see, you know, I've trAIned this on only 11 documents, nothing else, just 11 documents. And so what's happening here, it's already managing to learn the really dense type of data inside those tables, we need some more documents to be able to pick up some of the sparser ones, this is volume over here of tokens in the right to support. So through these are the tools that we use to fight these problems, over literally years, until we kind of lock into the right technology. And we've put that technology into the back end data science library we have. And that's what was released a few months ago. And now we've got this self service user interface that sits on top of it. So you can, we've got models, you can deploy into a pipeline, sorry, when it's all sAId and done. And that's the important part here is that it scales. And so that we take in documents, whether it's a PDF, or an image, a PDF is interesting to think about, because that can be a multi page PDF, so we're gonna get one document with multiple pages. But the JSON supports all of that and shows you the boxes of what we label when we're doing prediction. So we can use one of the two different models,

different post post processing that we've picked up, too, we label everything's and tokens, putting those together doing tables, line items, things that we're learning about as we talk with the different customers, all packaged into one pipeline that executes from top to bottom, in a scalable asynchronous REST API.

Model Validation

But it's a new user interface we're launching this month, and pretty excited about that. And you'll see more content coming out from us on this.

Yeah, so this is like a lock status is pretty exciting. We launched this last quarter, and we've had phenomenal traction. And, more importantly, we are able to tackle a wide range of use cases that we didn't have before. So it's a great opportunity for us to understand, can help solve some of your problems. So definitely, it's a lot of intimate interaction on the chat. But please reach us reach out to us, and how we can help you solve your intention documenting these cases, what types of documents. Our solution is extremely flexible, to be able to tackle problems that are typically not answered by a lot of the out of the box solutions that we're going to bring, go to the next topic. So far, what we looked at is a whole bunch of AI engines and tools to make your AI model. So we'll be AutoML from Driverless AI or H2O Open Source, Document AI and hydrogels, they're all helping us take a wide variety of use cases, different types of problem type, and for building models. But the second part of the equation is once you build these models, you need to then start understanding those results. Independence values validate those models when deployed. So talk about more focus a little bit about what do you do after you build those models? To start off, I'm going to invite Dimitri Gaudium. AgAIn, to talk about model validation, which is a set of capabilities that we have been building to help data scientists understand if the models are actually good, robust and actually validate them.

So model validation is definitely a new topic to our tool set, I would say, but we're constantly working on it. And we reached the point where we have some sort of a beta version of a product, I would like to talk about it today. We're going to be working on it, improving it and extending it quite a lot in the upcoming future. And I think we'll be AIming at launching it largely later this year.

So model validation is concerning pretty much any machine learning model. And I would, I would point out like four different areas of model validation we were trying to focus on, and why we believe more validation is an important topic. First of all, assessing the models, where I'm coming from is the financial services industry. And I'm a past experience, we had a lot of pressure from regulators with regards to assessing them. And validating models we use from all sorts of points of view. So what we were expected to do not only was not only to assess how accurate the model is, but also to take, take a look at it from multiple angles, such things as robustness of the models, what are the clusters of data where the model is having difficulties predicting correctly, or things such as how accuracy is stable over time, because we're collecting data over time. And there are certAIn trends in the population which change over time.

If the model has some assumptions behind it, whether they hold and many more, basically imposed by the regulators. But even outside of the financial industry, it is still a very, very important topic to keep in mind when you're developing a machine learning model. And you're planning to apply it to your business use case, what to expect in terms of the performance of the model in the future. And what are the inputs that would allow you to assess that more precisely, because looking at accuracy alone might be misleading, or might be insufficient. And as soon as you put your model into production, then you might face lots of different problems. So as soon as you focus on assessing the model, from a holistic point of view, the topic of validating or ensuring the model is fit for the production your use is brought up. So typically in larger companies are independent teams who do that. So based on the assessment of the model, they put a stamp on whether the model is validated, or whether it's not validated for production use to have kind of a final decision in the process before the model is rolled out.