A Brief Overview of AI Governance for Responsible Machine Learning Systems

Our paper “A Brief Overview of AI Governance for Responsible Machine Learning Systems” was recently accepted to the Trustworthy and Socially Responsible Machine Learning (TSRML) workshop at NeurIPS 2022 (New Orleans). In this paper, we discuss the framework and value of AI Governance for organizations of all sizes, across all industries and domains.

Our paper is publicly available on arXiv: Gill, N., Mathur, A., Conde, M. (2022). A Brief Overview of AI Governance for Responsible Machine Learning Systems.

Introduction

Organizations are leveraging artificial intelligence (AI) to solve many challenges. However, AI technologies can pose significant risks. To manage those risks, organizations must turn to AI Governance, a framework designed to oversee the responsible use of AI with the goal of preventing and mitigating risk.

AI Adoption & Problems within Industry

AI Adoption

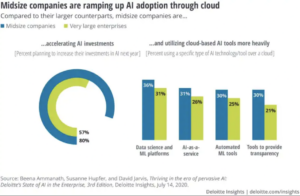

- Larger companies have had an advantage in using AI owing to their resources alone.

- Smaller companies can now take advantage of AI through more affordable means, e.g., cloud computing.

- AI adoption is on an upward trend that is expected to continue.

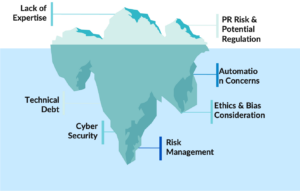

- AI has both pros and cons, and unfortunately the cons are sometimes not accounted for.

Problems within Industry

- Lack of risk management

- AI technology is moving too fast

- Government intervention is lacking

- Lack of AI adoption maturity

Manage AI Risk with AI Governance

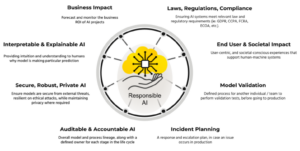

What is AI Governance (AIG)?

AI Governance is a framework to operationalize responsible artificial intelligence at organizations. This framework:

- Encourages organizations to curate and use bias-free data

- Encourages consideration of societal and end-user impact

- Promotes the production of unbiased models

- Enforces controls on model progression through deployment stages

Benefits of AI Governance

Alignment and Clarity

- Awareness of, and alignment on, the relevant industry, international, regional, local, and organizational policies.

Thoughtfulness and Accountability

- Put deliberate effort into justifying the business case for AI projects.

- Make a conscious effort to think about end-user experience, adversarial impacts, public safety, and privacy.

Consistency and Organizational Adoption

- Consistent way of developing and collaborating on AI projects.

- This consistency leads to increased tracking and transparency for projects.

Process, Communication, and Tools

- Complete understanding of the steps needed to move an AI project to production and start realizing business value.

Trust and Public Perception

- Build AI projects more thoughtfully.

- Thoughtfully built projects earn trust among customers and end users, and therefore a positive public perception.

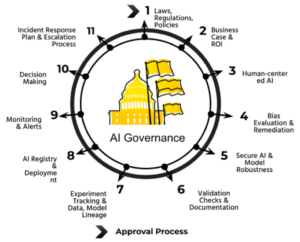

Stages of a Governed AI Life Cycle

Organizational Planning

- Comprehensive understanding of regulations, laws, and policies amongst all team members.

- Resources and help available for team members who encounter challenges.

- Clear process to assist team members.

Use Case Planning

- Establish business value, technology stack, and model usage.

- The people involved include subject matter experts, data scientists/analysts/annotators, ML engineers, IT professionals, and finance departments.

AI Development

- Develop a machine learning model, covering data handling and analysis, modeling, generating explanations, bias detection, accuracy and efficacy analysis, security and robustness checks, model lineage, validation, and documentation.
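To make the bias detection step more concrete, here is a minimal sketch in Python. It is only an illustration, not the method from the paper: the choice of metric (the gap in positive-prediction rates between groups, often called demographic parity difference), the toy data, and the `MAX_GAP` threshold are all assumptions made for this example.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: binary model outputs and a sensitive attribute.
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

# Hypothetical threshold; a real value would come from organizational policy.
MAX_GAP = 0.2

gap = demographic_parity_gap(predictions, groups)
passes_check = gap <= MAX_GAP
print(f"gap={gap:.2f}, passes_check={passes_check}")
```

In a governed life cycle, a check like this would likely be one of several recorded in the model's documentation, alongside the accuracy, robustness, and lineage information listed above.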

AI Operationalization

- Deploy the machine learning model to production, which requires review-approval workflows, monitoring and alerts, decision-making processes, and incident response plans.
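A review-approval workflow of this kind can be sketched as a simple stage gate. The sketch below is illustrative only: the stage names, the approver roles, and the `ModelRecord` and `promote` names are hypothetical and do not come from the paper or from any particular tool.

```python
from dataclasses import dataclass, field

# Hypothetical deployment stages, in order.
STAGES = ["development", "staging", "production"]

# Hypothetical roles that must approve before a model enters each stage.
REQUIRED_APPROVALS = {
    "staging": {"data_science_lead"},
    "production": {"data_science_lead", "risk_officer"},
}


@dataclass
class ModelRecord:
    name: str
    stage: str = "development"
    approvals: set = field(default_factory=set)
    history: list = field(default_factory=list)


def promote(model):
    """Move the model to the next stage if all required approvals are present."""
    idx = STAGES.index(model.stage)
    if idx == len(STAGES) - 1:
        raise ValueError(f"{model.name} is already in the final stage")
    target = STAGES[idx + 1]
    missing = REQUIRED_APPROVALS.get(target, set()) - model.approvals
    if missing:
        raise PermissionError(
            f"Cannot promote {model.name} to {target}; "
            f"missing approvals: {sorted(missing)}"
        )
    # Keep an audit trail of who approved each transition.
    model.history.append((model.stage, target, sorted(model.approvals)))
    model.stage = target
    model.approvals = set()  # approvals do not carry over to the next gate
    return model


model = ModelRecord("credit_scoring")
model.approvals.add("data_science_lead")
promote(model)  # development -> staging

try:
    promote(model)  # no approvals collected for production yet
    blocked = False
except PermissionError:
    blocked = True

model.approvals.update({"data_science_lead", "risk_officer"})
promote(model)  # staging -> production
```

Resetting approvals at each gate is a design choice made for this sketch: it forces a fresh review before every promotion, and the `history` list preserves the record needed for tracking and transparency.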

Conclusion

AI systems are used today to make life-altering decisions about employment, bail, parole, and lending, and the scope of decisions delegated to AI systems seems likely to expand in the future. The pervasiveness of AI across many fields will not slow down anytime soon, and organizations will want to keep up with such applications. However, they must be cognizant of the risks that come with AI and have guidelines for how they approach applications of AI to avoid such risks. By establishing a framework for AI Governance, organizations will be able to harness AI for their use cases while avoiding risks and having plans in place for risk mitigation, which is paramount.