H2O4GPU is an open-source collection of GPU solvers created by H2O.ai. It builds on the easy-to-use scikit-learn Python API and its well-tested CPU-based algorithms. It can be used as a drop-in replacement for scikit-learn with support for GPUs on selected (and ever-growing) algorithms. H2O4GPU inherits all the existing scikit-learn algorithms and falls back to CPU algorithms when the GPU algorithm does not support an important existing scikit-learn class option. It utilizes the efficient parallelism and high throughput of GPUs. Additionally, GPUs allow the user to complete training and inference much faster than possible on ordinary CPUs.

Today, select algorithms are GPU-enabled. These include Gradient Boosting Machines (GBMs), Generalized Linear Models (GLMs), and K-Means Clustering. Using H2O4GPU, users can unlock the power of GPUs through the scikit-learn API that many already use today. In addition to the scikit-learn Python API, an R API is in development.
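
As a rough illustration of the drop-in idea, the sketch below fits K-Means through the scikit-learn-style `fit` interface. It assumes `h2o4gpu` exposes a top-level `KMeans` class with scikit-learn-compatible arguments, and it falls back to scikit-learn's CPU implementation when the GPU package is not installed. The helper `fit_two_clusters` and the toy data are hypothetical and exist only for this example.

```python
# Prefer the GPU-backed estimator; fall back to scikit-learn's CPU version.
try:
    from h2o4gpu import KMeans  # assumption: top-level KMeans class
    BACKEND = "h2o4gpu"
except ImportError:
    try:
        from sklearn.cluster import KMeans
        BACKEND = "scikit-learn"
    except ImportError:
        KMeans = None  # neither package is installed
        BACKEND = "none"


def fit_two_clusters(points):
    """Fit a 2-cluster K-Means model; return its centers, or None without a backend."""
    if KMeans is None:
        return None
    import numpy as np  # both backends depend on NumPy

    X = np.asarray(points, dtype=np.float64)
    model = KMeans(n_clusters=2, random_state=1234).fit(X)
    return model.cluster_centers_


# Illustrative toy data: two well-separated groups of points.
centers = fit_two_clusters([[1.0, 1.0], [1.0, 2.0], [9.0, 9.0], [9.0, 8.0]])
print(BACKEND, centers)
```

Because the estimator is constructed and fitted the same way in both cases, only the import line changes between the CPU and GPU versions.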

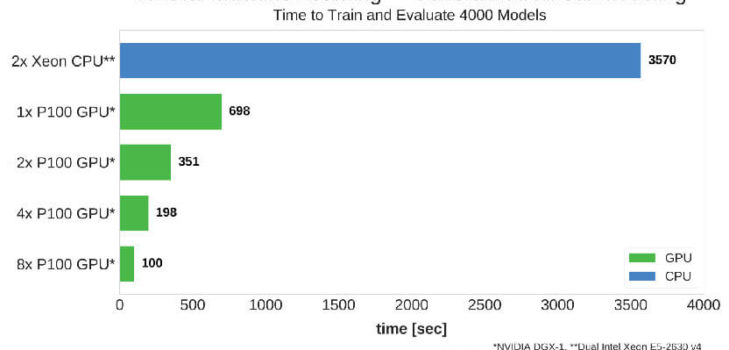

Here are specific benchmarks from a recent H2O4GPU test:

- More than 5X faster on GPUs as compared to CPUs

- Nearly 10X faster on GPUs

- More than 40X faster on GPUs

“We’re excited to release these lightning-fast H2O4GPU algorithms and continue H2O.ai’s foray into GPU innovation,” said Sri Ambati, co-founder and CEO of H2O.ai. “H2O4GPU democratizes industry-leading speed, accuracy and interpretability for scikit-learn users from all over the globe. This includes enterprise AI users who were previously too busy building models to have time for what really matters: generating revenue.”

“The release of H2O4GPU is an important milestone,” said Jim McHugh, general manager and vice president at NVIDIA. “Delivered as part of an open-source platform, it brings the incredible power of acceleration provided by NVIDIA GPUs to widely-used machine learning algorithms that today’s data scientists have come to rely upon.”

H2O4GPU’s release follows the launch of Driverless AI, H2O.ai’s fully automated solution that handles data science operations (data preparation, algorithms, model deployment and more) for any business needing world-class AI capability in a single product. Built by top-ranking Kaggle Grandmasters, Driverless AI is essentially an entire data science team baked into one application.

Following is some information on each GPU-enabled algorithm, as well as a roadmap.

Generalized Linear Model (GLM)

- Framework utilizes Proximal Graph Solver (POGS)

- Solvers include Lasso, Ridge Regression, Logistic Regression, and Elastic Net Regularization

- Improvements to original implementation of POGS:

  - Full alpha search

  - Cross-validation

  - Early stopping

  - Added scikit-learn-like API

  - Supports multiple GPUs
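
For orientation, the solvers listed above can be read as special cases of one penalized least-squares objective. The form below is the common glmnet-style parameterization and is an assumption here; H2O4GPU's exact scaling and naming may differ. In it, $\lambda \ge 0$ sets the overall penalty strength and $\alpha \in [0, 1]$ mixes the two penalties.

$$
\min_{\beta}\; \frac{1}{2n}\,\lVert y - X\beta \rVert_2^2 \;+\; \lambda \left( \alpha\,\lVert \beta \rVert_1 + \frac{1-\alpha}{2}\,\lVert \beta \rVert_2^2 \right)
$$

Under this reading, $\alpha = 1$ gives Lasso, $\alpha = 0$ gives Ridge Regression, and values in between give Elastic Net. Logistic Regression replaces the squared-error term with the logistic loss, and an alpha search would fit models over a grid of $\alpha$ values.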

Gradient Boosting Machines (GBM)

- Based on XGBoost

- Raw floating-point data is binned into quantiles

- Quantiles are stored in compressed form instead of as floats

- Compressed quantiles are efficiently transferred to GPU

- Sparsity is handled directly with high GPU efficiency

- Multi-GPU enabled by sharing rows using NVIDIA NCCL AllReduce
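
The binning and multi-GPU points above can be sketched on the CPU in plain Python. This is a simplified simulation under stated assumptions, not XGBoost or NCCL code: the helper names are hypothetical, one byte per value stands in for the compressed storage, and a list sum stands in for AllReduce across devices that each hold a slice of the rows.

```python
from bisect import bisect_right


def quantile_cuts(values, n_bins):
    """Return n_bins - 1 cut points taken at evenly spaced ranks of the sorted data."""
    ordered = sorted(values)
    n = len(ordered)
    return [ordered[(i * n) // n_bins] for i in range(1, n_bins)]


def to_bins(values, cuts):
    """Replace each float with its bin index, stored as one byte (up to 256 bins)."""
    return bytes(bisect_right(cuts, v) for v in values)


def gradient_histogram(bins, grads, n_bins):
    """Sum the gradients that fall into each bin for one slice of rows."""
    hist = [0.0] * n_bins
    for b, g in zip(bins, grads):
        hist[b] += g
    return hist


def all_reduce_sum(per_device):
    """Simulated AllReduce: element-wise sum of the per-device histograms."""
    return [sum(column) for column in zip(*per_device)]


# Illustrative feature column and gradients (made-up numbers).
values = [0.1, 0.4, 0.35, 0.8, 0.95, 0.2, 0.6, 0.7]
grads = [1.0, -0.5, 0.25, 2.0, -1.0, 0.5, 0.75, -0.25]

cuts = quantile_cuts(values, n_bins=4)  # [0.35, 0.6, 0.8]
bins = to_bins(values, cuts)            # 8 bytes instead of 8 floats

# Split the rows across two simulated devices and combine their histograms.
shard_hists = [
    gradient_histogram(bins[:4], grads[:4], 4),
    gradient_histogram(bins[4:], grads[4:], 4),
]
combined = all_reduce_sum(shard_hists)
print(list(bins), combined)
```

The combined histogram matches the one computed over all rows at once, which is why each device only needs its own rows plus one reduction step.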

K-Means Clustering

- Based on NVIDIA prototype of k-Means algorithm in CUDA

- Improvements to original implementation:

  - Significantly faster than scikit-learn implementation (50x) and other GPU implementations (5-10x)

  - Supports multiple GPUs

H2O4GPU combines the power of GPU acceleration with H2O’s parallel implementation of popular algorithms, taking computational performance levels to new heights.

To learn more about H2O4GPU, click here. For more information about the math behind each algorithm, click here.