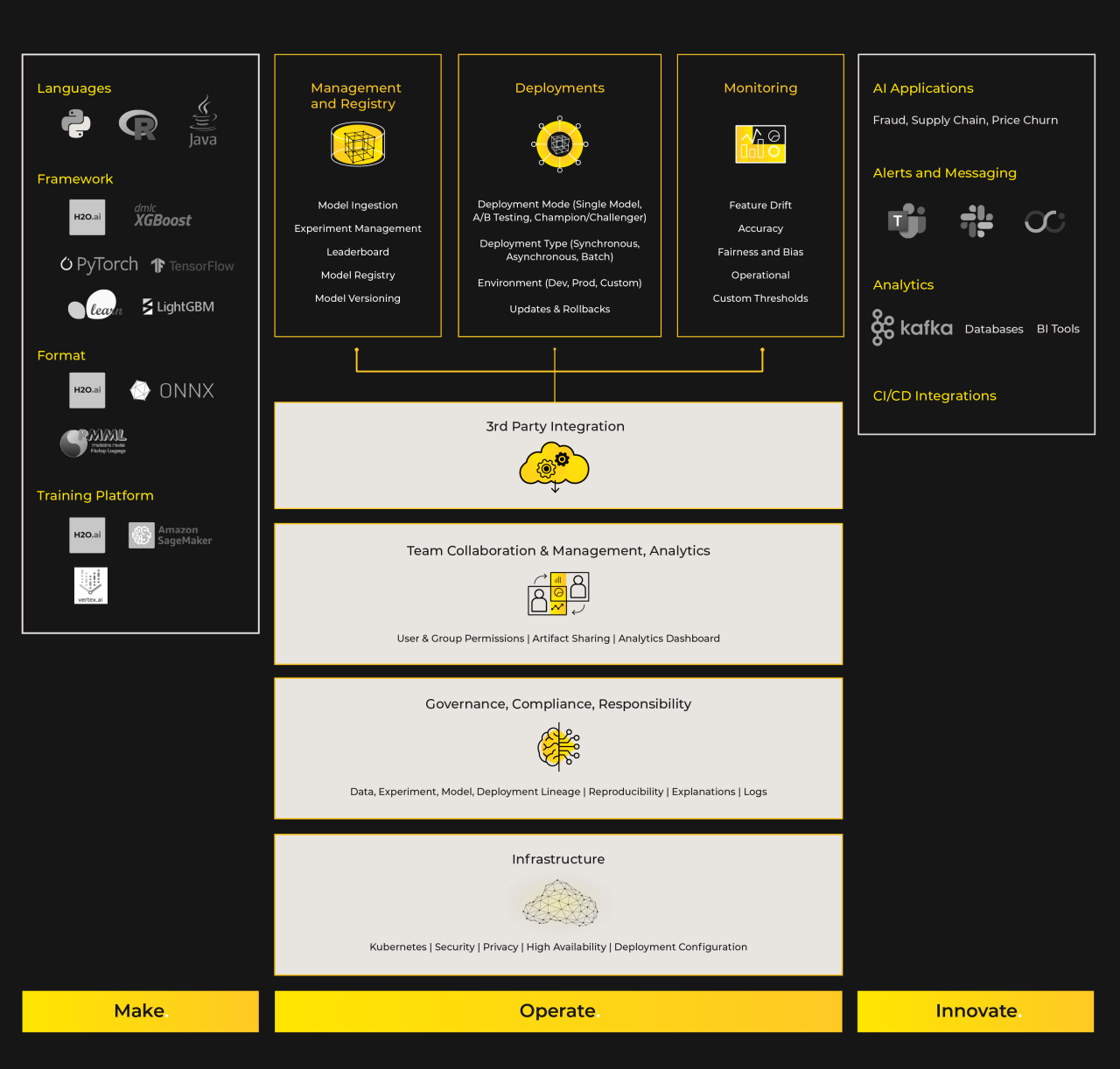

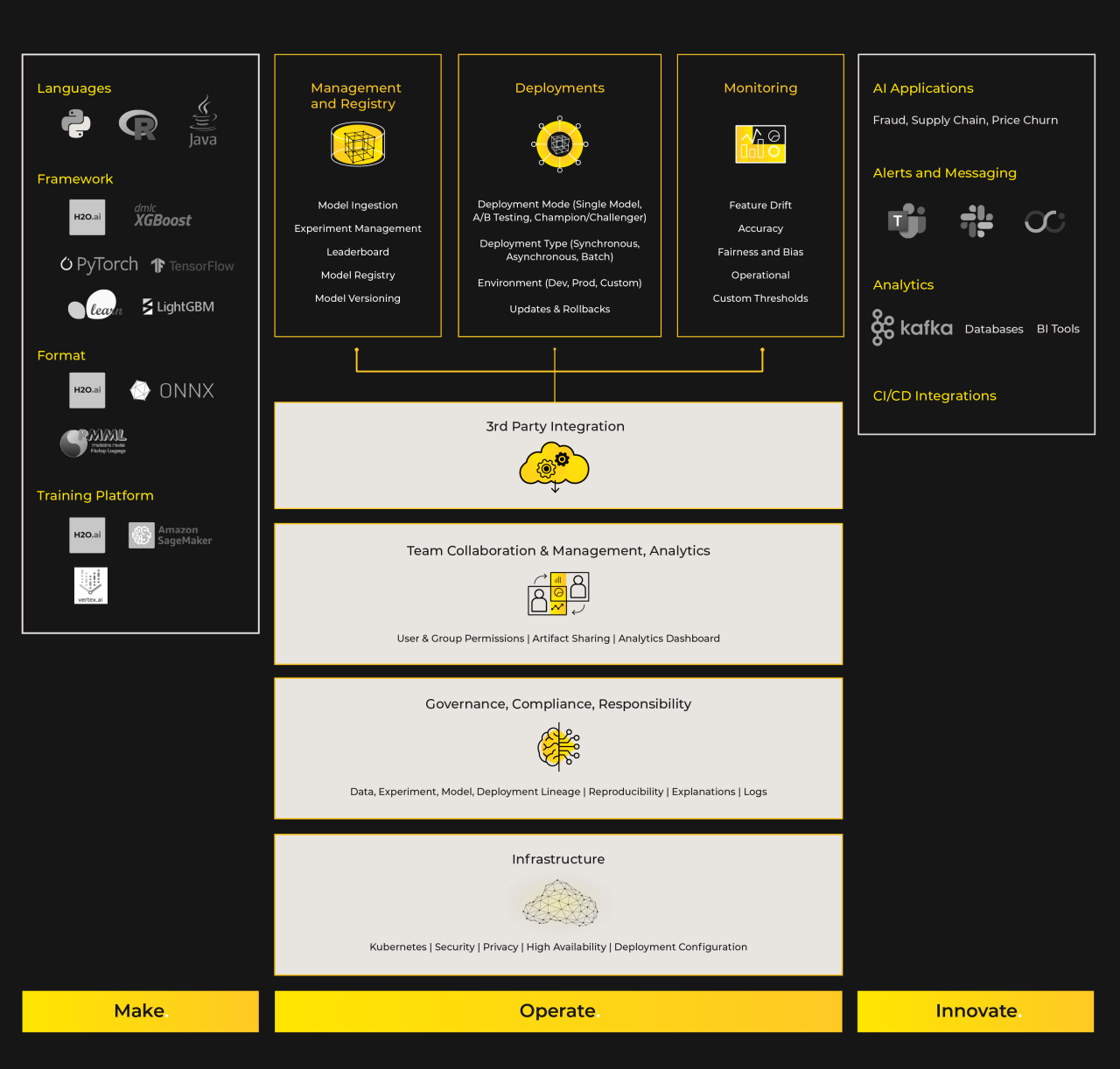

H2O MLOps

Operate AI Models with transparency, scale and confidence.

H2O MLOps provides a collaborative environment that makes it easy for organizations to manage, deploy, govern, and monitor machine learning models in production. Today, many organizations struggle to move from experimenting with AI to production AI models that drive meaningful business outcomes. Standing in the way are cumbersome manual processes, a lack of DevOps skills or resources, and an inability to monitor models that become less accurate or more biased over time. H2O MLOps provides a simple interface for end-to-end model management, 1-click deployments, automated scaling, and model monitoring with automated drift detection for both accuracy and bias. With H2O MLOps, organizations can move AI models to production more rapidly and keep improving them so they deliver positive and responsible results.

Benefits

Accelerate the time-to-value of AI outcomes

Automation makes moving from experimentation to model deployment simple, seamless, and continuous. H2O MLOps helps Data Science teams take ML projects to production quickly, cutting timelines from weeks to days and from days to hours.

Operate AI with agility and confidence

Automatically monitor models in real time and set custom thresholds to receive alerts on prediction accuracy and data drift. H2O MLOps ensures that all deployed models are operating as intended.

Achieve consistent ML model delivery

Reduce MLOps management overhead with high-availability deployments and automated scaling, so Data Scientists can focus their time on building AI that delivers value.

Capabilities

Model Management & Registry

3rd Party Model Ingestion

Operate models from a wide variety of machine learning frameworks, including H2O.ai, PyTorch, scikit-learn, XGBoost, and many more. Customers can import their models through pre-built integrations with Driverless AI or MLflow, or upload models in Pickle serialized format.

Leaderboard

Compare experiments against each other, using an evaluation metric of your choice. Metrics are imported automatically as the experiment’s metadata, and made available for users to determine the leaders.
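Conceptually, a leaderboard is a sort over the metrics recorded in each experiment's metadata. The sketch below assumes a hypothetical metadata layout (a list of dicts with a `metrics` mapping) and made-up metric values; it illustrates the idea only and is not the H2O MLOps API.

```python
from typing import Dict, List

def leaderboard(experiments: List[Dict], metric: str, higher_is_better: bool = True) -> List[Dict]:
    """Rank experiments by one metric taken from their (hypothetical) metadata."""
    # Ignore experiments that did not record the chosen metric
    scored = [e for e in experiments if metric in e.get("metrics", {})]
    return sorted(scored, key=lambda e: e["metrics"][metric], reverse=higher_is_better)

# Illustrative experiments with made-up metric values
experiments = [
    {"name": "gbm-v1", "metrics": {"auc": 0.81, "logloss": 0.45}},
    {"name": "glm-v1", "metrics": {"auc": 0.76, "logloss": 0.52}},
    {"name": "xgb-v2", "metrics": {"auc": 0.84, "logloss": 0.41}},
]

for rank, exp in enumerate(leaderboard(experiments, "auc"), start=1):
    print(rank, exp["name"], exp["metrics"]["auc"])
```

For loss-style metrics such as log loss, pass `higher_is_better=False` so the lowest value ranks first.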

3rd Party Model Management Support

Browse models stored in 3rd party model management tools directly from the MLOps UI, and import their artifacts to deploy and monitor them with H2O MLOps.

Collaborative Experiment Repository

H2O MLOps includes a collaborative model repository that enables data scientists to manage, permission, and share their experiments and models with co-workers.

Model Registry and Model Versioning

After comparing models against each other, register the best-performing model(s) in the Model Registry to prepare them for deployment. Use Model Versioning to keep track of successive iterations of the same model.

Model Deployment

Deployment Modes

When introducing a new model or a new model version, operations teams may want to test with live traffic and compare results with the prior version. With H2O MLOps, users can run comparison tests between the production model (champion) and an experimental model (challenger), or test two or more models against each other and compare results in a simple A/B test.
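A simple A/B test splits live traffic between models by weight. A minimal sketch, assuming a hypothetical 90/10 split and illustrative model names; H2O MLOps configures deployment modes through its own interface, and this is not its implementation.

```python
import random
from typing import Callable, Dict

def make_router(weights: Dict[str, float], seed: int = 0) -> Callable[[], str]:
    """Return a function that picks a model for each request in proportion to its weight."""
    rng = random.Random(seed)  # seeded so the example is reproducible
    names = list(weights)
    values = [weights[n] for n in names]

    def route() -> str:
        return rng.choices(names, weights=values, k=1)[0]

    return route

# Hypothetical split: 90% of requests to the champion, 10% to the challenger
route = make_router({"champion": 0.9, "challenger": 0.1}, seed=42)
counts = {"champion": 0, "challenger": 0}
for _ in range(10_000):
    counts[route()] += 1
print(counts)
```

Each model's results can then be compared on the slice of traffic it served.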

Multiple Environments

H2O MLOps uses customer-provisioned Kubernetes environments and supports multiple infrastructure environments simultaneously. MLOps teams can have environments for development, testing, and production, all running in different locations.

Deployment Type

Easily serve your model as a real-time deployment (synchronous or asynchronous) or batch deployment (one-time or scheduled) with the click of a button.

Updates & Rollbacks

Machine learning models can require frequent updates in production. With H2O MLOps, operations teams can replace models with a few clicks, and Kubernetes automatically routes new requests to the new model while the prior version finishes handling the requests already in progress. Similarly, if there’s a need to roll back to a previous version, that can also be done with the click of a button.
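The bookkeeping behind an update and a rollback can be pictured as a stack of model versions. This is a hypothetical sketch with illustrative version names; in H2O MLOps, Kubernetes performs the actual request routing, and this class does not model in-flight requests.

```python
from typing import List, Optional

class DeploymentVersions:
    """Hypothetical record of which model version a deployment serves."""

    def __init__(self) -> None:
        self.history: List[str] = []  # every version made active, oldest first

    @property
    def active(self) -> Optional[str]:
        return self.history[-1] if self.history else None

    def update(self, version: str) -> None:
        # From now on, new requests would go to this version
        self.history.append(version)

    def rollback(self) -> str:
        # Drop the current version and reactivate the one before it
        if len(self.history) < 2:
            raise RuntimeError("no previous version to roll back to")
        self.history.pop()
        return self.history[-1]

d = DeploymentVersions()
d.update("model-v1")
d.update("model-v2")
print(d.active)      # model-v2
print(d.rollback())  # model-v1
```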

Model Monitoring & Alerts

Model Drift

When data changes between training and production, models can become less effective. This “drift” is tracked by looking at differences between training and production data for each model feature. H2O.ai also offers an AI application for drift detection, designed for data scientists, with detailed views of each feature so they can decide whether to refit, retrain, or build a new production model.
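The text does not specify which statistic H2O MLOps uses to compare training and production distributions. As one common choice, this sketch swaps in the Population Stability Index (PSI), which compares per-feature bin frequencies using bins taken from training-data quantiles. The feature names and data are synthetic. A frequently cited rule of thumb treats a PSI above roughly 0.25 as significant drift.

```python
import numpy as np

def psi(train: np.ndarray, prod: np.ndarray, bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of one numeric feature, training vs. production."""
    # Interior bin edges at training-data quantiles; values beyond them land in the end bins
    edges = np.quantile(train, np.linspace(0, 1, bins + 1)[1:-1])

    def shares(values: np.ndarray) -> np.ndarray:
        idx = np.searchsorted(edges, values, side="right")  # bin index in 0..bins-1
        return np.bincount(idx, minlength=bins) / len(values)

    # Clip empty bins to eps to avoid log(0) and division by zero
    p_train = np.clip(shares(train), eps, None)
    p_prod = np.clip(shares(prod), eps, None)
    return float(np.sum((p_prod - p_train) * np.log(p_prod / p_train)))

# Synthetic features: "age" stays stable, "income" shifts upward in production
rng = np.random.default_rng(0)
train = {"age": rng.normal(40, 10, 10_000), "income": rng.normal(50, 15, 10_000)}
prod = {"age": rng.normal(40, 10, 10_000), "income": rng.normal(60, 15, 10_000)}

drift = {name: psi(train[name], prod[name]) for name in train}
print(drift)
```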

Accuracy

As models serve in production over time, their performance and accuracy generally degrade. For organizations to be confident that their models are predicting as desired, model accuracy should be monitored. H2O.ai provides monitoring capabilities for both regression and classification models.
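Accuracy monitoring presupposes that actual outcomes eventually arrive and can be joined with the stored predictions. A minimal sketch under that assumption, computing root mean squared error (RMSE) for regression and accuracy for classification over a window of scored requests; the metric choices and toy values are illustrative.

```python
import numpy as np

def rmse(y_true, y_pred) -> float:
    """Root mean squared error for a regression model."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def accuracy(y_true, y_pred) -> float:
    """Share of correctly predicted labels for a classification model."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))

# Toy window: predictions joined with the outcomes observed later
print(rmse([3.0, 5.0, 2.5], [2.0, 5.0, 2.5]))
print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))  # 0.75
```

Tracking these values per window and comparing them with the values measured at training time shows whether accuracy is degrading.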

Fairness & Bias

Machine learning models are inherently subject to bias, which can be caused by a number of factors. Fairness is not only a question of ethics, but also instrumental to achieving optimal business value. H2O MLOps monitors models for bias to provide organizations with information to achieve better business value from their models.
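The text does not say which bias metrics H2O MLOps reports. One widely used measure is the disparate impact ratio: the positive-prediction rate of the unprivileged group divided by that of the privileged group, with values below 0.8 often flagged under the "four-fifths rule". A minimal sketch with toy predictions and hypothetical group labels:

```python
import numpy as np

def disparate_impact(y_pred, group, privileged) -> float:
    """Positive-prediction rate of the unprivileged group over that of the privileged group."""
    y_pred = np.asarray(y_pred)
    group = np.asarray(group)
    rate_privileged = y_pred[group == privileged].mean()
    rate_unprivileged = y_pred[group != privileged].mean()
    return float(rate_unprivileged / rate_privileged)

# Toy binary predictions (1 = approved) for two hypothetical groups "a" and "b"
y_pred = [1, 1, 0, 1, 1, 0, 0, 0]
group = ["a", "a", "a", "a", "b", "b", "b", "b"]
di = disparate_impact(y_pred, group, privileged="a")
print(di, "flag" if di < 0.8 else "ok")
```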

Operational

Production models are often served on infrastructure that is prone to operational issues. Sudden spikes in request volume or issues with model objects can cause increased latency or, worse, an outage of the deployment. H2O MLOps monitors operational metrics for organizations, so IT teams can detect and respond to issues before they become problems for the business.
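Typical operational signals include request volume, latency percentiles, and error rates. A minimal sketch, assuming per-request latencies and HTTP status codes are available for a monitoring window; the summary field names are hypothetical and the values synthetic.

```python
import numpy as np

def operational_summary(latencies_ms, status_codes) -> dict:
    """Summarize one monitoring window of scoring requests."""
    lat = np.asarray(latencies_ms, dtype=float)
    codes = np.asarray(status_codes)
    return {
        "requests": int(lat.size),
        "p50_ms": float(np.percentile(lat, 50)),
        "p95_ms": float(np.percentile(lat, 95)),
        # Count server-side failures (HTTP 5xx) as errors
        "error_rate": float(np.mean(codes >= 500)),
    }

# Synthetic window: latencies of 1..100 ms, two failed requests out of 100
window = operational_summary(range(1, 101), [200] * 98 + [500, 503])
print(window)
```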

Custom Thresholds

For each deployment, H2O MLOps users can set thresholds and alerts on a variety of metrics. When a metric crosses its threshold, an alert is triggered to notify the MLOps team so they can take appropriate action.
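A threshold rule pairs a metric with a limit and a direction. The sketch below, with hypothetical metric names and limits, shows only the evaluation step; how H2O MLOps stores rules and delivers notifications is not modeled here.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Threshold:
    metric: str
    limit: float
    above: bool = True  # True: alert when the value rises above limit; False: when it falls below

def check(metrics: Dict[str, float], thresholds: List[Threshold]) -> List[str]:
    """Return one alert message per breached threshold; metrics not reported are skipped."""
    alerts = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            continue
        breached = value > t.limit if t.above else value < t.limit
        if breached:
            direction = "above" if t.above else "below"
            alerts.append(f"{t.metric}={value} is {direction} {t.limit}")
    return alerts

# Hypothetical rules: latency ceiling, accuracy floor, drift ceiling
rules = [
    Threshold("p95_ms", 200.0),
    Threshold("accuracy", 0.85, above=False),
    Threshold("psi_income", 0.25),
]
alerts = check({"p95_ms": 250.0, "accuracy": 0.9, "psi_income": 0.31}, rules)
print(alerts)
```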

Team Collaboration & Management, Analytics

User & Group Permissions

Set permissions for users and groups for Projects, enabling the appropriate level of access.

Artifact Sharing

Share models and model artifacts with collaborators and team members, and grant them permission to perform actions on your models.

Analytics Dashboard

Get a complete view of all models across the entire organization, and gain insight into how models are progressing through the deployment workflow. Build a deeper understanding of machine learning adoption within the organization by mapping models to their creators.

Governance, Compliance, Responsibility

Data, Experiment, Model, Deployment Lineage

H2O MLOps maintains lineage and traceability across the entire machine learning lifecycle, enabling end-to-end lineage from data, to experiment, to model registry, to deployment.

Reproducibility

Because each model and deployment maintains its metadata, organizations can reproduce model development for any type of compliance need.

Model Explanations In Production

Receive model explanations for each scoring request at runtime. Model explanations make it easy for individuals to understand which AI model features contributed the most, both positively and negatively, for each individual prediction. With explanations at runtime, organizations can more easily validate, analyze, and improve model results, while being compliant with industry regulations.
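For a linear model, per-prediction contributions have a simple closed form: each feature contributes its weight times the feature's deviation from a baseline input (for example, the training means), and the contributions sum to the gap between this prediction and the baseline prediction. With independent features these coincide with Shapley values. This is an illustrative sketch with a toy model and an assumed baseline; it does not describe how H2O MLOps computes explanations for arbitrary models.

```python
import numpy as np

weights = np.array([2.0, -1.0, 0.5])   # toy linear model coefficients
intercept = 1.0
baseline = np.array([1.0, 1.0, 1.0])   # assumed baseline, e.g. training-data feature means

def predict(x: np.ndarray) -> float:
    return float(intercept + weights @ x)

def contributions(x: np.ndarray) -> np.ndarray:
    """Signed contribution of each feature relative to the baseline input."""
    return weights * (x - baseline)

x = np.array([3.0, 0.0, 1.0])
c = contributions(x)
print(c)  # [4. 1. 0.]

# Contributions add up to the gap between this prediction and the baseline prediction
assert np.isclose(predict(baseline) + c.sum(), predict(x))
```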

Event Log per Deployment

H2O MLOps includes an event log for each deployment. The log captures all events related to the deployment, including who took action and when it took place.

Infrastructure

High Availability Deployments

Select up to 5 nodes to replicate model deployments. H2O MLOps will automatically check the health of each node, and load balance across nodes, so if one node fails, customers won’t experience any service interruption.
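Health-checked load balancing across replicas can be pictured as round-robin over the nodes that currently pass their health check. A minimal sketch with hypothetical node names and a stubbed health check; in practice the underlying Kubernetes infrastructure handles this, and the class below is not part of H2O MLOps.

```python
import itertools
from typing import Callable, List

class RoundRobinBalancer:
    """Cycle through replica nodes, skipping any that fail the health check."""

    def __init__(self, nodes: List[str], is_healthy: Callable[[str], bool]) -> None:
        self.nodes = nodes
        self.is_healthy = is_healthy
        self._cycle = itertools.cycle(nodes)

    def next_node(self) -> str:
        # Try each node at most once per call before giving up
        for _ in range(len(self.nodes)):
            node = next(self._cycle)
            if self.is_healthy(node):
                return node
        raise RuntimeError("no healthy nodes available")

# Stubbed health check: node "n2" is down, so traffic alternates between n1 and n3
down = {"n2"}
balancer = RoundRobinBalancer(["n1", "n2", "n3"], lambda n: n not in down)
picks = [balancer.next_node() for _ in range(4)]
print(picks)  # ['n1', 'n3', 'n1', 'n3']
```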

Deployment Infrastructure Configuration

Choose the Kubernetes configuration best suited to each model, including CPU vs. GPU and minimum and maximum processing and memory allocations. Customers can assign deployments to GPU nodes when a model is large and/or requires ultra-low-latency scoring.

Deployment Options

H2O MLOps is part of our broad and expanding set of H2O AI Cloud products. Customers deploy the H2O AI Cloud and use either the entire state-of-the-art AI platform or just the products that meet their needs.

H2O AI Cloud has two deployment options: Hybrid and Fully Managed.

Fully Managed Cloud

The Fully Managed deployment option provides the easiest way to get started and securely operate the H2O AI Cloud.

Complete H2O AI Cloud

Unlimited Users

Dedicated single-tenant cloud environment

Multi-layer security with encryption in transit and at rest

Virtually no infrastructure management

Highly available

Hybrid Cloud

The Hybrid Cloud deployment option provides complete control over infrastructure, software updates, security, and compliance. Customers can operate the Hybrid Cloud in any cloud or on-premises IT environment.

Complete H2O AI Cloud

Unlimited Users

Complete control – customer-managed environment

Choice of cloud or on-premises environment

Run in compliant environments such as PCI-DSS, HIPAA, and FedRAMP

Low latency – bring AI close to data and apps