Intro to AutoML + Hands-on Lab

In recent years, the demand for machine learning experts has outpaced the supply, despite the surge of people entering the field. To address this gap, there have been big strides in the development of user-friendly machine learning software that can be used by non-experts. Although H2O has made it easier for practitioners to train and deploy machine learning models at scale, there is still a fair bit of knowledge and background in data science that is required to produce high-performing machine learning models. Deep neural networks, in particular, are notoriously difficult for a non-expert to tune properly. In this presentation, we provide an overview of the field of "Automatic Machine Learning" and introduce the new AutoML functionality in H2O. H2O's AutoML provides an easy-to-use interface that automates the process of training a large, comprehensive selection of candidate models and a stacked ensemble model which, in most cases, will be the top-performing model in the AutoML Leaderboard. H2O AutoML is available in all the H2O interfaces, including the h2o R package, Python module, and the Flow web GUI. We will also provide simple code examples to get you started using AutoML.

Talking Points:

- Data Prep

- H2O Automatic Machine Learning

- Ensembling

- Why Combine Random Grid Search with Stacking

- What is H2O AutoML?

- Preview of Code

- H2O3 Roadmap

- Hands-on Demo

- Binary Classification

- Code to look inside Ensembles

- Power Output

Speakers:

Erin LeDell, Machine Learning Specialist, H2O.ai

Read the Full Transcript

Erin LeDell:

All right. So this talk is about automatic machine learning. I'll see if my slides are hooked up. Okay. So welcome, my name's Erin LeDell and I work as a machine learning scientist at H2O. AutoML is the main project that I'm working on now, so this is what I'm gonna talk with you about. And it's a pretty new component to H2O. It had kind of a quiet release over the summer, and we've been working a lot this fall to add a whole bunch of new features, and in H2O 3.16, which was released a few days ago, or I guess technically last week, there's a whole bunch of really new stuff for autoML. So if you haven't upgraded to 3.16 yet, I would recommend checking it out. And all the new features are sort of documented on the autoML user guide, if you're curious about what's new.

Okay. So the title of the talk is automatic machine learning. And this is the team right now for autoML, this is myself, and Navdeep and Ray and yeah, so we've all been sort of working on this since the beginning together and that's who we are. Alright, so this is what we're gonna talk about. So the first topic is what does automatic machine learning mean? It's kind of one of those generic terms that, you know, like data science that maybe means a lot of different things to a lot of people or sort of ill-defined. So we'll talk about what does that include? And then we'll talk a little bit in more detail about H2O's approach or at least our current approach to autoML, which involves random grid search and stacked ensembles and combining those techniques together.

So I'll go into some of what those two things mean and what the algorithm is. And then I'll talk about the software. So how are we exposing this to the users? What's the interface? So we will show the R, Python, and web interfaces to autoML. And then we'll talk a little bit about the roadmap for H2O3. So H2O3 is also known as H2O. It's the third incarnation of H2O, which is what the 3 is. And then so that'll be the first 30 minutes or so. And then the second 30 minutes will be a hands-on tutorial. And it sounded like from the last talk that Qwiklabs was not optimal. So we'll also have a Qwiklabs lab. However, the code is also available. I'll put the link up there.

So if you wanted to download it and just run it on your own machine, that's also an option if Qwiklabs is not working for you. Okay. So what is automatic machine learning? Okay, so here's kind of three bigger components of what, at least in my opinion, automatic machine learning is all about. So this is kind of representative of the whole data science pipeline. So there's a lot of things in terms of data preparation that could possibly be automated, so that's one component. Then, I mean, we could sort of debate what the goal of automatic machine learning is, but let's assume that the goal is to sort of get the best model with the least amount of effort, I guess, or, you know, the most amount of automation. So part of that would involve generating a whole bunch of different models and either choosing, you know, the best one of that set or taking all of the models or some subset of the models and ensembling them together.

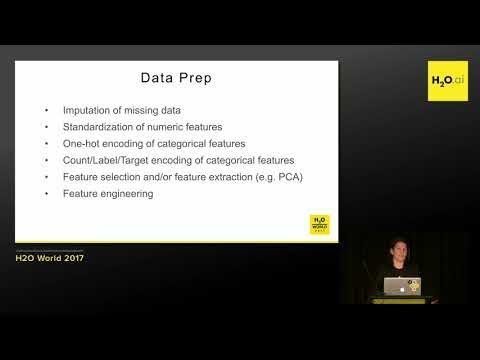

Data Prep

So ensembles are sort of the third piece: once you have all these models, how do you get the best model? Usually that's to take some combination of those models together to get better performance. So those at a high level are some of the features of autoML. So let's talk about that more specifically. So in terms of data prep, there's a lot of different things that we can do. And some of those things can be automated. So some simple things, the first three bullets here, are stuff that we already do in H2O automatically, not necessarily in autoML, but in all of our algorithms. So there's automatic imputation of missing data, so if you have missing data we will fill those data points in for you when that's required.

Another thing is the standardization of numeric features. So if the algorithm requires that we will do that as well, and then for categorical features, we will automatically one-hot encode the categorical features. Again, only if that's required. So a lot of this, actually those first three things are not at all required by tree-based methods. So we can kind of skip over that, this is more for GLMs and deep learning. So that's already done in H2O fairly easily. So what are some more things that we could do that maybe we could automate a little bit? So one thing and this is what you've learned about a little bit in the driverless presentation right before this one is, this is all kind of getting into the more sophisticated feature engineering stuff.

So there's, there's something called either count or label or target encoding of categorical features. So that's just another way to gain more value out of these categorical features other than just one-hot encoding them. So that's something that you'll see a lot of the Kaggle grandmasters do pretty standard in their pipeline. So that's one thing that you could do. So then there's some algorithms that benefit from doing feature selections, so that's another thing that you could do in the data prep category. And then a counterpart of that is feature extraction. So the difference between those is feature selection, you're just choosing some subset of the features and feature extraction is taking some set of features, or maybe all the features and extracting, or doing some projection of those features to create new ones.

So an example of that would be the PCA algorithm or any other dimensionality reduction algorithms. So you take your original features and you just generate new ones from that. And then feature engineering, which could kind of encompass the last two bullet points at least, that could be a lot of different things which could be very manual or could be automated to some degree. So things like maybe bringing in other data sources and joining them with your data, that might be a little bit more of a manual process though, there's aspects that we could probably automate in there. And then all of the stuff that you learned about in driverless, that's basically all automatic feature engineering. So these are all aspects of what we would call the data preparation part of automatic machine learning. Okay. And so I'm speaking generally right now, so I'm saying in general, what is automatic machine learning?
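To make the target encoding idea mentioned above concrete, here is a minimal sketch in plain Python. It is not H2O's implementation; the function name `target_encode` and the toy data are made up for illustration. It simply replaces each level of a categorical column with the mean of the response for that level.

```python
from collections import defaultdict


def target_encode(categories, targets):
    """Replace each category level with the mean target value for that level."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for level, y in zip(categories, targets):
        sums[level] += y
        counts[level] += 1
    means = {level: sums[level] / counts[level] for level in sums}
    # Return the encoded column and the lookup table used to build it.
    return [means[level] for level in categories], means


# Toy data (made up for illustration): a categorical column and a 0/1 response.
colors = ["red", "blue", "red", "green", "blue", "red"]
backordered = [1, 0, 1, 0, 1, 0]

encoded, level_means = target_encode(colors, backordered)
print(level_means)
```

In practice the level means are usually computed out-of-fold (on data that excludes the row being encoded) so that the response does not leak into the feature; this sketch skips that step to stay short.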

H2O Automatic Machine Learning

And then we'll go into more specifically, what is H2O's automatic machine learning. Okay. So the next part, so for model generation, there's a couple different ways that you can generate a bunch of different models. So the most straightforward approach would be something called a grid search or a Cartesian grid search. So what that is, basically, I mean, a lot of you might already know this, but just for, you know, verbosity, I guess I'll explain what that is. So with a grid search, it just means, let's say you have a random forest that you want to train and you wanna get the best random forest. So you might select, let's say, three different hyperparameters of the random forest. Maybe you would look at max depth, so the depth of the tree, column sample rate, and row sample rate.

So those three parameters. Let's try to basically get the best model just by tweaking those parameter values. So what you do in a Cartesian grid search is, as the user or the person doing the grid search, you have to actually specify for each one of those parameters some range of values that you wanna look over. So let's say, you know, for max depth, you might say 5, 10, and 20, those are three values you could look at. Column sample rate, row sample rate, you could say, you know, 60%, 70%, 80%, those are all values that you could choose. And then the grid search takes all the combinations of those different values and trains a model for each one of those combinations. And in a two-dimensional sense, you could think column sample rate and row sample rate, and all the combinations of those, that's what the grid is.
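The grid from this example can be enumerated in a few lines of Python. This is only a sketch of the combinatorics: the dictionary keys are illustrative labels for the three hyperparameters named in the talk, not necessarily the exact argument names of any H2O algorithm, and no models are trained here.

```python
from itertools import product

# The three hyperparameters and candidate values from the example above.
hyper_params = {
    "max_depth": [5, 10, 20],
    "col_sample_rate": [0.6, 0.7, 0.8],
    "row_sample_rate": [0.6, 0.7, 0.8],
}

names = list(hyper_params)
# One dict per combination; a Cartesian grid search trains one model for each.
grid = [dict(zip(names, values)) for values in product(*hyper_params.values())]

print(len(grid))  # 3 * 3 * 3 combinations
print(grid[0])
```

Adding a fourth hyperparameter with three values would triple the number of models again, which is why the full grid becomes expensive so quickly.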

So that's a great approach, but the problem with that is that it takes a long time. You'll be guaranteed to get the best model of those combinations of parameters, but it might not be the best model in general. But the main drawback of regular grid search is that it takes too long. So the solution to that, well, maybe not the solution, but a way to at least reduce the computation time, is to do that same process but to randomly sample from that hyperparameter space and train models that way. So there's papers about this, showing that basically with random search you can get to a good model much more quickly, and it's just sort of a shortcut to regular grid search. So that's a useful technique.
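Random search over the same space can be sketched as sampling a fixed budget of combinations instead of visiting all of them. The function name `random_grid`, the `max_models` budget, and the seed are assumptions for this illustration; the hyperparameter labels are again just placeholders.

```python
import random
from itertools import product

hyper_params = {
    "max_depth": [5, 10, 20],
    "col_sample_rate": [0.6, 0.7, 0.8],
    "row_sample_rate": [0.6, 0.7, 0.8],
}


def random_grid(hyper_params, max_models, seed):
    """Sample up to max_models distinct combinations from the full grid."""
    names = list(hyper_params)
    all_combos = [dict(zip(names, v)) for v in product(*hyper_params.values())]
    rng = random.Random(seed)  # seeded so the sample is reproducible
    return rng.sample(all_combos, min(max_models, len(all_combos)))


# Train only 5 of the 27 possible models.
sampled = random_grid(hyper_params, max_models=5, seed=1)
for combo in sampled:
    print(combo)
```

Enumerating the whole grid before sampling is fine for a small space like this one; for very large or continuous spaces you would instead draw each hyperparameter value independently.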

And then so the individual models, we can also tune them a little bit as well. So even within a grid search, we might have models that have some sort of iterative component to their training. So let's say the GBM is an example of an algorithm where there's iterations of the GBM training. So you train 1 tree, then 2 trees, then 3, 4, 5. So number of trees is sort of the iteration that you go over. And there's a term that we call early stopping, which is just sort of a fancy word for tuning a hyperparameter that has to do with the iteration of the algorithm. So you might say for the GBM, let's say your maximum number of trees would be 20,000, but then that might not be the optimal number of trees. You might have severely overfit your model if you train all 20,000 trees. So what you can do is take a validation set and evaluate, each time you train a tree, you know, how does it do on that validation set? And then when the performance starts to either stabilize or decrease, then you want to cut off the training so it doesn't start to overfit. So that's what's called early stopping. So even within the grid search, you might have also a little bit more tuning to do on the individual models.

And then the last thing that I'll mention is something called Bayesian hyperparameter optimization, and what that is, is basically a smarter way to get to the best model. So in that space, generally, you have what's called a surrogate model, and that model actually is a model that represents how the hyperparameters relate to the performance.

So what you do there is you train a model with some hyperparameters and some values, and then you record what the performance is, and then you hand off all that data to the surrogate model. And then it will kind of crunch on that and just give you a new set of parameters to try that it thinks are gonna be useful. So then you train your model, you report back the performance, and then that model then gives you another set and you go on like that. So it's sort of a guided search. The one drawback to those techniques is that it's an iterative process. So you can't just parallelize this massively and just get the best model quickly. You have to wait for the model to work. So anyway, these are a bunch of different ways to not only generate models but also to tune them and to get good models.
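The early stopping rule described earlier (watch a validation metric as trees are added, and stop once it stabilizes or gets worse) can be sketched as below. This is a simplified stand-in rather than H2O's exact stopping logic: the function name, the `patience` and `tolerance` parameters, and the validation scores are all made up, and the metric is assumed to be one where higher is better (such as AUC).

```python
def early_stopping_round(validation_scores, patience, tolerance=0.0):
    """Return the 1-based round with the best validation score, scanning
    rounds in order and giving up after `patience` rounds without an
    improvement larger than `tolerance`. Higher scores are better."""
    best_score = float("-inf")
    best_round = 0
    for round_number, score in enumerate(validation_scores, start=1):
        if score > best_score + tolerance:
            best_score = score
            best_round = round_number
        elif round_number - best_round >= patience:
            break  # no improvement for `patience` rounds: stop training
    return best_round


# Made-up validation AUC after each additional tree.
auc_per_tree = [0.70, 0.75, 0.78, 0.79, 0.79, 0.785, 0.78, 0.77]
trees_to_keep = early_stopping_round(auc_per_tree, patience=3)
print(trees_to_keep)
```

With these numbers the score peaks at the fourth tree, and training is cut off three rounds later instead of running to the maximum number of trees.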

Ensembling

So, all right, so now I'm gonna talk about the last topic, ensembling. So there's a lot of different ways that you can ensemble together. So the simplest thing to do is just to average the models together. That can be a good approach if your models are all fairly similar in performance, but if you're averaging and you have one model that's really bad, that's gonna bring the performance down. So you don't wanna do that if that's the case. So then there's another type of ensemble called stacked ensembles or stacking or super learning, those are all the same thing. And that's what we do in H2O, we have the stacked ensemble method, and I will explain a little bit more about that on the next slide. And then the last type of ensembling just to mention is something called ensemble selection, which is a greedy approach to choosing which models to put in your ensemble.

You basically take a model, put it in, average among the other models in there, if the result is, you know, worse than you had before you throw the model out, and then you try again and you just keep adding the models until there's no more models to add. Okay. So we'll talk about the random grid and stacked ensembles approach. All right. So stacked ensembles, this will be sort of the only, the next two slides, the only like algorithm slides in the talk. So if this is of interest to you pay attention, if not just look at the image. Okay. So there's sort of the superhero image that I like to put when I talk about ensembles, which represents kind of what's happening there. Each algorithm in the ensemble has some sort of specialty or skill set, and they're all sort of different and good at different things.

So if you bring them together, they can form like a really good team. So with stacked ensembles, your job is to, one, specify which models will go in the ensemble, so that's kind of an art in itself. The easy thing is just to put all the models in there, you know, all the models that you can think of, but even then you have to choose, like for each model. So let's say you wanna put a random forest in there, what are the hyperparameters gonna be, or do you just put in a whole bunch of things? So, anyway, there's some thought that has to go into constructing stacked ensembles. So anyway, the main setup is you have, let's say, L base learners. Base learners is sort of the term for the models that go in the ensemble. And then there's a second algorithm that you call the metalearner. And so the metalearner is what's used to train the stacked ensemble, and this can be any algorithm really, and I'll go into that on the next slide. And so the first and main thing in the stacked ensemble process, this is the part that takes all the computation time, is just performing K-fold cross-validation on all those models. And you'll see on the next slide why we do that.

Okay. So one thing that's nice about cross-validation is you get a robust estimate of performance. Compared to, let's say, just having a test set and evaluating on the test set, cross-validated performance is a more stable and good way to evaluate the performance of the models. But the other thing that you get is these cross-validated predicted values. So if you do the K-fold process, you do it K times, you end up getting, if N is the number of data points in your original dataset, N predicted values or N predictions. So why is that useful? So what we can use those for is actually training a model on those predictions, and that's what the metalearner does. And so what the metalearner is designed to do is to learn how to combine the output from the base learners in an optimal way.

The term stacking, you know, maybe comes from taking these cross-validated predictions and squishing them together into this new matrix, which actually becomes the training data for the metalearner. So the features of that training set are the predictions from the base learners. One thing that sometimes people say about ensembles is that they take a long time to train. And so one thing I want to point out is that if you're already, as part of your data science workflow, going to go through the effort of cross-validating a bunch of models to select one, you've already done 98% of the work. So to train the metalearner on top of that output is actually very cheap in comparison. So my philosophy is that if you're already doing cross-validation, you're just one little step away from an ensemble, so you should go ahead and do it.
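The stacking procedure described above can be sketched end to end in plain Python. Everything here is a toy under stated assumptions: the two base learners (`fit_mean`, `fit_line`), the synthetic data, and the metalearner (a search over one convex weight) are invented for illustration and are not what H2O uses. The point is the shape of the process: K-fold cross-validate each of the L base learners, collect the N out-of-fold predictions from each into an N x L matrix, and train the metalearner on that matrix.

```python
def fit_mean(xs, ys):
    """Base learner 1: always predict the training mean (ignores x)."""
    mean_y = sum(ys) / len(ys)
    return lambda x: mean_y


def fit_line(xs, ys):
    """Base learner 2: one-dimensional least-squares line."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx if sxx else 0.0
    return lambda x: my + slope * (x - mx)


def cross_val_predict(fit, xs, ys, k):
    """K-fold CV: each row is predicted by a model that never saw it."""
    n = len(xs)
    preds = [None] * n
    for fold in range(k):
        train_idx = [i for i in range(n) if i % k != fold]
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        for i in range(n):
            if i % k == fold:
                preds[i] = model(xs[i])
    return preds


def fit_metalearner(level_one, ys, steps=100):
    """Toy metalearner for two base learners: choose the weight w in [0, 1]
    on the first learner that minimizes squared error of the blend."""
    def sse(w):
        return sum((w * a + (1 - w) * b - y) ** 2 for (a, b), y in zip(level_one, ys))
    return min((s / steps for s in range(steps + 1)), key=sse)


# Synthetic data (made up): y is an exact linear function of x.
xs = [float(i) for i in range(12)]
ys = [2.0 * x + 1.0 for x in xs]

base_learners = [fit_mean, fit_line]
cv_preds = [cross_val_predict(fit, xs, ys, k=3) for fit in base_learners]
level_one = list(zip(*cv_preds))  # N rows, L columns: metalearner training data
weight_on_mean = fit_metalearner(level_one, ys)

# Final ensemble: refit base learners on all data, combine with learned weight.
final_models = [fit(xs, ys) for fit in base_learners]


def ensemble_predict(x):
    mean_pred, line_pred = (model(x) for model in final_models)
    return weight_on_mean * mean_pred + (1 - weight_on_mean) * line_pred


print(len(level_one), len(level_one[0]), weight_on_mean)
```

Note that the expensive part is `cross_val_predict`, which trains K models per base learner; fitting the metalearner on the resulting N x L matrix is cheap by comparison, which is the point made in the talk.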

Why Combine Random Grid Search with Stacking?

Okay. So then why do we combine random grid search with stacking? So I said that one of the challenges of ensembles is trying to pick what models go in there. So one way to generate a whole bunch of models is a grid search. And one thing about stacking is that the stacked ensemble does well when you have two things, one that the individual models are strong, and two when those models make uncorrelated errors. So basically what that means is you want a diverse set of models in your ensemble. And so one way to get a diverse sort of randomized set of models is to do a random grid search and take all those models and stack them. And you can do that for the random forest, the GBM, the GLM, the deep neural net, train random grids on all those and combine them together and stack them.

What is H2O AutoML?

So that's the motivation for why we apply random grid search to stacking. Okay. So now we're just gonna talk about what is H2O autoML? So you probably all know a little bit about H2O by now, but this is essentially the high-level overview: H2O has a bunch of machine learning algorithms. So when we're talking about building an autoML system, one prerequisite for that is that you have a bunch of algorithms, so H2O or any machine learning platform that has a bunch of algorithms is a perfect thing to use to build autoML on top of. So anyway, this is, at a high level, what H2O is all about, if you're not familiar. We'll skip over that though. So, all right. As I mentioned, H2O 3.16 is our newest release, and this is the second release in which we've had autoML.

Here's basically what we have so far. So we have the standard data processing steps that we have with all H2O algorithms: imputation, one-hot encoding, and standardization. And then we have a random grid search over a custom hyperparameter space. So just like with Cartesian grid search, with random grid search it's still up to you to define what that hyperparameter space is. So you have to define for all of these parameters what are acceptable values to look over. And so if you're not an expert in machine learning or data science, you might not know exactly what to do there. So we sort of came up with what we think are good hyperparameter ranges to look over. And then we put those into the random grid search. We also individually tune the models with early stopping.

We also do early stopping on the grid itself. And so here's the algorithms that we have right now in H2O autoML. So GBMs, random forests, deep neural nets, and GLMs. And soon we'll also have XGBoost in there. And then when we're done with training all those models, we do multiple stacked ensembles. So in 3.16, we started doing multiple ensembles instead of just the one. And we'll continue to do that. And one ensemble is an ensemble of everything that's in there. And the second is what we're calling the "Best of Family" ensemble, which basically takes the best random forest, best GBM, best deep neural net, best GLM and ensembles just those together. We treat random forest and an extremely randomized forest as two separate algorithms.

So there's actually a total of five algorithms in that ensemble, and the reason for doing that is that it's optimized for production use. So if you want to deploy an ensemble, it might be better to deploy one that has a small number of models rather than like a thousand models, cause you have to make predictions on each one of those models to get the ensemble prediction. And what autoML returns is a leaderboard, which ranks all the models in the run.

Preview of Code

Before we go into the hands-on demo, I'll just give you a preview of what the code looks like. So this is in R. There's basically one function, it's called h2o.automl(). And you import data just like you would and you basically need three things.

One, what is your training set? So that's the training frame argument. Two, what is the response column? So what are you trying to predict? And, you know, there's also an x argument, which, if you wanna take a subset of your features, you have to define; otherwise, it will just use all your features. And then you need to either give it some sort of maximum run time or maximum number of models. So in this case, we're saying run this for 10 minutes and then stop and give me the leaderboard. Okay. So that's what it looks like in R, and this is in Python. Same thing: you define the autoML object, tell it to run for 600 seconds, or 10 minutes, and then you train and then you get the leaderboard. So it's fairly straightforward and you don't really have to know anything to use it basically.
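The slides are not reproduced in this transcript, so the following is a reconstruction of roughly what the Python version looks like, not the speaker's exact code. It uses the `H2OAutoML` class from the `h2o` Python module; the wrapper function `run_automl`, its argument names, and the file path in the usage comment are hypothetical. Converting the response with `asfactor()` assumes a classification problem.

```python
def run_automl(train_path, response, max_runtime_secs=600, seed=1):
    """Train H2O AutoML on a CSV file and return the leaderboard.

    Requires the `h2o` package (and Java); imported here so this sketch
    can be loaded and inspected without h2o installed.
    """
    import h2o
    from h2o.automl import H2OAutoML

    h2o.init()  # connect to a running cluster, or start a local one
    train = h2o.import_file(train_path)

    # Assumption: classification, so the response must be categorical.
    train[response] = train[response].asfactor()

    # Run for at most 10 minutes (600 seconds) by default.
    aml = H2OAutoML(max_runtime_secs=max_runtime_secs, seed=seed)
    # x is omitted, so all columns other than the response are used.
    aml.train(y=response, training_frame=train)
    return aml.leaderboard


# Hypothetical usage (file name and column name are placeholders):
# leaderboard = run_automl("train.csv", "response")
# print(leaderboard)
```

Instead of a time limit, the search can be bounded by a maximum number of models, as mentioned in the talk; check the AutoML user guide for the exact argument name in your H2O version.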

So you just let it go. And I have been talking to some people that have been using this, and I've heard some people say they just use it and then just take whatever model's done at the end and go with it. And I've heard other people say they run this a whole bunch, then they pick a model and then start to, like, hand-tune it more on their own. So you can use it either as a tool to accelerate your search or just a tool to completely automate. And then this is what it looks like in Flow. It's basically just one little interface. Tell it which training frame you need, the response column. There's some other optional things that you can specify, and click build and then it will go. And so this is kind of what I mean by a leaderboard.

So at the end, you get this object, a data frame of some sort, and it's ranking all the models. By default, it'll rank them based on their cross-validated performance. And for each task, so we have regression, binary classification, or multi-class classification. We define some default metric for those. So in binary classification, the default metric we say is AUC, so it will return ranked by AUC. You can of course re-sort however you'd like, and in the next version you'll just be able to specify at the beginning which metric you'd like to optimize within the search. So for all the early stopping, you can say I wanna optimize based on log loss and it will do the early stopping based on that. And it will return the leaderboard based on the metric that you choose. So this is a typical leaderboard, so not always, but most of the time the stacked ensembles will be on the top and usually the all models. So there, there's two different names here. One's called "All models", the other one's called "Best of family". And you can see that the performance is similar. So yeah, you basically just choose whichever one you like, if you care only about performance, you might choose the all models. If you care more about productionizing this and having it be like the most efficient ensemble, maybe choose the second one down. Or if you want just a single model, you just take the GBM, you know, you choose what you want to do at the end.

H2O3 Roadmap

Okay. So I'm just quickly gonna mention a few things about our H2O3 roadmap. So alright. So this is basically some things that we have planned for the next two quarters. So Q1, we have a new algorithm, Cox proportional hazards. For GLM we're adding ordinal regression, for GBM a quasibinomial distribution. We're going to spend more time on NLP, so things like TF-IDF. And constantly, as part of the autoML push, we're improving all the stacked ensemble functionality. So there's a bunch of things, but one of them is defining a custom metalearner. Right now we have that hard-coded to one that we think is good, but you might wanna experiment with that. So autoML new ensembles: coming up with smarter ways to group the models together and ensemble them. We're adding XGBoost to autoML.

And then for H2O3, adding distributed XGBoost and factorization machines. And this link down here, this is a link to all the autoML improvement tickets. So this includes stuff that has to do with stacking or other peripheral things that help autoML. So if you wanna see the whole list of things that we have planned, check that out and feel free to give us your feedback, cause this is all, you know, very experimental what we're doing here. It's not like there's a default autoML algorithm and we're just implementing it at scale. We're also inventing new ways of doing things. And part of how we do that is getting feedback from people and seeing what works. You know, maybe you have some interesting data set that we haven't tried out and it has different performance than you would expect. Things like that would be good for us.

Okay. And this is just a slide, we'll put all these slides online, but if you're just looking for a quick slide with all the links that I think are important, this would be a good time to take a picture, but again, you can also find these slides online. Actually, you can find them at the slide decks URL and we'll put all those up there. Okay. And if you have questions about H2O, Stack Overflow is the best place for code questions. The Google group is good if you have like more specific questions or roadmap questions. And then Gitter is kind of like a chat room; the focus there is to bring in new people from the community to do development and sort of use that as an open source collaboration room.

Hands-on Demo

But people also go in there and ask questions cause we're usually there. Okay. So let's move on to the hands-on. How do we do this? Okay. So Qwiklabs. Let's see if this works, I don't know. I'm gonna have to get your opinion in the audience if you're having issues with this. But basically, if you were in the last session, you've probably already set up a Qwiklabs account, but if not, so let me preface this by saying Qwiklabs is optional. On the next slide, I will show you a link to where you can get the code if you wanna just run it on your own machine. So Qwiklabs is basically just a browser-based environment with RStudio and with IPython or Jupyter notebooks, so that you can run the code on the cloud and you don't have to worry about installing anything. So these are the instructions. I'll give you guys a few minutes maybe to get that set up and maybe I'll show you how to do that. So if we click on this, that should bring something like this up. And if you don't have an account, you sign up, you create one. So hopefully this works.

Okay. So there's a little, let me make this bigger. Okay. So once you've logged in, this is the only lab that we're showing right now. So Introduction to autoML, and you just click on it. And what we'll do is you'll see this green button, you can start there and it actually takes a few minutes, I think, to get it all set up. So while we're waiting for that, let's just show people where else they can go to get the code. So this URL here will bring you to our GitHub tutorials repo that has all the same notebooks. So if you're doing that option, then click on that. Anyone else need to get that link? Okay. And then that just brings you to our GitHub.

So this is the H2O tutorials repo. And there's an H2O World 2017 folder and an autoML subfolder. And the readme describes the two parts of this tutorial. So part one is binary classification. So we'll show you how to do autoML in that case. And there's different things that we highlight in the two tutorials. So this one is meant to be more straightforward, and then the next one is regression, and we'll try out some of the more, not advanced features, but other features of autoML. This will specify all the things that we're gonna do. Okay.

So I think this is an old window. Nope. All right. So let's bring up, I think I lost my window. Here we go. Okay. So my lab is still setting up, so I'm gonna give it a few more minutes. And while this is getting started, this actually takes at least two to three minutes to get it all set up. If anyone has any questions, I think now is a good time to answer a few questions. So what's that? Slido questions? Oh, does someone know how to bring up the Slido thing at all? Cause I don't know if I have a link to that set. Okay. All right. Let's see.

Okay. So let me skip to the second one. "Currently, we are not able to use the balance_classes parameter with any kind of grid search. Is there any way to deal with that?" I think they're asking probably about doing that with autoML. If that's not enabled for regular grid search, that probably should be added, but I think in autoML, somebody has already requested exposing the balance_classes parameter. So that will be added in the next release, but we're also going to do some of that automatically. So if you just give us your data, we're gonna try to balance the classes the way that we think is good. Let's see.

So Qwiklabs isn't working for me. So I'm gonna use this other instance, but essentially if anyone is having luck with this, then what you'll see on the right-hand side is, once the lab boots up, you'll see an IP address to go to for R, or a separate IP address to go to for Python. So depending on what you want to use. I'll also show how to do this in Flow. So I'm just gonna demonstrate that, there's not a place to go in Qwiklabs for that. So yeah, I think what I'll do, just because of the way that Jupyter notebooks are nicely formatted, I'm gonna go through the Jupyter notebook, which is Python, and maybe switch over to R a little bit as well. And if you get asked for any passwords, that should be H2O, so just type in H2O. I'll also log in here, see what happens.

Okay. So if you're doing the Jupyter version, you'll see this sort of interface here, and we're gonna go to Python and autoML, and that should pop up. So part one is the binary classification demo. So we'll click on autoML binary classification, product backorders, and we'll bring that up. Okay. We'll wait a little bit, and then let me show you a trick if you're using the code locally here. So this H2O tutorials repo, one thing you can do is clone that repo, but it's really large because there's like data sets and other things in there. So if you wanna just download this one file, then if you go to Python, for example, and click on this here, you can right-click this raw button. Oops. That's not actually what I meant to do.

Sorry. So control-click. And if you do "Save Link As", it'll actually just save that single file to your desktop. So that's one option to get the file, or you can just follow along visually. So let's see if this is working. Okay. So this is actually running in an environment that is either part of Qwiklabs, or I'm not sure. Okay. So that's still not working. All right. So let's go through this. All right.

Binary Classification

So this is a binary classification demo. So the goal here is to do a binary prediction, and the data set, well, we'll get to the data set. Let's do the first thing, which is to start up the H2O cluster. So to execute cells in a Jupyter notebook, if you're not familiar, you just do Shift+Enter, so let's start that up. So when you import h2o and then do an h2o.init(), what H2O first does is look to see if there's already a cluster running that it can connect to at whatever IP address is in there.

So if you don't specify an IP address, it will use localhost. So this is on some machine elsewhere, but what it's doing is it's checking. It actually doesn't take this long in real life, so this must be just a cloud issue, but this will pop up in a few seconds, hopefully. Okay. So generally that's, like, instant. So what we see here is it just prints out some stats about the cluster. So we've got this cluster that was started 26 seconds ago. This is the version; this version is four days old. We didn't specify the amount of RAM for the cluster, but right now we have 14 gigs. We have 16 cores. Yeah, so this is just some metadata about the cluster. So now that we have that running, let's load a binary classification data set.

So there's this data set that is about predicting product backorders. So basically the idea is to know in advance if a product is going to be back-ordered. And there's all sorts of metadata about the products having to do with, like, inventory, current inventory, transit times, demand, forecasts, and prior sales. So these are just some of the features that are included. And then the response is, you know, back-ordered or not back-ordered. So we're gonna use this to load the data. This is just a little bit of extra logic that says, look for the file locally, and if it's not there, go grab it off of GitHub. So it's done that. So now we've just used h2o.import_file, which is our standard way to get data into H2O. We have a CSV file here, but as you might be aware, you can load a lot of different types of data into H2O from a lot of different sources.
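As a rough sketch of the startup and loading steps just described, something like the following could be used when following along locally. The helper names, the file path, and the URL in the usage comment are placeholders for illustration, not the notebook's actual code or locations.

```python
import os


def resolve_data_path(local_path, remote_url):
    """Return local_path if it exists on disk, otherwise fall back to remote_url."""
    return local_path if os.path.exists(local_path) else remote_url


def load_data(local_path, remote_url):
    """Start (or connect to) an H2O cluster and load a CSV into an H2OFrame."""
    # Imported lazily so resolve_data_path() works even without h2o installed.
    import h2o

    # With no ip argument, h2o.init() checks localhost for a running cluster
    # and starts a new one if it does not find any.
    h2o.init()
    return h2o.import_file(resolve_data_path(local_path, remote_url))


# Hypothetical usage; both arguments are placeholders:
# df = load_data("data/product_backorders.csv",
#                "https://example.com/product_backorders.csv")
```

Keeping the path logic in its own small function means it can be checked without a cluster running.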

So CSV is just easy to work with, so we go with that. So for classification problems in H2O, the way that H2O knows that you wanna do classification is by looking at the encoding of the outcome, so the response column. So if it's a categorical column, it knows you're trying to do classification. So sometimes you might have a data set where it's a binary classification problem, but the way that it's encoded is with zeros and ones. And so then if you load that into H2O naively, without telling it anything, it will just assume that that's numeric and that it should do a regression. So if you ever find that you thought you were training a classification model, but somehow you got a regression model, that's the reason why. So we're just gonna make sure that the response column is already encoded as a categorical.

So we're gonna take df, which is our data frame, and do .describe(), and this will show us all the columns here. So here's, like, inventory, lead time, blah, blah, blah, forecast, sales, and other different interesting things about products. So the response column is here, went_on_backorder. And if you look, you can see it's already enum. Enum is the Java name for categorical. So enum, factor, categorical, these all mean the same thing. So if you see enum, you're on the right track, and it's already encoded as enum because the way that it looks in the file is a yes or no. So it's a string, and H2O knows what that means.
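A minimal sketch of that check, assuming `df` is the H2OFrame loaded earlier: the two helper functions are made up for illustration, while `types` and `asfactor()` are the H2OFrame members being relied on.

```python
def is_categorical(column_type):
    """H2O reports categorical columns with the type string 'enum'."""
    return column_type == "enum"


def ensure_categorical(frame, column):
    """Convert `column` of an H2OFrame to a factor unless it already is one."""
    # frame.types maps each column name to a type string such as 'enum' or 'int'.
    if not is_categorical(frame.types[column]):
        frame[column] = frame[column].asfactor()
    return frame


# Hypothetical usage on the frame from the demo:
# df.describe()
# df = ensure_categorical(df, "went_on_backorder")
```

For a 0/1 response column this conversion is what switches H2O from regression to classification.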

Okay. And this is just showing you how to convert it to a factor if it's not already one. So the way that we specify our model is we say what the response column is. So we call that y in H2O, and then x would be the predictor columns. So a lot of times, the way your data's formatted, you just have one response column and everything else can be used for prediction. But sometimes you might have an ID column in there, or some other columns that you don't want to use in your prediction. So you can remove those from the list. So in this example, we're gonna specify both y, the response, and x, the set of predictor columns, because we have this SKU, which is a product ID, a unique identifier. We don't want that in our predictions.

Probably if you leave it in, most algorithms will sort of learn to ignore it, and it probably won't cause you any issues, but just to be principled, we will remove it. So let's define our y, and here I'm just saying x is all the columns, and then I'm just removing the y and the SKU. Okay. So now what we're gonna do, let me make this a little bit bigger, see if that's too big. Okay. So we're gonna set up autoML in this first line. That doesn't do anything; that just sets it up. And so we're gonna say train for max models of 10. And when we say 10, we actually mean the base models. So that does not include the ensembles at the end. So 10 models, and then there'll be two ensembles at the end, so there'll be a total of 12 models in our leaderboard.
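The column selection and setup described above might look roughly like this. `predictor_columns` and `run_automl` are illustrative helper names, and the column names in the usage comment are assumed to match the demo data set; `H2OAutoML`, `max_models`, `seed`, and `train` come from the h2o Python module.

```python
def predictor_columns(columns, response, exclude=()):
    """Return every column except the response and anything listed in `exclude`."""
    dropped = {response, *exclude}
    return [c for c in columns if c not in dropped]


def run_automl(frame, response, exclude=(), max_models=10, seed=1):
    """Configure an AutoML run and train it on `frame`."""
    # Imported lazily so predictor_columns() works without h2o installed.
    from h2o.automl import H2OAutoML

    x = predictor_columns(frame.columns, response, exclude)
    # max_models counts base models only; the stacked ensembles come on top.
    aml = H2OAutoML(max_models=max_models, seed=seed)  # only configures the run
    aml.train(x=x, y=response, training_frame=frame)   # this starts the training
    return aml


# Hypothetical usage with the backorders frame:
# aml = run_automl(df, "went_on_backorder", exclude=["sku"])
```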

And if you want reproducibility, you can get that to some degree by setting the seed. I'm not gonna go into too much detail about deep learning and why our particular implementation is not reproducible on multiple cores, but you'll notice that the deep learning model might change from run to run, while all the other models should stay consistent. So let's do that. So we set it up and then do the .train and tell it which data we want to train on. And let's just run that. And like most of our algorithms, we have progress bars that visually show you an estimate of how much time is left. So this will probably take like a minute or so. So if anyone has a quick question, I know we don't really have the Slido thing. Yeah.

How can you go home and continue this? Yeah. Oh, so I think what you're asking is, how do you just do this outside of this session? So you can get the code that I'm running in our GitHub repo, which I mentioned earlier, and then you just need to have H2O installed on your machine. So if you're a Python user, you can pip install; that's an easy way to do it. For R, you can get it from CRAN. You can also always get the latest version on our website. So h2o.ai/download is another place; we have all the versions there, and they're always the latest. So we have stable releases once every little while. And then every night we actually release H2O as well, so we have nightly releases. So if there's new stuff, and especially with autoML we're always adding new stuff, you could check out the nightly releases, and all the documentation is also versioned. So if we add something new in one of the nightlies and you go to the autoML user guide page, you should see something new pop up there. Okay. So let's go back. Still training. So one more question. Yeah.

Okay, so he's asking, how do you know what the hyperparameters were for the models that you got? So, one thing is, once you get the leaderboard, you'll see you have all the model IDs there. And so you just grab the model, and each model stores all of its parameters. So that's the easiest way. And okay, so we're done. So, yeah, you always have full insight into what your model is afterwards. Okay. So we've trained now. Let's take a look at the leaderboard. Okay. So in Python, it's stored here.

So let's make a new data frame called lb, and then let's take a look at the top of the lb object. Okay. So here we go. This is what our results are. So the top model here got an AUC of .947, with one right below it. So this is something that can happen. I should say, GBMs are often near the top of the leaderboard. It depends on your data, of course. So if you have sort of dense numeric data, GBMs do pretty well. If you have really wide data or sparse data, maybe, you know, something like deep learning or GLM is better. So one thing that people have asked is, if they're a little bit knowledgeable about which algorithms kind of fit with which types of data, then what they'd like to be able to do is turn off certain things.

So I had somebody ask me, "I have sparse text data, I don't want to use any tree-based models on it, how can I turn that off in autoML?" And right now you can't in this version, but we will add the ability to switch off different model types so that you can have a little bit more control over what you're doing. So if you know in advance that you don't want any tree models, you can turn all those off. And if you, you know, conversely don't want deep learning or GLMs, you could turn those off. That's not implemented right now, but we'll be adding that. So anyway, this is a leaderboard, an example leaderboard. Okay. And we just printed out the top of that. If you wanna see the whole thing, this is how you do that.

The head method has a rows argument. You can just tell it how many rows you want to print out. So this is the whole thing here. So one thing you'll notice is, way at the bottom, deep learning is not doing so well. So we need to pay a little bit more attention to what we're doing with the different deep learning models in here. Another reason that you only see one deep learning model instead of a whole bunch is that we've only done 10 models. So we didn't give it that much time to get going and search the whole space. So if you only give it just a little bit of time, it kind of tries to prioritize what it thinks is gonna be more useful, like GBMs. So if you were to run this for a few hours, you'd see a lot more diversity in the models, and you'd probably start to get better deep learning models here. And then GLM is usually at the bottom as well. I shouldn't say that in general deep learning is down there, but GLMs are generally far worse than the GBMs and random forest, though that also depends a lot on your data.
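Here is a small sketch of the two things mentioned above: printing the whole leaderboard and looking up the parameters a model ended up with. The helper names are made up; the sketch assumes `aml` is a trained `H2OAutoML` object and that `model.params` is a dict holding an "actual" value for each parameter name.

```python
def actual_params(params):
    """Reduce a model.params-style dict to {parameter name: actual value}."""
    return {name: values["actual"] for name, values in params.items()}


def show_leaderboard_and_leader_params(aml):
    """Print the full leaderboard and return the leader's actual parameters."""
    lb = aml.leaderboard
    # head() prints only the first few rows by default; rows=lb.nrows shows all.
    print(lb.head(rows=lb.nrows))
    return actual_params(aml.leader.params)


# Looking up any other model by the ID shown in the leaderboard (needs a cluster):
# import h2o
# model = h2o.get_model("<model_id copied from the leaderboard>")
# print(actual_params(model.params))
```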

Code To Look Inside Ensembles

Okay. So some people say, "I'm interested in ensembles, but I would like to sort of understand what's going on more", so I wrote up a little bit of code to show you what it looks like inside of an ensemble. So this is probably something that we will wrap up into a better utility function, but basically, I'm gonna ask the leaderboard for the ID of the ensemble, or one of the ensembles. And then I'm gonna grab that model and then get its metalearner and then ask, what is the variable importance for the metalearner? And what that will show you is how much each of the base learners is contributing to the ensemble. And that's kind of an interesting thing to see. So let's grab the metalearner. So if we just wanna look at numbers, we can do that, but it's a little bit nicer to see plots.
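The steps just listed could be sketched as follows. This is not the notebook's code: the helper names are invented, the "StackedEnsemble" ID prefix is an assumption about how AutoML names its ensembles, and the return type of `metalearner()` is assumed to differ between H2O releases, so both cases are handled.

```python
def first_stacked_ensemble(model_ids):
    """Return the first ID that names a stacked ensemble, or None if there is none."""
    return next((m for m in model_ids if m.startswith("StackedEnsemble")), None)


def ensemble_weights(aml):
    """Return the metalearner's standardized coefficients, one per base model."""
    import h2o  # lazy import; this part needs a running cluster

    ids_frame = aml.leaderboard["model_id"]
    rows = ids_frame.as_data_frame(use_pandas=False, header=False)
    ensemble = h2o.get_model(first_stacked_ensemble([row[0] for row in rows]))

    meta = ensemble.metalearner()
    # Assumption: some releases return a key dict here, others the model itself.
    if isinstance(meta, dict):
        meta = h2o.get_model(meta["name"])
    # For a GLM metalearner; meta.std_coef_plot() draws the same values as a plot.
    return meta.coef_norm()
```

A coefficient of zero means the ensemble is effectively ignoring that base model.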

So this will take a minute. It's doing something, but let me go switch over here. I'll cheat by showing you what it looks like over here. So it's a little bit hard to see the labels on the side, but I'll read them to you. So this says XRT, which stands for extremely randomized trees. So this is a variant of random forest; we're actually using the random forest algorithm in H2O, but turning on an extra randomness parameter that makes this basically a more random random forest. And the ensemble has decided that that one is actually very good and is helping a lot, so it's weighted the most. Next is a GBM and another GBM, a regular random forest, a few more GBMs, and you'll see towards the bottom we have deep learning and this other GBM model.

So for whatever reason, the ensemble decided, you know, it's not for whatever reason, it's based on math, that these are not good, and that we're just gonna ignore both of these. So this kind of gives you a representation of what's going on. In autoML right now, we're using a GLM for the metalearner with non-negative weights. So what you're seeing is actually the real coefficient magnitudes for each of these. So these can be interpreted directly. If you were to use a GBM or some other method for a metalearner, we also have variable importance for those, so you could print the same plot, but this shows you exactly the linear combination of these and how much is going in to generate that ensemble prediction. So that's kind of interesting, I think. Okay, so once you're done, if you wanna save the leader model... So one thing: if you decide that you want some other model on the leaderboard, you just look and see what the ID is, and you grab that model into memory, and then you save that model.

If you want to apply the save model function directly to the leader, then you can do that. So this is just how to save a binary version of this model. And then we also have the MOJO implemented for all of our algorithms. So you can save either binary, or if you wanna productionize this model at the end, you probably wanna get the MOJO as well. Okay. I only have a few minutes, so instead of running the whole extra part two of this, I'm just gonna point out a few things. I'm just gonna go back over to GitHub and we're gonna look at the regression demo, and the setup is the same, basically.
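As a sketch of the two saving options mentioned above, assuming `aml` is a trained `H2OAutoML` object: `save_leader` is an illustrative name, and whether a MOJO can be exported for a particular model type may depend on the H2O version in use.

```python
def save_leader(aml, directory):
    """Save the leader as a binary model and as a MOJO; return both file paths."""
    import h2o  # lazy import; requires a running cluster

    # Binary model: can be loaded back into a compatible H2O cluster later.
    binary_path = h2o.save_model(model=aml.leader, path=directory, force=True)
    # MOJO: a standalone scoring artifact aimed at production use.
    mojo_path = aml.leader.download_mojo(path=directory)
    return binary_path, mojo_path


# To save a different model from the leaderboard, fetch it by ID first:
# import h2o
# model = h2o.get_model("<model_id copied from the leaderboard>")
# h2o.save_model(model=model, path="./models", force=True)
```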

Power Output

So this time we're predicting power output from a power plant. So we're going to load the data, we're going to look at the data, and we're going to run autoML. One thing that we're showing in this demo is what to do if you want to score the leaderboard on some particular piece of data. So if you have your own test set or some other piece of data that you wanna score on, there's a leaderboard_frame argument, and you can pass in your own test set. So this demo actually goes into showing that version. And then it shows a second version where you don't use a leaderboard frame, and it will show that you actually get better performance if you don't use a leaderboard frame.

The reason for that is that you have to chop off a piece of your data to create that frame. So the first autoML run is only training on, like, 80% of the data and then using 20% for the leaderboard. But with cross-validation, we can just rank the leaderboard based on cross-validation metrics. We don't actually need to give up any data for scoring. So it's sort of up to you how you wanna do it, but you'll probably get the most use out of your data if you just give it a training frame and let it use all that data. So here's the second version, where I just use the entire data frame. And then here's the two leaderboards. You know, depending on what you care about, let's just look at the RMSE. We got 3.36, so that's in megawatts.
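The two versions being compared could be sketched like this. The function name and the project names are arbitrary, the 80/20 split and the 60-second budget follow the numbers mentioned in the talk, and the response column is left as a parameter rather than guessed.

```python
def compare_leaderboard_strategies(frame, response, max_runtime_secs=60, seed=1):
    """Run AutoML with a held-out leaderboard frame, then with cross-validation only."""
    from h2o.automl import H2OAutoML  # lazy import; requires a running cluster

    train, test = frame.split_frame(ratios=[0.8], seed=seed)

    # Version 1: train on 80% and rank the leaderboard on the held-out 20%.
    aml_holdout = H2OAutoML(max_runtime_secs=max_runtime_secs, seed=seed,
                            project_name="holdout_leaderboard")
    aml_holdout.train(y=response, training_frame=train, leaderboard_frame=test)

    # Version 2: train on all rows; the leaderboard is ranked by
    # cross-validation metrics, so no data is set aside for scoring.
    aml_cv = H2OAutoML(max_runtime_secs=max_runtime_secs, seed=seed,
                       project_name="cv_leaderboard")
    aml_cv.train(y=response, training_frame=frame)

    return aml_holdout.leaderboard, aml_cv.leaderboard
```

Note that the two leaderboards are scored on different data, so their metrics are only roughly comparable.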

If there's any energy people here. And then we did a little bit better on the second one; we got 3.23, which is a little bit smaller than 3.36. But the fun part about this is that this data set is from a publication in 2014, where someone wrote a paper, and their best model was able to get an RMSE of 3.787. So we've already beat their model by running this for 60 seconds. So you know, a fun activity to do is go out and download all the papers where people are reporting results and see if you can beat them with autoML in one line of code. So that could be fun. And then if you want, you can take the leader model and generate predictions, just like with any other model.
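For reference, RMSE is the square root of the mean squared difference between actual and predicted values, so it is in the same units as the response (megawatts here). A plain-Python version, plus a sketch of scoring the leader on a test frame, might look like this; `score_leader` is an illustrative name, and `aml` and `test_frame` are assumed to exist already.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error of two equal-length, non-empty sequences."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def score_leader(aml, test_frame):
    """Generate predictions with the leader and report its RMSE on test_frame."""
    preds = aml.leader.predict(test_frame)            # H2OFrame of predictions
    perf = aml.leader.model_performance(test_frame)   # metrics on that frame
    return preds, perf.rmse()
```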

It's just another H2O model. So we have a predict function, and then if you wanna evaluate the performance separately, you can also do that. All right. So that's all. And I think maybe we could put up the Slido thing again and try to just answer a few questions at the end. Okay.

That's a good question. Is there any way to utilize autoML with GPUs? So one thing is, when we add XGBoost to this, that will probably also enable you to use GPUs. But we also have the H2O4GPU project, and we might end up either doing a separate autoML for that project or bringing H2O4GPU inside of H2O and then making that part of autoML. So right now the focus is on H2O-3, which is primarily CPU based, but that is on our roadmap. We're very excited about GPUs. Okay. So there's a lot of questions, so we're not gonna be able to answer all of them now. So come and find me, or if you can't find me in person, send a question to our Google group called h2ostream, or find me on Twitter. If you publicly bug me about a question, I'll probably feel obligated to answer it. So anyway, thank you very much, and I hope you enjoyed learning about autoML.