Invoice 2 Vec Creating AI to Read Documents - Mark Landry - H2O AI World London 2018

Slides from the talk can be viewed here: https://www.slideshare.net/0xdata/inv...

Bio: Mark Landry is a competition data scientist and product manager at H2O. He enjoys testing ideas in Kaggle competitions, where he is ranked in the top 100 in the world (top 0.03%) and well-trained in getting quick solutions to iterate over. Most at home in SQL, he found H2O through hacking in R. Interests are multi-model architectures and helping the world make fewer models that perform worse than the mean.

Talking Points:

Speakers:

Mark Landry, H2O.ai Fellow, Director Data Science & Product, H2O.ai

Branden Murray, Principal Data Scientist, H2O.ai

Read the Full Transcript

Invoice 2 VEC

Mark Landry:

We are going to talk about one of the products that we've been working on just for a couple of months. It's titled Invoice 2 VEC. There's a few things, but we're going to talk about what that means. Our path, as you know, is H2O helping a client create AI to read documents, to extract document information. So we'll be talking about that.

There are four of us on this team. So Branden and I have been working with a particular client, PWC, who spoke earlier today. I've been working with them for about three years, Branden, for two. And for this project we've stepped up a little bit. So we've doubled our team. We have a couple other data scientists helping us out for this one.

And then for all the products we build, of course, you know, it's really not just the data science team. We have software engineers helping us out build the front ends that you saw actually on the screens. Our stuff was behind them. And the customer team too. So there's a lot of people. In fact, one of them is practically in our team as well. So it's a fairly big group for this one, I suppose, especially in H2O terms for making this product. We've been working on it for about two, almost three months now. So we'll show you where we're at. We are not done, but we'll show you where we are heading, what the ideas are, what we struggled with. So quickly we'll look at the problem, and then we'll look at it a little deeper later.

We are extracting specific information from business documents. The information we're pulling out of them changes in the different types of documents, but that's the general idea. So we have an example of one here. You can see an invoice on the left. And in this one we've circled a few of the things we're looking at. Again, even for a specific document, we can look at it multiple different ways. We might talk about that a little bit, but on the right is where we're really headed. We're just standard old CSV. We're looking at the formatted information that we need to pull out of it. It's a somewhat standard task, one you are probably familiar with, other people are doing it. What we'll talk about here is a little deeper look at the problem and the challenges in it, those that we've run into, and some that are just native to this problem from when we started.

Branden Murray:

We'll jump right into the high end ones. So there's a lot of algorithms going on here. We're using many different things to do many of the different tasks. But, deep learning is the most exciting. So, Branden's going to talk about some of the image recognition, the bounding box identification type algorithms that we've been using for this task. And, time permitting, we should be able to do a little bit about what we've learned and how we've prepared the data for this task. It's not a standard one when you're teaching these image recognitions. It's not cat, dog, mouse either, so it's a little different. We will walk through what we've done for that, where we have succeeded, where we failed already in these three months. And then last, we'll try to finish up with how this connects into Driverless.

So the product you've been hearing about all day, we are using it for this product, and we'll talk about that. And then also the real goal here is that the results of our work can be fed back into the product so that we can actually have some recipes out of this. So we'll take a look at where we're headed there. That would be, you know, quite a while away, but that's where we are heading. So the problem challenge again, you know, pulling information out of these documents. When I tell this to a lot of people, they go back to, isn't this a solved problem? Why aren't you using OCR? Why aren't you using XYZ Library? Why aren't you just using YOLO? Why is this a thing you're working on? You know, and it's true there are many off-the-shelf solutions that do a really good job at doing some of this task. For example, on the top, we have a document on the left, a PDF that we would typically see. It's not an invoice. And on the right, a free tool on the internet when we first Google search will give you something that looks really clean, really nice. Not just OCR where you're getting a text back, it's preserved all the structure. It's put a lot of high-end features in there. It looks quite a lot like that document on the left, and that's for free. So, you know the state of the art for pulling some of this information is pretty good. There are a lot of off-the-shelf tools, but if you take a closer look and on the bottom we zoom in to, what for us is, is not just a mistake, but it's a critical mistake.

So, this has used an English language OCR, and so in the balance column, a lot of our data is tabular. That's a little different than typical OCR, typical NLP. So, we are looking at orientation that's important to our problem as well. Here in the column of balance we see it's supposed to be euros, but EUR isn't a word. And neither is USD to be honest, but it decided that the probability that it meant "ETA '' was enough that it just switched it, so it used ETA. Even though it probably read the EUR very well. And that's part of what you get with some of the OCR tools. They have deep learning underneath them, they are language specific. You can pick which one you want.

It's not that we chose the wrong language, this is in English, but the tool has some pros and cons, and for us, that would be a critical con. So we can't just OCR it, although the OCR is really good on everything else in this document. But, it's not just one tool either. Even if we could run this a couple different ways and resolve this problem, and we will actually, there are other things too. And there is a lot of judgment that goes on. I will skip to the third bullet point. In some cases, we are interested in the way that this document would be accounted for. Like Gary spoke about how in the 1400's we had double entry accounting, so we want to see how this document would be accounted.

Dates are really interesting to us, but not every date. Not every date on the page. We might have seven, we might have a hundred dates and only one of the ones is the correct date. So OCR will give us all those dates, it'll give us the text, we can read it, but that's not the entire challenge. We have to then go extract, not just what's there in nice format, or read the words. We do actually have to figure out which is the right one. That's our task. And that's why this is more in the machine learning realm at that point. So now you're talking about a classification model trained on data, and that's exactly what we're doing here. There's other people doing that too, even specifically, but we have yet to see something that would do what we want to do right out of the box. Where we would use it actually.

So, technologies we anticipate being in our stack that we are experimenting with now, certainly in many different ways and in series and loops: OCR, natural language processing, image recognition, and of several different types too. With that, I think I will jump this over to Branden.

Our Experience With Deep Learning

All right, so I'm going to talk a little bit about how we've been applying our experience with deep learning models and doing computer vision on the documents. The reasons, as Mark kind of alluded to do that, is because although a lot of these documents have embedded texts in them, a lot of the texts in them are not that good. So, sometimes it would just be a bunch of gibberish and sometimes it would be slight misspellings, but we need things to be exact so we can match the exact words in the document to the accounting documents that we need to match it to. And then another reason is that the embedded text is usually just one giant long string of text, so you lose all the structure. Like Mark said, you might have a bunch of different invoice dates in a document. The invoice date is usually going to be somewhere at the top of the document, maybe on the right side, or right in the middle, or something like that.

So what we do is we're starting to use object detection models to identify bounding boxes of where the targets are in the document.

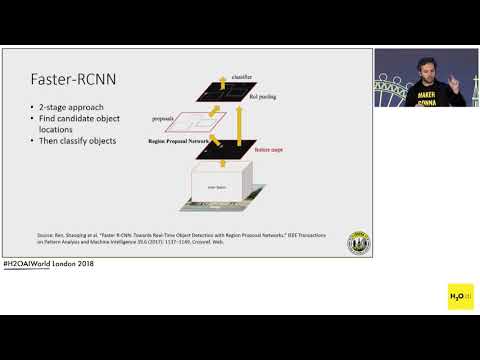

So far, we have experimented with three different frameworks. We've done Faster-RCNN, and RetinaNet, which have both shown some promise so far. We've also tried YOLOv3, which hasn't been that great yet, but we'll keep trying. I'll talk briefly about Faster-RCNN and RetinaNet, since those two have worked the best so far. And they have slightly different takes on how to find objects. So Faster-RCNN is a two-stage model, which basically takes an image, we apply a convolutional network to it which outputs the feature map. And from that feature map, we apply a Region Proposal Network, which basically finds in an image all of the possible boxes that the foreground might be in. In our case, the foreground is going to be text. It will generate probably a thousand or two-thousand different possibilities of where a bounding box might be around specific text.

Once you have all these thousands of possibilities, a second stage applies a classification network to it. That classification network will identify something and say, this is an invoice date with a 90% chance, or this is a stock code with a 4% chance, or something like that. And then once we have all of those, we can pretty much say everything above 80%, we're going to keep. And anything lower than that, we're going to throw it away, because it's not useful, it's probably wrong. Then the second one that has shown promise is RetinaNet. This doesn't have two stages like Faster-RCNN does. This is a one stage detector, but the thing that makes this one unique is that they introduce the new loss function that hasn't really been used before and that helps the one-stage detectors.

One benefit of the one-stage detectors is that they are a lot faster than two stages. So what this last function actually does, is punish all the easy examples a lot more than it does the harder examples. So, for something that's hard we're going to force the model to focus on all the hard examples. If it's struggling with finding an invoice state, that's going to force the model to start focusing on finding that a lot more. This is an example of RetinaNet results that we have so far. I think we started using RetinaNet about three weeks ago, so this is in its very early stages. As you can see in the upper left there, we found the invoice number. All the green boxes are the actuals and all the other colors are guesses for different things.

We can see in the upper left that it got the invoice number pretty much exactly correct, but right down there, right next to it totally missed the invoice date and it totally missed the company name. It didn't guess anything there. And then we can see down in the bottom there are a few false positives and one where it just has a box of emptiness, which is kind of surprising, but that should be pretty easy to fix. I should also say that these models were trained on using 14 or 15 different invoice formats, which in terms of invoices is like nothing. There's thousands and thousands of different formats. So considering we use so few so far, I think this shows pretty good promise. And I think Mark's going to talk about the training set.

Teaching the Model to Understand the Why

Mark Landry:

What he mentioned is one of the things we've learned. For us, looking at this from the outset training data is going to be critical, because for these models, we don't readily have answers. You can think the natural process of figuring this out would say, let's get a hold of some documents and the way they were accounted for. That's the natural way to grab data without trying to go do it specifically for your process. But, there are a couple problems with that. One is, you know, the security of the clients. Say, that's actually kind of hard to find that pool. We're not the only ones in that boat. If you look, there are some other people solving this problem and they've reported similar examples like, confidentiality, security–it's important, you can't just go rating documents all the time.

But, the real problem is that it doesn't actually help us for deep learning. What that would do, let's say we had that, so we have an invoice, we have an invoice date here; again, like I mentioned, there might be a hundred invoice dates and 50 of them might all be the right one. For us, we want to learn the why. That's what deep learning is. We really want to teach the why behind which one is the right one. Not what the answer is, like the actual date. You know, January 3rd doesn't matter at all. We want it to learn the flexibility of the thing that follows invoice date in many different languages, many different representations. Sometimes it doesn't say that at all. That's the thing that we want it to learn. And so, we've gone after these bounding box models. You can look in the bottom right, this is a spreadsheet view of it, but this is the data we've had to collect; which is bounding boxes. And for those that are familiar with this world, you know, annotations, it goes by a lot of different names for obtaining these labels.

This is how you do cat dog mouse problem. The world isn't labeled often with images. We're not the only ones trying to do this. There are services out there that will do that for you. But, then again, security comes into place and some other kinds of questions.

What the original plan was, was to create templates. And so we would, we would take care to get a few documents really correct. And you can still get through these almost as quickly as you can using an online sort of thing where you go with high speed. But, if we capture it in a certain way, we could actually get real examples of that recorded data. Get that structured data and load a lot of different examples. It's not learning that the item of interest is a chair, you know, anything can pop in that chair blank or the dates can be rotated, formats changed, you know, move 'em around a little bit.

But, that's been the struggle, we can't easily move around a formatted PDF, or at least we haven't yet. We're experimenting with that. So far, we have not done a great job. Where that left us was putting a lot of energy into getting a few templates that we could generate a thousand of, but a thousand different versions of one document, even if we switch up the characters and all that stuff. We are overfit massively to the structure of these. It learns that structure, and if you put a new structure in, it stopped too early–It can't generalize to solve the new template. And that's where we are at now. The more templates we add, the better off it is. The batch size is down to a hundred, but really it could be as, as low as one.

That's been one of the big lessons for me. I still like the power of having these templates where I can doctor them and work with them in a way that's not typical with images. The PDFs are structured a lot more than regular images. I want to use that control and try to essentially perturb these just enough so that the deep learning doesn't overfit, but just enough. We haven't gotten there yet. So, a simpler method is what I alluded to earlier. Online annotation is a simpler way. There are people that have this as a solved problem and their tools are just a little faster than ours, but when you're doing a lot of these, a little faster is helpful. And so we've thrown a thousand of these invoices into a tool where we're just drawing boxes over things, which is an interesting process too. As I have said a few times, we want different label types. We have different models doing different things. And so, the one here, I've got giant boxes of tables highlighted in the blue. Because, one of the styles of models is that we don't want to worry about every single line item. We want to have a model that is really doing a simple first pass.

And so things you can do at a header level, if you look at header in detail, whether we had one line, a hundred lines, one page, 10 pages, show us where the table of the data is. That's just one model we want to use in our arsenal. And that's a different labeling state. Now, if we wanted to use this same thing to get something else, we're going to have to click again and draw these boxes.

It's been interesting, we knew it would be labor intensive going into it, because we had to go from zero to something. Now, are there Mechanical Turk and other sorts of ways of doing this? Yes, there are, and our process is not automated well enough. I think we would make good use of that, but we would look further because for deep learning you need more and more data and variety. We're missing variety and we need to get there. But, that's where we are. Again, we're three months in, but we are paying a lot of attention to this. Our fourth member, Shanshan Wang, is a data scientist aimed at this task.

She's really our training data owner. She's experimenting with lots of ways of adjusting the PDFs to get us where we can get these deep learning models to work really well when we need them most, which is when we have crummy scans and all of our other methods aren't returning very good information.

How This Plugs Into Driverless AI

The last bit is how this plugs into Driverless AI. The part we're currently using Driverless AI for, I think is a good story, because it shows the power of Driverless AI. Early on when we were creating some of these templated data sets, we have lots of the data that would fool an NLP algorithm, not so much that would fool the image recognition.

For the ones that need structure, we have a hard time manipulating structure, but the content we can manipulate a lot. So, those are more successful and those are the ones we're paying attention to in a different kind of track, if you will. Branden and Shanshan tend to be doing the deep learning models. I've been doing some of these other ones.

First we started with almost first principles, like just just pick the first date you see, just as a benchmark. How well does that do? Not very well, but at least we have something. The next one was to use Driverless. And realizing that a really simple way of what I'm looking for is that when we do have that embedded text, or use OCR to obtain it–which is the majority of the documents, the large majority in fact of the documents you can obtain reasonably good text with that.

That's on the right, if we look at every single line, just in simple form, we can use our existing labels to figure out whether these targets were in there. Once we have the bounding boxes, we have another way of going after them in simple terms. And so, the rightmost column is actually going to say the target class, and this is just a standard machine learning problem at that point. So with text, you know, with NLP. We have that in Driverless, so once I created this data set, fed it in the Driverless, and again, we're still struggling with the variance, even for the NLP, but we've gotten a lot better since I've tried this model. But, it was at a hundred percent, 99.9 AUC, almost immediately within five minutes of running a Driverless model.

The work was to get the data to go into it. The model itself was really simplistic because it's actually pretty easy to figure out all the terms we fooled it with. We have a list of terms that we put in there to jostle it a little bit, and it figured those out pretty quickly. Enough that it's picking up some of the structure. It hasn't figured out too much vertical position on this one, but it does a really good job of figuring out what we were looking for. So, the way of using Driverless was actually pretty easy. It's more of thinking of how you get the data into Driverless, and I think that that's a data munging problem. So here we're so familiar with our training process, I think we will see this time and time again, just in the way that we're going to have multiple different image models going after different variations of the targets.

I anticipate we'll have multiple different Driverless models out there, all doing different things. So right now, the Driverless model will only take you halfway, just like an image model will. If it shows you the bounding box, that's great. We still need a formatted answer and structured data. That's not necessarily the hardest part. But, we need it from here too, because I've labeled the entire string, you know, it's a pretty stupid parser...simplistic, I should say. Every new line is it, and we just encoded as a target whether the presence of that string was there or not. So, we then have to extract the data out of this one too. But that's fairly simple actually with what we're looking for with two of these three targets–trivial, in fact. So this was nice to see.

I didn't have to think much. That's the idea of Driverless. I spent all my time thinking of everything else about this problem. The model itself, I didn't actually code at all. I didn't have to do any of the typical NLP transforms, you know you know, those are done. Some of 'em are done. We're making that better. But you know, we have all the way our target encoding and our tech CNN was used for this one to do the job. So that was pretty good.

The Real Goal of This Project

The real goal of this project, and this is exciting you know, a lot of the work we've done previously we haven't been able to include back into the tool other than reports. Before, I was working directly with a customer, and as you know, you find something new every time you deal with a customer data set. That was true before I worked at H2O. With Kaggle I was amazed at the diminishing returns. The slope is a little different than normal. It feels like you can keep doing competition after competition and just every data set is going to bring out something different. Here, we have the opportunity to push this back into Driverless AI and that's connecting to you at some point. Our goal is that you can pull down the results of our work. Sometimes that might be too narrow minded for a lot of people. And so, you know, a specific version would be this invoice to VEC, something like that. Like we'll load a document, maybe it's not an invoice, maybe it's something else. We have the terms we've looked at very much like a deep learning, pre-trained model in that world and a vector of what we think the probability is of the known classes that we've gone after in the past are trained on ours. That's one possibility.

More generally, either in addition or if that proves too hard, but I don't think it will. Our goal is to get that in there in some way, shape, or form, because we're going to use it ourselves. That is the best way of building these products. We used to build H2O3 that way. When a customer didn't have what they need, let's work on that. And that's the way we're trying to do this one. But, in general, there are a couple other ways we can do that as well. Just taking the tips and tricks, that's how Driverless was built.

It's taking grand masters who have done hundreds and hundreds of data sets. The learnings of that is how Driverless came up from specifically Dimitri, but as it's been added onto. That's sort of what we could do too.

As we are experimenting with the image domain that we're not paying too much attention to otherwise at H2O, we are able to advise on how that comes into the tool. Or maybe we just extend the NLP models because we have that kind of need as well. We're already using the NLP models, I can already see we need variance of that. So we'll probably see maybe all three of these classes improve. So, and that's it. We're out of time. Thanks for listening and hopefully you'll see the results of our work soon.