Venkatesh Ramanathan, Paypal - Fraud Detection Using H2O’s Deep Learning

Venkatesh talks about how Paypal uses H2O for Fraud Detection.

Talking Points:

PayPal’s Application of Deep Learning to Assist in Fraud Prevention

Is Arno Candel's Fix Specific to the Implementation or the Whole Product?

What is the Ratio Between the Number of Neurons and the Number of Features Supported?

Down Sampling Strategies to Improve Deep Learning Performance

Speakers:

Venkatesh Ramanathan, Data Scientist, PayPal

Read the Full Transcript

Venkatesh Ramanathan:

Thank you. I could have built a hundred models using H2O in this short time delay. Sorry for that delay.

PayPal’s Application of Deep Learning to Assist in Fraud Prevention

I'm going to be talking about Fraud Prevention using deep learning. This is the outline for my presentation with a little bit about me. I'm a data scientist at PayPal, primarily working on Management and Fraud Detection. Prior to that, I have years or maybe decades of experience developing server size software. I also have a PhD in computer science where I specialized in applying Machine Learning and natural language processing. So, the outline about PayPal. You've heard some presentations from previous presenters. I will talk little about what we do at PayPal and specifically our Risk Management group which deals with Fraud Prevention. Also, I'll talk about why I chose deep learning and H2O for solving this problem. I'll go over my experimental setup and some of the lessons I learned using H2O and deep learning. I'll share some results. You'll get some quantitative numbers on real data sets from this presentation. Also it will include some of my thoughts on using H2O and deep learning.

Okay, I think most of you should know about PayPal, but if you're not up to date with the recent news on what PayPal is doing, we have over 150 million members and active digital wallets and we are operating in over 200 countries. We have a deep relationship with every customer segment, and our cool competencies are at risk. And the reason we are able to do all this growth is due to our incredible competency in the Risk Management side of PayPal. We are an innovative leader. These are some of the recent innovations. So the one on the left, the payment QR code, is actually for when we started off as an online payment company. We are also going into the offline world. You'll be able to use it in a store to buy any other products in the offline world. The next one is the variable tech. That's also another recent innovation.

PayPal's State of the Art Feature Engineering

We also do a lot of innovation in the Fraud Prevention area. Some of the key highlights of what we do at PayPal is in the Fraud Prevention area. We have state of the art feature engineering. You can think that we have millions of transactions, dealing with billions of dollars and being able to detect fraud reliably and prevent it. We need this state of the art feature engineering. We have state of the art machine learning tools, both homegrown and commercial. And we also have a lot of statistical models. However, as you can imagine, the volume of scale we deal with in our server, the software and the infrastructure is highly scalable. We also have a superior team of data scientists, researchers, financial and even intelligent analysis and security experts.

The Three Multilayered Ways to Prevent Fraud

There's a little bit more of Fraud Prevention at PayPal. Primarily, this is actually a kind of a Fraud 101. We have three multilayered ways to deal with fraud. The first one is the transaction level that anytime a transaction happens, the online payment happens. We apply state of the art machine learning algorithms and statistical models to detect whether it's a flag that behavior is fraudulent, so that we can prevent fraud before it actually happens. We also employ more sophisticated models. Once the transaction goes through the different stages. We employ further, again, machine learning based models, which in the SLA, we have more time to evaluate the fraudulent nature of the behavior and act on it. So that way we can, if there are fraudulent activities, reverse the transactions because of the multilayered approach at the account level.

We monitor account level activity through the entire life stage of the account. We look for abusive patterns. Some of the simple examples like you have been physically located in the US, but suddenly there's a payment happening in Australia. So we'd be able to detect IP based or region based anomalies. Those are some simple anomalies. The network level, for example, what the frauds do is that they collect money from several accounts and accumulate it into a centralized account, which later on they move into further accounts so that they can basically eliminate the trace of that moment. So we have different sophisticated algorithms to detect these kinds of accounts.

So processes are increasingly complex. Some of the examples I show are quite simple. Obviously in reality, with the volume of the data which we are dealing with and the volume of transactions, it's not that simple. So we need something to be able to model those cost relations among the data. And we also need a cost effective solution, which is scalable. We have a mission that, if it's not scalable, it's not usable. So that's why I looked into deep learning. And why choose deep learning is because you can see that most of the fraud behavior, the attackers are changing the activity patterns almost in real time. So, for example, they can contact a Denial of Service attack, and while the attack is going on, they can stick into our system.

Utilization of Deep Learning in Fraud Prevention

So we need to be able to detect these behaviors in real time. And to model that, we need to have features and machine learning models to capture this relationship, which a human cannot even observe. So deep learning actually has been widely employed in image video processing and object recognition tasks. As to my knowledge, it's not played in the area of Fraud Prevention. That's why I decided to give it a try. And why H2O? I said that our solution, whatever we have for the volume of data we have, needs to be scalable. In H2O, one thing is that it's a highly scalable infrastructure. I'll go a little bit more in depth about some of the experiences I went through. It's superior performance based on all the benchmarks results, which they have published.

Flexible deployment, which actually really appeals to me. I'll go a little bit more detailed on that. It works seamlessly with the big data frameworks. So we have lots of big data technologies in PayPal, Spark, and all the stuff. So we want to be able to seamlessly work with those environments. And it also has a simple interface for data scientists and everybody to experiment on using this framework. That's why I picked H2O and deep learning. So, on the data set, this is actually a subset of the data to deal with fraud, which you can see that itself is one 60 million records. This is a subset, which is one of 60 million, but that's a scale we are talking about. It's about 1500 features. 150 of them are categorical.

Using H2O and R in Deep Learning

In the compressed data, it's about 500 gigabytes, compressed from the ACFS. The infrastructure which I used for this particular thing is 800 cluster, which is running the water version of Hadoop, which is CDH3. Our decision to make is whether it's fraud or not fraud. So we have all this data, we have all these features. So I want to see how I can do an experiment using H2O. So my first setup is that we have the cluster already there, and H2O has a fantastic R interface. In fact, prior to that, I haven't written a single line of R. I've done a lot of Java code, but not a single line of R code. But, you can see that by seeing the simple interface, which they have provided using R, I switched to R for this particular work.

So, I tried this as a client. My very first client asked anybody who's using a new software, and it's a failure. It's unable to successfully operate on the data set and the size of the data. The biggest failure was that H2O was unable to form a cloud. So H2O infrastructure, if you look at it, the architecture we see is that we have a hundred cluster HTFS and H2O actually can start maps as part of the cloud. And basically, let's say you want to process hundreds of gigs of data, you need to have enough maps to load that hundreds of gigs of data into a map process. So you start the cloud as a map process, then you go and apply your machine learning and import the data and do machine learning models on it.

But this actually failed partly because of H2O's need for memory to have upfront. They do work, if you have a disc and this uses a fast pace, but the performance really tanks when you start using it. But so the same goal of H2O is actually in the memory data system. So obviously if you don't have any memory upfront it's not usable. I was not able to use this, but, as some of the presenters actually earlier pointed out, we have different clusters where the on-based, which supports multi-tenant, where we will be able to operate each tool in that environment. But this particular data set that we had is running a cloud version of Hadoop. So it's partly due to that.

Limitations of Shared Resources in Deep Learning

And also we have limitations on, as a single user, how much map you can ask because it is a shared control of resources on how much a user has. So, unfortunately I was not able to go much with this setup. And my next setup was, actually this is some of the earlier experiments. I also tried this particular approach. You have the hardware HDFS. So can I start a cloud outside this hardware environment? So basically, I had a final H2O cluster, which is running on individual servers, but all the data is still there in HDFS in a compressed form. So, again, R as a client, I tried the setup, actually, this one also failed because this is partly because I didn't pay attention to the document.

So they don't currently support Snappy Compression which is actually, we've been working with it for years, and have given some suggestions to use it to extend it. Our data was all Snappy Compressed. We have a different process which actually creates this data and H2O currently doesn't support Snappy. So what I did was that I actually recompressed the data. The reason is that we have lots of data. The most optimally used data, we had to keep it in compressed form in the other cluster so that it's usable for everybody else. So I used data, and it's using the same setup again, it's failed. But the first issue which I had was that the import was extremely slow. So for example for one gig of data, it was taking more than an hour to import, and suck the data into a cloud.

Problems with Parallelized Data and How to Solve Them

So one of the things is that in H2O, the rest of the process was highly parallelized. The import process is on a single server, it's not parallelized. So it was using a single thread in the data. It is actually where my team's response really comes into play. I push on the forum. It immediately responded and he looked at it and investigated it. Some of the issues, which I had was that when I was doing this, all the stuff with PayPal, actually, there was a lot of sensitive information. We can't let the user log into our system to inspect it, or we can't exchange any of this information data. So actually Cliff and Arno, I'll go later on, but they have to be intelligent about what is actually going on without actually me revealing 1% of their information.

They have to detect what exactly is going on to be able to fix the problem, right? So in this case, Cliff figured out that this is the reason why it's working. He quickly turned around at a fix, which parallelized the import process. So the input actually fixed, from one gig to the same data I was able to ingest in 10 minutes. There are still optimizations to work around what type of disc we are using. So, Cliff was getting much better performance on their cluster. So I'm working with them to investigate it. But this is a significant increase in the import process. So, this also failed. I imported the data. I tried to run a deep learning course, but again it failed.

Fixing Issues with Missing Value Data in Deep Learning

What is happening is this is where the data comes into play, right? When you're running deep learning, the first generation of the first one, so a few months back, three or four months back, when I started it, we had lots of missing values. Which means that 99% of the data had some missing values. And H2O's deep learning, initially what it was doing was skipping most of the rows. So eventually we didn't have enough data to strain at all. So this is, again I really have to credit Arno in identifying the problem. Again, you can imagine that I'm giving you snippets of information.

How Arno Candel Solved Missing Value Data Problems

This was the issue I'm having, and I can't give you the data, but go figure it out, right? Arno was able to find out because I put some statistics and gave it to him. This is happening because in 99% of the values, you had missing values and our first release actually skips all the values, even if a single value is missing, a single column is missing, skipping the entire role. So he turned it into an improvement. Input values if it is missing. So he added that fix quickly turned around a fix for that. So get me to my next stage. So I have Cliff's fix and Arno's fix for reading with the missing values. Okay, so, was I able to get it to work? No. Again, deep learning was so slow.

What was happening here? There are several tuneables within deep learning. And one of the key ones was that, they have a number that is a neuron-based titration that is a map based citation. So there is a key interval called the number of training samples per iterations. Which is actually how many samples a single map can process. So there are several changes which Arno has since made after I tried to intelligently detect what the number should be, right? So previously those fixes weren't there. You have to come up with a number, but this is a number where, If you choose a number too small, then you'll have an incredibly long time to run your deep learning. But if you choose too high, you'll end up having an unstable model. So there is some intelligence as well, but Arno has added some changes since I tried it out.

Is Arno Candel's Fix Specific to the Implementation or the Whole Product?

Audience Member:

Very quick question. Cliff's fix and Arno's fix. Are these specific to your implementation or a part of the product?

Venkatesh Ramanathan:

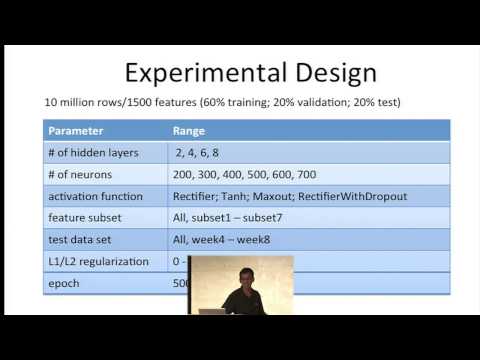

It is part of the product. Arno didn't know me, I just posted on the forum. He gets this thing, immediately he responds to it. He does all this as part of the open source fixes. So we are communicating anonymously. So you can see that it keeps on going. So you know what, I guess you all have to be sitting here until dinner time to find out whether I was finally able to apply the deep learning, right? Thankfully no. So finally I was able to get a setup which got to work. So, this is actually several of Arno's fixes and Cliff's fixes. Everything is there, all going to open source. So just to get the presentation going. So I also, instead of using all the 100 million records, I used 10 million, which is still quite a big number with the 1500 columns compared to, another standard publicly available data set.

Setting the Right Parameters in a Deep Learning Model

So my split is 60, 20, 20 split. This is my experimental phase. This is where I started this thing. So what I wanted to find out was that these are the parameters which I add. Deep learning has several parameters, like the number of hidden layers, number of neurons, and several activation functions. They have this adapting learning rate to organize it. So many parameters. So I want to drill down deep into that, which subsets actually work the best on our data set. So you can see that the area for, two, four, six, and eight layers, the after six hidden layers, the performance is steady. So, the depth of the neurons for the data set is that six was the right number.

After trying hundreds and hundreds of models. Finally, this is the most stable I was able to find. In terms of varying the number of neurons in the hidden layer. You can see that this is how this actually is, even though it's incredibly flexible and simple to use with H2O because they provide the grid search. You can vary these parameters quite easily. Even let the tool tell you how fast it is, and what the best number should be. In terms of activation functions, I tried out all of them. That rectifier with the dropout aided me the best performance. Let's see a subset of features.

What is the Ratio Between Number of Neurons and Number of Features Supported?

Audience Member:

How many features are in this Subset?

Venkatesh Ramanathan:

1500 features? So all of those users are using all the entry set of features. So now, what I wanted to do was , first of all, can I do it with less?

Audience Member:

Basically, my real question is what is the heuristics of the number of neurons compared to the number of features? How many trade records do you have as a ratio of weights, and then neurons, right? So, if you have hundreds of neurons, how many features are they supporting? Would you need hundreds of neurons if you have just a couple features or how many neurons would you need?

Venkatesh Ramanathan:

Oh, so that number, I don't have that handy actually. I can look up the information,

Audience Member:

But the features are like in the hundreds, right?

Venkatesh Ramanathan:

Features? No, no. The total number of raw features is 1500 features.

Audience Member:

So, so you have a feature subset of what? How many features?

Venkatesh Ramanathan:

So all of these results actually, if you look at it, we have 1500 features total. Okay? So then what I wanted to do was, can I use less than those 1500 features and I tried several subsets. So the reason I did that was that I wanted to see it, because we spent a lot of money on feature re-engineering, which is really huge. I wanted to see whether we can not invest in some other features? One of the powers of deep learning is that it's able to inherit or derive all those non-linear features, right? So I wanted to try it. So, in fact, one of the things which I said was subset seven, which actually didn't have that many nonlinear features, even had the worst performance, right. 0.751%, you see?

Why PayPal’s AUC was Improved by Deep Learning

So my next step was, can I improve this? The subset seven, which didn't have that many round data features. It still had the same thing. Can I improve with deep learning? Which is actually, in this case I wanted to try, that using subset seven, can I improve my AUC, just using deep learning, right? So I tried with six layers and 600 neurons in this and I was able to increase AUC by 11%. So this shows that deep learning does work on this size of data.

Audience Member 3:

For the original transactions. Did you have a label of what's fraud and what's not fraud?

Venkatesh Ramanathan:

Yes, we do have it. Yes.

Audience Member 3:

How did you get that actually?

Venkatesh Ramanathan:

Oh, there are several intelligences around it. So I can't go into the details, but yes.

Training an H2O Deep Learning Network

Audience Member 2:

So did you train the deep learning one layer at a time? I thought that H2O couldn't do that yet.

Audience Member 4:

At this point it's all or nothing. So it's the whole network at once. It's possible to train an eight layer network as we just learned from scratch. That's with the adaptive learning rate. It's per neuron, per time step. It's all adaptive, so it figures it out itself.

How H2O Adapts to Deep Learning Models

Venkatesh Ramanathan:

That is one thing I want to emphasize with the adaptive learning rate that Arno has, is that that is one parameter. I didn't have to touch it. That is the beauty of H2O. It is adaptive. I also did one more test to see the temporal robustness of deep learning, whether it is that you train in one week. How often you have to update the model. So you tried down different types. You can see that it's temporarily robust as within 1% performance difference. I was able to see that the AUC stayed pretty steady. In conclusion, even for all of this actually, some of the lessons in the intercept time actually. So I want to especially thank Arno. He has done a lot of fixes without knowing me. This is the first time we are meeting in person. So I want to thank him and also Cliff and the rest of the H2O team for a fantastic step. Thank you.

Audience Member 4:

Thank you. We have time for a question or two. Here we go.

Down Sampling Strategies to Improve Deep Learning Performance

Audience Member 5:

As part of your final solution from down sampling from 160 million records down to only 10 million records, did you use any type of down sampling strategy to rebalance the zeros and ones? Was that also part of your reason?

Venkatesh Ramanathan:

Actually, I didn't want to downsample it, mainly because of my current server configuration. We don't have enough hardware to run through deep learning. So that's the reason, but we didn't want to. I am continuing to work on the entire thing, but I did a downsampling sampling.

Audience Member 4:

Just to clarify. Deep learning doesn't require more memory. If you have more data, it's just to store the data, that's what needs memory. So the model itself doesn't get bigger. It just takes longer to process all the points. But I would be surprised that it wouldn't work. So basically, as long as it fits in the memory of all the machines, it's fine. There's one default option that says, replicate training data. And per default it does that. It means every node gets all the data and in your case you have so much data that wouldn't fit on every single node, then it can turn that replicate training data flag off and every node only gets a piece of the data. And at that point, I think the model will still be better averaging pieces of the data there. Each has a model, and then averaging those might be better than sub sampling initially. So you could try that flag, turn off replicate training data. And then run it on the whole data set. Sure. Because if you have time for 500 epochs, then you also have time for 50 epochs on 10 times as much data, right? That might be just as good or even better.

Venkatesh Ramanathan:

This is actually what I want to say that when you ask a question on something in the H2O forum, Arno doesn't tell you to try this. He just tells you the reason why something is. So thank you for that. I think that is really informative.

Producing Node Data in a Deep Learning Model

Audience Member 6:

So when you try this, actually, it's a deep learning question. So when you try these different amounts of training data on the different nodes, it then chooses a sample of the training data to throw into that's different, so that you don't reproduce the entire training set on every node. So is it random then in each epoch?

Audience Member 4:

It happens during the import actually. So when you import a big data set onto 10 node, let's say, then each node gets one 10th of the data. And the default mode is that now deep learning regtransfers everything to everybody. So it's a lot of network overhead actually per default. And that's only done because normally your data is small and doesn't cost too much to send all the pieces to everybody. But if you turn this flag off, it will actually train faster because it doesn't have to send everything to everybody. Everybody just has what it already has, right? Because the import distributed it. So if you turn this flag off, basically the training starts immediately. Everybody just processes. Its local data, has a new model, everybody has a different model based on that local data, and it finds a local minimum on that data basically. And then you average the models and then you get basically generalization and you get a model that has seen everything in a way. So it's not theoretically clear how good that is, obviously, but it's a heuristic that seems to work fine in real data. So we can definitely go from here and try that, but it should be even faster because it doesn't need to redistribute the data.

Averaging Model Data in a Deep Learning Network

Audience Member 3:

How frequently do you average all the models? Is it with every epoch, every back prop?

Audience Member 4:

You can specify the number of points to process before you average the models and then send it back out. So that's exactly the length of a map review step. And that number can be one row per node, or it can be a hundred million rows per node. You can keep processing your local data without communicating and averaging. So the number is totally flexible. You can literally specify it to the one last row that you want. And if it's a fraction, if it's not a multiple of the data set, it actually just processes a random subsample. So it's almost like random forests where you sample some points. So let's say you say, I want 20% of my data set to be processed per map reduction step, then that's what happens. You sample 20%. The next time you sample a different 20%. So it's almost like a bagging concept.

Classifying Events in a Neural Network

Audience Member 7:

So you describe training parts of your neural network. So my question is that for the classification part, when you get the actual events, do you still use H2O in this phase?

Venkatesh Ramanathan:

Use standardization?

Audience Member 7:

Classification, like for example, you trained your network, you came up with your final train model. This is the time for the actual classification or getting events. You classify them.

Venkatesh Ramanathan:

All my research is on the test set after classifying.

Audience Member 7:

What do you use for the actual real time classification? It's still H2O?

Venkatesh Ramanathan:

We are still working through the stages of what it takes to do a production deployment. This is offline.

Audience Member 7:

Okay. So we haven't yet had the online part.

Venkatesh Ramanathan:

That's correct. Yeah. This is on the offline, but it is a data set which is a realistic data set. What will happen if you do end up deploying in production?

Audience Member 7:

I see. Okay.

Venkatesh Ramanathan:

So we have different sets of data. One for building models and one for testing it.

Audience Member 7:

It. Okay, thank you.

Venkatesh Ramanathan:

Most of the section ones are offline.

Audience Member 4:

All right. Thank you.