There’s a new major release of H2O, and it’s packed with new features and fixes! Among the highlights of this release, we’ve introduced support for Generalized Additive Models, added an option to build many models in parallel on segments of your dataset, improved support for deploying on Kubernetes, upgraded XGBoost with newly added features, improved import from secured Hive clusters, and vastly improved our AutoML framework. The release is named after Miloš Zahradník.

Generalized Additive Models

We are super excited to bring you a brand new toolbox in open source H2O: GAM (Generalized Additive Model). GAM is just like GLM except that you can replace predictor columns with smooth functions of those columns and then apply GLM to the selected dataset predictor columns plus the smooth functions!

Our implementation closely follows R’s mgcv package, as described in “Generalized Additive Models: An Introduction with R” by Simon Wood. In addition, we gave our GAM implementation all the powers of H2O GLM by calling GLM directly after adding extra penalties to the objective function to control smoothness. Hence, you can apply lambda search, enable regularization, and take advantage of ADMM and the various GLM solvers with their line searches. GAM supports all the families supported by GLM.
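To give a feel for how this looks from the Python client, here is a minimal sketch that trains a binomial GAM with lambda search. The frame and column names passed in are hypothetical, and the parameter names (gam_columns, num_knots, scale, lambda_search) reflect the H2O Python API as we understand it, so please check them against the GAM documentation for your version.

```python
def gam_params(smooth_columns, knots_per_column=5):
    """Build keyword arguments for a binomial GAM (illustrative values only)."""
    smooth_columns = list(smooth_columns)
    return {
        "family": "binomial",
        # Columns to be replaced by smooth functions.
        "gam_columns": smooth_columns,
        # One knot count and one smoothness penalty scale per smooth column.
        "num_knots": [knots_per_column] * len(smooth_columns),
        "scale": [1.0] * len(smooth_columns),
        # GLM features remain available because GAM delegates to GLM.
        "lambda_search": True,
    }


def train_gam(training_frame, response, predictors, smooth_columns):
    """Train a GAM on an existing H2OFrame (requires a running H2O cluster)."""
    # Imported lazily so gam_params() can be used without h2o installed.
    from h2o.estimators import H2OGeneralizedAdditiveEstimator

    model = H2OGeneralizedAdditiveEstimator(**gam_params(smooth_columns))
    model.train(x=predictors, y=response, training_frame=training_frame)
    return model
```

The predictors listed in x are handled as ordinary GLM terms, while each entry in gam_columns gets its own smoother.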

As this is our first GAM offering, we do not have all the bells and whistles that we would like to have. Try out our GAM, send us your comments and help us make it better!

Segment Model Building

H2O is known for its ability to build models on datasets of practically any size, assuming your cluster has enough hardware resources. Some use cases, however, require building many small models on subpopulations (segments) of your entire dataset instead of (or in addition to) building just a single large model. For these applications, we’ve added a new feature: Segment Model Building. Both the Python and R APIs were extended and now provide a new function, train_segments. With this function, you can take any H2O algorithm, specify segment_columns (the columns used to group observations, e.g., a Product Category), and train many models in parallel on all available nodes of your H2O cluster. You can then access each individual model and work with it in the way you are already familiar with.

- Python reference: http://docs.h2o.ai/h2o/latest-stable/h2o-py/docs/modeling.html#h2o.estimators.estimator_base.H2OEstimator.train_segments

- R reference: http://docs.h2o.ai/h2o/latest-stable/h2o-r/docs/reference/h2o.train_segments.html
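The idea behind Segment Model Building can be sketched in plain Python: group the rows by the segment columns, then train one model per group, several groups at a time. This is only a conceptual illustration and not H2O's implementation; the helper names, the toy data, and the "mean model" standing in for a real algorithm are all assumptions made for the example.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def split_by_segment(rows, segment_columns):
    """Group rows (dicts) by the tuple of their segment column values."""
    groups = defaultdict(list)
    for row in rows:
        key = tuple(row[column] for column in segment_columns)
        groups[key].append(row)
    return dict(groups)


def train_mean_model(rows, response):
    """Stand-in for a real algorithm: the model is the segment's mean response."""
    return mean(row[response] for row in rows)


def train_per_segment(rows, segment_columns, response, parallelism=2):
    """Train one model per segment, up to `parallelism` segments at a time."""
    groups = split_by_segment(rows, segment_columns)
    with ThreadPoolExecutor(max_workers=parallelism) as pool:
        futures = {
            key: pool.submit(train_mean_model, group, response)
            for key, group in groups.items()
        }
        return {key: future.result() for key, future in futures.items()}


rows = [
    {"category": "toys", "sales": 10.0},
    {"category": "toys", "sales": 14.0},
    {"category": "books", "sales": 3.0},
]
models = train_per_segment(rows, ["category"], "sales")
```

Each key of the result identifies one segment, so an individual model can be looked up by its segment values.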

Kubernetes Support

Native Kubernetes support has been implemented in this release. H2O is now able to automatically form a cluster while running on Kubernetes with little configuration: only the presence of a headless service is required for H2O nodes to find each other. All the capabilities are built inside H2O’s JAR, and custom Docker images are therefore fully supported.

The headless service address used for node discovery is passed to the Docker image via the H2O_KUBERNETES_SERVICE_DNS environment variable. H2O will then automatically query DNS for that address and obtain the pod IPs of the other H2O nodes. The lookup process can be customized by defining further constraints in the form of the environment variables H2O_NODE_LOOKUP_TIMEOUT and H2O_NODE_EXPECTED_COUNT.
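The lookup described above can be sketched roughly as follows. This is not H2O's actual discovery code, just an illustration of DNS-based discovery against a headless service. The polling loop, the fallback values, and the assumption that the timeout is expressed in seconds are ours.

```python
import os
import socket
import time


def resolve_pod_ips(service_dns, resolver=socket.getaddrinfo):
    """Return the sorted, de-duplicated IPs behind a headless service name."""
    try:
        records = resolver(service_dns, None)
    except socket.gaierror:
        return []  # the name does not resolve (yet)
    # Each record is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({record[4][0] for record in records})


def discover_nodes(env=os.environ, resolver=socket.getaddrinfo, poll_secs=1.0):
    """Poll DNS until the expected node count is seen or the timeout expires."""
    service_dns = env["H2O_KUBERNETES_SERVICE_DNS"]
    # Fallback values below are placeholders, not H2O's documented defaults.
    timeout_secs = float(env.get("H2O_NODE_LOOKUP_TIMEOUT", "120"))
    expected = int(env.get("H2O_NODE_EXPECTED_COUNT", "0"))

    deadline = time.monotonic() + timeout_secs
    ips = resolve_pod_ips(service_dns, resolver)
    # With no expected count, a single lookup is performed and returned as-is.
    while expected and len(ips) < expected and time.monotonic() < deadline:
        time.sleep(poll_secs)
        ips = resolve_pod_ips(service_dns, resolver)
    return ips
```

The resolver is a parameter so the logic can be exercised outside a Kubernetes cluster by passing a fake lookup function.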

For more information, see Pavel’s blog post here.

XGBoost Upgrade and New Features

We have upgraded our codebase to XGBoost release 1.0 and, as a result, have seen significant performance improvements when running on multi-core CPUs.

We have also improved our Java-based implementation of XGBoost predictor to the point that it is now the default implementation used when scoring XGBoost models. Doing fewer round-trips between Java and native code has also brought performance improvements when training XGBoost models.

We have also worked on bringing XGBoost MOJO on par with other MOJOs, adding the option to compute leaf node assignments.

Hive Improvements

We have improved user experience when connecting to a secured Hive service. We have simplified and streamlined the delegation token management code so that there are no delays on cluster startup, and the H2O cluster is ready to interact with Hive immediately after start. We have also improved security by adding an option that allows users to activate delegation token refresh without being forced to distribute their Kerberos keytab with the H2O cluster.

We also made improvements to the Direct Hive import feature by improving our Parquet file parsing support and fixing our compatibility with parameterized SQL data-types.

Other Algorithm Improvements

AUCPR is now calculated according to the paper “Area under Precision-Recall Curves for Weighted and Unweighted Data.” This method is consistent with how XGBoost calculates AUCPR and is preferred over the previously used linear interpolation.

Support for fractional binomial was added to GLM. In the financial service industry, there are many outcomes that are fractional in the range of [0,1], e.g. LGD (Loss Given Default in credit risk). We have implemented the fractional binomial in GLM to accommodate such cases.
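To illustrate what a fractional response means for the likelihood, the sketch below uses the standard fractional-logit quasi-log-likelihood, which is the Bernoulli log-likelihood with the response allowed to take any value in [0,1]. It is a toy illustration under that assumption and does not reproduce H2O's GLM solvers; the LGD values are made up.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fractional_log_likelihood(y, p):
    """Bernoulli-style quasi-log-likelihood with y anywhere in [0, 1]."""
    eps = 1e-12  # keep log() away from 0
    p = min(max(p, eps), 1.0 - eps)
    return y * math.log(p) + (1.0 - y) * math.log(1.0 - p)


def fit_intercept(ys, steps=200, learning_rate=1.0):
    """Gradient ascent on the mean quasi-log-likelihood of an intercept-only model."""
    intercept = 0.0
    for _ in range(steps):
        p = sigmoid(intercept)
        # d/d(intercept) of the mean quasi-log-likelihood is mean(y - p).
        gradient = sum(y - p for y in ys) / len(ys)
        intercept += learning_rate * gradient
    return intercept


lgd = [0.0, 0.1, 0.25, 0.65, 1.0]  # hypothetical Loss Given Default values
fitted_mean = sigmoid(fit_intercept(lgd))
```

For an intercept-only model the fitted mean converges to the sample mean of the responses, which is a quick sanity check that fractional targets are handled sensibly.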

The Word2Vec algorithm now supports the continuous bag-of-words (CBOW) word model in addition to the Skip-Gram method for training word2vec embeddings.
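The difference between the two word models comes down to how training examples are formed from a context window. The sketch below shows this in plain Python; it illustrates the general technique only, not H2O's implementation, and the helper names and toy sentence are ours.

```python
def context_window(tokens, index, window):
    """Words within `window` positions of tokens[index], excluding the word itself."""
    start = max(0, index - window)
    stop = min(len(tokens), index + window + 1)
    return [tokens[i] for i in range(start, stop) if i != index]


def skip_gram_examples(tokens, window=1):
    """Skip-Gram: the center word is the input, each context word is a target."""
    return [
        (center, context_word)
        for i, center in enumerate(tokens)
        for context_word in context_window(tokens, i, window)
    ]


def cbow_examples(tokens, window=1):
    """CBOW: the context words together are the input, the center word is the target."""
    return [
        (context_window(tokens, i, window), center)
        for i, center in enumerate(tokens)
    ]


tokens = ["h2o", "builds", "models"]
skip_gram = skip_gram_examples(tokens)
cbow = cbow_examples(tokens)
```

Skip-Gram produces one example per (center, context) pair, while CBOW produces one example per position with the whole window as input.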

Improvements in MOJO/POJO

GBM POJO support has been extended to the SortByResponse and EnumLimited categorical encodings, so models configured with these encodings can now be exported as POJOs.

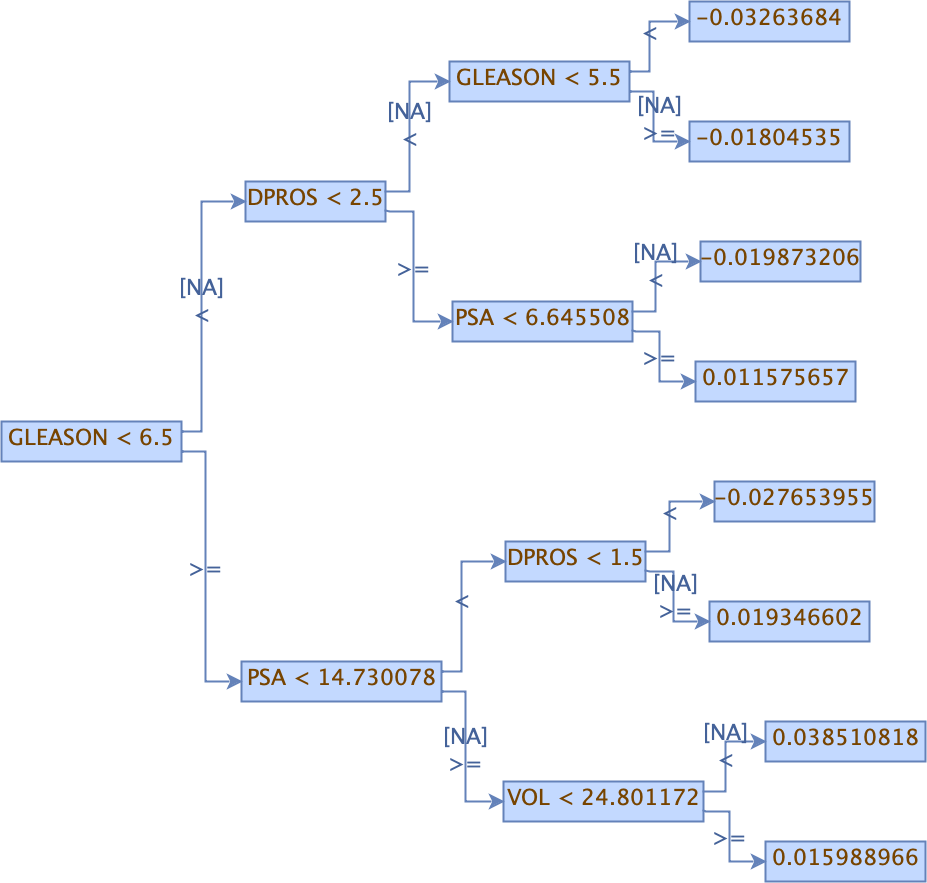

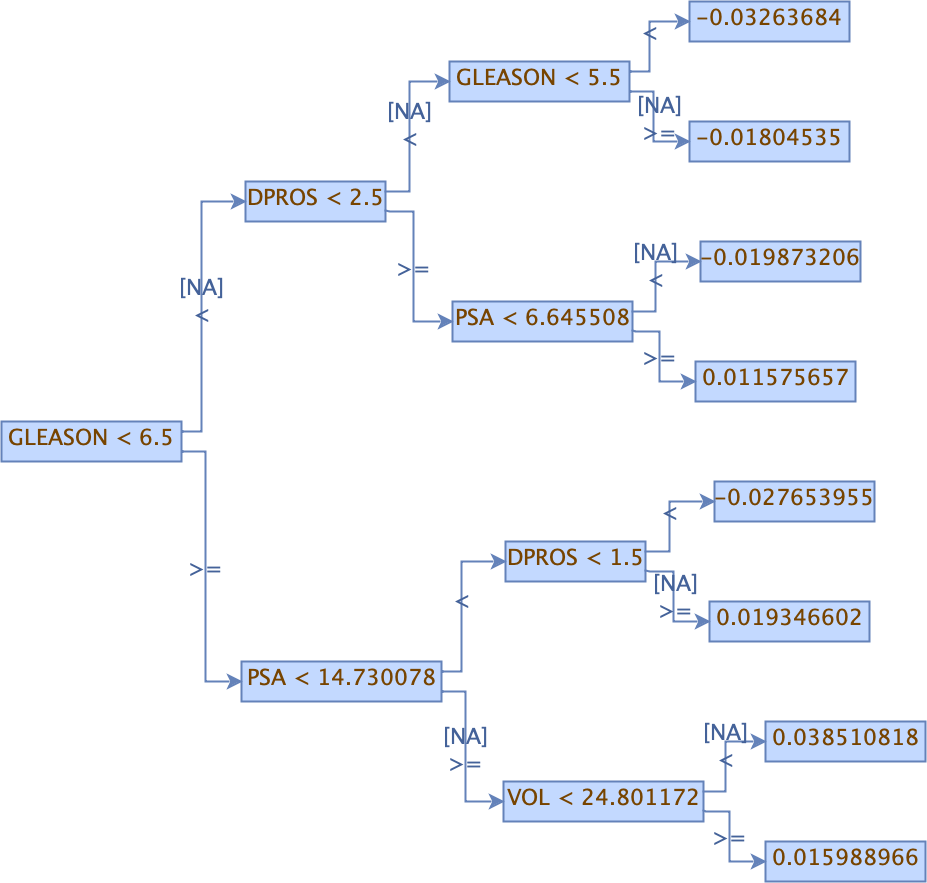

A new tree visualization option has been introduced as an alternative for users who cannot use our existing solution that requires Graphviz. The new option generates .png output directly and does not require any additional packages; hence it can be used in environments where Graphviz installation could be troublesome. The .png output can be generated by the following command: java -cp h2o.jar hex.genmodel.tools.PrintMojo --input model.zip --output tree.png --format png , where model.zip is the MOJO and tree.png is an output filename. Please note that Java 8 or later is required for visualizing trees in .png format.
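If you want to script this for several models, a small wrapper around the command is enough. The sketch below assumes java is on the PATH and that h2o.jar sits in the working directory; the function names are ours. Note that the options are written with two plain hyphens.

```python
import subprocess


def print_mojo_command(mojo_path, png_path, h2o_jar="h2o.jar"):
    """Build the PrintMojo command line that renders a MOJO's trees to a .png file."""
    return [
        "java", "-cp", h2o_jar,
        "hex.genmodel.tools.PrintMojo",
        "--input", mojo_path,
        "--output", png_path,
        "--format", "png",
    ]


def render_tree(mojo_path, png_path, h2o_jar="h2o.jar"):
    """Run PrintMojo; raises CalledProcessError if the Java process fails."""
    subprocess.run(print_mojo_command(mojo_path, png_path, h2o_jar), check=True)
```

Passing the arguments as a list avoids shell quoting problems with paths that contain spaces.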

Stacked Ensemble MOJOs can now be imported into H2O for scoring. This is part of a more generic H2O functionality named “H2O MOJO Import,” allowing MOJO models (regardless of H2O version) to be imported back into H2O, inspected, and used for making predictions inside the H2O cluster.

AutoML Improvements

This release sees the introduction of a new (and still experimental) modeling phase before the training of Stacked Ensembles in AutoML. After the original “exploration phase” consisting of the training of a collection of default models followed by several random grid searches, we introduced an “exploitation phase” that currently tries to fine-tune the best GBM and the best XGBoost trained during the exploration phase. Because this is experimental, this feature is disabled by default (exploitation_ratio=0.0), but it can easily be enabled from clients by setting the AutoML exploitation_ratio parameter to a positive value (e.g., exploitation_ratio=0.1 to dedicate approximately 10% of the time budgeted to exploitation).
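As a rough sketch of what the ratio means in practice, the helper below splits a time budget according to exploitation_ratio, and the second function shows how the parameter might be passed from the Python client. The split is simple arithmetic based on our reading of "approximately 10%" and is not the exact scheduling AutoML performs; the frame and column arguments are hypothetical.

```python
def split_time_budget(max_runtime_secs, exploitation_ratio):
    """Approximate split of the AutoML time budget between the two phases."""
    if not 0.0 <= exploitation_ratio <= 1.0:
        raise ValueError("exploitation_ratio must be between 0 and 1")
    exploitation_secs = max_runtime_secs * exploitation_ratio
    return {
        "exploration_secs": max_runtime_secs - exploitation_secs,
        "exploitation_secs": exploitation_secs,
    }


def run_automl(training_frame, response, predictors,
               max_runtime_secs=3600, exploitation_ratio=0.1):
    """Run AutoML with the exploitation phase enabled (needs a running H2O cluster)."""
    # Imported lazily so split_time_budget() can be used without h2o installed.
    from h2o.automl import H2OAutoML

    aml = H2OAutoML(
        max_runtime_secs=max_runtime_secs,
        exploitation_ratio=exploitation_ratio,
    )
    aml.train(x=predictors, y=response, training_frame=training_frame)
    return aml.leader
```

With the default ratio of 0.0, the whole budget goes to exploration, which matches the behavior when the feature is disabled.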

Another notable change, related to exploitation_ratio, concerns the time budget (provided by the max_runtime_secs parameter, or the 1-hour default if no max_models was provided). This budget is now distributed between both default models and grids. (In previous versions, default models were always trained until convergence, possibly quickly exhausting the entire time budget.) As a consequence, AutoML can build more models for larger datasets (though they’re less likely to converge if the time allocation is too small) and can benefit from the Stacked Ensembles at the end of the AutoML training. Users can usually expect significant improvements when increasing the time allocation. Finally, with more models built during the exploration phase, the new exploitation phase can focus on improving only the best models.

We also resolved many issues to improve the user experience with Stacked Ensemble and AutoML. For example, base models can now be added to a Stacked Ensemble by passing a grid search object directly, and the base_models and metalearner attributes are now exposed and populated when a Stacked Ensemble is accessed through h2o.get_model, or through automl.leader when the Stacked Ensemble is the leader.

Improvements in Constrained K-means R/Python API

Constrained K-means was introduced in a previous version; however, the performance was not optimal. In this release, we improved the calculation of Constrained K-means and added the possibility to set the minimum number of data points in each cluster through the R and Python APIs.

Still, Constrained K-means requires solving a Minimum-cost Flow problem. Although this problem can be solved efficiently in polynomial time, on big data the calculation will take significantly more time than classic K-means.

To solve Constrained K-means in a shorter time, the user can use the H2O Aggregator algorithm to aggregate the data to a smaller size first and then pass the aggregated data to the Constrained K-means algorithm to calculate the final centroids to be used for scoring. The results won’t be as accurate as those of a model trained on the whole dataset; however, this approach should make huge datasets tractable.

Demos using H2O Constrained K-means in combination with H2O Aggregator can be found here and here.

Documentation for Constrained K-means can be found here.

Documentation Updates

This version of H2O includes a number of new additions and improvements to the documentation.

New Addition Highlights

One highlight is documentation for the newly added GAM algorithm. Documentation for GAM can be found here.

Documentation has also been added for the new Fractional Binomial family in GLM. This documentation can be found here.

Improvement Highlights

Additional documentation improvements in this release include:

- In the Python client documentation, added examples to the Grid Search section.

- In the R client documentation, added examples for all functions and improved the Target Encoding example.

- Added more confusion matrix threshold details for binary and multiclass classification in the Performance and Prediction chapter.

- Improved the tabbing style for all examples in the User Guide.

Credits

This new H2O release is brought to you by Wendy Wong, Michal Kurka, Pavel Pscheidl, Jan Sterba, Veronika Maurerova, Zuzana Olajcova, Erin LeDell, Sebastien Poirier, Tomas Fryda, and Angela Bartz.