H2O.ai Blog

26 results

Explaining models built in H2O-3 — Part 1

Machine Learning explainability refers to understanding and interpreting the decisions and predictions made by a machine learning model. Explainability is crucial for ensuring the trustworthiness and transparency of machine learning models, particularly in high-stakes situations where the consequences of incorrect predictions can be signi...

Bias and Debiasing

An important aspect of practicing machine learning responsibly is understanding how models perform differently for different groups of people, such as those of different races, ages, or genders. Protected groups frequently have fewer instances in a training set, contributing to larger error rates for those groups. Some models...

Shapley Values - A Gentle Introduction

“If you can’t explain it to a six-year-old, you don’t understand it yourself.” – Albert Einstein

One fear caused by machine learning (ML) models is that they are black boxes that cannot be explained. Some are so complex that no one, not even domain experts, can understand why they make certain decisions. This is of particular concern when s...

How Much is My Property Worth?

Note: this is a guest blog post by Jaafar Almusaad. How Much is My Property Worth? This is the million-dollar question – both figuratively and literally. Traditionally, qualified property valuers are tasked with answering this question. It’s a lengthy and costly process, but more critically, it’s inconsistent and largely subjective. Mind you, ...

Building an AI Aware Organization

Responsible AI is paramount when we think about models that impact humans, either directly or indirectly. Models that make decisions about people, whether about creditworthiness, insurance claims, HR functions, or even self-driving cars, have a huge impact on humans. We recently hosted James Orton, Parul Pandey, and Sudala...

3 Ways to Ensure Responsible AI Tools are Effective

Since we began our journey making tools for explainable AI (XAI) in late 2016, we’ve learned many lessons, and often the hard way. Through headlines, we’ve seen others grapple with the difficulties of deploying AI systems too. Whether it’s: a healthcare resource allocation system that likely discriminated against millions of black peop...

5 Key Considerations for Machine Learning in Fair Lending

This month, we hosted a virtual panel with industry leaders and explainable AI experts from Discover, BLDS, and H2O.ai to discuss the considerations in using machine learning to expand access to credit fairly and transparently and the challenges of governance and regulatory compliance. The event was moderated by Sri Ambati, Founder and CE...

From GLM to GBM – Part 2

How an Economics Nobel Prize could revolutionize insurance and lending. Part 2: The Business Value of a Better Model. Introduction: In Part 1, we proposed better revenue and managing regulatory requirements with machine learning (ML). We made the first part of the argument by showing how gradient boosting machines (GBM), a type of ML, can mat...

From GLM to GBM - Part 1

How an Economics Nobel Prize could revolutionize insurance and lending. Part 1: A New Solution to an Old Problem. Introduction: Insurance and credit lending are highly regulated industries that have relied heavily on mathematical modeling for decades. In order to provide explainable results for their models, data scientists and statisticians i...

Brief Perspective on Key Terms and Ideas in Responsible AI

Introduction: As fields like explainable AI and ethical AI have continued to develop in academia and industry, we have seen a litany of new methodologies that can be applied to improve our ability to trust and understand our machine learning and deep learning models. As a result, we’ve seen several buzzwords emerge. In this short po...

Insights From the New 2020 Gartner Magic Quadrant For Cloud AI Developer Services

We are excited to be named a Visionary in the new Gartner Magic Quadrant for Cloud AI Developer Services (Feb 2020), and have been recognized for both our completeness of vision and ability to execute in the emerging market for cloud-hosted artificial intelligence (AI) services for application developers. This is the second Gartner MQ tha...

AI & ML Platforms: My Fresh Look at H2O.ai Technology

2020: A new year, a new decade, and with that, I’m taking a new and deeper look at the technology H2O.ai offers for building AI and machine learning systems. I’ve been interested in H2O.ai since its early days as a company (it was 0xdata back then) in 2014. My involvement had been only peripheral, but now I’ve begun to work with this comp...

Interview with Patrick Hall | Machine Learning, H2O.ai & Machine Learning Interpretability

In this episode of Chai Time Data Science, Sanyam Bhutani interviews Patrick Hall, Sr. Director of Product at H2O.ai. Patrick has a background in math and has completed an MS course in analytics. In this interview, they talk about Patrick’s journey into ML, ML interpretability, his journey at H2O.ai, how his work has ev...

Key Takeaways from the 2020 Gartner Magic Quadrant for Data Science and Machine Learning

We are named a Visionary in the Gartner Magic Quadrant for Data Science and Machine Learning Platforms (Feb 2020). We have been positioned furthest to the right for completeness of vision among all the vendors evaluated in the quadrant. So let’s walk you through the key strengths of our machine learning platforms. Automatic Machine Learn...

Why you should care about debugging machine learning models

This blog post was originally published here. Authors: Patrick Hall and Andrew Burt.

For all the excitement about machine learning (ML), there are serious impediments to its widespread adoption. Not least is the broadening realization that ML models can fail. And that’s why model debugging, the art and science of understanding and fixing p...

New Innovations in Driverless AI

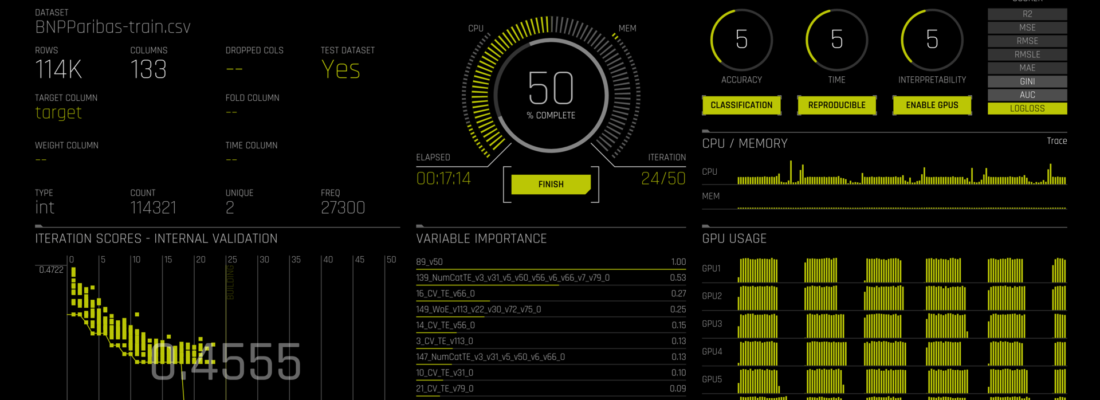

What’s new in Driverless AI: We’re super excited to announce the latest release of H2O Driverless AI. This is a major release with a ton of new features and functionality. Let’s quickly dig into all of that. Make Your Own AI with Recipes for Every Use Case: In the last year, Driverless AI introduced time-series and NLP recipes to meet the...

Toward AutoML for Regulated Industry with H2O Driverless AI

Predictive models in financial services must comply with a complex regime of regulations including the Equal Credit Opportunity Act (ECOA), the Fair Credit Reporting Act (FCRA), and the Federal Reserve’s S.R. 11-7 Guidance on Model Risk Management. Among many other requirements, these and other applicable regulations stipulate predictive ...

Building an Interpretable & Deployable Propensity AI/ML Model in 7 Steps…

To start with, you may have a tabular data set with a combination of dates/timestamps, categorical values, text strings, and numeric values. A business sponsor wants to build a Propensity to Buy model from historical data. How many steps does it take? Let’s find out. We are going to use H2O’s Driverless AI instance with 1 GPU (optional...

Can Your Machine Learning Model Be Hacked?!

I recently published a longer piece on security vulnerabilities and potential defenses for machine learning models. Here’s a synopsis. Introduction: Today it seems like there are about five major varieties of attacks against machine learning (ML) models and some general concerns and solutions of which to be aware. I’ll address them one-by-o...

H2O World Explainable Machine Learning Discussions Recap

Earlier this year, in the lead-up to and during H2O World, I was lucky enough to moderate discussions around applications of explainable machine learning (ML) with industry-leading practitioners and thinkers. This post contains links to these discussions, written answers, and pertinent resources for some of the most common questions asked ...

How to explain a model with H2O Driverless AI

The ability to explain and trust the outcome of an AI-driven business decision is now a crucial aspect of the data science journey. There are many tools in the marketplace that claim to provide transparency and interpretability around machine learning models, but how does one actually explain a model? H2O Driverless AI provides robust inte...

What is Your AI Thinking? Part 3

In the past two posts we’ve learned a little about interpretable machine learning in general. In this post, we will focus on how to accomplish interpretable machine learning using H2O Driverless AI. To review, the past two posts discussed: exploratory data analysis (EDA), accurate and interpretable models, global explanations, local...

What is Your AI Thinking? Part 2

Explaining AI to the Business Person: Welcome to Part 2 of our blog series, What is Your AI Thinking? We will explore some of the most promising testing methods for enhancing trust in AI and machine learning models and systems. We will also cover the best practice of model documentation from a business and regulatory standpoint. More Techniq...

What is Your AI Thinking? Part 1

Explaining AI to the Business Person: Explainable AI is in the news, and for good reason. Financial services companies have cited the ability to explain AI-based decisions as one of the critical roadblocks to further adoption of AI for their industry. Moreover, interpretability, fairness, and transparency of data-driven decision support sy...

How This AI Tool Breathes New Life Into Data Science

Ask any data scientist in your workplace: any supervised learning ML/AI project will go through many steps and iterations before it can be put into production, starting with the question “Are we solving for a regression or classification problem?” Data Collection & Curation; Are there Outliers?; What is the Distribu...

Interpretability: The missing link between machine learning, healthcare, and the FDA?

Recent advances enable practitioners to break open machine learning’s “black box.” From machine learning algorithms guiding analytical tests in drug manufacture, to predictive models recommending courses of treatment, to sophisticated software that can read images better than doctors, machine learning has promised a new world of healthcar...
