H2O.ai Blog

The Evolution of AI in Banking: Key Insights from Industry Experts

In a rapidly evolving regulatory and technological landscape, AI is no longer just a buzzword in banking—it’s a business imperative. But what does real adoption look like in an industry known for risk sensitivity and regulatory oversight? That was the focus of H2O.ai’s recent webinar, "Turning AI Strategy into Results", where ...

Securing Sovereign AI: Why FedRAMP ‘High’ Matters for the Future of Government AI

As someone who has spent a career working at the intersection of technology and government—first as a Federal CIO and CISO, now as the CISO at H2O.ai—I’ve seen firsthand the tension between innovation and security in the public sector. Today, I’m proud to share that H2O.ai has achieved FedRAMP® “In Process” status at the High Impact Level...

H2O.ai Tops the General AI Assistant (GAIA) Test

We're proud to announce that our h2oGPTe Agent has once again claimed the #1 spot on the prestigious GAIA (General AI Assistants) benchmark with an impressive 75% accuracy rate – the first time a grade of C has been achieved on the GAIA test set. Previously H2O.ai was first to get a passing grade on the GAIA test. This achievement places...

H2O.ai Leads the "General AI Assistants" (GAIA) Benchmark

We are proud to announce that our h2oGPTe Agent has once again taken 1st place on the prestigious GAIA (General AI Assistants) benchmark with an impressive 75% accuracy rate, the first time a grade of C has been achieved on the GAIA test set. Previously, H2O.ai was the first to obtain a passing grade on the test ...

Agentic AI at Scale: Unlocking Enterprise Value with Domain-Specific LLMs and Exabyte Data

We’d like to personally thank Savannah Peterson and Dave Vellante for an engaging and insightful discussion on theCUBE, where we discussed the importance of AI’s convergence with enterprise data at scale. NVIDIA GTC always spotlights where AI is headed, from new GPU architectures to state-of-the-art large language models (LLMs). During th...

The Battle for AI: Why Open Source Will Win | H2O.ai

Elon Musk just threw a $97.4 billion monkeywrench into OpenAI’s already tangled corporate transformation, exposing the real fight at hand: who gets to control the future of artificial intelligence? What started as a nonprofit mission to advance AI for humanity has turned into a high-stakes battle for dominance. OpenAI, once an open resea...

H2O.ai Tops GAIA Leaderboard: A New Era of AI Agents

We're excited to announce that our h2oGPTe Agent has achieved the top position on the GAIA (General AI Assistants, https://arxiv.org/abs/2311.12983) benchmark leaderboard (https://huggingface.co/spaces/gaia-benchmark/leaderboard), with a remarkable score of 65% - significantly outperforming other major players in the field. This achieveme...

Document Classification with H2O VL Mississippi: A Quick Guide

In this tutorial, we'll explore using H2O.ai's Vision-Language model (H2OVL-Mississippi-800M) for document classification (for example, in document processing automation). Despite its relatively compact size of 0.8 billion parameters, this model demonstrates impressive capabilities in text recognition and document understanding tasks. ...

Agents | Building your first Agent step-by-step with h2oGPTe & LLM Chains

H2O.ai Announces Collaboration with AI Verify Foundation to Drive Responsible AI Adoption at Scale in Singapore

At H2O.ai, our mission has always been clear: to enable the responsible and scalable adoption of AI. As part of our commitment to providing our customers with the tools they need to build, test, and govern their AI systems effectively, we are announcing our latest collaboration with AI Verify Foundation. The AI Verify Foundation is a not...

AI for Climate Science: Insights from the LEAP Atmospheric Physics Competition

In April 2024, Kaggle hosted the LEAP-Atmospheric Physics using AI (ClimSim) competition. The competition aimed to use AI to improve climate modeling, challenging participants to develop machine learning models that could enhance climate projections and reduce uncertainty in future climate trends. The goal was to employ faster ML model...

Model Selection | Routing you to the best LLM

Learn how h2oGPTe routes user queries to the best LLM based on preferences for latency, cost, or accuracy for chat and retrieval augmented generation. Welcome to Enterprise h2oGPTe, your Generative AI platform for interacting with a wide range of LLMs for chat, document question answering with Retrieval Augmented Generation, new content ...

LLM DataStudio - V6.0 Release

H2O LLM DataStudio is a no-code application created to streamline data preparation tasks for Large Language Models (LLMs). The tool features three main components: Curate, Prepare, and Custom Eval. Curate - Conversion of documents (PDFs, DOC & audio/video files) into question-answer pairs and summarization pairs Prepare - Prepar...

Fine Tuning The H2O Danube2 LLM for The Singlish Language

Singlish is an informal version of English spoken in Singapore. The primary variations lie in the style and structure of the text, and inclusion of elements of Chinese and Malay. Though Singlish is the common tongue in Singapore, it isn’t well defined or formalized. We fine tuned H2O.ai’s Danube-2 1.8B LLM on Singlish instruction data, wi...

Announcing H2O Danube 2: The next generation of Small Language Models from H2O.ai

A new series of Small Language Models from H2O.ai, released under Apache 2.0 and ready to be fine-tuned for your specific needs to run offline and with a smaller footprint. Why Small Language Models? Like most decisions in AI and tech, the decision of which Language Model to use for your production use cases comes down to trade-offs. ...

H2O Release 3.46

We are excited to announce the release of H2O-3 3.46.0.1! Some of the highlights of this major release are that we added custom metric support for XGBoost, allowed grid search models to be sorted with custom metrics, and we enabled H2O MOJO and POJO to work with MLFlow. Several improvements were also made to the Uplift model (like MLI ...

Open-Weight AI Models: A Path to Responsible Innovation

The recent Request for Comments (RFC) issued by the National Telecommunications and Information Administration (NTIA) on open-weight AI models has sparked an important conversation about the future of AI. As we consider the potential benefits and risks associated with making AI model weights more accessible and transparent, it is clear ...

Transforming Latin American Companies with Artificial Intelligence: Strategies and Perspectives

Today there is clearly a great deal of excitement in forums and publications about the use of artificial intelligence (AI) in different areas of business. People often talk about the major changes that AI brings to business processes; however, most of these successful use cases belong to com...

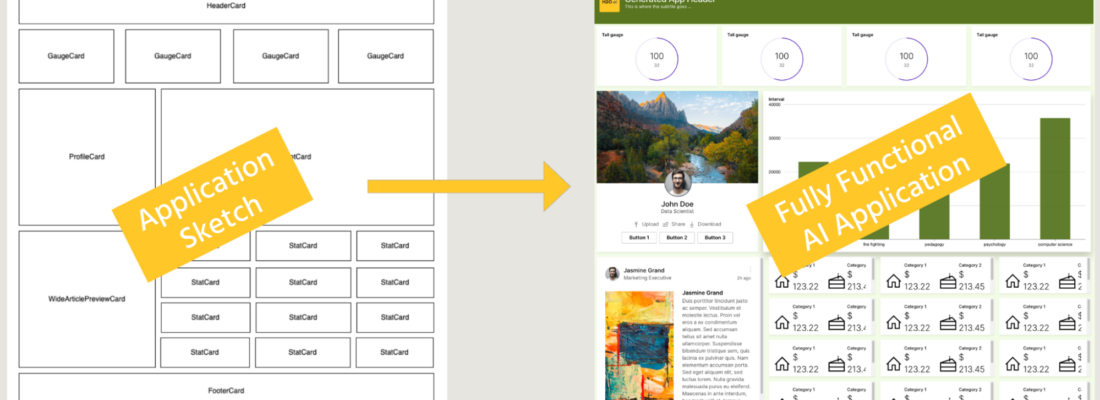

Unlocking GenAI Magic: GenAI AppStudio Revolutionizes App Development with LLMs! (Part 2)

GenAI AppStudio provides a no-code way to turn user sketches into generated code. Introducing GenAI AppStudio: GenAI AppStudio is a no-code platform crafted specifically for non-technical users to easily transform app ideas into reality in a few simple steps. One of its key features is the ability to sea...

H2O LLM DataStudio: V4.1 Release

H2O LLM DataStudio is a comprehensive no-code application designed to simplify data preparation tasks for Large Language Models (LLMs). This tool comprises three key components: Curate, Prepare, and Augment. Curate - Conversion of documents (PDFs, DOC & audio/video files) into question-answer pairs and summarization pairs Prepare ...

Introducing the H2O GenAI App Store: A Playground of Generative AI Innovation

As the world becomes increasingly interconnected and reliant on data-driven decisions, the need for powerful and innovative AI solutions has never been more critical. At H2O.ai, we've been at the forefront of AI and machine learning for the last decade, providing you with the tools and platforms to harness the power of data. Today, we're ...

Introducing the H2O GenAI App Store: A Playground of Innovation in Generative Artificial Intelligence

This blog was originally published in English here: https://h2o.ai/blog/2023/gen-ai-app-store/ As the world becomes increasingly interconnected and dependent on data-driven decisions, the need for powerful and innovative AI solutions has never been more critical. At H2O.ai, we have been at the forefront of AI and ...

Introducing the H2O GenAI App Store: A Playground of Innovation in Generative Artificial Intelligence

This blog was originally published in English here: https://h2o.ai/blog/2023/gen-ai-app-store/ As the world becomes increasingly interconnected and dependent on data-driven decisions, the need for powerful and innovative artificial intelligence (AI) solutions has never been more critical. At H2O.ai, we have been at the ...

H2O Release 3.44

We are excited to announce the release of H2O-3 3.44.0.1! We have added and improved many items. A few of our highlights are the implementation of AdaBoost, Shapley values support, Python 3.10 and 3.11 support, and added custom metric support for Deep Learning, Uplift Distributed Random Forest (DRF), Stacked Ensemble, and AutoML. Please r...

Boosting LLMs to New Heights with Retrieval Augmented Generation

Businesses today can make leaps and bounds to revolutionize the way things are done with the use of Large Language Models (LLMs). LLMs are widely used by businesses today to automate certain tasks and create internal or customer-facing chatbots that boost efficiency. Challenges with dynamic adaptation of LLMs: As with any new hyped-up thi...

Training Your Own LLM Without Coding

This blog was originally published in English here: https://www.analyticsvidhya.com/blog/2023/09/training-your-own-llm-without-coding/ Introduction: Generative Artificial Intelligence, a fascinating field that promises to revolutionize how we interact with technology and generate content, has taken the world by storm. In this arti...

H2O LLM DataStudio Part II: Convert Documents to QA Pairs for fine tuning of LLMs

Convert unstructured datasets to Question-answer pairs required for LLM fine-tuning and other downstream tasks with H2O LLM Data Studio Curate. Every organization needs to own its GPT as simply as it needs to bring its data, algorithms, and models (read more here). A common problem we see in organizations is that they want to be able to...

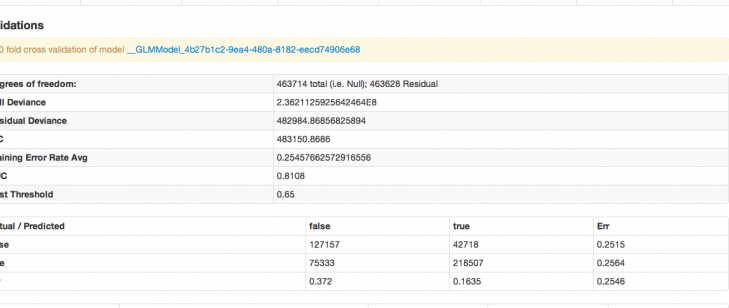

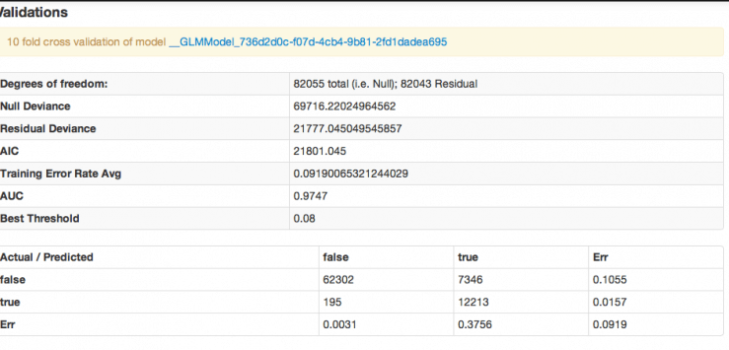

Building a Fraud Detection Model with H2O AI Cloud

In a previous article [1], we discussed how machine learning could be harnessed to mitigate fraud. This time, we’ll delve into a step-by-step guide on leveraging H2O AI Cloud to construct efficient fraud detection models. We’ll tackle this process in three critical stages: build, operate, and detect. First, we’ll utilize Driverless AI in ...

A Look at the UniformRobust Method for Histogram Type

Tree-based algorithms, especially Gradient Boosting Machines (GBMs), are among the most popular algorithms in use. They often outperform linear models and neural networks on tabular data because they use a boosted approach in which each tree works to fix the error of the previous tree. As the model trains, it is continuously self-corr...

Testing Large Language Model (LLM) Vulnerabilities Using Adversarial Attacks

Adversarial analysis seeks to explain a machine learning model by understanding locally what changes need to be made to the input to change a model’s outcome. Depending on the context, adversarial results could be used as attacks, in which a change is made to trick a model into reaching a different outcome. Or they could be used as an exp...

H2O LLM EvalGPT: A Comprehensive Tool for Evaluating Large Language Models

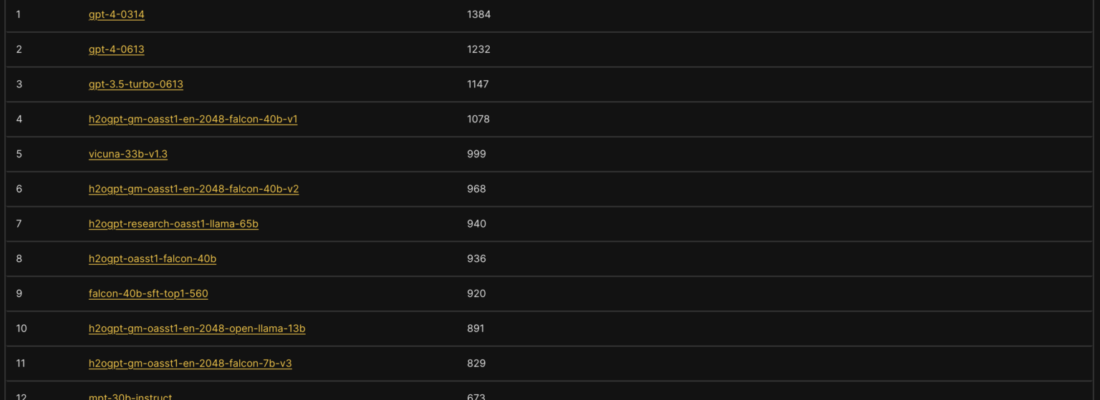

In an era where Large Language Models (LLMs) are rapidly gaining traction for diverse applications, the need for comprehensive evaluation and comparison of these models has never been more critical. At H2O.ai, our commitment to democratizing AI is deeply ingrained in our ethos, and in this spirit, we are thrilled to introduce our innovati...

Reducing False Positives in Financial Transactions with AutoML

In an increasingly digital world, combating financial fraud is a high-stakes game. However, the systems we deploy to safeguard ourselves are raising too many false alarms, with over 90% of fraud alerts being false positives. These false positives, not only frustrating for consumers but also costly for financial institutions, can eclipse t...

Winner's Insight: Navigating the Parkinson's Disease Prediction Challenge with AI

Parkinson’s disease, a condition affecting movement, cognition, and sleep, is escalating rapidly. By 2037, it is projected that around 1.6 million U.S. residents will be confronting this disease, resulting in significant societal and economic challenges. Studies have hinted that disruptions in proteins or peptides could be instrumental in...

H2O.ai and Snowflake Enable Developers to Train, Deploy, and Score Containerized Software Without Compromising Data Security

H2O.ai today announced its participation as a launch partner for Snowflake’s Snowpark Container Services (available in private preview), which provides our joint customers with the flexibility to train, deploy, and score models all within their Snowflake account. This further expands the ease of use for data science teams to create machin...

H2O Releases 3.40.0.1 and 3.42.0.1

Our new major releases of H2O are packed with new features and fixes! Some of the major highlights of these releases are the new Decision Tree algorithm, the added ability to grid over Infogram, an upgrade to the version of XGBoost and an improvement to its speed, the completion of the maximum likelihood dispersion parameter and its expan...

Generating LLM Powered Apps using H2O LLM AppStudio – Part 1: Sketch2App

sketch2app is an application that lets users instantly convert sketches to fully functional AI applications. This blog is Part 1 of the LLM AppStudio Blog Series and introduces sketch2app. The H2O.ai team is dedicated to democratizing AI and making it accessible to everyone. One of the focus areas of our team is to simplify the adoption of...

H2O LLM DataStudio: Streamlining Data Curation and Data Preparation for LLMs related tasks

A no-code application and toolkit to streamline data preparation tasks related to Large Language Models (LLMs) H2O LLM DataStudio is a no-code application designed to streamline data preparation tasks specifically for Large Language Models (LLMs). It offers a comprehensive range of preprocessing and preparation functions such as text cl...

Recap of H2O World India 2023: Advancements in AI and Insights from Industry Leaders

On April 19th, the H2O World made its debut in India, marking yet another milestone in its global journey. The conference gathered an array of notable experts and enthusiasts from deep learning, artificial intelligence, and data science. A broad spectrum of topics was covered, shedding light on the strides made in AI technology and its ...

Enhancing H2O Model Validation App with h2oGPT Integration

As machine learning practitioners, we’re always on the lookout for innovative ways to streamline and enhance our processes. What if we could integrate the power of language models into our workflows, especially in the critical phase of model validation? Imagine running validation procedures, interpreting results, or even troubleshooting i...

Building a Manufacturing Product Defect Classification Model and Application using H2O Hydrogen Torch, H2O MLOps, and H2O Wave

Primary Authors: Nishaanthini Gnanavel and Genevieve Richards Effective product quality control is of utmost importance in the manufacturing industry. The presence of defective components can have adverse effects on various aspects, including escalating production costs, compromising product quality, diminishing product longevity, and l...

Insights from AI for Good Hackathon: Using Machine Learning to Tackle Pollution

At H2O.ai, we believe technology can be a force for good, and we’re committed to leveraging its power to create a positive impact in the world. As part of this commitment, we recently organized an AI for Good Hackathon during the H2O World India event, where participants had the opportunity to apply their data science skills to a real-wor...

Democratization of LLMs

Every organization needs to own its GPT as simply as we need to own our data, algorithms and models. H2O LLM Studio democratizes LLMs for everyone allowing customers, communities and individuals to fine-tune large open source LLMs like h2oGPT and others on their own private data and on their servers. Every nation, state and city needs it...

Building the World's Best Open-Source Large Language Model: H2O.ai's Journey

At H2O.ai, we pride ourselves on developing world-class Machine Learning, Deep Learning, and AI platforms. We released H2O, the most widely used open-source distributed and scalable machine learning platform, before XGBoost, TensorFlow and PyTorch existed. H2O.ai is home to over 25 Kaggle grandmasters, including the current #1. In 2017, w...

Effortless Fine-Tuning of Large Language Models with Open-Source H2O LLM Studio

While the pace at which Large Language Models (LLMs) have been driving breakthroughs is remarkable, these pre-trained models may not always be tailored to specific domains. Fine-tuning — the process of adapting a pre-trained language model to a specific task or domain—plays a critical role in NLP applications. However, fine-tuning can be ...

What's new in the latest release of H2O AI Hybrid Cloud?

Check out the complete release notes here! v23.01.0 | Apr 14, 2023. Upgraded Components: Core Components. AI App Store v0.22.0: The AI App Store is a platform for accessing and operationalizing AI/ML applications and services that are built using H2O Wave. The 23.01.0 Hybrid Cloud release introduces multiple UI enhancements to make the us...

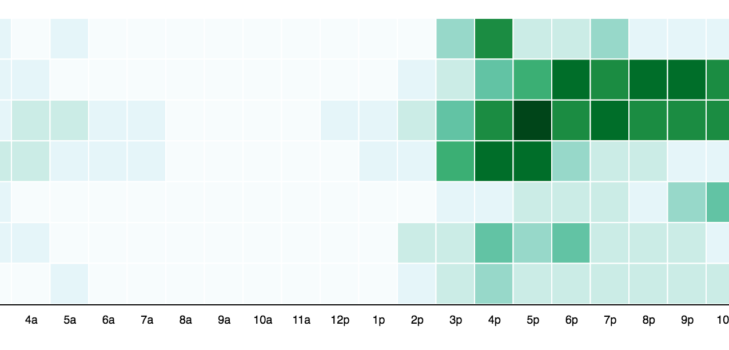

Navigating the challenges of time series forecasting

Jon Farland is a Senior Data Scientist and Director of Solutions Engineering for North America at H2O.ai. For the last decade, Jon has worked at the intersection of research, technology and energy sectors with a focus on developing large scale and real-time hierarchical forecasting systems. The machine learning models that drive these for...

How Commonwealth Bank is transforming operations with Document AI

Sonal Surana, General Manager at Commonwealth Bank of Australia, shares recent innovative ideas at H2O World Sydney. It’s been a rollercoaster of a ride this first year of our partnership with H2O.ai, and the momentum continues to get even more exciting. We’ve heard from Matt about our AI ambition and how front and center it is for CBA s...

Introduction to H2O Document AI

Mark Landry, H2O.ai Director of Data Science and Product and Kaggle Grandmaster, showcases H2O Document AI during the Technical Track Sessions at H2O World Sydney 2022. Mark Landry: I’m Mark Landry, with some different titles than you see on the screen here. I’ve got a bunch at H2O, so I’ve been at H2O for about seven and a half years...

AI in Insurance: Resolution Life's AI Journey with Rajesh Malla

Rajesh Malla, Head of Data Engineering – Data Platforms COE at Resolution Life insurance, takes the stage at H2O World Sydney 2022 to discuss AI transformation within the insurance industry. Resolution Life is the largest life insurer in Australasia. Malla discusses the use of H2O Driverless AI to predict claim triage and other insurance ...

AT&T panel: AI as a Service (AIaaS)

Mark Austin, Vice President of Data Science at AT&T, joined us on stage at H2O World Dallas, along with his colleagues Mike Berry, Lead Solution Architect; Prince Paulraj, AVP of Engineering; Alan Gray, Principal-Solutions Architect; and Rob Woods, Lead Solution Architect, CDO, to discuss what they’re doing today and where they see the ...

[Infographic] Healthcare providers: How to avoid AI “Pilot-Itis”

From increased clinician burnout and financial instability to delays in elective and preventative care, the pandemic created a perfect storm of conditions that have strained the healthcare system in lasting ways. This storm continues unabated and is unleashing new challenges and exacerbating old ones. Artificial intelligence (AI) technol...

Deploy a WAVE app on an AWS EC2 instance

This article was originally published by Greg Fousas and Michelle Tanco on Medium and reviewed by Martin Turoci (unusualcode). This guide will demonstrate how to deploy a WAVE app on an AWS EC2 instance. WAVE can run on many different OSs (macOS, Linux, Windows) and architectures (Mac, PC). In this document, Ubuntu Linux will be used. T...

How Horse Racing Predictions with H2O.ai Saved a Local Insurance Company $8M a Year

In this Technical Track session at H2O World Sydney 2022, SimplyAI’s Chief Data Scientist Matthew Foster explains his journey with machine learning and how applying the H2O framework resulted in significant success on and off the race track. Matthew Foster: I’m Matthew Foster, the Chief Data Scientist for SimplyAI. So, I’m going t...

AI and Humans Combating Extinction Together with Dr. Tanya Berger-Wolf

Dr. Tanya Berger-Wolf, Co-Founder and Director of AI for conservation nonprofit Wild Me, takes the stage at H2O World Sydney 2022 to discuss AI solutions for wildlife conservation, connecting data, people, and machines. AI can turn a massive collection of images into high-resolution information databases about wildlife, enabling scienti...

Improving Search Query Accuracy: A Beginner's Guide to Text Regression with H2O Hydrogen Torch

Although search engines are vital to our daily lives, they need help understanding complex user queries. Search engines rely on natural language processing (NLP) to understand the intent behind a user’s query and return relevant results. By formulating a well-formed question, users can provide more precise and specific information about w...

What it means, and takes, to be at AI’s edge with Dr. Tim Fountaine

Dr. Tim Fountaine, Senior Partner at McKinsey & Company, joins us at H2O World Sydney 2022 to discuss why business leaders should care about AI, what mindset to adopt, and what actions to take to effectively bring AI into your organization. Dr. Fountaine discusses real-world examples and insights from McKinsey’s collaboration with the ...

10 Tips to Become a Successful Data Scientist

Data science is here to stay. Data scientists use their skills to help companies make better decisions about their products and services, optimize processes, cut costs, and improve profitability. Becoming a successful data scientist involves many aspects and continuous study, since it is a...

Explaining models built in H2O-3: Part 1

Machine Learning explainability refers to understanding and interpreting the decisions and predictions made by a machine learning model. Explainability is crucial for ensuring the trustworthiness and transparency of machine learning models, particularly in high-stakes situations where the consequences of incorrect predictions can be signi...

H2O.ai at NeurIPS 2022

H2O.ai is proud to participate in the 36th Conference on Neural Information Processing Systems (NeurIPS) 2022, one of the biggest and most prestigious international conferences in artificial intelligence. NeurIPS 2022 will be a Hybrid Conference from Monday, November 28th through Friday, December 9th, with an in-person event at the New Or...

A Brief Overview of AI Governance for Responsible Machine Learning Systems

Our paper “A Brief Overview of AI Governance for Responsible Machine Learning Systems” was recently accepted to the Trustworthy and Socially Responsible Machine Learning (TSRML) workshop at NeurIPS 2022 (New Orleans). In this paper, we discuss the framework and value of AI Governance for organizations of all sizes, across all industries a...

H2O World Dallas Customer Talks

After three long years of not having an #H2OWorld, we finally held our first one in Sydney to a sold-out crowd! We then followed it up with H2O World Dallas in the same week! It was a fantastic and jam-packed event with customers, partners, colleagues, and community members sharing how they leverage H2O.ai to accelerate and transform AI l...

New in Wave 0.24.0

Another Wave release has arrived with quite a few exciting new features. Let’s quickly go over the biggest ones. Wave init CLI: How many times have you wanted to build a Wave app fast, only to realize you need to start from scratch, copy over the skeleton of your app and work up from there? For these exact reasons, we introduced a new wave...

H2O.ai Raises $40 Million to Democratize Artificial Intelligence for the Enterprise

Series C round led by Wells Fargo and NVIDIA. MOUNTAIN VIEW, CA – November 30, 2017 – H2O.ai, the leading company bringing AI to enterprises, today announced it has completed a $40 million Series C round of funding led by Wells Fargo and NVIDIA with participation from New York Life, Crane Venture Partners, Nexus Venture Partners and Tra...

H2O.ai Placed Furthest in Completeness of Vision in 2021 Gartner Data Science and Machine Learning Magic Quadrant in the Visionaries Quadrant

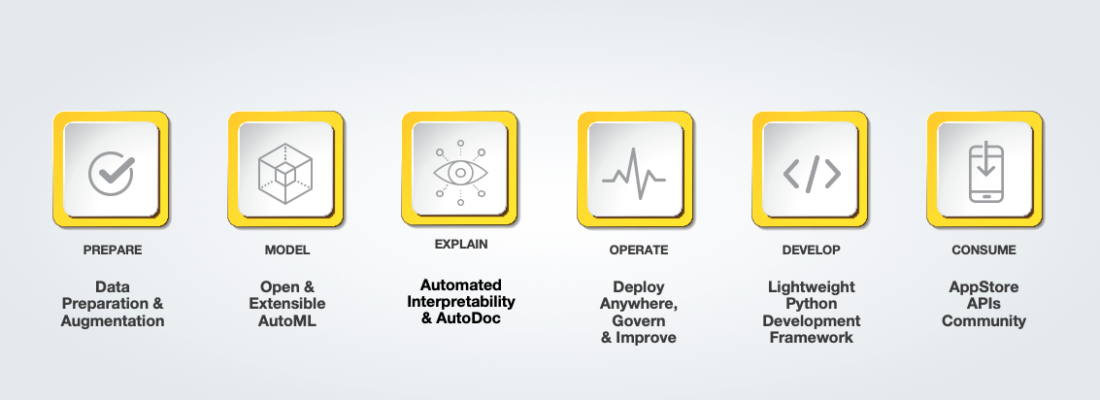

At H2O.ai, our mission is to democratize AI, and we believe driving value from data is a team sport. Data needs to be organized and prepared, often by data engineers, and then models need to be built by data scientists. With models built, they need to be put into production and maintained by IT and DevOps personnel. Finally, these models...

H2O.ai Expands Market Footprint in Healthcare AI by Signing Hackensack Meridian Health and Other Key Providers

We’re excited to attend the HLTH conference this week in Las Vegas, NV. This industry event has quickly become the go-to event for c-level executives across all parts of the healthcare industry. It’s both incredible and inspiring to see how quickly the event has grown in its five years, and that’s why we’re excited to share some news abou...

An Introduction to H2O Wave Table

H2O Wave is a Python package for creating realtime ML/AI applications for a wide variety of data science workflows and industry use cases. Data scientists view a significant amount of data in tabular form. Running SQL queries, pivoting data in Excel or slicing a pandas dataframe are pretty much bread-and-butter tasks. With the growing u...

Saving Zebras: “Their stripes are like fingerprints. No two are alike.”

It’s been said that a picture is worth a thousand words. But to Tanya Berger-Wolf, a picture is far more valuable than that. To Berger-Wolf, photos, images and videos are key to protecting biodiversity and entire species around the world. Scientists have known for years that we are in the middle of the sixth mass extinction on our planet...

H2O Managed Cloud With AWS PrivateLink is Now Generally Available

An essential part of responsibly practicing machine learning is understanding how you secure your data. H2O Managed Cloud offers a single-tenant cloud environment with multiple layers of security, but how do you get your data securely into the cloud for training, and how do you score sensitive information without exposing it to the inte...

H2O.ai Receives Innovation Award for H2O Hydrogen Torch

We don’t like to brag, but we do like to celebrate the work our Makers create, and more importantly, why they create it: for you. H2O.ai was proud to accept the award for “Best Deep Learning Technology” at the AI Tech awards. H2O Hydrogen Torch, a no-code deep learning training engine, was released less than a year ago in February 2022...

AI for Good: PetFinder.my Levels Up Furry Matchmaking

Nothing tugs at the heart strings quite like a poster in your neighborhood about a missing cat or dog. For years, technology has enabled lost pets to be reunited with their families in the form of a small microchip that contains an owner’s contact information. Now some organizations are turning to emerging technology to help the millions ...

H2O Wave joins Hacktoberfest

It’s that time of the year again. Hacktoberfest, a great initiative by DigitalOcean that aims to bring more people to open source, is about to start. Hacktoberfest incentivizes people to make at least 4 valuable contributions (pull requests) to an open source repository and get the reward i...

Three Keys to Ethical Artificial Intelligence in Your Organization

There’s certainly been no shortage of examples of AI gone bad over the past few years, enough to give everyone pause on how (and if) this technology can truly be used for good. If it’s not Facebook selling data of its users, it’s self-driving cars from Uber that can’t recognize pedestrians in time to slow down or stop. So while the uses ...

Predicting Gender Differences in the Brain Using Machine Learning Automation Solution H2O Driverless AI

Brain cognitive development in childhood affects higher cognitive functions such as memory, attention, and sociability, and leads to brain development in adolescence ...

Make with H2O.ai Recap: Validation Scheme Best Practices

Data Scientist and Kaggle Grandmaster, Dmitry Gordeev, presented at the Make with H2O.ai session on validation scheme best practices, our second accuracy masterclass. The session covered key concepts, different validation methods, data leaks, practical examples, and validation and ensembling. Key Concepts While the validation topics cove...

Integrating VSCode editor into H2O Wave

Let’s have a look at how to provide our users with a truly amazing experience when we need to allow them to edit pieces of code or configuration. We will use one of the most popular and well-known code editors called Monaco editor which powers VSCode. The resulting app will have the editor on the left side and a markdown card on the righ...

5 Tips for Improving Your H2O Wave Apps

Let’s quickly uncover a few simple tips that are quick to implement and have a big impact. Do not recreate navigation, update it: The most common error I see across Wave apps is ugly navigation that seems laggy. The reason for this behavior is that we want to save the clicked value and set it e...

Make with H2O.ai Recap: Getting Started with H2O Document AI

Product Owner, Data Scientist, and Kaggle Grandmaster Mark Landry presented at the Make with H2O.ai session on getting started with H2O Document AI. The session covered an overview of H2O Document AI, a tool to extract insights and automate document processing. The session also included a product demo, looking at documents as data sets...

Read moreAdvice for Those Getting Started on Their AI Journey

H2O.ai Innovation Day Summer ‘22 included a customer insights panel made up of Prince Paulraj, AVP, Data Insights and Chief Data Officer at AT&T; Chris Throop, Managing Director and Global Head of Data Science at Castleton Commodities International; and Sean Otto, Director of Advanced Analytics at AES. One of the questions panelists...

Read moreAES Transforms its Energy Business with AI and H2O.ai

AES is a leading renewable-energy company with global operations. The business produces and distributes energy for private, public, and governmental organizations. AES was recently named one of the World’s Most Ethical Companies for the ninth straight year and won the Edison Electric Institute’s (EEI’s) Edison Award – the indus...

Read moreThe H2O.ai Wildfire Challenge Winners Blog Series - Team Titans

Note: this is a community blog post by Team Titans – one of the H2O.ai Wildfire Challenge winners. You can check out their app here. Background: Forest fires have been getting worse in recent years. According to a report by the WWF, the duration of fire seasons across the globe has increased by 19% on average. The fire season has been sta...

Read moreImproving Machine Learning Operations with H2O.ai and Snowflake

Operationalizing models is critical for companies to get a return on their machine learning investments, but deployment is only one part of that operationalization process. With H2O.ai’s latest Snowflake Integration Application, authorized Snowflake users can easily deploy models, significantly reducing deployment timelines and enabling a...

Read moreImproving Manufacturing Quality with H2O.ai and Snowflake

Manufacturers are rapidly expanding their machine learning use cases by leveraging the deep integration between Snowflake’s Data Cloud and the H2O AI Cloud. Many current manufacturing quality checks require that sensor data and image data be processed and analyzed separately. Standard tooling presents challenges in storing and referencin...

Read moreThe H2O.ai Wildfire Challenge Winners Blog Series - Team PSR

Note: this is a community blog post by Team PSR – one of the H2O.ai Wildfire Challenge winners. This blog describes the experience we gained by participating in the H2O.ai Wildfire Challenge. We should mention that competing in this challenge is like a journey through a pool of knowledge. For a person who is willing to get the knowledge of buildin...

Read moreDeveloping and Retaining Data Science Talent

It’s been almost a decade since the Harvard Business Review proclaimed that “Data Scientist” is the sexiest job of the 21st century. Since then, there has been an explosion of job opportunities and university degree programs claiming to give students all of the skills they need to excel in the field of data science. Yet, the scarcity of ...

Read moreThe H2O.ai Wildfire Challenge Winners Blog Series - Team HTB

Note: this is a community blog post by Team HTB – one of the H2O.ai Wildfire Challenge winners. You can check out their app here. The Challenge: The purpose of the challenge was to develop an AI application to improve the forecast of bushfires and wildfires, with the main aim of reducing the human losses that these phenomena can cause...

Read moreThe H2O.ai Wildfire Challenge Winners Blog Series - Team Too Hot Encoder

Note: this is a community blog post by Team Too Hot Encoder – one of the H2O.ai Wildfire Challenge winners. You can check out their app here. The Challenge: The aim of the project is to predict the probability of wildfire occurrence in Turkey for each month in 2020. Based on these predictions, the goal is to carry out more intensive...

Read moreBias and Debiasing

An important aspect of practicing machine learning in a responsible manner is understanding how models perform differently for different groups of people, for instance with different races, ages, or genders. Protected groups frequently have fewer instances in a training set, contributing to larger error rates for those groups. Some models...

Read moreComprehensive Guide to Image Classification using H2O Hydrogen Torch

In this article, we will learn how to build state-of-the-art models in computer vision and natural language processing within a couple of minutes using H2O Hydrogen Torch. Introduction to H2O Hydrogen Torch H2O Hydrogen Torch (HT) aims to simplify building and deploying deep learning models for a wide range of tasks in computer vision...

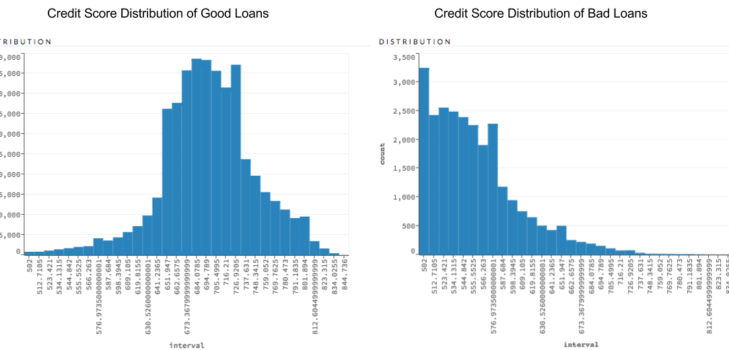

Read moreDemocratizing Lending through AI

According to the Federal Reserve, nearly 40% of adults in the U.S. sought credit in 2020, only slightly fewer than those who applied in the previous pre-pandemic year; among those who applied, more than 1 in 10 were denied credit or were approved for less than they had sought. The reasons behind these denials are many; however, the same r...

Read moreSetting Up Your Local Machine for H2O AI Cloud Wave App Development

This article is for users who would like to build H2O Wave apps and publish them in the App Store within the H2O AI Cloud (HAIC). We will walk through how to set up your local machine for HAIC Wave App development. Instructions Developing with Wave H2O Wave is a framework for building frontends using only python or R. In this article...

Read moreData Science with H2O.ai: An Introduction to Machine Learning and Predictive Modeling

Our own Jonathan Farland recently recorded a talk about machine learning and predictive modeling. In his talk, Jon also gave an overview of open source H2O and H2O AI Cloud. This video is a great resource for getting up to speed with the latest technology from H2O in half an hour. Some of you may prefer to go through the slides while l...

Read moreGene Mutation AI

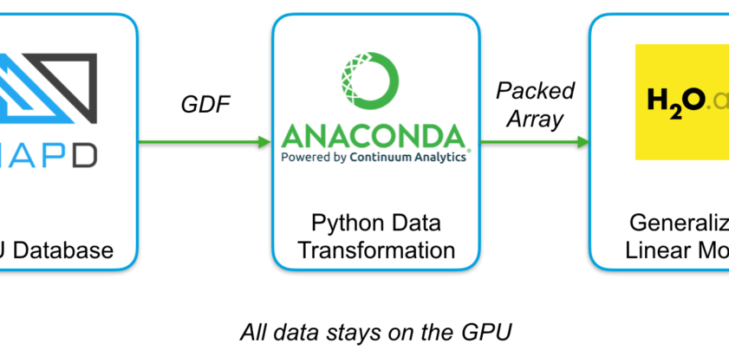

A genomics AI solution from H2O.ai Health Powered by NVIDIA GPUs and NVIDIA AI As precision medicine becomes more widespread, both medical diagnosis and drug discovery are increasingly relying on and leveraging the individual’s genomic and phenotypic profiles. From the multiple types and subtypes of cancer to heart disease, to obesity or ...

Read moreExpression Biomarker AI

A drug discovery AI solution from H2O.ai Health Powered by NVIDIA GPUs and NVIDIA AI In a healthy individual, each cell type has its own metabolic program, carrying out specific functions. This organization is disrupted in disease, either as a cause or a result of it, or both, and this disruption is reflected in the patient’s gene exp...

Read moreGene Mutation AI and the Future of Cancer Research

A genomics AI solution from H2O.ai Health Powered by NVIDIA GPUs and NVIDIA AI Cancer is a multifactorial disease with exact causes we have only recently begun to understand. While inherited germline mutations are understood to create a genetic predisposition to the disease, stochastic accumulation of somatic mutations over a person’s...

Read moreVaccine NLP

A population and public health NLP solution from H2O.ai Health Powered by NVIDIA GPUs and NVIDIA AI Social media platforms such as Twitter and Reddit have become invaluable tools for communication between individuals or groups and are widely used globally. As messages on these platforms can instantly be accessed by all users and remain on...

Read moreUnsupervised Learning Metrics

That which is measured improves – Karl Pearson, Mathematician. Almost everyone has heard of accuracy, precision, and recall – the most common metrics for supervised learning. But not as many people know the metrics for unsupervised learning. So, in this article, we will take you through the most common methods and how to implement th...

Read moreDemand Sensing with H2O Wave: Supply Chain Intelligence and Inventory Optimization for Retail, CPG, and FMCG Industries

Demand Sensing can help optimize inventories by analyzing and modeling short-term and real-time signals. The supply chains across the Consumer Packaged Goods (CPG), Fast-Moving Consumer Goods (FMCG) and Retail sectors need to continuously monitor the drivers that may impact their internal models and processes. These include systems around ...

Read moreTackling Illegal, Unreported, and Unregulated (IUU) Fishing with AI

According to a report by the High-Level Panel for a Sustainable Ocean Economy, it is estimated that illegal, unreported, and unregulated (IUU) fishing accounts for 20 percent of the seafood and up to 50 percent in some areas. These activities not only affect the marine ecosystem but, in a way, are linked to climate change on the planet a...

Read moreAI Application to Demonstrate K-Means Clustering Using H2O Wave

Note: this is a community blog post by Shamil Dilshan Prematunga. It was first published on Medium. In this blog, I am going to highlight how cool H2O Wave is by demonstrating my application called “K means App”, which was built using Wave 0.20.0. This is a simple application I have created to demonstrate one of the unsupervised lea...

Read moreA Quick Introduction to PyTorch: Using Deep Learning for Stock Price Prediction

Torch is a scalable and efficient deep learning framework. It offers flexibility and speed to build large scale applications. It also includes a wide range of libraries for developing speech, image, and video-based applications. The basic building block of Torch is called a tensor. All the operations defined in Torch use a tensor. Ok, l...

Read moreIntroducing H2O Hydrogen Torch: A No-code Deep Learning Framework

Over and over again we heard from customers, “deep learning is cool, but it’s hard and time consuming.” They kept asking “could someone just make it easier?” In typical “Maker” fashion, you ask, we deliver: H2O Hydrogen Torch. H2O Hydrogen Torch is a new product that enables data scientists and developers to train and deploy state-of-t...

Read moreHow to Create Your Spotify EDA App with H2O Wave

In this article, I will show you how to build a Spotify Exploratory Data Analysis (EDA) app using H2O Wave from scratch. H2O Wave is an open-source Python development framework for interactive AI apps. You do not need to know Flask, HTML, CSS, etc. H2O Wave has ready-to-use user-interface components and charts, including dashboard templa...

Read moreH2O.ai releases new H2O MLOps features that improve the explainability, flexibility and configuration of machine learning workflows.

H2O.ai now provides data scientists and machine learning (ML) engineers even more powerful features that give greater control, governance, and scalability within their machine learning workflow – all available on our H2O AI Cloud. Now, H2O MLOps enables you to: Deploy model explanations in production Explainability is core to understa...

Read moreMission Impossible: Improving Patient Care Through Automated Document Processing

Don’t tell Bob Rogers’ team something can’t be done. When Rogers embarked on an ambitious project to automate the processing of the more than 1.4 million electronically faxed documents received annually by the Center for Digital Health Innovation at the University of California, San Francisco (UCSF CDHI), advisors and vendors initially t...

Read moreAn Introduction to Unsupervised Machine Learning

There are three major branches of machine learning (ML): supervised, unsupervised, and reinforcement. Supervised learning makes up the bulk of the models businesses use, and reinforcement learning is behind front-page-news AI such as AlphaGo. We believe unsupervised learning is the unsung hero of the three, and in this article, we brea...

Read moreRevisiting the Miracle of Istanbul

Introduction: On May 25th, 2005, the UEFA Champions League final between AC Milan and Liverpool was held at the Atatürk Olympic Stadium in Istanbul. The match is still considered one of the greatest finals in football history. AC Milan took a 3-0 lead in the first half but Liverpool made a miraculous comeback in the second half to tie the g...

Read moreInstall H2O Wave on AWS Lightsail or EC2

Note: this blog post was first published on Thomas’ personal blog Neural Market Trends. I recently had to set up H2O’s Wave Server on AWS Lightsail and build a simple Wave App as a Proof of Concept. If you’ve never heard of H2O Wave then you have been missing out on a cool new app development framework. We use it at H2O to build AI-ba...

Read moreWhat Are Feature Stores and Why Are They Important?

Machine learning (ML) models are only as good as the data fed into them. In tabular problems, the data is a collection of rows (samples) and columns (features). So, you could say that tabular ML models are only as good as the features fed into them. But how do you manage features? Can you share them across the company? Can you easily reu...

Read moreA Beginner’s View of H2O MLOps

Note: this is a community blog post by Shamil Dilshan Prematunga. It was first published on Medium. Stepping into the AI application world is not a single easy step. It involves a series of combined tasks. To take an idea to a workable stage, we must fulfill the requirements of each stage. When we look at existing platforms, t...

Read moreShapley Values - A Gentle Introduction

If you can’t explain it to a six-year-old, you don’t understand it yourself. – Albert Einstein One fear caused by machine learning (ML) models is that they are blackboxes that cannot be explained. Some are so complex that no one, not even domain experts, can understand why they make certain decisions. This is of particular concern when s...

Read moreThe Bond Market & AI: How MarketAxess Brings it All Together

The vast majority of the equities market trades electronically while the bond market is still in its infancy by comparison, but MarketAxess is seeking to change that. Recently, we hosted a virtual event with the MarketAxess team where they explained how they were solving challenges in the world’s largest bond marketplace while leveraging ...

Read moreH2O Release 3.36 (Zorn)

There’s a new major release of H2O, and it’s packed with new features and fixes! Among the big new features in this release are Distributed Uplift Random Forest, an algorithm typically used in marketing and medicine to model uplift, and Infogram, a new research direction in machine learning that focuses on interpretability and fairness in...

Read more1st Place Winner's Blog - Kaggle 2021 Data Science and Machine Learning Survey

Kaggle, the largest global community of data scientists, conducted the 5th annual industry-wide survey that presented a truly comprehensive view of the state of data science and machine learning. A total of 25,973 responses were collected from participants from over 60 countries. Kaggle also launched the Data Science Survey Challenge in w...

Read moreWhy Companies Need to Think About MLOps

For years machine learning (ML) researchers have focused on building outstanding models and figuring out how to squeeze every last drop of performance from them. But many have realized that creating top-performing models doesn’t necessarily equate to having them deliver business value. Often the best models can be very complex and costly ...

Read moreAn Introduction to Time Series Modeling: Traditional Time Series Models and Their Limitations

In the first article in this series, we broke down the preprocessing and feature engineering techniques needed to build high-performing time series models. But we didn’t discuss the models themselves. In this article, we will dig into this. As a quick refresher, time series data has time on the x-axis and the value you are measuring (dema...

Read moreAnnouncing the Fully Managed H2O AI Cloud

The H2O AI Cloud is the leading platform to make and access your own AI models and apps. Customers have had access to the H2O AI Hybrid Cloud for the last year, where they could manage the platform themselves on their favorite cloud or on-prem infrastructure. Today, we’re excited to announce a fully managed version of the H2O AI Cloud. Y...

Read moreH2O.ai Tools for a Beginner

Note: this is a community blog post by Shamil Dilshan Prematunga. It was first published on Medium. Hey, this is not a deep technical blog. I’d like to share the experience I had with H2O tools when I was studying Machine Learning. As a Research Engineer, I am currently working on an area based on Telecommunication. Day by day with my e...

Read moreAmazon Redshift Integration for H2O.ai Model Scoring

We consistently work with our partners on innovative ways to use models in production here at H2O.ai, and we are excited to demonstrate our AWS Redshift integration for model scoring. Amazon Redshift is a very popular data warehouse on AWS. We wanted to expand on the existing capacities of using data from Redshift to train a model on the ...

Read moreBuilding Resilient Supply Chains with AI

A global pandemic, a fundamental shift in the demand for goods and services worldwide, and the recent blockage of a major international trade route have all highlighted the need to build and maintain resilient supply chains. At the foundation of resilient supply chains lie accurate and reliable forecasts. The majority of traditional softwa...

Read moreIntroducing the H2O.ai Wildfire Challenge

We are excited to announce our first AI competition for good – the H2O.ai Wildfire Challenge. We’ve structured this challenge to be a global collaborative effort to do good for the world that we share. We want teams to submit their ideas and applications freely, knowing that other teams will learn from what they’ve done to improve their AI ap...

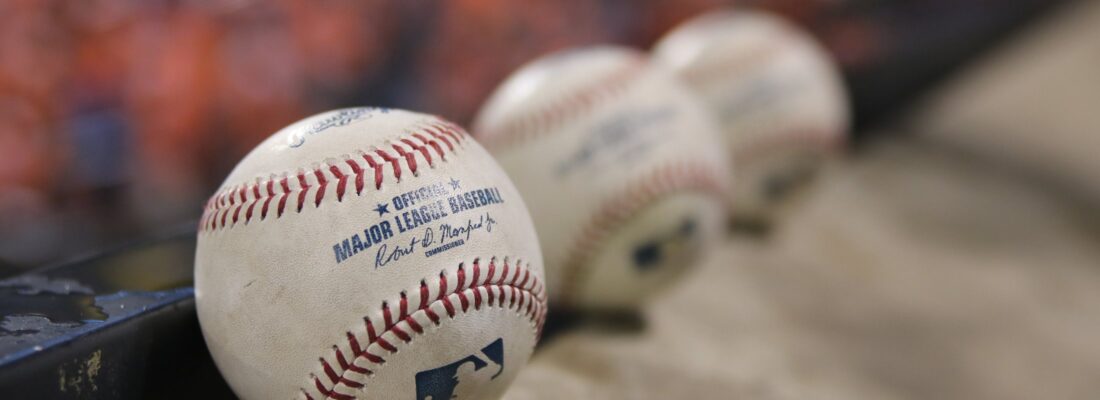

Read moreMLB Player Digital Engagement Forecasting

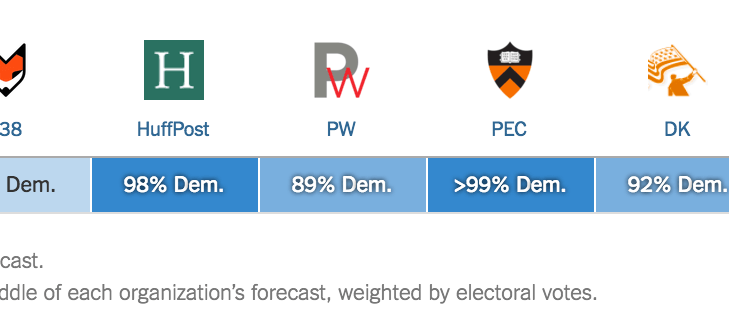

Are you a baseball fan? If so, you may notice that things are heating up right now as the Major League Baseball (MLB) World Series between the Houston Astros and the Atlanta Braves is tied at 1-1. (Image: MLB Postseason 2021 Results as of October 28; source.) This also reminded me of the MLB Player Digital Engagement Forecasting competition in which my coll...

Read moreAnnouncing the H2O AI Feature Store

We’re really excited to announce the H2O AI Feature Store – the only intelligent feature store in the market. We’ve been working on this for many months with our co-development partner: AT&T. This enabled us to build a first-of-its-kind platform that is designed to be enterprise-grade from day 1. It is built with best-of-breed techno...

Read moreAn Introduction to Time Series Modeling: Time Series Preprocessing and Feature Engineering

Time is the only nonrenewable resource – Sri Ambati, Founder and CEO, H2O.ai. Prediction is very difficult, especially if it’s about the future – Niels Bohr, Nobel Prize-Winning Physicist. Despite its inherent difficulty, every business needs to make predictions. You may want to forecast sales or estimate demand or gauge future inventory ...

Read moreNew Features Now Available with the Latest Release of the H2O AI Cloud 21.10

The Makers here at H2O.ai have been busy building new features and enhancing capabilities across our AI platform. Designed to support our core mission of democratizing AI, these additions to our platform simplify the ability to make AI you can trust, operate it efficiently and innovate with ready-made AI applications. Launched in January ...

Read moreTime Series Forecasting Best Practices

Earlier this year, my colleague Vishal Sharma gave a talk about time series forecasting best practices. The talk was well-received so we decided to turn it into a blog post. Below are some of the highlights from his talk. You can also follow the two software demos and try it yourself using our H2O AI Cloud. (Note: The video links with ...

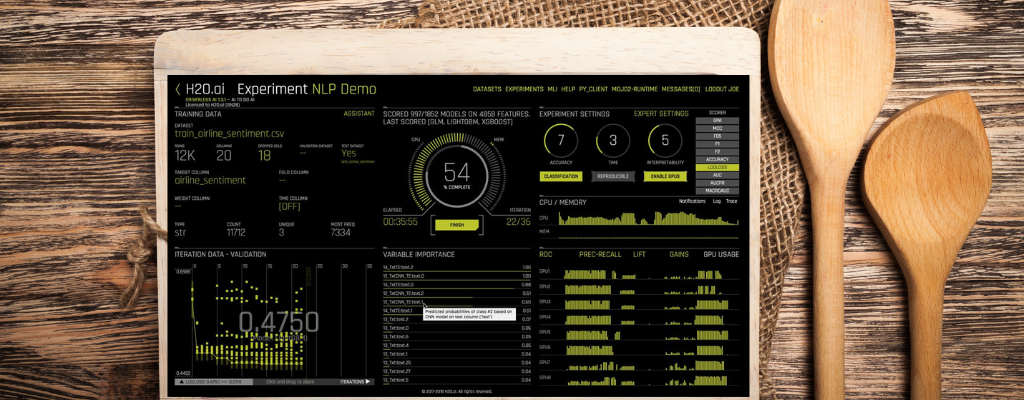

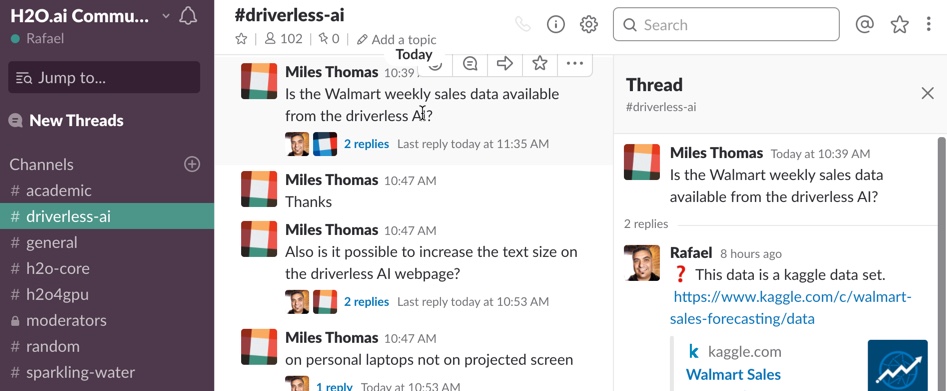

Read moreImproving NLP Model Performance with Context-Aware Feature Extraction

I would like to share with you a simple yet very effective trick to improve feature engineering for text analytics. After reading this article, you will be able to follow the exact steps and try it yourself using our H2O AI Cloud. First of all, let’s have a look at the off-the-shelf natural language processing (NLP) recipes in H2O Driver...

Read moreFeature Transformation with the H2O AI Cloud

It is well known throughout the data science community that data preparation, pre-processing, and feature engineering are among the most cumbersome parts of the data science workload. So as we continue to innovate here at H2O.ai with our end-to-end automated machine learning (autoML) capabilities, we challenged ourselves to evolve the...

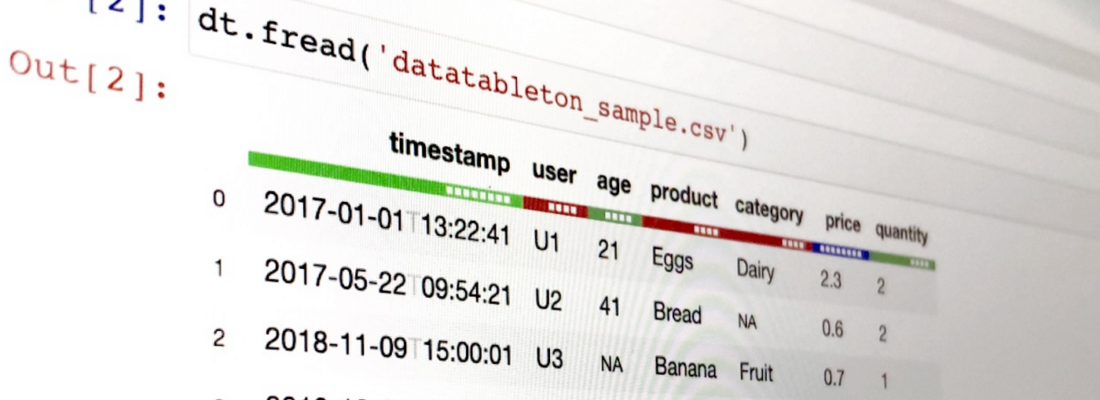

Read moreIntroducing DatatableTon - Python Datatable Tutorials & Exercises

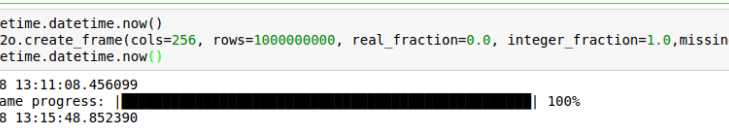

Datatable is a Python library for manipulating tabular data. It supports out-of-memory datasets, multi-threaded data processing and has a flexible API. If this reminds you of R’s data.table, you are spot on because Python’s datatable package is closely related to and inspired by the R library. Version 1.0.0 was released on 1st July,...

Read moreH2O Release 3.34 (Zizler)

There’s a new major release of H2O, and it’s packed with new features and fixes! Among the big new features in this release, we’ve added Extended Isolation Forest for improved results on anomaly detection problems, and we’ve implemented the Type III SS test (ANOVAGLM) and the MAXR method to GLM. For existing algorithms, we improved the pe...

Read moreFrom the game of Go to Kaggle: The story of a Kaggle Grandmaster from Taiwan

In conversation with Kunhao Yeh: A Data Scientist and Kaggle Grandmaster. In this series of interviews, I present the stories of established Data Scientists and Kaggle Grandmasters at H2O.ai, who share their journey, inspirations, and accomplishments. These interviews are intended to motivate and encourage others who want to understand...

Read moreVisualizing Large Datasets with H2O-3

Exploratory data analysis is one of the essential parts of any data processing pipeline. However, when the magnitude of data is high, these visualizations become vague. If we were to plot millions of data points, it would become impossible to discern individual data points from each other. The visualized output in such a case is pleasing ...

Read moreInnovation with the H2O AI Cloud

Consumer expectations for responsiveness, personalization, and overall efficiency have risen dramatically over the past several years as technology has become ubiquitous across both our personal and professional lives. These rapidly growing expectations demand an expansion in focus from simply solving narrow use cases with machine learnin...

Read moreInterning with H2O.ai- Robie Gonzales

This blog post is by Robie Gonzales, who has interned with us for the last 8 months. Thank you for your awesome work, Robie! When I started my internship eight months ago, I had minimal knowledge about machine learning and artificial intelligence. Over the course of these months, my experience as a Full Stack Developer has allowed me to ...

Read moreAI-Driven Predictive Maintenance with H2O AI Cloud

According to a study conducted by the Wall Street Journal, unplanned downtime costs industrial manufacturers an estimated $50 billion annually. Forty-two percent of this unplanned downtime can be attributed to equipment failure alone. These downtimes can cause unnecessary delays and, as a result, affect the business. A better and superior al...

Read moreWhat are we buying today?

Note: this is a guest blog post by Shrinidhi Narasimhan. It’s 2021 and recommendation engines are everywhere. Be it online shopping, food, music, or even online dating, the race to provide personalized recommendations to the user has many contenders. The technology of giving users what they need based on their buying strategies or digit...

Read moreThe Emergence of Automated Machine Learning in Industry

This post was originally published by K-Tech, Centre of Excellence for Data Science and AI, powered by NASSCOM. The link of the post can be found here. The concept of Automated Machine Learning has gained much traction recently. Automated Machine Le...

Read moreWhat does it take to win a Kaggle competition? Let's hear it from the winner himself.

In this series of interviews, I present the stories of established Data Scientists and Kaggle Grandmasters at H2O.ai, who share their journey, inspirations, and accomplishments. These interviews are intended to motivate and encourage others who want to understand what it takes to be a Kaggle Grandmaster. In this interview, I shall be ...

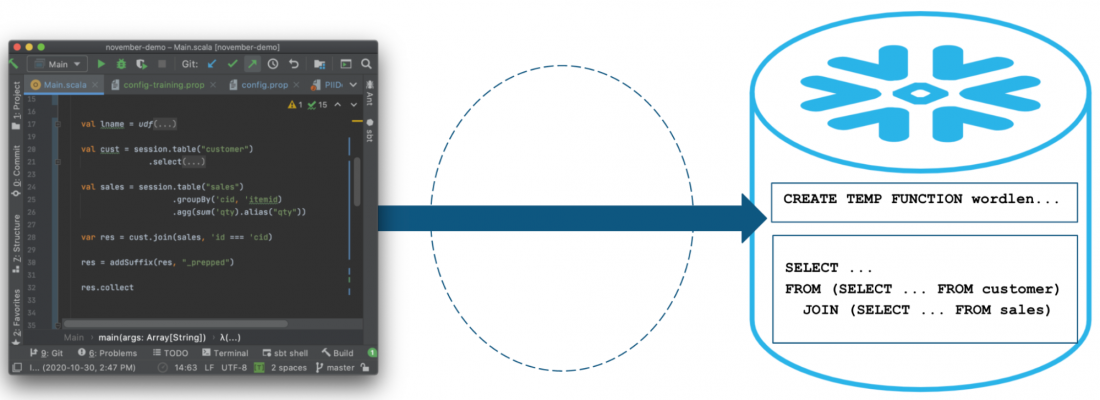

Read moreH2O Integrates with Snowflake Snowpark/Java UDFs: How to better leverage the Snowflake Data Marketplace and deploy In-Database

One of the goals of machine learning is to find unknown predictive features, even hidden from subject matter experts, in datasets that might not be apparent before, and use those 3rd party features to increase the accuracy of the model. A traditional way of doing this was to try and scrape and scour distributed, stagnant data sources on th...

Read moreGetting the best out of H2O.ai’s academic program

“H2O.ai provides impressively scalable implementations of many of the important machine learning tools in a user-friendly environment. Allowing for free academic use sets a generous example for commercial software developers — it is also the way forward in the era of open-source software.” – Professor Trevor J. Hastie, John A. Overdeck ...

Read moreSign Up for Your Free Trial and Explore H2O AI Cloud

We recently launched our 14-day free trial of H2O AI Cloud, giving you the opportunity to get hands-on experience with our newest machine learning platform. H2O AI Cloud is an end-to-end artificial intelligence platform that enables organizations to rapidly build, share, and use ...

Read moreHow Much is My Property Worth?

Note: this is a guest blog post by Jaafar Almusaad. How much is my property worth? This is the million-dollar question – both figuratively and literally. Traditionally, qualified property valuers are tasked to answer this question. It’s a lengthy and costly process, but more critically, it’s inconsistent and largely subjective. Mind you, ...

Read moreSafer Navigation with Artificial Intelligence

Last month, the world watched as responders tried to free a cargo ship that had run aground in the Suez Canal. This incident blocked traffic through a waterway that is essential for commerce. Although the location was unusual, ship collisions, ship collisions with fixed objects, and ...

Read moreWhat it takes to become a World No 1 on Kaggle

In conversation with Guanshuo Xu: A Data Scientist, Kaggle Competitions Grandmaster, and a Ph.D. in Electrical Engineering. In this series of interviews, I present the stories of established Data Scientists and Kaggle Grandmasters at H2O.ai, who share their journey, inspirations, and accomplishments. The intention behind these interviews...

Read moreUnwrap Deep Neural Networks Using H2O Wave and Aletheia for Interpretability and Diagnostics

The use cases and the impact of machine learning can be observed clearly in almost every industry and in applications such as drug discovery and patient data analysis, fraud detection, customer engagement, and workflow optimization. The impact of leveraging AI is clear and understood by the business; however, AI systems are also seen as b...

Read moreShapley summary plots: the latest addition to the H2O.ai’s Explainability arsenal

It is impossible to deploy successful AI models without taking into account or analyzing the risk element involved. Model overfitting, perpetuating historical human bias, and data drift are some of the concerns that need to be taken care of before putting the models into production. At H2O.ai, explainability is an integral part of our ML ...

Read moreH2O.ai Achieves Strong Placement for Completeness of Vision in the Visionaries Quadrant of the 2021 Gartner Magic Quadrant for Data Science and Machine Learning

At H2O.ai, our mission is to democratize AI, and we believe driving value from data is a team effort. Often, data engineers must organize and prepare the data, and then data scientists must build models. Once built, the models must be put into production, and IT and DevOps personnel must ...

Read moreSafer Sailing with AI

In the last week, the world watched as responders tried to free a cargo ship that had gone aground in the Suez Canal. This incident blocked traffic through a waterway that is critical for commerce. While the location was an unusual one, ship collisions, allisions, and groundings are not uncommon. With all the technology that mariners hav...

Read moreH2O AI Cloud: Democratizing AI for Every Person and Every Organization

Harnessing AI’s true potential by enabling every employee, customer, and citizen with sophisticated AI technology and easy-to-use AI applications. Democratization is an essential step in the development of AI, and AutoML technologies lie at the heart of it. AutoML tools have played a pivotal role in transforming the way we consume an...

Read moreH2O.ai Placed Furthest for Ability to Execute in the Visionaries Quadrant of the 2021 Gartner Report for Data Science and Machine Learning

*This article was originally written in English by SVP of Marketing Read Maloney and translated into Portuguese by Bruna Smith. At H2O.ai, our mission is to democratize artificial intelligence, and we believe that the value generated from data is a team effort. Data must be organized and prepared, usually ...

Read moreH2O.ai Placed Furthest in Completeness of Vision in 2021 Gartner Data Science and Machine Learning Magic Quadrant in the Visionaries Quadrant.

At H2O.ai, our mission is to democratize AI, and we believe driving value from data is a team sport. Data needs to be organized and prepared, often by data engineers, and then models need to be built by data scientists. With models built, they need to be put into production and maintained by IT and DevOps personnel. Finally, these models...

Read moreLearning from others is imperative to success on Kaggle says this Turkish GrandMaster

In conversation with Fatih Öztürk: A Data Scientist and a Kaggle Competition Grandmaster. In this series of interviews, I present the stories of established Data Scientists and Kaggle Grandmasters at H2O.ai, who share their journey, inspirations, and accomplishments. These interviews are intended to motivate and encourage others who want...

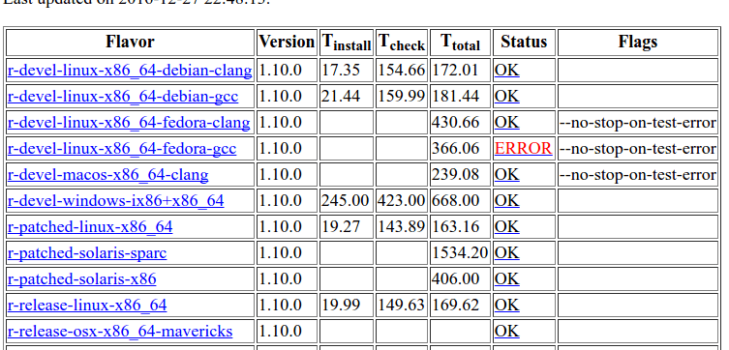

Read moreH2O-3 Improvements from Two University Projects

In September 2019 H2O.ai became a silver partner of the Faculty of Informatics at Czech Technical University in Prague. The main goal of this partnership is to make connections between students and companies to prepare an environment where students can use their knowledge in practice and gain real-work experiences. In general, within th...

Read moreData to Production Ready Models to Business Apps in Just a Few Steps

Building a Credit Scoring Model and Business App using H2O. In the journey of a successful credit scoring implementation, multiple stakeholders and different personas are involved at different steps – Business Inputs, Dataset procurement, Data Analysis, Predictive Machine Learning, Data Storytelling, and Dashboarding. H2O.ai platforms such ...

Read moreUsing Python's datatable library seamlessly on Kaggle

Managing large datasets on Kaggle without fearing the out-of-memory error. Datatable is a Python package for manipulating large dataframes. It has been created to provide big data support and enable high performance. This toolkit resembles pandas very closely but is more focused on speed. It supports out-of-memory datasets, multi-thr...

Successful AI: Which Comes First, the Data or the Question?

Successful AI is a business process. Even the most sophisticated models, the latest algorithms, and highly experienced AI experts cannot make AI a practical success unless it is connected to a meaningful business goal. To make that happen, you need good interaction between those with knowledge of the business and the AI team. But ...

Introducing H2O AI Cloud

Organizations have made large investments in modernizing their data infrastructure and operations, but most still struggle to drive maximum value from their data. Many companies experimented with building large teams of expert data scientists, and while this approach did produce some valuable models, the cost was high and the timeframes ...

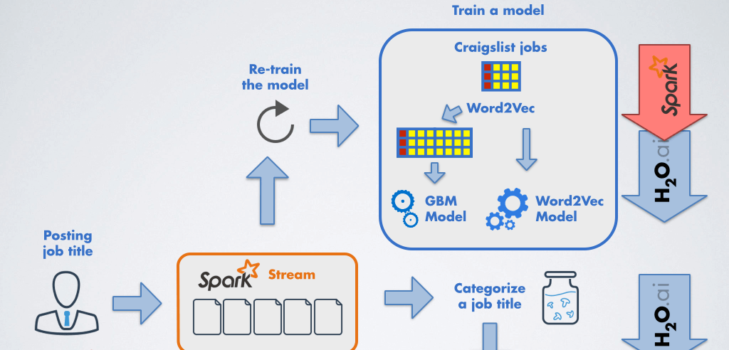

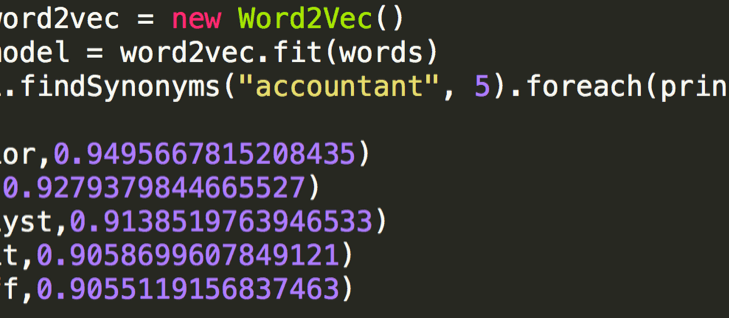

Using AI to unearth the unconscious bias in job descriptions

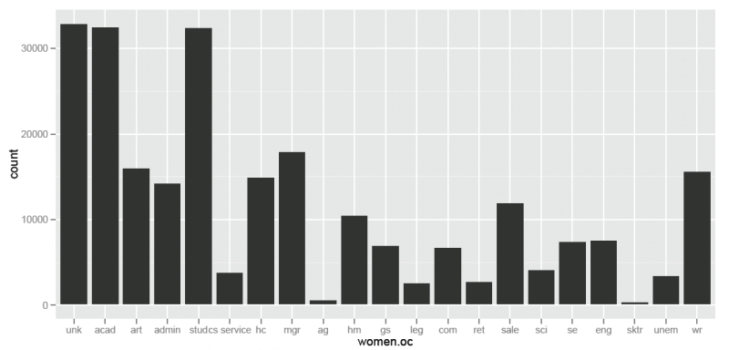

“Diversity is the collective strength of any successful organization.” Unconscious Bias in Job Descriptions: Unconscious bias is a term that affects us all in one way or the other. It is defined as the prejudice or unsupported judgments in favor of or against one thing, person, or group as compared to another, in a way that is usually con...

H2O Driverless AI 1.9.1: Continuing to Push the Boundaries for Responsible AI

At H2O.ai, we have been busy. Not only do we have our most significant new software launch coming up (details here), but we are also thrilled to announce the latest release of our flagship enterprise platform, H2O Driverless AI 1.9.1. With that said, let’s jump into what is new: Faster Python scoring pipelines with embedded MOJOs for r...

Meet the Data Scientist who just cannot stop winning on Kaggle.

In conversation with Philipp Singer: A Data Scientist, Kaggle Double Grandmaster, and a Ph.D. in Computer Science. In this series of interviews, I present the stories of established Data Scientists and Kaggle Grandmasters at H2O.ai, who share their journey, inspirations, and accomplishments. These interviews are intended to motivate an...

Liqui.do Speeds Credit Scoring for Fair Lending with H2O.ai

Liqui.do is a technological and innovative company developing a platform for leasing equipment for small and medium enterprises. As part of its business to provide a variety of credit options for companies that want to finance capital purchases, Liqui.do needs to rapidly and accurately assess the credit risk and scoring of a customer in o...

New Improvements in H2O 3.32.0.2

There is a new minor release of H2O that introduces two useful improvements to our XGBoost integration: interaction constraints and feature interactions. Interaction Constraints: Feature interaction constraints allow users to decide which variables are allowed to interact and which are not. Potential benefits: Better predictive performance...
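
To make the idea of interaction constraints concrete, here is a minimal pure-Python sketch. It does not use the H2O or XGBoost APIs. The function name `path_is_allowed`, the feature names, and the groups are all hypothetical, and the rule is a simplification: a set of features may appear together on one tree path only if a single allowed group contains all of them.

```python
from typing import Iterable, List, Set


def path_is_allowed(path_features: Iterable[str],
                    constraint_groups: List[Set[str]]) -> bool:
    """Return True if every feature used on one tree path fits in a single group.

    Simplifying assumption for this sketch: a path that uses only one
    distinct feature involves no interaction and is always allowed.
    """
    used = set(path_features)
    if len(used) <= 1:
        return True
    # The path is valid only if some group contains all features used on it.
    return any(used <= group for group in constraint_groups)


# Hypothetical constraint groups: features may interact only within a group.
groups = [{"age", "income"}, {"region", "tenure"}]

print(path_is_allowed(["age", "income"], groups))  # both in the first group
print(path_is_allowed(["age", "region"], groups))  # spans two groups
```

The first call reports an allowed path and the second a disallowed one, since `age` and `region` never share a group.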

Introducing H2O Wave

For almost a decade, H2O.ai has worked to build open source and commercial products that are on the leading edge of innovation in machine learning, from AutoML to Explainable AI. We are thrilled to announce the release of what we believe to be the future of AI Applications: H2O Wave. Wave is an open source, lightweight Python developmen...

Grandmaster Series: The inspiring journey of the ‘Beluga’ of Kaggle World 🐋

In conversation with Gábor Fodor: A Data Scientist at H2O.ai and a Kaggle Competitions’ Grandmaster. In this series of interviews, I present the stories of established Data Scientists and Kaggle Grandmasters at H2O.ai, who share their journey, inspirations, and accomplishments. These interviews are intended to motivate and encourage othe...

Automate your Model Documentation using H2O AutoDoc

Create model documentation for supervised learning models in H2O-3 and Scikit-Learn, in minutes. The Federal Reserve’s 2011 guidelines state that without adequate documentation, model risk assessment and management would be ineffective. A similar requirement is put forward today by many regulatory and corporate governance bodies. Thus ...

Myths and Truths about AutoML

All the revolutions we have had to date, both technological and industrial, share one similarity: they are tied to the way human beings deal with machines. In the past, processes were carried out very manually and, over time, they underwent a natural evolution toward automation. With machine learning...

Maximizing your Value from AI

Some organizations have already identified the benefits that can be gained from Artificial Intelligence and Data Science, bringing in talented resources to enable them to build AI models and solutions. But more often than not, the business doesn’t understand the capabilities and huge potential of AI well enough, nor the investments that a...

AI in the Financial Industry: 8 Key Takeaways from the Bill.com + H2O.ai Fireside Chat

The current global pandemic crisis presents various challenges to businesses in all industries, including financial services institutions, who are monitoring and dealing with the effects of COVID-19 across the world. At a time of a pandemic, it is important that teams get together to share their insights and experience, with the goal of i...

The Importance of Explainable AI

This blog post was written by Nick Patience, Co-Founder & Research Director, AI Applications & Platforms at 451 Research, a part of S&P Global Market Intelligence. From its inception in the mid-twentieth century, AI technology has come a long way. What was once purely the topic of science fiction and academic discussion is now...

Building an AI Aware Organization

Responsible AI is paramount when we think about models that impact humans, either directly or indirectly. All the models that are making decisions about people, be that about creditworthiness, insurance claims, HR functions, and even self-driving cars, have a huge impact on humans. We recently hosted James Orton, Parul Pandey, and Sudala...

H2O on Kubernetes using Helm

Deploying real-world applications to Kubernetes using bare YAML files is a rather complex task, and H2O is no exception, as demonstrated in one of the previous blog posts. Greatly simplified, a cluster of H2O open source machine learning nodes is brought up in the following manner: A headless service to make initial node discovery and ...

Making AI a Reality

This blog post focuses on the content discussed in more depth in the free ebook “Practical Advice for Making AI Part of Your Company’s Future”. Do you want to make AI a part of your company? You can’t just mandate AI. But you can lead by example. All too often, especially in companies new to AI and machine learning, team leaders may be ta...

Combining the power of KNIME and H2O.ai in a single integrated workflow

KNIME and H2O.ai, the two data science pioneers known for their open source platforms, have partnered to further democratize AI. Our approaches are about being open, transparent, and pushing the leading edge of AI. We believe strongly that AI is not for the select few but for everyone. We are taking another step in democratizing AI by ...

The Challenges and Benefits of AutoML

Machine Learning and Artificial Intelligence have revolutionized how organizations are utilizing their data. AutoML or Automatic Machine Learning automates and improves the end-to-end data science process. This includes everything from cleaning the data, engineering features, tuning the model, explaining the model, and deploying it into p...

H2O Release 3.32 (Zermelo)

There’s a new major release of H2O, and it’s packed with new features and fixes! Among the big new features in this release, we’ve added RuleFit (an interpretable machine learning algorithm), introduced a new toolbox for model explainability, made Target Encoding work for all classes of problems, and integrated it in our AutoML framewor...

5 Key Elements to Detecting Fraud Quicker With AI

The number of transactions using electronic financial instruments has been increasing by about 23% year over year. The global COVID-19 pandemic has only accelerated that process. Electronic means have become the primary vehicle of how people purchase their goods. With this sudden increase in transactions, fraud detection systems are stres...

Empowering Snowflake Users with AI using SQL

At H2O.ai we work with many enterprise customers, all the way from Fortune 500 giants to small startups. What we heard from all these customers as they embark on their data science and machine learning journey is the need to capture and manage more data cost-effectively, and the ability to share that data across their organization to mak...

3 Ways to Ensure Responsible AI Tools are Effective

Since we began our journey making tools for explainable AI (XAI) in late 2016, we’ve learned many lessons, and often the hard way. Through headlines, we’ve seen others grapple with the difficulties of deploying AI systems too. Whether it’s: a healthcare resource allocation system that likely discriminated against millions of black peop...

Accelerating AI Transformation in Healthcare

The healthcare industry is evolving rapidly with volumes of data and increasing challenges. Early adopters of AI and machine learning in the healthcare space have embraced new data-driven initiatives and are reaping the benefits not only in terms of patient care but also in their own operations. Hospitals, physicians, and laboratories can...

5 Key Considerations for Machine Learning in Fair Lending

This month, we hosted a virtual panel with industry leaders and explainable AI experts from Discover, BLDS, and H2O.ai to discuss the considerations in using machine learning to expand access to credit fairly and transparently and the challenges of governance and regulatory compliance. The event was moderated by Sri Ambati, Founder and CE...

The Benefits of Budget Allocation with AI-driven Marketing Mix Models

Excerpt of the white paper: “The Latest in AI Technologies Reinvent Media and Marketing Analytics @ Allergan”. Authors: Akhil Sood, Associate Director @ Marketing Sciences, Allergan; Dr. Michael Proksch, Senior Director @ H2O.ai; Vijay Raghavan, Associate Vice President @ Marketing Sciences, Allergan. Introduction: The call for accountability in...

My Experience at the World’s Best AI Company

Blog post by Spencer Loggia. When H2O announced that remote work would continue through the summer due to Covid-19, I was a little disappointed. I expected that it would be difficult to connect with others as a new employee, especially as an intern. My internship now comes to an end, and I realize how completely wrong I was. I’ve met and w...

What it is like to intern at H2O.ai

Blog post by Jasmine Parekh. Let’s be honest, 2020 is not going to go down as a glory year in history, unless something absolutely miraculous happens in the next few months. Generations of high schoolers down the line will sit in history class learning about the pandemic that halted the world. In the face of the virus, everyone around the w...

Demystifying Artificial Intelligence and Its Role in Business Success

Artificial Intelligence is a widely used term these days, but does everyone know what it means in practice and how to benefit from this innovative technology? Like every buzzword, AI also generates many myths. Among them is the belief that machine learning will replace the work of data scientists...

NLP Models with BERT

H2O Driverless AI 1.9 has just been released, and I am offering a series of articles on the latest innovative features of this Automated Machine Learning solution, starting with the implementation of BERT for NLP tasks. BERT, or “Bidirectional Encoder Representations from Transformers”, is considered today to be the state...

Exploring the Next Frontier of Automatic Machine Learning with H2O Driverless AI

At H2O.ai, it is our goal to democratize AI by bridging the gap between the State-of-the-Art (SOTA) in machine learning and a user-friendly, enterprise-ready platform. We have been working tirelessly to bring the SOTA from Kaggle competitions to our enterprise platform Driverless AI since its very first release. The growing list of Driver...

In a World Where… AI is an Everyday Part of Business

Imagine a dramatically deep voice-over saying “In a world where…” This phrase from old movie trailers conjures up all sorts of futuristic settings, from an alien “world where the sun burns cold”, a Mad Max “world without gas” to a cyborg “world of the not too distant future”. Often the epic science fiction or futuristic stories also have a...

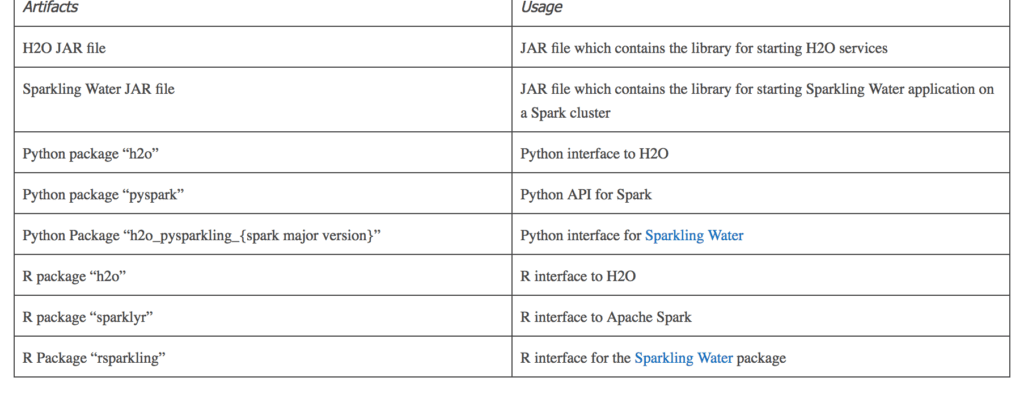

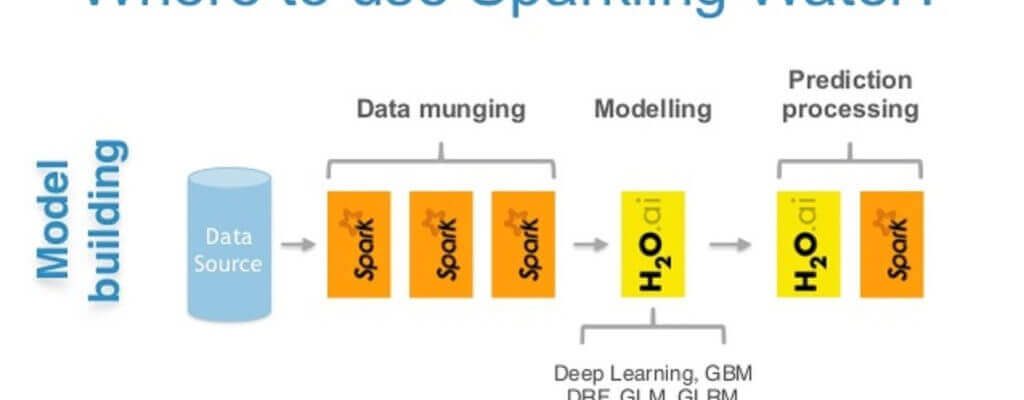

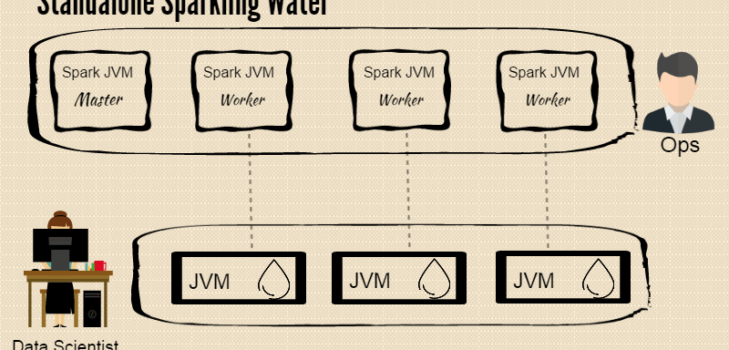

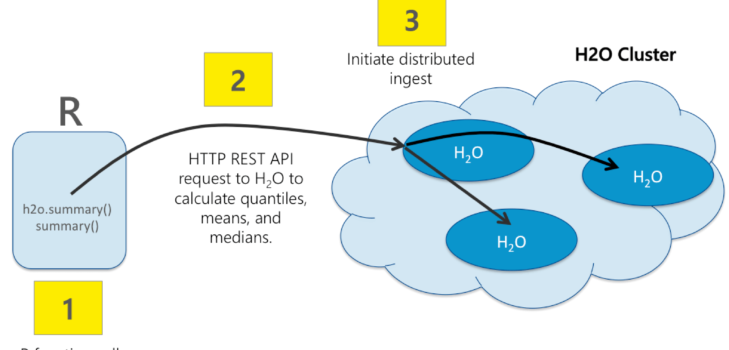

Running Sparkling Water in Kubernetes

Sparkling Water can now be executed inside a Kubernetes cluster. Sparkling Water provides a Beta version of Kubernetes support in the form of nightlies. Both Kubernetes deployment modes, cluster and client, are supported. Both Sparkling Water backends and all clients are also ready to be tested. Sparkling Water in Kubernetes is ...

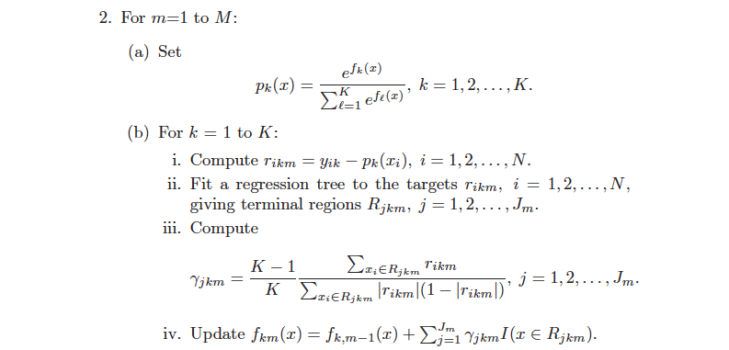

From GLM to GBM – Part 2

How an Economics Nobel Prize could revolutionize insurance and lending. Part 2: The Business Value of a Better Model. Introduction: In Part 1, we proposed improving revenue and managing regulatory requirements with machine learning (ML). We made the first part of the argument by showing how gradient boosting machines (GBM), a type of ML, can mat...

Artificial Intelligence Is Transforming and Boosting Businesses. Understand How and Why

Did you know that artificial intelligence and machine learning are not new concepts? They first emerged in 1956 at Dartmouth College, in the United States, but have been changing and evolving significantly over time. Today, the amount of data a company has available for analysis is enormous, and its growth is...

On-Ramp to AI